Hi, everybody!

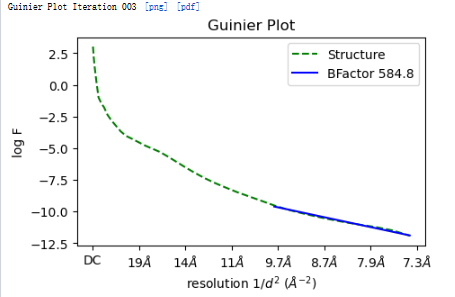

I ran a NU-refine job, but during the process the B-factor kept increasing and the map quality kept decreasing.

Does this mean the map is not suitable?

Thanks!

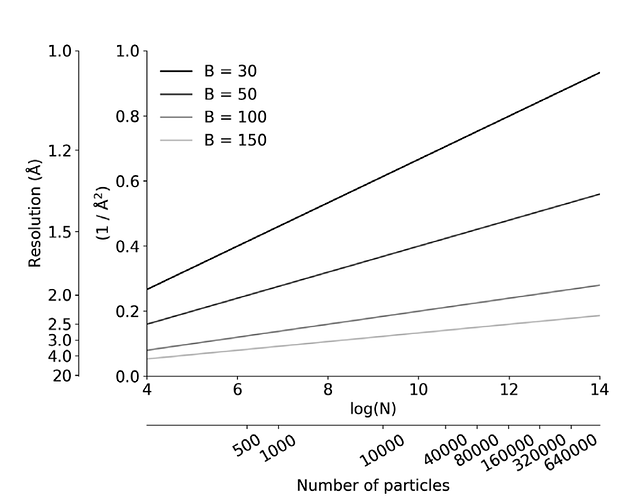

Generally, if your B-factor is over 150 Å², the map is not suitable for building a good model, i.e. it is not of model-buildable quality. It is possible, but unlikely, that collecting more particles from this grid prep will decrease the B-factor; you might benefit from making another sample with optimized ice/orientations.

The reason is that the map resolution (plotted as 1/d², where d is the resolution in Å) scales linearly with the log of the number of particles, and the B-factor sets the slope of that line (see image). The higher the B-factor, the more slowly the resolution improves as you incorporate more particles. At very high B-factors the map barely improves with more data, so grid optimization is probably the best option.
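The relation above can be sketched numerically. This is a minimal sketch, assuming the idealized Rosenthal–Henderson form 1/d² = 1/d_ref² + (2/B)·ln(N/N_ref) with a B-factor that stays constant as particles are added; the function names `predicted_resolution` and `particles_needed` and the 100,000-particle / 7 Å starting point are hypothetical. B is used as a positive magnitude here; software may report the sharpening B-factor with a negative sign.

```python
import math

def predicted_resolution(n_particles, b_factor, n_ref, d_ref):
    """Resolution (Å) expected with n_particles, extrapolated from a reference
    point where n_ref particles gave d_ref Å, along the line
    1/d^2 = 1/d_ref^2 + (2/B) * ln(N / n_ref)."""
    inv_d2 = 1.0 / d_ref**2 + (2.0 / b_factor) * math.log(n_particles / n_ref)
    return 1.0 / math.sqrt(inv_d2)

def particles_needed(d_target, b_factor, n_ref, d_ref):
    """Particles needed to reach d_target Å: the same relation solved for N."""
    return n_ref * math.exp(0.5 * b_factor * (1.0 / d_target**2 - 1.0 / d_ref**2))

# Hypothetical starting point: 100,000 particles giving a 7 Å map.
for b in (100.0, 600.0):
    d_doubled = predicted_resolution(200_000, b, 100_000, 7.0)
    n_for_4a = particles_needed(4.0, b, 100_000, 7.0)
    print(f"B = {b:.0f} Å²: 2x particles -> {d_doubled:.1f} Å; "
          f"~{n_for_4a:,.0f} particles needed for 4 Å")
```

With B = 600 Å² the particle count needed for 4 Å comes out many orders of magnitude larger than with B = 100 Å², which is the quantitative version of the advice to optimize the grids rather than collect more data.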

Hi @shimmer!

What is a B-factor?

The B-factor is, informally, a measure of how the power of the map signal at each frequency differs from what we'd expect, based on X-ray crystallography data. In simpler terms: given your map's nominal resolution, X-ray data tell us the Fourier spectrum ought to follow certain basic trends, and the B-factor describes the deviation between these expectations and your actual map.

The B-factor we use here was originally described in Rosenthal and Henderson, 2003. A perhaps more approachable explanation is available in section 4.7 of Single-Particle Cryo-EM of Biological Macromolecules, eds. Glaeser, Nogales, and Chiu.

Quoting from various parts of that section:

> At any given resolution a three-dimensional reconstruction obtained by single-particle averaging is often much blurrier (its features appear ‘less sharp’) than an ideal map of the macromolecule at that resolution, such as might be generated by a simulation from an atomic model.

> A useful way to quantify signal loss is to derive or measure attenuation envelopes, which describe, as a function of spatial frequency, the scaling factor applied to the signal.

> As it turns out, a number of signal attenuation envelopes (for example, those due to optical image deterioration, or translational and rotational alignment errors) have the form of a Gaussian function of s, the spatial frequency:

> $E(s) = \exp\left(-\tfrac{1}{4} B s^{2}\right)$. This is analogous to the temperature factors used in x-ray crystallography to describe the effect of thermal displacements of atoms from their ideal crystalline position. The notation B, and the common usage of the phrase ‘B factor’ (units of Å²) in cryo-EM, are thus used to describe these envelopes, even when they do not arise from thermal motions.
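To get a feel for the envelope E(s) = exp(−Bs²/4) from the quoted passage, here is a small sketch that evaluates the fraction of signal amplitude left at a given resolution, with s = 1/d in Å⁻¹ and B in Å². The function name `envelope` and the B values chosen are illustrative only.

```python
import math

def envelope(b_factor, resolution):
    """Fraction of signal amplitude left at a given resolution (Å),
    from E(s) = exp(-B s^2 / 4) with s = 1/resolution and B in Å²."""
    s = 1.0 / resolution
    return math.exp(-0.25 * b_factor * s * s)

for b in (50.0, 150.0, 300.0, 600.0):
    print(f"B = {b:3.0f} Å²: {envelope(b, 7.0):.3f} of the amplitude left at 7 Å, "
          f"{envelope(b, 4.0):.4f} at 4 Å")
```

Sharpening multiplies the map's Fourier components by the inverse factor 1/E(s), which boosts noise by the same amount as signal, so a large B-factor means aggressive amplification at high resolution.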

So, the B-factor describes the exponential falloff of signal at higher resolutions. A high B-factor means you’ve lost a lot of high-resolution information, so as @dimkol94 says, you may not be able to build a model into the map.

What does this mean for your map?

In this case, the B-factor captures the signal attenuation from all sources (not just image quality) between the expected curve and your map, up to the GSFSC resolution. It may be that your images are too poor to achieve a high-resolution map, or the map (and therefore the B-factor) may improve with a cleaner particle stack, a different refinement approach, etc. It's hard to say from just a single number!
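One way to see why a single number can't tell you where the loss comes from: if each source of attenuation is modeled as an independent Gaussian envelope (an idealization; real sources such as flexibility need not be Gaussian), their product is again a Gaussian whose B is the sum of the individual B values. The per-source names and B values below are hypothetical.

```python
import math

def envelope(b_factor, s):
    # Gaussian attenuation envelope E(s) = exp(-B s^2 / 4), s in 1/Å, B in Å²
    return math.exp(-0.25 * b_factor * s * s)

# Hypothetical per-source B-factors (Å²), purely illustrative.
sources = {"optics_and_detector": 40.0, "alignment_errors": 80.0, "flexibility": 60.0}

s = 1.0 / 5.0  # spatial frequency at 5 Å
combined = 1.0
for b in sources.values():
    combined *= envelope(b, s)  # envelopes from independent sources multiply

total_b = sum(sources.values())  # ...which is the same as adding their B values
print(f"overall B = {total_b:.0f} Å², combined envelope at 5 Å = {combined:.3f}")
```

Many different combinations of per-source B values give the same total, which is why the overall B-factor alone cannot distinguish poor images from, say, alignment problems.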

I hope that’s helpful!

Okay, thanks for your replies.

For single-particle maps from data collected on a direct detector with motion correction, we usually see B-factors ranging from 50 to 300 Å². A B-factor around 600 Å² would make me look closely at whether something is wrong with the data or processing.

HTH.

I’m surprised no one has commented on the resolution.

B-factor estimation is flaky at best (and outright dangerous at worst) at resolutions worse than about 5 Å.

With a 7.x Å map, I would not trust any B-factor estimate; RELION has the same issue, albeit less severely*. Right now I have a 9 Å map, and both CryoSPARC and RELION are estimating B-factors in the (-)400 to 900 range. When I do block-based reconstruction in RELION and reach 5 Å, the B-factor estimate immediately drops to a much saner (-)160, which I still think is too high.
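One contributing reason for the flakiness (there are others, such as the low-resolution spectrum not following the smooth falloff the fit assumes) is that B is typically estimated from a Guinier plot: a straight line fitted to ln(amplitude) versus s², typically starting around 10 Å and ending at the map's resolution, with slope −B/4. For a 7 Å map that window covers only a handful of Fourier shells. The sketch below is a generic least-squares fit on a simulated, idealized spectrum, not the CryoSPARC or RELION implementation; the box size, pixel size, noise level, and helper names (`fit_b_factor`, `simulated_spectrum`) are all hypothetical.

```python
import math
import random
import statistics

def fit_b_factor(s_values, amplitudes, low_res=10.0, high_res=3.5):
    """Guinier-style fit: least-squares line through ln(amplitude) vs s^2,
    using only shells between low_res and high_res (both in Å).
    For amplitudes that fall off as exp(-B s^2 / 4) the slope is -B/4."""
    pts = [(s * s, math.log(a)) for s, a in zip(s_values, amplitudes)
           if 1.0 / low_res <= s <= 1.0 / high_res]
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    x_mean, y_mean = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in pts)
    return -4.0 * sxy / sxx

# Hypothetical setup: 200-pixel box at 1.0 Å/pixel, so shell k sits at s = k/200 Å^-1.
BOX, PIXEL = 200, 1.0
s_shells = [k / (BOX * PIXEL) for k in range(1, BOX // 2)]
TRUE_B = 150.0

def simulated_spectrum(noise_sd, rng):
    # Idealized radial amplitude profile: pure Gaussian falloff times
    # log-normal noise. Real spectra are not this clean, especially below ~10 Å.
    return [math.exp(-TRUE_B * s * s / 4.0 + rng.gauss(0.0, noise_sd)) for s in s_shells]

rng = random.Random(0)
spectra = [simulated_spectrum(0.1, rng) for _ in range(200)]
narrow = [fit_b_factor(s_shells, amp, high_res=7.0) for amp in spectra]  # 7 Å map
wide = [fit_b_factor(s_shells, amp, high_res=3.5) for amp in spectra]    # 3.5 Å map
print(f"7 Å map:   B = {statistics.mean(narrow):.0f} ± {statistics.stdev(narrow):.0f} Å²")
print(f"3.5 Å map: B = {statistics.mean(wide):.0f} ± {statistics.stdev(wide):.0f} Å²")
```

Even with identical noise on every shell, the spread of the estimate from the short 10 to 7 Å window is much larger than from the 10 to 3.5 Å window, because the slope is pinned down by so few, closely spaced points.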

When checking model “sanity” during refinement, I look at the sharpened map but rarely worry about it too much in CryoSPARC, as it always seems over-sharpened. I'm more concerned that the unsharpened map is in line with what I would expect for the reported resolution.

*The difference in how CryoSPARC and RELION handle B-factors has been discussed in the past.

That’s a fair point, and good that you brought it up. I assumed @shimmer was near the end of their reconstruction, to the point where the NU job was aiming at a model-buildable map. As you mention, though, that may not be the case. I also only ever look at the B-factor when I use the sharpened map to build my final model.