Hi,

In the latest version, heterogeneous refinement sometimes (in fact, frequently) runs anomalously slowly, to the point where the whole system seems to choke up. This happens when running a single heterogeneous refinement job without an excessive box size or an unusually large number of particles.

Has anyone else noticed this? It is slow to the point that 10 sub-iterations are taking 1 hr (!). We have seen this on two systems with different OS and GPU configurations, so it does not appear to be a system-specific issue.

Other job types run fine; it seems to be tied to heterogeneous refinement specifically.

The slowdown seems to be tied to the number of classes: a job with 8 classes is taking 5-10 min per iteration, but only 10 s (!) per iteration if I reduce to 5 classes.
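To put those numbers in perspective, here is a rough sketch comparing the observed slowdown with what one might naively expect if iteration time grew linearly with the number of classes. The linear baseline is only an assumption for illustration, not a statement about how heterogeneous refinement actually scales, and the function name is made up.

```python
def slowdown_vs_linear(classes_fast, secs_fast, classes_slow, secs_slow):
    """Return how many times slower the job is than a naive linear-in-classes estimate.

    Assumption (illustrative only): per-iteration time scales linearly with class count.
    """
    observed_ratio = secs_slow / secs_fast        # measured slowdown
    linear_ratio = classes_slow / classes_fast    # slowdown expected from class count alone
    return observed_ratio / linear_ratio


# Numbers from the post: 10 s/iteration with 5 classes, 5-10 min/iteration with 8 classes.
low = slowdown_vs_linear(5, 10.0, 8, 5 * 60.0)
high = slowdown_vs_linear(5, 10.0, 8, 10 * 60.0)
print(f"Roughly {low:.0f}x to {high:.0f}x slower than a linear estimate")
```

Going from 5 to 8 classes would account for only a 1.6x increase under that assumption, so something other than the extra work per class seems to be involved.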

Cheers

olaf

February 26, 2023, 10:18pm

2

It may not be relevant at all, but when it happens again next time, try clearing page cache on the worker node to see if it might help, e.g.,

echo 3 > /proc/sys/vm/drop_caches

Cheers

@olibclarke There’s a chance this could be related to an issue in CUDA 11.8 that we worked around in v4.2.0 (just released). It may be worth updating to see whether that helps.

It’s unclear why this slowdown would be happening now and not in previous versions, but the dependence on the size of the job (number of classes) suggests that it is related to system RAM. As @olaf suggested, you can try doing

echo 1 > /proc/sys/vm/drop_caches

(NB: 3 drops all caches; 1 drops only the filesystem cache, which should be all that’s needed.) Could you also post the output of htop?
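If it helps to confirm whether dropping caches changed anything, one option is to read the page cache size from /proc/meminfo before and after. The sketch below is only an illustration: the helper names are made up, and it assumes a Linux host where /proc/meminfo reports a "Cached" field in kB.

```python
from pathlib import Path
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field name: integer value}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def page_cache_gib(text: str) -> float:
    """Return the 'Cached' field (reported in kB) converted to GiB."""
    return parse_meminfo(text).get("Cached", 0) / (1024 ** 2)


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # only present on Linux
        print(f"Page cache: {page_cache_gib(meminfo.read_text()):.2f} GiB")
```

Running it once before and once after the echo command should show the cached figure drop if the page cache was actually cleared.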

Hi @apunjani

Thanks! I’ve tried this, but it doesn’t seem to help (and output of htop doesn’t seem to change before/after).

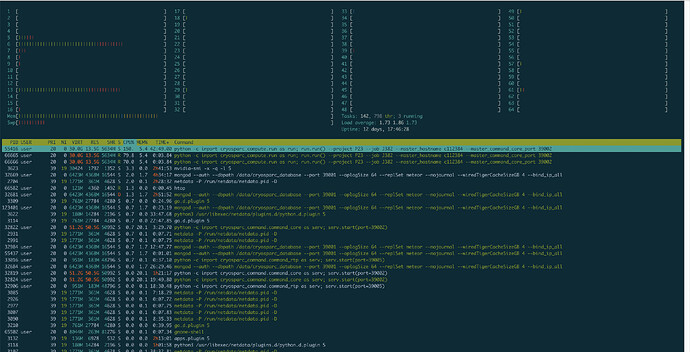

Here is the output of htop:

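We will try to reproduce the performance problems you experience with heterogeneous refinement. What were the box size, particle count and applied symmetry for affected jobs?

For the cryosparc_worker installations for which you have observed the problem (or only one installation if that is shared between the two GPU hosts), please can you post the outputs of

cryosparcw call which nvcc

cryosparcw call nvcc --version

cryosparcw call python -c "import pycuda.driver; print(pycuda.driver.get_version())"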

Please could you also email us the job reports for affected jobs.

We would also be interested in the file produced by cryosparcm snaplogs, as we spotted unexpectedly heavy memory use by the command_core process.

Did you observe this previously/regularly?

Hi @wtempel , for this particular affected job:

box size: 200 (but raw particles are 600px)

But we have seen it in a variety of contexts since upgrading to 4.1.

The cryosparc version is 4.1.3-privatebeta.1 (but we saw the same behavior with earlier 4.1.x releases).

cryosparcw call which nvcc:

cryosparcw call nvcc --version:

cryosparcw call python -c "import pycuda.driver; print(pycuda.driver.get_version())"

Will send job reports & snaplogs via DM. I have seen this heavy memory usage from command_core during previous times that cryosparc is running slowly, yes.

Cheers

olibclarke:

cryosparcw call nvcc --version:

cryosparcw call python -c "import pycuda.driver; print(pycuda.driver.get_version())"

The inconsistency between the CUDA versions is not expected. Question (not suggestion): Did you run cryosparcw install-3dflex for this cryosparc_worker installation?

What are the outputs of

cryosparcw call conda list

cryosparcw call python -c "import torch; print(torch.cuda.is_available())"

?
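For anyone wanting to check the same thing on their own worker, a small sketch along these lines compares the release reported by nvcc with the version tuple that pycuda.driver.get_version() returns. The helper names and the sample strings are hypothetical; the sample nvcc text is not the output from this thread, and the sketch assumes nvcc prints a line containing "release X.Y".

```python
import re
from typing import Optional, Sequence, Tuple


def parse_nvcc_release(nvcc_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from text such as 'release 11.7, V11.7.99'."""
    match = re.search(r"release\s+(\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def cuda_versions_match(nvcc_output: str, pycuda_version: Sequence[int]) -> bool:
    """True if nvcc's release equals the (major, minor) part of the pycuda tuple."""
    release = parse_nvcc_release(nvcc_output)
    return release is not None and release == tuple(pycuda_version[:2])


# Hypothetical inputs, for illustration only.
sample_nvcc = "Cuda compilation tools, release 11.7, V11.7.99"
print(cuda_versions_match(sample_nvcc, (11, 7, 0)))  # versions agree
print(cuda_versions_match(sample_nvcc, (11, 8, 0)))  # versions disagree
```

Note that get_version() reports the CUDA version PyCUDA was compiled against, so a mismatch would point at how the worker environment was built rather than at the driver.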

cryosparcw call conda list:

# packages in environment at /home/user/software/cryosparc/cryosparc2_worker/deps/anaconda/envs/cryosparc_worker_env:

#

# Name Version Build Channel

_libgcc_mutex 0.1 conda_forge conda-forge

_openmp_mutex 4.5 2_gnu conda-forge

absl-py 0.15.0 pyhd8ed1ab_0 conda-forge

aiohttp 3.8.3 py38h0a891b7_1 conda-forge

aiosignal 1.3.1 pyhd8ed1ab_0 conda-forge

aom 3.5.0 h27087fc_0 conda-forge

appdirs 1.4.4 pyh9f0ad1d_0 conda-forge

astor 0.8.1 pyh9f0ad1d_0 conda-forge

astunparse 1.6.3 pyhd8ed1ab_0 conda-forge

async-timeout 4.0.2 pyhd8ed1ab_0 conda-forge

attrs 22.1.0 pyh71513ae_1 conda-forge

backcall 0.2.0 pyh9f0ad1d_0 conda-forge

backports 1.0 pyhd8ed1ab_3 conda-forge

backports.functools_lru_cache 1.6.4 pyhd8ed1ab_0 conda-forge

bcrypt 3.2.2 py38h0a891b7_1 conda-forge

blinker 1.5 pyhd8ed1ab_0 conda-forge

blosc 1.21.3 hafa529b_0 conda-forge

brotli 1.0.9 h166bdaf_8 conda-forge

brotli-bin 1.0.9 h166bdaf_8 conda-forge

brotlipy 0.7.0 py38h0a891b7_1005 conda-forge

brunsli 0.1 h9c3ff4c_0 conda-forge

bzip2 1.0.8 h7f98852_4 conda-forge

c-ares 1.18.1 h7f98852_0 conda-forge

c-blosc2 2.6.1 hf91038e_0 conda-forge

ca-certificates 2022.12.7 ha878542_0 conda-forge

cachetools 5.2.0 pyhd8ed1ab_0 conda-forge

certifi 2022.12.7 py38h06a4308_0

cffi 1.15.1 py38h4a40e3a_2 conda-forge

cfitsio 4.1.0 hd9d235c_0 conda-forge

charls 2.3.4 h9c3ff4c_0 conda-forge

charset-normalizer 2.1.1 pyhd8ed1ab_0 conda-forge

click 7.1.2 pyh9f0ad1d_0 conda-forge

cloudpickle 2.2.0 pyhd8ed1ab_0 conda-forge

cryptography 38.0.4 py38h2b5fc30_0 conda-forge

cuda-cccl 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-command-line-tools 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-compiler 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-cudart 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-cudart-dev 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-cuobjdump 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-cupti 11.7.101 0 nvidia/label/cuda-11.7.1

cuda-cuxxfilt 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-documentation 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-driver-dev 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-gdb 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-libraries 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-libraries-dev 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-memcheck 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nsight 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nsight-compute 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-nvcc 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-nvdisasm 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nvml-dev 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nvprof 11.7.101 0 nvidia/label/cuda-11.7.1

cuda-nvprune 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nvrtc 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-nvrtc-dev 11.7.99 0 nvidia/label/cuda-11.7.1

cuda-nvtx 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-nvvp 11.7.101 0 nvidia/label/cuda-11.7.1

cuda-sanitizer-api 11.7.91 0 nvidia/label/cuda-11.7.1

cuda-toolkit 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-tools 11.7.1 0 nvidia/label/cuda-11.7.1

cuda-visual-tools 11.7.1 0 nvidia/label/cuda-11.7.1

cycler 0.11.0 pyhd8ed1ab_0 conda-forge

cytoolz 0.12.0 py38h0a891b7_1 conda-forge

dask-core 2022.12.0 pyhd8ed1ab_0 conda-forge

dav1d 1.0.0 h166bdaf_1 conda-forge

decorator 4.4.2 py_0 conda-forge

fftw 3.3.10 nompi_hf0379b8_106 conda-forge

flask 1.1.4 pyhd8ed1ab_0 conda-forge

flask-jsonrpc 0.3.1 pypi_0 pypi

flask-pymongo 2.3.0 pypi_0 pypi

flatbuffers 1.12 pypi_0 pypi

fonttools 4.38.0 py38h0a891b7_1 conda-forge

freetype 2.12.1 hca18f0e_1 conda-forge

frozenlist 1.3.3 py38h0a891b7_0 conda-forge

fsspec 2022.11.0 pyhd8ed1ab_0 conda-forge

future 0.18.2 pyhd8ed1ab_6 conda-forge

gast 0.3.3 py_0 conda-forge

gds-tools 1.3.1.18 0 nvidia/label/cuda-11.7.1

giflib 5.2.1 h36c2ea0_2 conda-forge

google-auth 2.15.0 pyh1a96a4e_0 conda-forge

google-auth-oauthlib 0.4.6 pyhd8ed1ab_0 conda-forge

google-pasta 0.2.0 pyh8c360ce_0 conda-forge

grpcio 1.32.0 py38heead2fc_0 conda-forge

h5py 2.10.0 nompi_py38h9915d05_106 conda-forge

hdf5 1.10.6 nompi_h6a2412b_1114 conda-forge

idna 3.4 pyhd8ed1ab_0 conda-forge

imagecodecs 2022.8.8 py38hf09e3b1_5 conda-forge

imageio 2.22.4 pyhfa7a67d_1 conda-forge

importlib-metadata 5.1.0 pyha770c72_0 conda-forge

ipython 7.33.0 py38h578d9bd_0 conda-forge

itsdangerous 1.1.0 py_0 conda-forge

jedi 0.18.2 pyhd8ed1ab_0 conda-forge

jinja2 2.11.3 pyhd8ed1ab_2 conda-forge

joblib 1.2.0 pyhd8ed1ab_0 conda-forge

jpeg 9e h166bdaf_2 conda-forge

jxrlib 1.1 h7f98852_2 conda-forge

keras-preprocessing 1.1.2 pyhd8ed1ab_0 conda-forge

keyutils 1.6.1 h166bdaf_0 conda-forge

kiwisolver 1.4.4 py38h43d8883_1 conda-forge

krb5 1.20.1 hf9c8cef_0 conda-forge

lcms2 2.14 h6ed2654_0 conda-forge

ld_impl_linux-64 2.39 hcc3a1bd_1 conda-forge

lerc 4.0.0 h27087fc_0 conda-forge

libaec 1.0.6 h9c3ff4c_0 conda-forge

libavif 0.10.1 h5cdd6b5_2 conda-forge

libblas 3.9.0 16_linux64_openblas conda-forge

libbrotlicommon 1.0.9 h166bdaf_8 conda-forge

libbrotlidec 1.0.9 h166bdaf_8 conda-forge

libbrotlienc 1.0.9 h166bdaf_8 conda-forge

libcblas 3.9.0 16_linux64_openblas conda-forge

libcublas 11.10.3.66 0 nvidia/label/cuda-11.7.1

libcublas-dev 11.10.3.66 0 nvidia/label/cuda-11.7.1

libcufft 10.7.2.91 0 nvidia/label/cuda-11.7.1

libcufft-dev 10.7.2.91 0 nvidia/label/cuda-11.7.1

libcufile 1.3.1.18 0 nvidia/label/cuda-11.7.1

libcufile-dev 1.3.1.18 0 nvidia/label/cuda-11.7.1

libcurand 10.2.10.91 0 nvidia/label/cuda-11.7.1

libcurand-dev 10.2.10.91 0 nvidia/label/cuda-11.7.1

libcurl 7.86.0 h6312ad2_2 conda-forge

libcusolver 11.4.0.1 0 nvidia/label/cuda-11.7.1

libcusolver-dev 11.4.0.1 0 nvidia/label/cuda-11.7.1

libcusparse 11.7.4.91 0 nvidia/label/cuda-11.7.1

libcusparse-dev 11.7.4.91 0 nvidia/label/cuda-11.7.1

libdeflate 1.14 h166bdaf_0 conda-forge

libedit 3.1.20191231 he28a2e2_2 conda-forge

libev 4.33 h516909a_1 conda-forge

libffi 3.4.2 h7f98852_5 conda-forge

libgcc-ng 12.2.0 h65d4601_19 conda-forge

libgfortran-ng 12.2.0 h69a702a_19 conda-forge

libgfortran5 12.2.0 h337968e_19 conda-forge

libgomp 12.2.0 h65d4601_19 conda-forge

liblapack 3.9.0 16_linux64_openblas conda-forge

libllvm10 10.0.1 he513fc3_3 conda-forge

libnghttp2 1.47.0 hdcd2b5c_1 conda-forge

libnpp 11.7.4.75 0 nvidia/label/cuda-11.7.1

libnpp-dev 11.7.4.75 0 nvidia/label/cuda-11.7.1

libnsl 2.0.0 h7f98852_0 conda-forge

libnvjpeg 11.8.0.2 0 nvidia/label/cuda-11.7.1

libnvjpeg-dev 11.8.0.2 0 nvidia/label/cuda-11.7.1

libopenblas 0.3.21 pthreads_h78a6416_3 conda-forge

libpng 1.6.39 h753d276_0 conda-forge

libprotobuf 3.21.11 h3eb15da_0 conda-forge

libsqlite 3.40.0 h753d276_0 conda-forge

libssh2 1.10.0 haa6b8db_3 conda-forge

libstdcxx-ng 12.2.0 h46fd767_19 conda-forge

libtiff 4.4.0 h55922b4_4 conda-forge

libuuid 2.32.1 h7f98852_1000 conda-forge

libwebp-base 1.2.4 h166bdaf_0 conda-forge

libxcb 1.13 h7f98852_1004 conda-forge

libzlib 1.2.13 h166bdaf_4 conda-forge

libzopfli 1.0.3 h9c3ff4c_0 conda-forge

llvmlite 0.34.0 py38h4f45e52_2 conda-forge

locket 1.0.0 pyhd8ed1ab_0 conda-forge

lz4-c 1.9.3 h9c3ff4c_1 conda-forge

mako 1.2.4 pyhd8ed1ab_0 conda-forge

markdown 3.4.1 pyhd8ed1ab_0 conda-forge

markupsafe 2.0.1 py38h497a2fe_1 conda-forge

matplotlib-base 3.5.3 py38h38b5ce0_2 conda-forge

matplotlib-inline 0.1.6 pyhd8ed1ab_0 conda-forge

multidict 6.0.2 py38h0a891b7_2 conda-forge

munkres 1.1.4 pyh9f0ad1d_0 conda-forge

ncurses 6.3 h27087fc_1 conda-forge

networkx 2.8.8 pyhd8ed1ab_0 conda-forge

nsight-compute 2022.2.1.3 0 nvidia/label/cuda-11.7.1

numba 0.51.2 py38hc5bc63f_0 conda-forge

numpy 1.19.5 py38h8246c76_3 conda-forge

oauthlib 3.2.2 pyhd8ed1ab_0 conda-forge

openjpeg 2.5.0 h7d73246_1 conda-forge

openssl 1.1.1s h0b41bf4_1 conda-forge

opt-einsum 3.3.0 pypi_0 pypi

packaging 22.0 pyhd8ed1ab_0 conda-forge

pandas 1.4.4 py38h47df419_0 conda-forge

parso 0.8.3 pyhd8ed1ab_0 conda-forge

partd 1.3.0 pyhd8ed1ab_0 conda-forge

pbzip2 1.1.13 0 conda-forge

pexpect 4.8.0 pyh1a96a4e_2 conda-forge

pickleshare 0.7.5 py_1003 conda-forge

pillow 9.2.0 py38h9eb91d8_3 conda-forge

pip 22.3.1 pyhd8ed1ab_0 conda-forge

prompt-toolkit 3.0.36 pyha770c72_0 conda-forge

protobuf 4.21.11 py38h8dc9893_0 conda-forge

psutil 5.9.4 py38h0a891b7_0 conda-forge

pthread-stubs 0.4 h36c2ea0_1001 conda-forge

ptyprocess 0.7.0 pyhd3deb0d_0 conda-forge

pyasn1 0.4.8 py_0 conda-forge

pyasn1-modules 0.2.7 py_0 conda-forge

pybind11 2.10.1 py38h43d8883_0 conda-forge

pybind11-global 2.10.1 py38h43d8883_0 conda-forge

pycparser 2.21 pyhd8ed1ab_0 conda-forge

pycrypto 2.6.1 py38h497a2fe_1006 conda-forge

pycuda 2020.1 pypi_0 pypi

pyfftw 0.12.0 py38h9e8fb0f_3 conda-forge

pygments 2.13.0 pyhd8ed1ab_0 conda-forge

pyjwt 2.6.0 pyhd8ed1ab_0 conda-forge

pylibtiff 0.4.2 py38hd5759d1_7 conda-forge

pymongo 3.13.0 py38hfa26641_0 conda-forge

pyopenssl 22.1.0 pyhd8ed1ab_0 conda-forge

pyparsing 3.0.9 pyhd8ed1ab_0 conda-forge

pysocks 1.7.1 pyha2e5f31_6 conda-forge

python 3.8.15 h257c98d_0_cpython conda-forge

python-dateutil 2.8.2 pyhd8ed1ab_0 conda-forge

python-slugify 5.0.2 pyhd8ed1ab_0 conda-forge

python-snappy 0.6.1 py38h1ddbb56_0 conda-forge

python_abi 3.8 3_cp38 conda-forge

pytools 2020.4.4 pyhd3deb0d_0 conda-forge

pytz 2022.6 pyhd8ed1ab_0 conda-forge

pyu2f 0.1.5 pyhd8ed1ab_0 conda-forge

pywavelets 1.3.0 py38h71d37f0_1 conda-forge

pyyaml 6.0 py38h0a891b7_5 conda-forge

readline 8.1.2 h0f457ee_0 conda-forge

requests 2.28.1 pyhd8ed1ab_1 conda-forge

requests-oauthlib 1.3.1 pyhd8ed1ab_0 conda-forge

requests-toolbelt 0.10.1 pyhd8ed1ab_0 conda-forge

rsa 4.9 pyhd8ed1ab_0 conda-forge

scikit-image 0.17.2 py38h51da96c_4 conda-forge

scikit-learn 0.23.2 py38h5d63f67_3 conda-forge

scipy 1.9.1 py38hea3f02b_0 conda-forge

semver 2.13.0 pyh9f0ad1d_0 conda-forge

setuptools 65.5.1 pyhd8ed1ab_0 conda-forge

six 1.15.0 pyh9f0ad1d_0 conda-forge

sleef 3.5.1 h9b69904_2 conda-forge

snappy 1.1.9 hbd366e4_2 conda-forge

tabulate 0.9.0 pyhd8ed1ab_1 conda-forge

tensorboard 2.8.0 pyhd8ed1ab_1 conda-forge

tensorboard-data-server 0.6.1 py38h2b5fc30_4 conda-forge

tensorboard-plugin-wit 1.8.1 pyhd8ed1ab_0 conda-forge

tensorflow 2.4.4 pypi_0 pypi

tensorflow-estimator 2.4.0 pyh9656e83_0 conda-forge

termcolor 1.1.0 pyhd8ed1ab_3 conda-forge

text-unidecode 1.3 py_0 conda-forge

threadpoolctl 3.1.0 pyh8a188c0_0 conda-forge

tifffile 2022.10.10 pyhd8ed1ab_0 conda-forge

tk 8.6.12 h27826a3_0 conda-forge

toolz 0.12.0 pyhd8ed1ab_0 conda-forge

torch 1.13.1 pypi_0 pypi

traitlets 5.7.1 pyhd8ed1ab_0 conda-forge

typing-extensions 3.7.4.3 0 conda-forge

typing_extensions 3.7.4.3 py_0 conda-forge

unicodedata2 15.0.0 py38h0a891b7_0 conda-forge

unidecode 1.3.6 pyhd8ed1ab_0 conda-forge

urllib3 1.26.13 pyhd8ed1ab_0 conda-forge

wcwidth 0.2.5 pyh9f0ad1d_2 conda-forge

werkzeug 1.0.1 pyh9f0ad1d_0 conda-forge

wheel 0.38.4 pyhd8ed1ab_0 conda-forge

wrapt 1.12.1 py38h497a2fe_3 conda-forge

xorg-libxau 1.0.9 h7f98852_0 conda-forge

xorg-libxdmcp 1.1.3 h7f98852_0 conda-forge

xz 5.2.6 h166bdaf_0 conda-forge

yaml 0.2.5 h7f98852_2 conda-forge

yarl 1.8.1 py38h0a891b7_0 conda-forge

zfp 1.0.0 h27087fc_3 conda-forge

zipp 3.11.0 pyhd8ed1ab_0 conda-forge

zlib 1.2.13 h166bdaf_4 conda-forge

zlib-ng 2.0.6 h166bdaf_0 conda-forge

zstd 1.5.2 h6239696_4 conda-forge

And the output of this one is “True”

re install-3dflex I believe so but not sure… @kookjookeem ?

Hi,

Yes, 3dflex dependencies were installed via cryosparcw install-3dflex.

Best,

Hi @wtempel ,

Any luck reproducing this issue? It is still troubling us, and it seems specific to heterogeneous refinement.

kreddy

March 31, 2023, 7:43pm

13

Hi all,

Just wanted to chime in: we are experiencing the exact same problem on our workstation (Exxact) with 750k particles and a 64px box size. 8 classes dramatically slows down the entire system, but 4 works great. I also experienced a similar issue with 2D classification - a major slowdown with 300 classes, but perfectly fine with 100. We’re currently on cryoSPARC 4.2.0, but since we’ve only just started to use the workstation I can’t say whether this issue was present in cryoSPARC 3.

We have not installed 3d-flex dependencies, and we’re on CUDA 11.7. Happy to provide more info and home in on this issue.

Thanks,

Krishna

Hi @kookjookeem , @kreddy , @olibclarke ,

We’re still not able to reproduce these slowdowns on our systems, so we’ve created a modified version of the job that outputs low-level timings to the Event Log. Looking at these timings will help us determine which part of the job is causing the slowdown, whether it’s I/O, GPU, or CPU code.

Hi @stephan ,

Here are the first timings for a job with 128px particles and otherwise default params, on a 2080Ti card (just as a baseline):

ITER 37 DEV 1 THR 0 NUM 2500 TOTAL 8.4547293 ELAPSED 8.5376183 --

set_models : 0.000013828

set_data_preparation : 0.003960609

load_image_data_allocate : 0.039718390

load_image_data_cpu_arrange : 0.310912848

load_image_data_download : 0.027438641

load_image_data_real_prepare : 0.002835035

load_image_data_transform : 0.009402514

setup_current_data_and_ctf_xys : 0.007085085

setup_current_data_and_ctf_datactf : 0.101437330

debug : 0.000048399

setup_current_noise : 0.003782511

compute_image_power : 0.002274752

setup_current_poses_and_shifts_poses : 1.881572247

setup_current_poses_and_shifts_shifts : 0.010146856

setup_current_poses_and_shifts_cpu : 0.239060640

compute_resid_pow : 2.143128157

compute_error : 0.000139475

cull_candidates : 3.028272867

subdivide : 0.222292662

find_and_set_best_pose_shift_minargmin : 0.003963470

find_and_set_best_pose_shift_findbest : 0.002699614

find_and_set_best_pose_shift_set : 0.307797432

normalize_and_set_posterior_thresh : 0.035582304

backproject : 0.071163654

[CPU: 3.12 GB Avail: 365.60 GB]

Processed 2500.000 images with 5 models in 9.849s.

[CPU: 3.12 GB Avail: 365.60 GB]

-- Effective number of classes per image: min 1.00 | 25-pct 1.05 | median 1.45 | 75-pct 2.08 | max 4.47

[CPU: 3.12 GB Avail: 365.60 GB]

-- Class 0: 20.44%

[CPU: 3.12 GB Avail: 365.60 GB]

-- Class 1: 23.70%

[CPU: 3.12 GB Avail: 365.60 GB]

-- Class 2: 47.81%

[CPU: 3.12 GB Avail: 365.60 GB]

-- Class 3: 6.01%

[CPU: 3.12 GB Avail: 365.60 GB]

-- Class 4: 2.05%

[CPU: 3.00 GB Avail: 365.69 GB]

Learning rate 0.060

[CPU: 2.90 GB Avail: 363.74 GB]

Done iteration 37 in 22.254s. Total time so far 888.578s

[CPU: 2.90 GB Avail: 363.74 GB]

ITER 37 TIME 22.257610 --

iter_setup : 0.012524843

iter_noise_estimate : 0.002909422

iter_batch_and_prep_structures : 0.017100573

engine_setup : 0.090946436

engine_transfer_models : 0.041432619

engine_allocate_accumulators : 0.002585888

engine_work : 17.850639343

engine_acumulate_and_upload_from_devs : 0.134565830

engine_free_gpu_mem : 0.007005692

engine_gc_collect : 0.000002623

engine_postprocess_backprojected_results : 0.349249601

iter_gc_collect : 1.160237789

iter_update_noise_model : 0.001674175

iter_accumulate_average_halfmaps : 0.410770416

iter_get_learning_rate : 0.003729582

iter_compute_fscs : 0.000006199

iter_solve_structures : 2.089152336

iter_check_convergence : 0.000003576

iter_plot : 0.000001192
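In case it is useful for comparing against a slow run later, here is a minimal sketch that pulls the "name : seconds" lines out of pasted Event Log text and ranks the stages by time. The function names are made up, and the line format is assumed from the output above rather than from any documented log specification.

```python
import re
from typing import Dict, List, Tuple

# Matches lines such as "cull_candidates : 3.028272867"; other log lines are skipped.
TIMING_LINE = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\s*:\s*(\d*\.?\d+)\s*$")


def parse_timings(log_text: str) -> Dict[str, float]:
    """Collect {stage name: seconds} from pasted timing output."""
    timings = {}
    for line in log_text.splitlines():
        match = TIMING_LINE.match(line)
        if match:
            timings[match.group(1)] = float(match.group(2))
    return timings


def top_stages(timings: Dict[str, float], n: int = 3) -> List[Tuple[str, float]]:
    """Return the n slowest stages, slowest first."""
    return sorted(timings.items(), key=lambda item: item[1], reverse=True)[:n]


# A few lines copied from the baseline output above.
baseline = """ITER 37 DEV 1 THR 0 NUM 2500 TOTAL 8.4547293 ELAPSED 8.5376183 --
setup_current_poses_and_shifts_poses : 1.881572247
compute_resid_pow : 2.143128157
cull_candidates : 3.028272867
[CPU: 3.12 GB Avail: 365.60 GB]
-- Class 0: 20.44%
"""
for stage, seconds in top_stages(parse_timings(baseline)):
    print(f"{stage:40s} {seconds:.3f} s")
```

Running the same ranking on a slow job and diffing the two lists should make it easier to see which stage grows disproportionately.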

kwang

April 12, 2023, 8:06pm

16

Hi @olibclarke, thanks for the baseline timings! When you get the chance, can you also send timings for a job with the slowdown (same GPU, input data, and params except for number of classes)?

Will do next time we see it, thanks!

jennk

February 15, 2024, 5:48pm

18

Hi all,

Did you ever find a resolution to this problem? We recently installed cryoSPARC v4.4, and heterogeneous refinement is running very slowly (>24 hrs for ~900,000 particles and 5 classes). Ab initio reconstruction seems abnormally slow as well. Not sure if it’s the same issue that you all ran into, but if you have any suggestions, I’d really appreciate it!

Thanks,

wtempel

February 15, 2024, 6:26pm

19

Welcome to the forum @jennk .

Do you happen to have a pair of jobs that ran with the same inputs, the same parameters and on the same host, with one job completing more rapidly on an older version and the other more slowly on v4.4?

jennk

February 15, 2024, 6:40pm

20

No, I haven’t processed the exact same data on an older version, as this is a brand new dataset, but things were much faster using an analogous dataset with similar numbers of particles and classes on v4.2. For example, using ~800,000 particles and 5 classes, heterogeneous refinement took ~6 hours.
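As a rough way to compare the two runs, the figures quoted in this thread can be turned into throughput. This is only back-of-the-envelope arithmetic: it assumes the v4.2 job used about 800,000 particles, treats ">24 hrs" as exactly 24 hours (so the ratio is a lower bound), and ignores any differences in box size or other parameters.

```python
def particles_per_second(n_particles: int, hours: float) -> float:
    """Average throughput over the whole job."""
    return n_particles / (hours * 3600)


v42 = particles_per_second(800_000, 6)    # ~800,000 particles in ~6 hours on v4.2
v44 = particles_per_second(900_000, 24)   # ~900,000 particles in >24 hours on v4.4

print(f"v4.2: {v42:.1f} particles/s, v4.4: {v44:.1f} particles/s, ratio {v42 / v44:.1f}x")
```

With these inputs the sketch prints about 37.0 versus 10.4 particles/s, i.e. the v4.4 job is at least about 3.6x slower per particle.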