I just cloned a job from the older dataset to run in v4.4 to see if there’s a difference. Will update here once I see how that goes.

I ended up cloning two different jobs from an older dataset to test whether the number of particles/classes makes a difference. Neither has finished running yet, but it’s clear both are significantly slower than when I ran identical jobs in v4.2.

For one job, there are ~750,000 particles and 5 classes. In v4.2, this took ~6 hrs total with 162 iterations. In v4.4, the same job has been running for over 5 hrs and is only at iteration 24. Looking at the time per iteration, v4.2 took ~90 sec per iteration and stayed pretty constant until the last few iterations, which took up to ~4000 sec. In v4.4, each iteration is taking much longer overall, and there is also a lot more variability between iterations, ranging from ~250-1000 sec.

In the other job, there are ~150,000 particles and 3 classes. In v4.2, this took ~1.5 hrs total with 82 iterations. In v4.4, it’s currently at iteration 30 after ~3.5 hrs. Similar to the other job, in v4.2 the time per iteration was very consistent at ~50 sec except for the last few, which took up to ~750 sec. v4.4 has shown more variability so far, ranging from ~200-600 sec.
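As a rough cross-check of the numbers above, the average time per iteration can be worked out from the total run time and iteration count. This is only a sketch: the function name is made up for illustration, the v4.4 figures are from jobs still in progress (so "over 5 hrs" is treated as exactly 5), and the v4.2 averages include the slow final iterations, which is why they come out higher than the typical ~90 sec and ~50 sec.

```python
def mean_seconds_per_iteration(elapsed_hours, iterations):
    """Average wall-clock seconds per iteration (hypothetical helper)."""
    return elapsed_hours * 3600 / iterations


# (elapsed hours, iterations completed), taken from the posts above.
runs = {
    "~750k particles, 5 classes, v4.2": (6.0, 162),
    "~750k particles, 5 classes, v4.4 (in progress)": (5.0, 24),
    "~150k particles, 3 classes, v4.2": (1.5, 82),
    "~150k particles, 3 classes, v4.4 (in progress)": (3.5, 30),
}

for label, (hours, iters) in runs.items():
    avg = mean_seconds_per_iteration(hours, iters)
    print(f"{label}: {avg:.0f} s/iteration on average")
```

On these figures the v4.4 averages are roughly five to six times the v4.2 averages for both jobs, which matches the impression from the per-iteration timings.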

In order to upgrade to v4.4, we launched a new HPC cluster with newer versions of CUDA and Nvidia drivers. That could potentially be related, but I’m not sure how to diagnose what the problem is. I’ve only noticed this slowdown in heterogeneous refinement and ab initio reconstruction so far (though I haven’t yet tested cloning an old ab initio run to do a direct comparison). Other jobs like 2D classification and particle extraction seem to be running at similar speeds to before.
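One possible starting point for diagnosis, offered as a sketch rather than an official procedure, is to record what each node reports for driver version, SM clocks, temperature and utilization while a slow job is running, and compare that with a node that behaves normally. The script below assumes `nvidia-smi` is on the `PATH`; the helper names are made up for illustration, and the choice of fields is an assumption about what might be informative here.

```python
import csv
import io
import subprocess

# Standard nvidia-smi --query-gpu field names.
FIELDS = [
    "name",
    "driver_version",
    "clocks.sm",
    "clocks.max.sm",
    "temperature.gpu",
    "utilization.gpu",
]


def parse_gpu_csv(text):
    """Turn `--format=csv,noheader` output into one dict per GPU."""
    rows = csv.reader(io.StringIO(text), skipinitialspace=True)
    return [dict(zip(FIELDS, (value.strip() for value in row))) for row in rows if row]


def query_gpus():
    """Run nvidia-smi and return the parsed per-GPU readings."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS), "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            print(gpu)
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"Could not query nvidia-smi: {exc}")
```

If the SM clock sits well below its maximum while utilization is high, that would point toward the GPU or driver configuration rather than CryoSPARC itself, but that is only one of several possibilities.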

@jennk Thanks for trying this. Please can you email us the job error reports for the two pairs of jobs. I will let you know a suitable email address via direct message.

Does the new cluster use the same storage devices for

- project directories

- particle cache

as the old cluster?

Just sent the reports! And yes, we’re still using the same storage devices. We don’t use an SSD for particle caching.

Also, after testing some more things, it seems this slowdown is affecting any job that uses GPUs, including NU refinement and 2D classification, but only when I use a particular type of GPU (AWS g5.4xlarge). When I ran the jobs mentioned above in v4.2, I had been using g5.4xlarge GPUs too, except with older CUDA and Nvidia drivers. If I use more powerful GPUs (g5.12xlarge), the same jobs finish twice as fast in v4.4 as when I had run them in v4.2. It makes sense to me that g5.12xlarge would be faster, and I can use those GPUs from now on for everything, but it seems strange that the g5.4xlarge GPUs are so much slower now after the upgrade.

Our new infrastructure also experiences this. RockyLinux 9, RTX 4090, NVIDIA Driver: 555.42.06

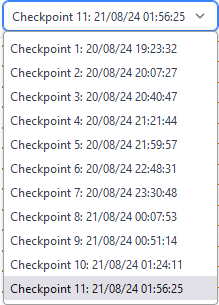

As you can see, it worked, but then it just “stops” producing new checkpoints:

After doing

echo 1 > /proc/sys/vm/drop_caches

which took quite a while to finish, it seems to work again. At least the GUI is updating the line:

DEV 0 THR 0 NUM 1506500 TOTAL 87427111. ELAPSED 27399.763 --
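Since dropping the page cache appeared to unblock the job, it may be worth recording how much memory the kernel is holding in cache and how much dirty data is waiting to be written the next time the job stalls. The following is a minimal sketch that reads `/proc/meminfo` on Linux; the helper names are made up for illustration, and whether these counters explain the stall is an assumption, not something confirmed in this thread.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo content into {field: integer value}.

    Most fields are reported in kB; a few (e.g. HugePages_Total) are counts.
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            info[key.strip()] = int(parts[0])
    return info


def cache_summary(info):
    """Return selected fields converted from kB to GiB."""
    keys = ("MemTotal", "MemAvailable", "Cached", "Dirty", "Writeback")
    return {key: info[key] / 1024**2 for key in keys if key in info}


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as handle:
            summary = cache_summary(parse_meminfo(handle.read()))
        for key, gib in summary.items():
            print(f"{key:>12}: {gib:8.2f} GiB")
    except FileNotFoundError:
        print("/proc/meminfo not found (is this a Linux system?)")
```

Running this before and after the `drop_caches` step would show how much cache was actually released, which could be useful to include in a report.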

@biocit What version of CryoSPARC do you use? Was Cache particle images on SSD enabled for this job?

Version is v4.5.3+240807

Yes, this option was active.