@wtempel, the previous problem is completely fixed (database, interface access and license validation).

Here are the worker environment details:

$ eval $(/home/victor/cryosparc/cryosparc_worker/bin/cryosparcw env)

$ env | grep PATH

LIBRARY_PATH=

LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64:/home/victor/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/relion-3/lib:/usr/local/cuda/lib:/usr/local/cuda/lib64:/usr/local/cuda-9.2/lib:/usr/local/cuda-9.2/lib64:/usr/local/cuda-9.1/lib:/usr/local/cuda-9.1/lib64:/usr/local/cuda-8.0/lib:/usr/local/cuda-8.0/lib64:/usr/local/cuda-7.5/lib:/usr/local/cuda-7.5/lib64:/usr/local/IMOD/lib

CPATH=

PATH=/usr/local/cuda-10.0/bin:/home/victor/cryosparc/cryosparc_worker/bin:/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/bin:/home/victor/cryosparc/cryosparc_worker/deps/anaconda/condabin:/home/victor/cryosparc/cryosparc_master/bin:/usr/local/EMAN_2.21/condabin:/usr/local/relion-3/bin:/usr/local/mpich-3.2.1/bin:/usr/local/cuda/bin:/usr/local/IMOD/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/var/lib/snapd/snap/bin:/usr/local/motioncorr_v2.1/bin:/usr/local/Gctf_v1.06/bin:/usr/local/Gctf_v0.50/bin:/usr/local/ResMap:/usr/local/summovie_1.0.2/bin:/usr/local/unblur_1.0.2/bin:/usr/local/EMAN_2.21/bin:/home/victor/.local/bin:/home/victor/bin

CRYOSPARC_PATH=/home/victor/cryosparc/cryosparc_worker/bin

PYTHONPATH=/home/victor/cryosparc/cryosparc_worker

CRYOSPARC_CUDA_PATH=/usr/local/cuda-10.0

$ which nvcc

/usr/local/cuda-10.0/bin/nvcc

$ python -c "import pycuda.driver; print(pycuda.driver.get_version())"

(10, 0, 0)

$ uname -a

Linux c105627 3.10.0-1160.24.1.el7.x86_64 #1 SMP Thu Apr 8 19:51:47 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

$ free -g

total used free shared buff/cache available

Mem: 376 12 1 0 362 362

Swap: 9 0 9

$ nvidia-smi

Wed May 3 10:36:41 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 465.19.01 Driver Version: 465.19.01 CUDA Version: 11.3 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... On | 00000000:1A:00.0 Off | N/A |

| 29% 26C P8 8W / 250W | 45MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 NVIDIA GeForce ... On | 00000000:1B:00.0 Off | N/A |

| 30% 27C P8 10W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 NVIDIA GeForce ... On | 00000000:60:00.0 Off | N/A |

| 30% 27C P8 1W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 3 NVIDIA GeForce ... On | 00000000:61:00.0 Off | N/A |

| 29% 28C P8 16W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 4 NVIDIA GeForce ... On | 00000000:B1:00.0 Off | N/A |

| 29% 27C P8 7W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 5 NVIDIA GeForce ... On | 00000000:B2:00.0 Off | N/A |

| 29% 26C P8 1W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 6 NVIDIA GeForce ... On | 00000000:DA:00.0 Off | N/A |

| 28% 25C P8 7W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 7 NVIDIA GeForce ... On | 00000000:DB:00.0 Off | N/A |

| 29% 26C P8 1W / 250W | 1MiB / 11019MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 3345 G /usr/bin/X 27MiB |

| 0 N/A N/A 4012 G /usr/bin/gnome-shell 15MiB |

+-----------------------------------------------------------------------------+

Logs:

$ cryosparcm log command_core

2023-05-02 18:54:19,243 COMMAND.DATA dump_project INFO | Exporting project P6

2023-05-02 18:54:19,244 COMMAND.DATA dump_project INFO | Exported project P6 to /data1/VICTOR_RAW_DATA/P6/project.json in 0.00s

2023-05-02 18:54:19,943 COMMAND.DATA dump_project INFO | Exporting project P7

2023-05-02 18:54:19,945 COMMAND.DATA dump_project INFO | Exported project P7 to /data2/CRYOSPARC-DATA/P7/project.json in 0.00s

2023-05-02 18:54:22,520 COMMAND.DATA dump_project INFO | Exporting project P8

2023-05-02 18:54:22,522 COMMAND.DATA dump_project INFO | Exported project P8 to /data1/VICTOR_RAW_DATA/220518_PG86_R596_Xsslink/cryosparc_project/P8/project.json in 0.00s

2023-05-02 18:54:23,265 COMMAND.DATA dump_project INFO | Exporting project P9

2023-05-02 18:54:23,266 COMMAND.DATA dump_project INFO | Exported project P9 to /data1/VICTOR_RAW_DATA/P9/project.json in 0.00s

2023-05-02 18:54:23,911 COMMAND.DATA dump_project INFO | Exporting project P10

2023-05-02 18:54:23,914 COMMAND.DATA dump_project INFO | Exported project P10 to /data1/VICTOR_RAW_DATA/P10/project.json in 0.00s

2023-05-02 18:54:26,107 COMMAND.DATA dump_project INFO | Exporting project P11

2023-05-02 18:54:26,109 COMMAND.DATA dump_project INFO | Exported project P11 to /data1/VICTOR_RAW_DATA/P11/project.json in 0.00s

2023-05-02 18:54:26,510 COMMAND.DATA dump_project INFO | Exporting project P12

2023-05-02 18:54:26,511 COMMAND.DATA dump_project INFO | Exported project P12 to /data1/VICTOR_RAW_DATA/221020_MTG14_tRNA/P12/project.json in 0.00s

2023-05-02 18:54:27,114 COMMAND.DATA dump_project INFO | Exporting project P13

2023-05-02 18:54:27,116 COMMAND.DATA dump_project INFO | Exported project P13 to /data1/VICTOR_RAW_DATA/230119_JD116-CS-proccessing/P13/project.json in 0.00s

2023-05-02 19:31:44,958 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-02 19:39:45,389 COMMAND.DATA dump_project INFO | Exporting project P14

2023-05-02 19:39:45,395 COMMAND.DATA dump_project INFO | Exported project P14 to /Bobcat/230406-JD116_monomer/P14/project.json in 0.01s

2023-05-02 19:39:45,877 COMMAND.DATA dump_project INFO | Exporting project P15

2023-05-02 19:39:45,882 COMMAND.DATA dump_project INFO | Exported project P15 to /Bobcat/230501_JD116-R582/CS-230501-jd116-r582-/project.json in 0.01s

2023-05-02 20:31:45,257 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-02 21:31:45,398 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-02 22:31:46,122 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-02 23:31:46,128 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 00:31:47,124 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 01:31:47,625 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 02:31:48,401 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 03:31:48,910 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 04:31:49,049 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 05:31:49,286 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 06:31:49,524 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 07:31:50,363 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 08:31:51,083 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 09:31:51,556 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

2023-05-03 10:31:52,536 COMMAND.BG_WORKER background_worker INFO | License does not have telemetry enabled; will re-check license in 1 hour.

Waiting for data... (interrupt to abort)

Error in 3D Refinement:

$ cryosparcm joblog P14 J96

================= CRYOSPARCW ======= 2023-05-02 18:14:05.777105 =========

Project P14 Job J96

Master c105627.dhcp.swmed.org Port 39002

===========================================================================

========= monitor process now starting main process at 2023-05-02 18:14:05.777159

MAINPROCESS PID 157830

MAIN PID 157830

refine.newrun cryosparc_compute.jobs.jobregister

**** handle exception rc

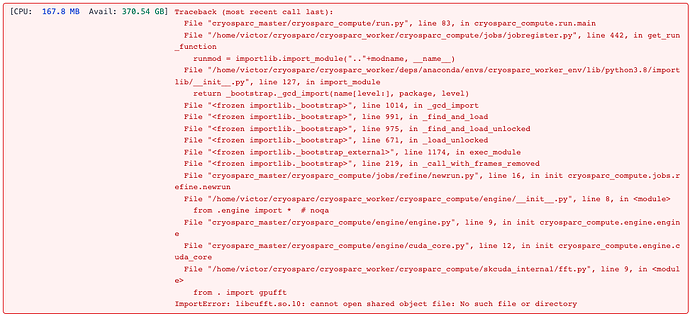

Traceback (most recent call last):

File "cryosparc_master/cryosparc_compute/run.py", line 83, in cryosparc_compute.run.main

File "/home/victor/cryosparc/cryosparc_worker/cryosparc_compute/jobs/jobregister.py", line 442, in get_run_function

runmod = importlib.import_module(".."+modname, __name__)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1014, in _gcd_import

File "<frozen importlib._bootstrap>", line 991, in _find_and_load

File "<frozen importlib._bootstrap>", line 975, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 671, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 1174, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "cryosparc_master/cryosparc_compute/jobs/refine/newrun.py", line 16, in init cryosparc_compute.jobs.refine.newrun

File "/home/victor/cryosparc/cryosparc_worker/cryosparc_compute/engine/__init__.py", line 8, in <module>

from .engine import * # noqa

File "cryosparc_master/cryosparc_compute/engine/engine.py", line 9, in init cryosparc_compute.engine.engine

File "cryosparc_master/cryosparc_compute/engine/cuda_core.py", line 12, in init cryosparc_compute.engine.cuda_core

File "/home/victor/cryosparc/cryosparc_worker/cryosparc_compute/skcuda_internal/fft.py", line 9, in <module>

from . import gpufft

ImportError: libcufft.so.10: cannot open shared object file: No such file or directory

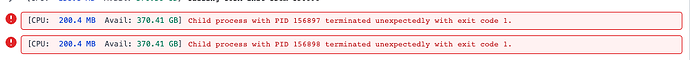

set status to failed

========= monitor process now waiting for main process

========= main process now complete at 2023-05-02 18:14:09.727332.

========= monitor process now complete at 2023-05-02 18:14:09.732920.
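
The `ImportError` in this job log says the loader could not find `libcufft.so.10`. In case it helps narrow that down, here is a minimal sketch that lists which `libcufft` files are actually present in the directories named in `LD_LIBRARY_PATH`. The helper name `find_shared_library` is hypothetical (it is not part of CryoSPARC), and the sketch looks only at `LD_LIBRARY_PATH`, not at the `ldconfig` cache or other locations the loader may search. It should be run in a shell where the worker environment has already been loaded with `cryosparcw env`.

```python
import os
from pathlib import Path


def find_shared_library(name, search_path=None):
    """List files whose name starts with `name` in each directory of a search path.

    `search_path` is a colon-separated string; it defaults to LD_LIBRARY_PATH.
    Hypothetical helper for illustration only.
    """
    if search_path is None:
        search_path = os.environ.get("LD_LIBRARY_PATH", "")
    matches = []
    for entry in search_path.split(os.pathsep):
        if not entry:
            continue  # skip empty components such as a trailing ':'
        directory = Path(entry)
        if not directory.is_dir():
            continue  # directory is on the path but does not exist on disk
        for candidate in sorted(directory.iterdir()):
            if candidate.name.startswith(name):
                matches.append(candidate)
    return matches


if __name__ == "__main__":
    # Print every libcufft variant found, so the available version
    # suffixes can be compared with the one named in the ImportError.
    for path in find_shared_library("libcufft.so"):
        print(path)
```

If nothing named exactly `libcufft.so.10` shows up in the output, that would be consistent with the error, since the loader matches the full file name including the version suffix.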

Error in Patch Motion Correction:

$ cryosparcm joblog P15 J6

ssing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numpy/core/fromnumeric.py:3372: RuntimeWarning: Mean of empty slice.

return _methods._mean(a, axis=axis, dtype=dtype,

/home/victor/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numpy/core/_methods.py:170: RuntimeWarning: invalid value encountered in double_scalars

ret = ret.dtype.type(ret / rcount)

========= main process now complete at 2023-05-02 18:09:13.903234.

========= monitor process now complete at 2023-05-02 18:09:14.002068.

I hope this helps.