Did you run cryosparcw connect on the workers (not the master)?

Yes, and I also see these nodes among the available targets under “Queue job” in the CryoSPARC web GUI.

cryosparcm cli "get_scheduler_targets()"

----------------------------------------

cache_path: /data/cryosparc_cache

cache_quota_mb: None

cache_reserve_mb: 10000

desc: None

gpus: [{'id': 0, 'mem': 11554717696, 'name': 'NVIDIA GeForce RTX 2080 Ti'}, {'id': 1, 'mem': 11554717696, 'name': 'NVIDIA GeForce RTX 2080 Ti'}, {'id': 2, 'mem': 11554717696, 'name': 'NVIDIA GeForce RTX 2080 Ti'}, {'id': 3, 'mem': 11554717696, 'name': 'NVIDIA GeForce RTX 2080 Ti'}]

hostname: cmm-1

lane: default

monitor_port: None

name: cmm-1

resource_fixed: {'SSD': True}

resource_slots: {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29]}

ssh_str: cryosparcuser@cmm-1

title: Worker node cmm-1

type: node

worker_bin_path: /opt/cryosparc/cryosparc_worker/bin/cryosparcw

----------------------------------------

cache_path: /home/cryosparcuser/cache

cache_quota_mb: None

cache_reserve_mb: 10000

desc: None

gpus: [{'id': 1, 'mem': 11554717696, 'name': 'GeForce RTX 2080 Ti'}, {'id': 2, 'mem': 11554717696, 'name': 'GeForce RTX 2080 Ti'}, {'id': 3, 'mem': 11554717696, 'name': 'GeForce RTX 2080 Ti'}]

hostname: cmm2

lane: slow_lane

monitor_port: None

name: cmm2

resource_fixed: {'SSD': True}

resource_slots: {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17], 'GPU': [1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29]}

ssh_str: cryosparcuser@cmm2

title: Worker node cmm2

type: node

worker_bin_path: /opt/cryosparc/cryosparc_worker/bin/cryosparcw

----------------------------------------

cache_path: /storage/cryosparcuser/cache

cache_quota_mb: None

cache_reserve_mb: 10000

desc: None

hostname: cmm3

lane: cpu_only

monitor_port: None

name: cmm3

resource_fixed: {'SSD': True}

resource_slots: {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127], 'GPU': [], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29]}

ssh_str: cryosparcuser@cmm3

title: Worker node cmm3

type: node

worker_bin_path: /storage/apps/cryosparc/cryosparc_worker/bin/cryosparcw

----------------------------------------

cache_path: /data/cryosparc_cache

cache_quota_mb: None

cache_reserve_mb: 10000

desc: None

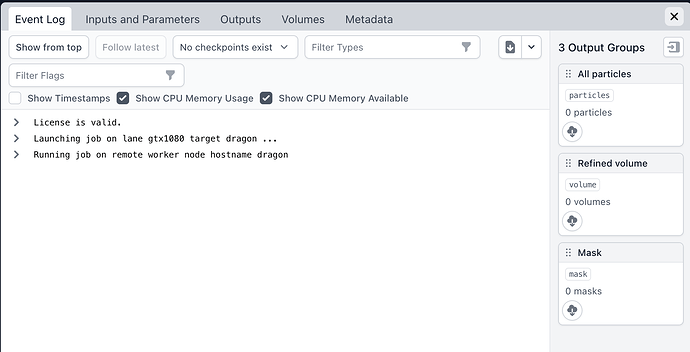

gpus: [{'id': 1, 'mem': 11721506816, 'name': 'GeForce GTX 1080 Ti'}, {'id': 2, 'mem': 11721506816, 'name': 'GeForce GTX 1080 Ti'}, {'id': 3, 'mem': 11721506816, 'name': 'GeForce GTX 1080 Ti'}]

hostname: dragon

lane: gtx1080

monitor_port: None

name: dragon

resource_fixed: {'SSD': True}

resource_slots: {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87], 'GPU': [1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]}

ssh_str: cryosparcuser@dragon

title: Worker node dragon

type: node

worker_bin_path: /home/cryosparcuser/cryosparc_app/cryosparc_worker/bin/cryosparcw
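For easier comparison, the records above can be condensed to one row per target. This is only a rough sketch: it assumes the targets have already been loaded into a list of Python dicts with the same keys as shown in the output (how they are loaded is left open), and `summarize_targets` is a hypothetical helper name, not part of CryoSPARC. The sample data is an abbreviated copy of three of the records above.

```python
def summarize_targets(targets):
    """Condense scheduler target records to one summary row per target.

    `targets` is assumed to be a list of dicts with the keys shown in the
    get_scheduler_targets() output above. Missing keys are tolerated,
    since the cmm3 record above has no 'gpus' entry at all.
    """
    rows = []
    for target in targets:
        slots = target.get("resource_slots", {})
        rows.append({
            "name": target.get("name"),
            "lane": target.get("lane"),
            "gpus_listed": len(target.get("gpus", [])),
            "gpu_slots": len(slots.get("GPU", [])),
            "cpu_slots": len(slots.get("CPU", [])),
            "ram_slots": len(slots.get("RAM", [])),
        })
    return rows


# Abbreviated copies of three records from the output above.
targets = [
    {"name": "cmm-1", "lane": "default",
     "gpus": [{"id": i} for i in range(4)],
     "resource_slots": {"CPU": list(range(18)), "GPU": [0, 1, 2, 3],
                        "RAM": list(range(30))}},
    {"name": "cmm3", "lane": "cpu_only",
     "resource_slots": {"CPU": list(range(128)), "GPU": [],
                        "RAM": list(range(30))}},
    {"name": "dragon", "lane": "gtx1080",
     "gpus": [{"id": i} for i in (1, 2, 3)],
     "resource_slots": {"CPU": list(range(88)), "GPU": [1, 2, 3],
                        "RAM": list(range(16))}},
]

for row in summarize_targets(targets):
    print(row)
```

Laid out this way, cmm3 stands out as the only target with no GPUs listed and an empty GPU slot list, which matches its `cpu_only` lane.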

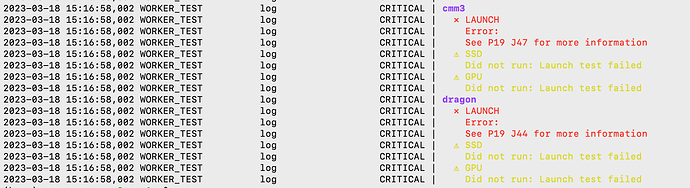

cryosparcm eventlog P19 J47

(base) cryosparcuser@cmm-1:~$ cryosparcm eventlog P19 J47

License is valid.

Launching job on lane cpu_only target cmm3 ...

Running job on remote worker node hostname cmm3

**** Kill signal sent by unknown user ****