Hi,

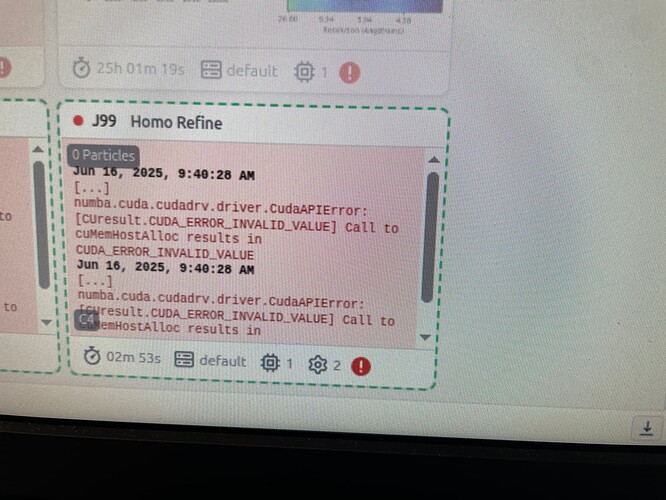

I’m using the latest version of CryoSPARC, but homogeneous refinement keeps failing on my dataset. What could be the reasons for this failure, and how can I troubleshoot it?

I have attached the images here for your review.

@shubhi Please can you post

- the error messages as text

- the output of these commands (run on the CryoSPARC worker where the job failed)

uname -a

free -h

nvidia-smi
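If it is easier, the three commands above can be captured as text in one file and pasted from there. This is only a sketch: the function name `collect_diagnostics` and the file name `diagnostics.txt` are arbitrary choices for illustration, not anything CryoSPARC provides.

```shell
collect_diagnostics() {
    # $1 = output file (hypothetical name; defaults to diagnostics.txt)
    out="${1:-diagnostics.txt}"
    : > "$out"   # create or truncate the output file
    for cmd in "uname -a" "free -h" "nvidia-smi"; do
        printf '$ %s\n' "$cmd" >> "$out"
        # Record a failure (e.g. a missing command) instead of aborting
        $cmd >> "$out" 2>&1 || printf '(command failed or not found)\n' >> "$out"
        printf '\n' >> "$out"
    done
}

collect_diagnostics diagnostics.txt
```

Run it on the worker where the job failed, then paste the contents of `diagnostics.txt` as text.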

Sure, here it is:

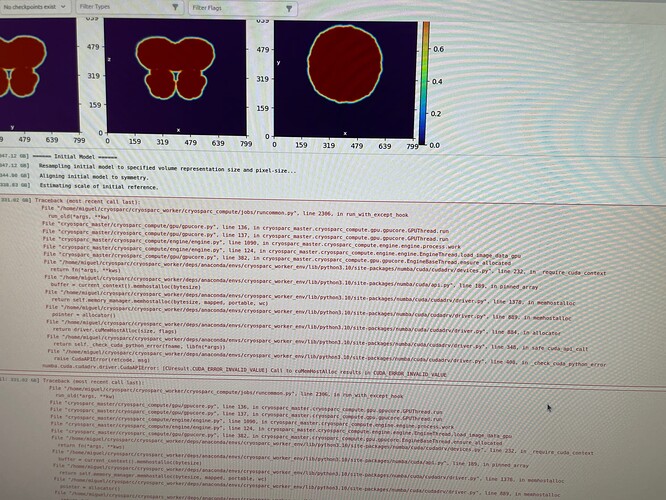

Traceback (most recent call last):

File "/home/miguel/cryosparc/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 2306, in run_with_except_hook

run_old(*args, **kw)

File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 136, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run

File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 137, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run

File "cryosparc_master/cryosparc_compute/engine/engine.py", line 1090, in cryosparc_master.cryosparc_compute.engine.engine.process.work

File "cryosparc_master/cryosparc_compute/engine/engine.py", line 124, in cryosparc_master.cryosparc_compute.engine.engine.EngineThread.load_image_data_gpu

File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 382, in cryosparc_master.cryosparc_compute.gpu.gpucore.EngineBaseThread.ensure_allocated

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/devices.py", line 232, in _require_cuda_context

return fn(*args, **kws)

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/api.py", line 189, in pinned_array

buffer = current_context().memhostalloc(bytesize)

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 1378, in memhostalloc

return self.memory_manager.memhostalloc(bytesize, mapped, portable, wc)

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 889, in memhostalloc

pointer = allocator()

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 884, in allocator

return driver.cuMemHostAlloc(size, flags)

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 348, in safe_cuda_api_call

return self._check_cuda_python_error(fname, libfn(*args))

File "/home/miguel/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 408, in _check_cuda_python_error

raise CudaAPIError(retcode, msg)

numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_INVALID_VALUE] Call to cuMemHostAlloc results in CUDA_ERROR_INVALID_VALUE

Linux miguel-System-Product-Name 6.11.0-26-generic #26~24.04.1-Ubuntu SMP PREEMPT_DYNAMIC Thu Apr 17 19:20:47 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

Mon Jun 16 14:33:16 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.144.03 Driver Version: 550.144.03 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4090 Off | 00000000:41:00.0 Off | Off |

| 0% 36C P8 10W / 450W | 412MiB / 24564MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 NVIDIA GeForce RTX 4090 Off | 00000000:42:00.0 On | Off |

| 0% 32C P8 15W / 450W | 344MiB / 24564MiB | 2% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 3049 G /usr/lib/xorg/Xorg 4MiB |

| 0 N/A N/A 3391 C+G ...libexec/gnome-remote-desktop-daemon 390MiB |

| 1 N/A N/A 3049 G /usr/lib/xorg/Xorg 195MiB |

| 1 N/A N/A 3467 G /usr/bin/gnome-shell 74MiB |

| 1 N/A N/A 4765 G /opt/google/chrome/chrome 6MiB |

| 1 N/A N/A 4815 G ...seed-version=20250613-050032.546000 47MiB |

+-----------------------------------------------------------------------------------------+

total used free shared buff/cache available

Mem: 377Gi 31Gi 46Gi 317Mi 303Gi 346Gi

Swap: 8.0Gi 512Ki 8.0Gi

@shubhi Please can you try adding the line

export CRYOSPARC_NO_PAGELOCK=true

to the file

/home/miguel/cryosparc/cryosparc_worker/config.sh

and let us know if the problem persists?
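One way to add that line from a terminal is sketched below. The helper name `add_line_once` is made up for this example; it appends the line only if an identical line is not already in the file, so running it twice is harmless. The path is the one from the traceback above and may need adjusting on other installations.

```shell
# Path taken from the traceback above; override CONFIG if the worker lives elsewhere.
CONFIG="${CONFIG:-/home/miguel/cryosparc/cryosparc_worker/config.sh}"
LINE='export CRYOSPARC_NO_PAGELOCK=true'

add_line_once() {
    # $1 = file, $2 = exact line that should be present
    # grep: -q quiet, -x whole-line match, -F fixed string (no regex)
    if ! grep -qxF -- "$2" "$1" 2>/dev/null; then
        # If the file is non-empty and lacks a trailing newline, add one first
        if [ -s "$1" ] && [ -n "$(tail -c 1 "$1")" ]; then
            printf '\n' >> "$1"
        fi
        printf '%s\n' "$2" >> "$1"
    fi
}

if [ -f "$CONFIG" ]; then
    add_line_once "$CONFIG" "$LINE"
else
    echo "config file not found: $CONFIG" >&2
fi
```

Afterwards, `grep CRYOSPARC_NO_PAGELOCK "$CONFIG"` should show the new line. Then re-run the refinement job to see whether the error persists.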