Hello,

Thank you very much for the warm welcome.

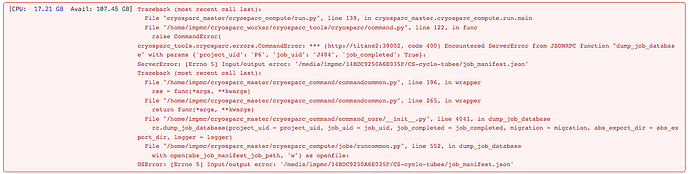

Sorry, here is the traceback as text:

Traceback (most recent call last):

File “cryosparc_master/cryosparc_compute/run.py”, line 139, in cryosparc_master.cryosparc_compute.run.main

File “/home/impmc/cryosparc_worker/cryosparc_tools/cryosparc/command.py”, line 122, in func

raise CommandError(

cryosparc_tools.cryosparc.errors.CommandError: *** (http://titane2:39002, code 400) Encountered ServerError from JSONRPC function “dump_job_database” with params {‘project_uid’: ‘P6’, ‘job_uid’: ‘J404’, ‘job_completed’: True}:

ServerError: [Errno 5] Input/output error: ‘/media/impmc/14BDC9250A6E035F/CS-cyclo-tubes/job_manifest.json’

Traceback (most recent call last):

File “/home/impmc/cryosparc_master/cryosparc_command/commandcommon.py”, line 196, in wrapper

res = func(*args, **kwargs)

File “/home/impmc/cryosparc_master/cryosparc_command/commandcommon.py”, line 265, in wrapper

return func(*args, **kwargs)

File “/home/impmc/cryosparc_master/cryosparc_command/command_core/__init__.py”, line 4041, in dump_job_database

rc.dump_job_database(project_uid = project_uid, job_uid = job_uid, job_completed = job_completed, migration = migration, abs_export_dir = abs_export_dir, logger = logger)

File “/home/impmc/cryosparc_master/cryosparc_compute/jobs/runcommon.py”, line 552, in dump_job_database

with open(abs_job_manifest_job_path, ‘w’) as openfile:

OSError: [Errno 5] Input/output error: ‘/media/impmc/14BDC9250A6E035F/CS-cyclo-tubes/job_manifest.json’
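
The failing call in the traceback is a plain `open(..., ‘w’)` inside the project directory, so the same kind of write could be tried outside CryoSPARC. Below is a minimal sketch, assuming Python 3 is available on the master node. `probe_write` is a hypothetical helper name and is not part of CryoSPARC; the path is the project directory from the traceback.

```python
import errno
import os
import tempfile


def probe_write(directory):
    """Try to create, write and delete a small scratch file in `directory`.

    Returns (True, None) on success, or (False, errno_name) if an OSError occurs.
    """
    try:
        # Use a uniquely named scratch file so no existing file is touched.
        fd, path = tempfile.mkstemp(prefix="write_probe_", dir=directory)
        try:
            os.write(fd, b"probe\n")
            os.fsync(fd)  # push the data to the device so I/O errors surface here
        finally:
            os.close(fd)
            os.remove(path)
        return True, None
    except OSError as exc:
        # Translate the numeric errno (e.g. 5) into its symbolic name (e.g. EIO).
        return False, errno.errorcode.get(exc.errno, str(exc.errno))


if __name__ == "__main__":
    # Project directory taken from the traceback; adjust if it differs.
    project_dir = "/media/impmc/14BDC9250A6E035F/CS-cyclo-tubes"
    ok, err = probe_write(project_dir)
    print("write OK" if ok else f"write failed: {err}")
```

If this also fails with `EIO`, the problem would seem to lie with the disk or its mount rather than with CryoSPARC itself, though that is only an assumption at this point.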

Here are the results of the commands you suggested:

- df -Th

Filesystem Type Size Used Avail Use% Mounted on

sde2 fuseblk 15T 14T 1,3T 92% /media/impmc/14BDC9250A6E035F

- ps -eo user:16,command | grep cryosparc_

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/impmc/cryosparc_master/supervisord.conf

impmc mongod --auth --dbpath /home/impmc/cryosparc_database --port 39001 --oplogSize 64 --replSet meteor --wiredTigerCacheSizeGB 4 --bind_ip_all

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn -n command_core -b 0.0.0.0:39002 cryosparc_command.command_core:start() -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn -n command_core -b 0.0.0.0:39002 cryosparc_command.command_core:start() -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_vis:app -n command_vis -b 0.0.0.0:39003 -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_vis:app -n command_vis -b 0.0.0.0:39003 -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_rtp:start() -n command_rtp -b 0.0.0.0:39005 -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc python /home/impmc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_rtp:start() -n command_rtp -b 0.0.0.0:39005 -c /home/impmc/cryosparc_master/gunicorn.conf.py

impmc /home/impmc/cryosparc_master/cryosparc_app/nodejs/bin/node ./bundle/main.js

impmc grep --color=auto cryosparc_

- ls -la /path/to/Cs-cyclo-tubes

ls: cannot access ‘/path/to/Cs-cyclo-tubes’: No such file or directory

The last command gave an unexpected result: in fact, I can access the folder and see all the jobs I have already finished.

I can provide more information if needed.