Dear CryoSPARC team,

I am running into an issue with my CryoSPARC installation (it seems to be the worker) after upgrading to v4.0.x (originally 4.0.1, now 4.0.3). The worker and master are on the same version, and both have the correct license ID in their config.sh files. I have also forced an override update of cryosparc_worker, and disconnected and reconnected it from the master, to no avail.
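For reference, the license ID check can be done mechanically rather than by eye. This is a minimal sketch that assumes both config.sh files use plain `export CRYOSPARC_LICENSE_ID="..."` lines; the function names are my own, and the file paths are whatever the actual master and worker install directories are.

```python
import re
import sys
from pathlib import Path

# Matches simple lines such as: export CRYOSPARC_LICENSE_ID="abc-123"
# (assumption: the installer writes this plain export form)
EXPORT_RE = re.compile(r'^\s*export\s+([A-Za-z_][A-Za-z0-9_]*)=["\']?([^"\'\n]*)["\']?\s*$')


def parse_exports(text: str) -> dict:
    """Return {NAME: value} for every simple `export NAME=value` line."""
    exports = {}
    for line in text.splitlines():
        match = EXPORT_RE.match(line)
        if match:
            exports[match.group(1)] = match.group(2)
    return exports


def license_ids_match(master_config: str, worker_config: str) -> bool:
    """True only if both files define the same, non-missing CRYOSPARC_LICENSE_ID."""
    master_id = parse_exports(master_config).get("CRYOSPARC_LICENSE_ID")
    worker_id = parse_exports(worker_config).get("CRYOSPARC_LICENSE_ID")
    return master_id is not None and master_id == worker_id


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python compare_license.py <master config.sh> <worker config.sh>
    print(license_ids_match(Path(sys.argv[1]).read_text(), Path(sys.argv[2]).read_text()))
```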

Jobs that require a worker (e.g., Extract from Micrographs) hang. Originally they hung in the "launched" state; after I pointed the CUDA path to a version >10, they now hang in the "running" state. Here is the current Event Log output for a job that is hanging:

License is valid.

Launching job on lane default target em504-02.ibex.kaust.edu.sa ...

Running job on master node hostname em504-02.ibex.kaust.edu.sa

[CPU: 85.3 MB]

Job J142 Started

[CPU: 85.4 MB]

Master running v4.0.3, worker running v4.0.3

[CPU: 85.6 MB]

Working in directory: /ibex/scratch/projects/c2121/Brandon/cryosparc_datasets/P5/J142

[CPU: 85.6 MB]

Running on lane default

[CPU: 85.6 MB]

Resources allocated:

[CPU: 85.6 MB]

Worker: em504-02.ibex.kaust.edu.sa

[CPU: 85.6 MB]

CPU : [0, 1, 2, 3]

[CPU: 85.6 MB]

GPU : [0, 1]

[CPU: 85.6 MB]

RAM : [0]

[CPU: 85.6 MB]

SSD : False

[CPU: 85.6 MB]

--------------------------------------------------------------

[CPU: 85.6 MB]

Importing job module for job type extract_micrographs_multi...

[CPU: 205.7 MB]

Job ready to run

[CPU: 205.7 MB]

***************************************************************

[CPU: 425.5 MB]

Particles do not have CTF estimates but micrographs do: micrograph CTFs will be recorded in particles output.

[CPU: 470.2 MB]

Collecting micrograph particle selection information...

[CPU: 720.8 MB]

Starting multithreaded pipeline ...

[CPU: 721.0 MB]

Started pipeline
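In case it helps with diagnosis, here is a minimal sketch for checking whether the process for the hanging job (P5 J142) is still alive on the node, by scanning `/proc/<pid>/cmdline`. It assumes, without my having verified it, that CryoSPARC passes the project and job UIDs as separate command-line arguments (e.g. `--project P5 --job J142`); both function names are my own.

```python
import os


def cmdline_matches(args: list, project: str, job: str) -> bool:
    """True if both UIDs appear as separate arguments (assumed launch format)."""
    return project in args and job in args


def find_job_processes(project: str, job: str, proc_root: str = "/proc") -> list:
    """Return sorted PIDs whose command line contains both UIDs (Linux only)."""
    pids = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/self
        path = os.path.join(proc_root, entry, "cmdline")
        try:
            with open(path, "rb") as fh:
                raw = fh.read()
        except OSError:
            continue  # the process exited or is not readable by this user
        # cmdline is NUL-separated; decode leniently in case of odd bytes
        args = raw.decode(errors="replace").split("\0")
        if cmdline_matches(args, project, job):
            pids.append(int(entry))
    return sorted(pids)
```

On the node itself, `print(find_job_processes("P5", "J142"))` would list any matching PIDs; an empty list while the job still shows as running would point to an orphaned job record rather than a stuck process.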

Testing the installation with “cryosparcm test install” is successful:

Running installation tests...

✓ Running as cryoSPARC owner

✓ Running on master node

✓ CryoSPARC is running

✓ Connected to command_core at http://em504-02.ibex.kaust.edu.sa:39002

✓ CRYOSPARC_LICENSE_ID environment variable is set

✓ License has correct format

✓ Insecure mode is disabled

✓ License server set to “https://get.cryosparc.com”

✓ Connection to license server succeeded

✓ License server returned success status code 200

✓ License server returned valid JSON response

✓ License exists and is valid

✓ CryoSPARC is running v4.0.3

✓ Running the latest version of CryoSPARC

Could not get latest patch (status code 404)

✓ Patch update not required

✓ Admin user has been created

✓ GPU worker connected.

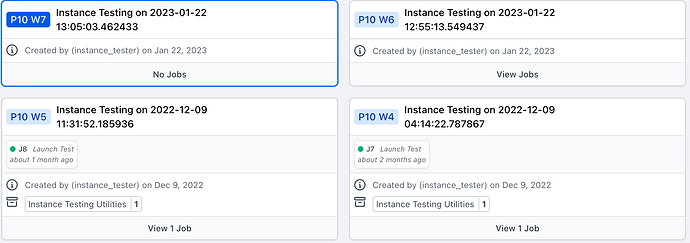

However, testing the workers fails (we are running the master and worker on the same node):

[zahodnbd@em504-02 ~]$ cryosparcm test workers P10

Using project P10

Running worker tests...

2022-12-08 16:06:00,532 WORKER_TEST log CRITICAL | Worker test results

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | em504-02.ibex.kaust.edu.sa

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | ✕ LAUNCH

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | Error:

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | See P10 J5 for more information

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | ⚠ SSD

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | Did not run: Launch test failed

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | ⚠ GPU

2022-12-08 16:06:00,533 WORKER_TEST log CRITICAL | Did not run: Launch test failed

The event log for P10 J5 is below; it contains an unexpected message saying the job was killed by an unknown user:

License is valid.

Launching job on lane default target em504-02.ibex.kaust.edu.sa ...

Running job on master node hostname em504-02.ibex.kaust.edu.sa

**** Kill signal sent by unknown user ****

[CPU: 82.0 MB]

Job J5 Started

[CPU: 82.0 MB]

Master running v4.0.3, worker running v4.0.3

[CPU: 82.0 MB]

Working in directory: /ibex/scratch/projects/c2121/Brandon/cryosparc_datasets/CS-test-project/J5

[CPU: 82.0 MB]

Running on lane default

[CPU: 82.0 MB]

Resources allocated:

[CPU: 82.0 MB]

Worker: em504-02.ibex.kaust.edu.sa

[CPU: 82.0 MB]

CPU : [8]

[CPU: 82.0 MB]

GPU : []

[CPU: 82.0 MB]

RAM : [2]

[CPU: 82.0 MB]

SSD : False

[CPU: 82.0 MB]

--------------------------------------------------------------

[CPU: 82.0 MB]

Importing job module for job type instance_launch_test...

[CPU: 190.4 MB]

Job ready to run

[CPU: 190.4 MB]

***************************************************************

[CPU: 190.5 MB]

Job successfully running

[CPU: 190.5 MB]

--------------------------------------------------------------

[CPU: 190.5 MB]

Compiling job outputs...

[CPU: 190.5 MB]

Updating job size...

[CPU: 190.5 MB]

Exporting job and creating csg files...

[CPU: 190.5 MB]

***************************************************************

[CPU: 190.5 MB]

Job complete. Total time 3.82s

CryoSPARC is installed on an isolated single node on our cluster with 8 GPUs and 40 CPUs, and it was running successfully prior to the upgrade to 4.0. Our IT support team has not been able to locate the issue.

Starting from the last working state of CryoSPARC, I created a backup of my database and upgraded to v4.0.1, after which my jobs got stuck in the “launched” state. I then deleted CryoSPARC, did a fresh install of v4.0.3, and restored the database from the backup created before the upgrade. Since then, I can run jobs that run only on the master (e.g., Import Micrographs), but jobs that need the worker run into the issue above.

Additionally, because of the reinstallation, I also ran into another error (below) caused by a mismatch of expected instance IDs. However, P10 in the examples above is a new project created under this fresh installation, so I don't think this error is contributing to the hanging jobs.

Unable to detach P1: ServerError: validation error: instance id mismatch for P1. Expected 82432f20-b84a-4f7c-a4f2-ccb135a8f658, actual e1b5b125-f99e-44e9-adb2-7bb6c91e1b60. This indicates that P1 was attached to another cryoSPARC instance without detaching from this one.

Any help is appreciated! Thank you!

cryoSPARC instance information

- Type: master-worker

- Software version: v4.0.3 (from cryosparcm status)

[zahodnbd@em504-02 ~]$ uname -a && free -g

Linux em504-02 3.10.0-1160.76.1.el7.x86_64 #1 SMP Wed Aug 10 16:21:17 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

| | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem: | 376 | 8 | 358 | 7 | 9 | 359 |
| Swap: | 29 | 0 | 29 | | | |

CryoSPARC worker environment

CUDA Toolkit Path: /sw/csgv/cuda/11.2.2/el7.9_binary/
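Since the PyCUDA error below still mentions a CUDA 9.0 library, it may be worth confirming which version the configured toolkit directory actually contains. A minimal sketch, assuming 11.x toolkits ship a `version.json` with a `cuda.version` field and older ones a `version.txt` line like `CUDA Version 10.2.89`; the helper names are my own.

```python
import json
import re
from pathlib import Path
from typing import Optional


def version_from_json(text: str) -> Optional[str]:
    """Read the version from a version.json document (assumed layout)."""
    data = json.loads(text)
    return data.get("cuda", {}).get("version")


def version_from_txt(text: str) -> Optional[str]:
    """Read the version from a line such as 'CUDA Version 10.2.89'."""
    match = re.search(r"CUDA Version\s+([0-9.]+)", text)
    return match.group(1) if match else None


def cuda_toolkit_version(toolkit_dir: str) -> Optional[str]:
    """Best-effort lookup; returns None if no readable version file exists."""
    root = Path(toolkit_dir)
    json_file = root / "version.json"
    if json_file.is_file():
        version = version_from_json(json_file.read_text())
        if version:
            return version
    txt_file = root / "version.txt"
    if txt_file.is_file():
        return version_from_txt(txt_file.read_text())
    return None
```

For example, `cuda_toolkit_version("/sw/csgv/cuda/11.2.2/el7.9_binary/")` should report an 11.x version if the directory is what its name suggests.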

PyCUDA information:

[zahodnbd@em504-02 ~]$ python -c "import pycuda.driver; print(pycuda.driver.get_version())"

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/ibex/scratch/projects/c2121/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/pycuda/driver.py", line 62, in <module>

from pycuda._driver import * # noqa

ImportError: libcurand.so.9.0: cannot open shared object file: No such file or directory

[zahodnbd@em504-02 ~]$ uname -a && free -g && nvidia-smi

Linux em504-02 3.10.0-1160.76.1.el7.x86_64 #1 SMP Wed Aug 10 16:21:17 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

| | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem: | 376 | 8 | 358 | 7 | 9 | 359 |
| Swap: | 29 | 0 | 29 | | | |

Thu Dec 8 18:40:23 2022

NVIDIA-SMI 520.61.05, Driver Version: 520.61.05, CUDA Version: 11.8

| GPU | Name | Persistence-M | Bus-Id | Disp.A | Volatile Uncorr. ECC | Fan | Temp | Perf | Pwr:Usage/Cap | Memory-Usage | GPU-Util | Compute M. | MIG M. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | NVIDIA GeForce ... | On | 00000000:1A:00.0 | Off | N/A | 29% | 25C | P8 | 10W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 1 | NVIDIA GeForce ... | On | 00000000:1C:00.0 | Off | N/A | 30% | 28C | P8 | 10W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 2 | NVIDIA GeForce ... | On | 00000000:1D:00.0 | Off | N/A | 30% | 27C | P8 | 17W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 3 | NVIDIA GeForce ... | On | 00000000:1E:00.0 | Off | N/A | 31% | 27C | P8 | 18W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 4 | NVIDIA GeForce ... | On | 00000000:3D:00.0 | Off | N/A | 30% | 24C | P8 | 4W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 5 | NVIDIA GeForce ... | On | 00000000:3F:00.0 | Off | N/A | 30% | 26C | P8 | 26W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 6 | NVIDIA GeForce ... | On | 00000000:40:00.0 | Off | N/A | 31% | 25C | P8 | 2W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |
| 7 | NVIDIA GeForce ... | On | 00000000:41:00.0 | Off | N/A | 30% | 26C | P8 | 10W / 250W | 1MiB / 11264MiB | 0% | Default | N/A |

Processes: No running processes found

The PyCUDA result is surprising: I had also changed the CUDA path to a different location, and that step reported that it had successfully redownloaded PyCUDA.
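One way to see which CUDA libraries the installed PyCUDA build still expects is to run `ldd` on its compiled `_driver` extension and list the unresolved entries; the `libcurand.so.9.0` in the traceback suggests the extension may still be built against CUDA 9.0 rather than the 11.2.2 toolkit now configured. This is a minimal sketch that assumes the extension is named `_driver*.so` inside the `pycuda` package directory and that `ldd` is on the PATH; the function names are my own, and it must be run with the worker environment's Python.

```python
import glob
import importlib.util
import os
import subprocess


def missing_libraries(ldd_output: str) -> list:
    """Return shared-library names that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


def check_pycuda_driver() -> list:
    """Locate pycuda without importing pycuda.driver, then ldd its extension."""
    spec = importlib.util.find_spec("pycuda")  # top-level lookup, no import of driver
    if spec is None or not spec.submodule_search_locations:
        raise RuntimeError("pycuda is not installed in this Python environment")
    pkg_dir = list(spec.submodule_search_locations)[0]
    candidates = glob.glob(os.path.join(pkg_dir, "_driver*.so"))
    if not candidates:
        raise RuntimeError("no _driver*.so found in " + pkg_dir)
    result = subprocess.run(["ldd", candidates[0]], capture_output=True,
                            text=True, check=True)
    return missing_libraries(result.stdout)
```

Calling `print(check_pycuda_driver())` from the worker environment would list any libraries (such as `libcurand.so.9.0`) that the dynamic loader cannot resolve.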