CryoSPARC instance information

CryoSPARC is installed on a PBS cluster system.

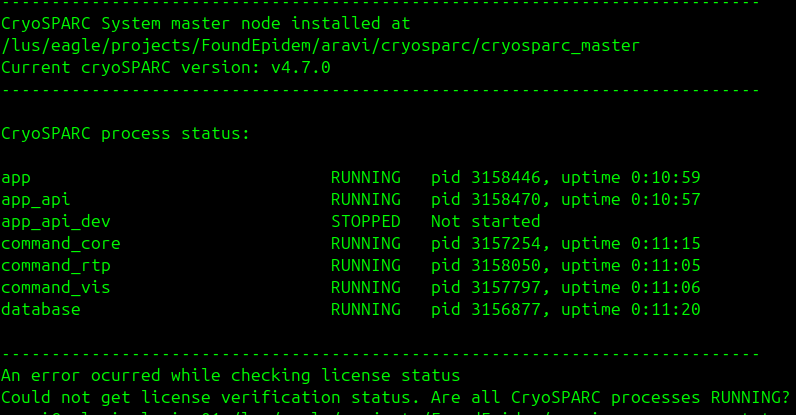

Output of `cryosparcm status`:

We are aware of the license status issue; it appears to fail verification only while CryoSPARC is running.

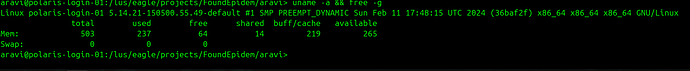

Output of `uname -a && free -g`:

`nvidia-smi` output:

```
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.154.05             Driver Version: 535.154.05   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA A100 80GB PCIe          On  | 00000000:A3:00.0 Off |                   On |
| N/A   38C    P0              47W / 300W |      0MiB / 81920MiB |     N/A      Default |
|                                         |                      |              Enabled |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| MIG devices:                                                                          |
+------------------+--------------------------------+-----------+-----------------------+
| GPU  GI  CI  MIG |                   Memory-Usage |        Vol|      Shared           |
|      ID  ID  Dev |                     BAR1-Usage | SM     Unc| CE ENC DEC OFA JPG    |
|                  |                                |        ECC|                       |
|==================+================================+===========+=======================|
|  No MIG devices found                                                                 |
+---------------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|  No running processes found                                                           |
+---------------------------------------------------------------------------------------+
```
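The diagnostics listed above (`cryosparcm status`, `uname -a`, `free -g`, `nvidia-smi`) can be collected into a single report with a small script. This is a minimal sketch assuming a bash shell; `run_section` and `collect_instance_info` are hypothetical helper names, and any command not found on the current host (for example `nvidia-smi` on a node without GPUs) is reported as missing rather than aborting the script.

```shell
#!/usr/bin/env bash
# Sketch: gather the instance diagnostics into one plain-text report.

# Print a titled section with the output of one command.
# If the command is not on PATH, say so instead of failing.
run_section() {
  local title="$1"; shift
  printf '===== %s =====\n' "$title"
  if command -v "$1" >/dev/null 2>&1; then
    "$@" 2>&1
  else
    printf '%s: not found on this host\n' "$1"
  fi
  printf '\n'
}

# Hypothetical wrapper running the same commands shown in this post.
collect_instance_info() {
  run_section "cryosparcm status" cryosparcm status
  run_section "uname -a" uname -a
  run_section "free -g" free -g
  run_section "nvidia-smi" nvidia-smi
}
```

For example, `collect_instance_info > instance_info.txt` on the master node, then again on a GPU node, gives two reports that can be compared side by side.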

Issue:

This is an issue with running test jobs on a PBS cluster. When we start a test job, it gets stuck in the launched state, and the event log shows that it retries indefinitely. We suspect the cause is that the jobs are sent to the login node’s hostname rather than the correct main cluster hostname. Is there a way to change the values of variables such as `Submit_Host`, `server`, and/or `PBS_O_HOST`?
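To check which hosts PBS actually recorded for a job, the host-related attributes can be pulled out of its status record. This is a sketch assuming the record is in the `qstat -f` format shown in the event log below and is read from standard input; `pbs_host_fields` is a hypothetical helper name. `server` and `Submit_Host` are top-level attributes, while `PBS_O_HOST` is one entry of the comma-separated `Variable_List`, so the two are extracted differently.

```shell
#!/usr/bin/env bash
# Sketch: print the host-related fields of one PBS job record.
# Assumes qstat -f style input on stdin: "Name = value" attribute lines,
# with PBS_O_HOST embedded in the comma-separated Variable_List.
pbs_host_fields() {
  local record
  record="$(cat)"
  # Top-level attributes: strip leading indentation from the name and
  # print only the ones that identify a host.
  printf '%s\n' "$record" | awk -F ' = ' '
    { sub(/^[ \t]+/, "", $1) }
    $1 == "server" || $1 == "Submit_Host" { print $1 "=" $2 }'
  # PBS_O_HOST is a single Variable_List entry, terminated by a comma.
  printf '%s\n' "$record" | grep -o 'PBS_O_HOST=[^,]*'
}
```

On the cluster this could be run as `qstat -f 5131771 | pbs_host_fields` to see all three values at once.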

Here is the event log output:

```
License is valid.
Launching job on lane Polaris target Polaris ...
Launching job on cluster Polaris
====================== Cluster submission script: ========================
==========================================================================
#!/bin/bash
#PBS -N cryosparc_job
#PBS -l select=1:system=polaris,walltime=01:00:00
#PBS -l filesystems=home:eagle
#PBS -A FoundEpidem
#PBS -q debug
module load nvhpc/23.9 PrgEnv-nvhpc/8.5.0
cd /lus/eagle/projects/FoundEpidem/aravi/CS-testing/J3
/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_worker/bin/cryosparcw run --project P1 --job J3 --master_hostname polaris.alcf.anl.gov --master_command_core_port 39002 > /lus/eagle/projects/FoundEpidem/aravi/CS-testing/J3/job.log 2>&1
==========================================================================
==========================================================================
-------- Submission command:
qsub /lus/eagle/projects/FoundEpidem/aravi/CS-testing/J3/queue_sub_script.sh
-------- Cluster Job ID:
5131771.polaris-pbs-01.hsn.cm.polaris.alcf.anl.gov
-------- Queued on cluster at 2025-06-18 16:25:46.632431
-------- Cluster job status at 2025-06-18 16:25:47.234604 (0 retries)
Job Id: 5131771.polaris-pbs-01.hsn.cm.polaris.alcf.anl.gov
    Job_Name = cryosparc_job
    Job_Owner = aravi@polaris-login-01.hsn.cm.polaris.alcf.anl.gov
    job_state = Q
    queue = debug
    server = polaris-pbs-01.hsn.cm.polaris.alcf.anl.gov
    Account_Name = FoundEpidem
    Checkpoint = u
    ctime = Wed Jun 18 16:25:46 2025
    Error_Path = polaris-login-01.hsn.cm.polaris.alcf.anl.gov:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/cryosparc_job.e5131771
    Hold_Types = n
    Join_Path = n
    Keep_Files = doe
    Mail_Points = a
    Mail_Users = aravi@anl.gov
    mtime = Wed Jun 18 16:25:46 2025
    Output_Path = polaris-login-01.hsn.cm.polaris.alcf.anl.gov:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/cryosparc_job.o5131771
    Priority = 0
    qtime = Wed Jun 18 16:25:46 2025
    Rerunable = False
    Resource_List.allow_account_check_failure = True
    Resource_List.allow_negative_allocation = True
    Resource_List.award_category = INCITE
    Resource_List.award_type = INCITE-2025
    Resource_List.backfill_factor = 84600
    Resource_List.backfill_max = 50
    Resource_List.base_score = 51
    Resource_List.burn_ratio = 0.2610
    Resource_List.current_allocation = 11173063680
    Resource_List.eagle_fs = True
    Resource_List.enable_backfill = 0
    Resource_List.enable_fifo = 1
    Resource_List.enable_wfp = 0
    Resource_List.fifo_factor = 1800
    Resource_List.filesystems = home:eagle
    Resource_List.home_fs = True
    Resource_List.mig_avail = True
    Resource_List.ncpus = 64
    Resource_List.ni_resource = polaris
    Resource_List.nodect = 1
    Resource_List.overburn = False
    Resource_List.place = free
    Resource_List.preempt_targets = NONE
    Resource_List.project_priority = 25
    Resource_List.route_backfill = False
    Resource_List.score_boost = 0
    Resource_List.select = 1:system=polaris
    Resource_List.start_xserver = False
    Resource_List.total_allocation = 15120000000
    Resource_List.total_cpus = 560
    Resource_List.walltime = 01:00:00
    Resource_List.wfp_factor = 100000
    substate = 10
    Variable_List = PBS_O_HOME=/home/aravi,PBS_O_LANG=en_US.UTF-8,
        PBS_O_LOGNAME=aravi,
        PBS_O_PATH=/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/external/mongodb/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/anaconda/condabin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/external/mongodb/bin:/lus/eagle/projects/FoundEpidem/aravi/nodejs/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/external/mongodb/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_worker/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/bin:/home/aravi/anaconda3/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/external/mongodb/bin:/lus/eagle/projects/FoundEpidem/aravi/nodejs/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/deps/external/mongodb/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_worker/bin:/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master/bin:/home/aravi/anaconda3/bin:/soft/xalt/3.0.2-202408282050/bin:/soft/perftools/darshan/darshan-3.4.4/bin:/opt/cray/pe/perftools/23.12.0/bin:/opt/cray/pe/papi/7.0.1.2/bin:/opt/cray/libfabric/1.15.2.0/bin:/opt/cray/pals/1.3.4/bin:/opt/cray/pe/mpich/8.1.28/ofi/nvidia/23.3/bin:/opt/cray/pe/mpich/8.1.28/bin:/opt/cray/pe/craype/2.7.30/bin:/opt/nvidia/hpc_sdk/Linux_x86_64/23.9/compilers/extras/qd/bin:/opt/nvidia/hpc_sdk/Linux_x86_64/23.9/compilers/bin:/opt/nvidia/hpc_sdk/Linux_x86_64/23.9/cuda/bin:/opt/clmgr/sbin:/opt/clmgr/bin:/opt/sgi/sbin:/opt/sgi/bin:/usr/local/bin:/usr/bin:/bin:/opt/c3/bin:/dbhome/db2cat/sqllib/bin:/dbhome/db2cat/sqllib/adm:/dbhome/db2cat/sqllib/misc:/dbhome/db2cat/sqllib/gskit/bin:/usr/lib/mit/bin:/usr/lib/mit/sbin:/opt/pbs/bin:/sbin:/opt/cray/pe/bin:/home/aravi/.local/bin:/home/aravi/bin,PBS_O_MAIL=/var/spool/mail/aravi,
        PBS_O_SHELL=/bin/bash,PBS_O_INTERACTIVE_AUTH_METHOD=resvport,
        PBS_O_HOST=polaris-login-01.hsn.cm.polaris.alcf.anl.gov,
        PBS_O_WORKDIR=/lus/eagle/projects/FoundEpidem/aravi/cryosparc/cryosparc_master,PBS_O_SYSTEM=Linux,PBS_O_QUEUE=debug
    etime = Wed Jun 18 16:25:46 2025
    umask = 22
    eligible_time = 00:00:01
    Submit_arguments = /lus/eagle/projects/FoundEpidem/aravi/CS-testing/J3/queue_sub_script.sh
    project = FoundEpidem
    Submit_Host = polaris-login-01.hsn.cm.polaris.alcf.anl.gov
```
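The hosts recorded by PBS can be compared with the host the worker is told to contact when the job starts. This is a minimal sketch, assuming the generated `queue_sub_script.sh` keeps the `cryosparcw run ... --master_hostname H --master_command_core_port P` form seen in the log above; `master_target` is a hypothetical helper that prints `host:port`.

```shell
#!/usr/bin/env bash
# Sketch: print "host:port" that a generated CryoSPARC submission script
# passes to cryosparcw run via --master_hostname and
# --master_command_core_port. Prints nothing if no hostname flag is found.
master_target() {
  local script="$1"
  awk '
    {
      # Scan each line word by word; the value of a flag is the next word.
      for (i = 1; i < NF; i++) {
        if ($i == "--master_hostname") host = $(i + 1)
        if ($i == "--master_command_core_port") port = $(i + 1)
      }
    }
    END { if (host != "") print host ":" port }' "$script"
}
```

Run against the J3 script from the log (`master_target /lus/eagle/projects/FoundEpidem/aravi/CS-testing/J3/queue_sub_script.sh`), this would be expected to print `polaris.alcf.anl.gov:39002`, which can then be set against the `polaris-login-01` value reported in `Submit_Host` and `PBS_O_HOST`.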