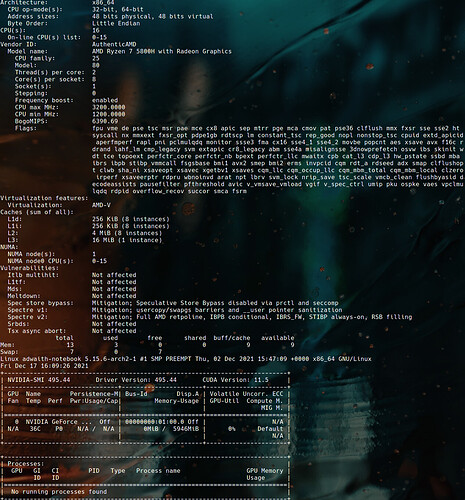

I’ve been using cryoSPARC on Arch Linux for a while and never had this issue until installing it on a new box (running Manjaro, which is Arch-based) today. I realise this topic is a few weeks old now, but as I stumbled onto this issue while installing cryoSPARC and have a workaround, I thought I’d sign up and post it for future reference.

In my limited testing so far, this does not appear to break anything in cryoSPARC, although I have not yet tested exhaustively…

The Python traceback points at PyCUDA, but the underlying cause appears to be that the latest CUDA available on Arch expects a newer libstdc++ than the one bundled in the Anaconda install for cryoSPARC.
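If you want to check the mismatch before changing anything, one rough way is to compare the highest GLIBCXX symbol version each library advertises. This is only a sketch: the function name `max_glibcxx` is made up for illustration, and it assumes GNU `grep` and GNU `sort` (for `-V`) are available.

```shell
#!/usr/bin/env bash
# Print the highest GLIBCXX_x.y.z version string found inside a libstdc++ file.
# max_glibcxx is a hypothetical helper name, not part of cryoSPARC or CUDA.
max_glibcxx() {
    # -a: treat the binary as text, -o: print only the matching part
    grep -ao 'GLIBCXX_[0-9][0-9.]*' "$1" | sort -uV | tail -n 1
}

# Example usage (adjust the paths and version numbers to your install):
# max_glibcxx "$cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/libstdc++.so.6.0.28"
# max_glibcxx /usr/lib64/libstdc++.so.6.0.29
```

If the system library reports a higher version than the bundled one, that is consistent with the explanation above, though it does not prove it is the only cause.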

The workaround was fairly simple. I do not know if it will “stick” between cryoSPARC updates, but I have just quickly run through import, patch motion correction, CTF estimation, picking and 2D classification of a small in-house dataset to check it wasn’t going to immediately panic. I’ll be setting up a longer run overnight.

Anyway.

Run the cryoSPARC install until the error occurs during worker connection. Then open a new terminal and navigate to:

$cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib

where $cryosparc_worker is wherever you installed the cryoSPARC worker. Then run:

mv libstdc++.so.6.0.28 libstdc++.so.6.0.28.backup

ln -s /usr/lib64/libstdc++.so.6.0.29 libstdc++.so.6.0.28
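The two commands above can also be wrapped in a small function that refuses to run if the system library is missing and never overwrites an existing backup. This is a sketch under my own assumptions: `relink_libstdcxx` and its three arguments are hypothetical names, and the file names in the usage comment are simply the ones from this post and may differ on your system.

```shell
#!/usr/bin/env bash
# relink_libstdcxx ENV_LIB_DIR BUNDLED_NAME SYSTEM_LIB
# Back up the bundled libstdc++ and point its name at the system copy.
# Hypothetical helper, not part of cryoSPARC.
relink_libstdcxx() {
    local env_lib="$1" bundled="$2" system_lib="$3"
    local target="$env_lib/$bundled"

    # Do nothing if the system library is not where we expect it.
    if [ ! -e "$system_lib" ]; then
        echo "system library not found: $system_lib" >&2
        return 1
    fi

    # Back up only a real file (not an earlier symlink), and keep any old backup.
    if [ -f "$target" ] && [ ! -L "$target" ]; then
        if [ -e "$target.backup" ]; then
            echo "backup already exists: $target.backup" >&2
            return 1
        fi
        mv "$target" "$target.backup"
    fi

    # -f replaces an existing symlink, -n avoids following it.
    ln -sfn "$system_lib" "$target"
}

# Example usage with the names from this post:
# relink_libstdcxx "$cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib" \
#     libstdc++.so.6.0.28 /usr/lib64/libstdc++.so.6.0.29
```

To undo it, remove the symlink and move the `.backup` file back to its original name.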

Open another terminal, navigate to:

$cryosparc_worker

And run:

./bin/cryosparcw gpulist

This should output a list of GPUs; if the libstdc++ symlink is bad (or missing) it will throw the same PyCUDA error. After confirming it’s OK, run:

./bin/cryosparcw connect --worker [workerHostname] --master [masterHostname] --[anyOtherFlagsYouNeed]

Check the cryoSPARC install page for what other flags can be used. This should output the final configuration table, listing hostname, GPUs, resource slots, etc., and cryoSPARC should run jobs successfully.

I’m not a fan of playing with symlinking of system libraries (at least at the system level) but as the cryoSPARC Anaconda environment is self-contained (and doesn’t get called except by cryoSPARC) it shouldn’t affect anything else on the system. It will probably break if you get a system libstdc++ update, in which case the symlink will need reapplying against the new library version. If a system libstdc++ update does break it, it will probably manifest as cryoSPARC refusing to get past the “License is valid.” notification at the start of any run.
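If jobs start hanging after a system update, a quick first check is whether the symlink still resolves. A minimal sketch, again with a made-up function name (`libstdcxx_link_status`) and the file name from this post as an assumption:

```shell
#!/usr/bin/env bash
# Report whether a symlink still points at an existing file.
# Returns 0 if it resolves, 1 if it is dangling, 2 if the path is not a symlink.
# libstdcxx_link_status is a hypothetical helper name.
libstdcxx_link_status() {
    local link="$1"
    if [ ! -L "$link" ]; then
        echo "not a symlink: $link"
        return 2
    elif [ -e "$link" ]; then
        # -e follows the link, so this branch means the target exists.
        echo "ok: $link -> $(readlink "$link")"
    else
        echo "dangling: $link -> $(readlink "$link")"
        return 1
    fi
}

# Example usage:
# libstdcxx_link_status "$cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/libstdc++.so.6.0.28"
# ls /usr/lib64/libstdc++.so.6.0.*   # shows which version the system currently ships
```

A "dangling" result would suggest the system library was renamed by an update and the symlink needs recreating against the new file.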