Dear CS team/users,

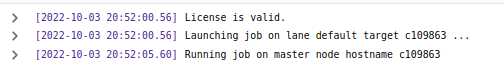

We just updated to the latest 4.0 version. Everything seems to go well and fantastic user interface! However, all the jobs are queued and launched but not really running. It was stuck at this step:

Could you let me know how to resolve this issue? Please let me know if there is any additional information that I can provide for debugging. Thanks!

Best regards,

Wei

Hi Wei,

I had the same issue and realized that the worker didn’t update properly - maybe worth checking that? You can try the update again with the --override flag to force an update of the worker (or do it manually in the cryosparc worker directory with bin/cryosparcw update, provided the updated worker archive has been downloaded).

Cheers

Oli

Dear Oli,

Thanks for your reply! I had actually updated the worker manually, and I just tried the --override option to update it again. Unfortunately, the result is still the same. I don’t know whether this could be related to the Linux version; mine is running CentOS 7. Thanks!

Best,

Wei

Hey @wxh180, thanks for reporting this. Can you send us the job error report for this job? For more information on how to do this, see Guide: Download Error Reports - CryoSPARC Guide

@stephan this is another great feature. Here are the job logs. Let me know if any additional information is needed. Thanks!

Hi Wei and Stephan,

Just piggy-backing to say that I’m getting the same errors in the job log (also using CentOS 7). I haven’t tried running a job yet, but I got this error when I ran ‘cryosparcm test workers’ after installation. Here’s the test output:

[wucci@wucci-014 FF14_TitanF4_Test]$ cryosparcm test workers P15

Using project P15

Running worker tests…

2022-10-04 10:00:56,717 WORKER_TEST log CRITICAL | Worker test results

2022-10-04 10:00:56,717 WORKER_TEST log CRITICAL | wucci-014.wucon.wustl.edu

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL | ✕ LAUNCH

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL | Error:

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL | See P15 J72 for more information

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL |  SSD

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL | Did not run: Launch test failed

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL |  GPU

2022-10-04 10:00:56,718 WORKER_TEST log CRITICAL | Did not run: Launch test failed

Hope this helps with troubleshooting.
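For longer test outputs, a small filter can pull out just the failing checks. This is only a sketch: the function name `failed_worker_tests` is made up for illustration, and it assumes failed checks are marked with `✕` after the `| ` separator, as in the output above.

```shell
# Print the names of worker tests marked as failed (✕) in
# `cryosparcm test workers` output read from standard input.
# Assumption: each result line ends with "| ✕ NAME" as in the log above.
failed_worker_tests() {
    sed -n 's/^.*| ✕ //p'
}
```

It could be used as `cryosparcm test workers P15 2>&1 | failed_worker_tests`. Checks that did not run because an earlier one failed are not listed.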

Hi @wxh180,

Thanks for sending that over. Can you ensure the CRYOSPARC_LICENSE_ID field inside cryosparc_worker/config.sh matches the CRYOSPARC_LICENSE_ID inside cryosparc_master/config.sh?

Can you send the error report for this job? Also, can you ensure the CRYOSPARC_LICENSE_ID field inside cryosparc_worker/config.sh matches the CRYOSPARC_LICENSE_ID inside cryosparc_master/config.sh?
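One way to compare the two values without pasting them anywhere is a short script like the one below. It is a sketch under assumptions: the helper names `license_id_of` and `check_license_ids` are invented here, and it assumes each config.sh sets the ID on a single line of the form `export CRYOSPARC_LICENSE_ID="..."` and that both packages sit under one install directory.

```shell
# Print the value assigned to CRYOSPARC_LICENSE_ID in a config.sh file.
# Assumption: the assignment is on one line, optionally prefixed by "export".
license_id_of() {
    sed -nE 's/^[[:space:]]*(export[[:space:]]+)?CRYOSPARC_LICENSE_ID=//p' "$1" \
        | tail -n 1 | tr -d "\"'"
}

# Compare the master and worker license IDs under one install root.
# Exit status: 0 = match, 1 = differ, 2 = could not read one of them.
# The IDs themselves are never printed.
check_license_ids() (
    root="$1"
    master="$(license_id_of "$root/cryosparc_master/config.sh")"
    worker="$(license_id_of "$root/cryosparc_worker/config.sh")"
    if [ -z "$master" ] || [ -z "$worker" ]; then
        echo "could not read CRYOSPARC_LICENSE_ID from one of the config.sh files" >&2
        exit 2
    fi
    if [ "$master" = "$worker" ]; then
        echo "license IDs match"
    else
        echo "license IDs differ" >&2
        exit 1
    fi
)
```

For the install shown in this thread, that would be `check_license_ids /home/wucci/cryosparc`.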

Hi @stephan,

The license ID matches in each config.sh file for worker and master. Here’s the error report. Hope this helps. Thanks!

================= CRYOSPARCW ======= 2022-10-04 10:00:46.197276 =========

Project P15 Job J72

Master wucci-014.wucon.wustl.edu Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 261037

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "cryosparc_worker/cryosparc_compute/run.py", line 162, in cryosparc_compute.run.run

File "/home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 97, in connect

assert cli.test_connection(), "Job could not connect to master instance at %s:%s" % (master_hostname, str(master_command_core_port))

File "/home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/client.py", line 65, in func

assert 'error' not in res, f"Encountered error for method \"{key}\" with params {params}:\n{res['error']['message'] if 'message' in res['error'] else res['error']}"

AssertionError: Encountered error for method "test_connection" with params ():

ServerError: Authentication failed

Process Process-1:

Traceback (most recent call last):

File "/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 297, in _bootstrap

self.run()

File "/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 99, in run

self._target(*self._args, **self._kwargs)

File "cryosparc_worker/cryosparc_compute/run.py", line 31, in cryosparc_compute.run.main

File "/home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 97, in connect

assert cli.test_connection(), "Job could not connect to master instance at %s:%s" % (master_hostname, str(master_command_core_port))

File "/home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/client.py", line 65, in func

assert 'error' not in res, f"Encountered error for method \"{key}\" with params {params}:\n{res['error']['message'] if 'message' in res['error'] else res['error']}"

AssertionError: Encountered error for method "test_connection" with params ():

ServerError: Authentication failed

Hi @kbasore,

Can you confirm your worker updated correctly? What is inside the file cryosparc_worker/version?

If it’s not v4.0.0, can you do the following:

Inside cryosparc_master, you’ll find a binary called cryosparc_worker.tar.gz.

Copy this into the cryosparc_worker directory.

Once that’s done, inside the cryosparc_worker directory, run ./bin/cryosparcw update
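The steps above can be sketched as a small shell function. This is only an illustration: the function name `update_worker_if_stale` is made up, and it assumes cryosparc_master and cryosparc_worker sit side by side under one install directory, as they do in the paths in this thread.

```shell
# Refresh the worker from the archive shipped with the master, but only
# when cryosparc_worker/version does not already match the expected version.
# $1: install root containing cryosparc_master and cryosparc_worker (assumed layout)
# $2: expected version string, e.g. v4.0.0
update_worker_if_stale() (
    install_root="$1"
    expected="$2"
    worker="$install_root/cryosparc_worker"
    archive="$install_root/cryosparc_master/cryosparc_worker.tar.gz"

    current="$(cat "$worker/version" 2>/dev/null)" || current="unknown"
    if [ "$current" = "$expected" ]; then
        echo "worker already at $expected"
        exit 0
    fi

    echo "worker is at $current, expected $expected"
    if [ ! -f "$archive" ]; then
        echo "missing $archive" >&2
        exit 1
    fi

    # Copy the archive into the worker directory, then run the update there.
    cp "$archive" "$worker/" || exit 1
    cd "$worker" && ./bin/cryosparcw update
)
```

For example: `update_worker_if_stale /home/wucci/cryosparc v4.0.0`.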

@stephan Yes, this fixes the problem. Thanks!

Hi @stephan,

Looks like I had v3.3.2. I copied the cryosparc_worker.tar.gz and ran the cryosparcw update. The launch test now passes, but I’m getting a GPU error:

[wucci@wucci-014 FF14_TitanF4_Test]$ cryosparcm test workers P15

Using project P15

Running worker tests...

2022-10-04 10:33:50,291 WORKER_TEST log CRITICAL | Worker test results

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | wucci-014.wucon.wustl.edu

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | ✓ LAUNCH

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | ✓ SSD

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | ✕ GPU

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | Error: Tensorflow detected 0 of 4 GPUs.

2022-10-04 10:33:50,292 WORKER_TEST log CRITICAL | See P15 J75 for more information

P15/J75/job.log:

================= CRYOSPARCW ======= 2022-10-04 10:33:00.428998 =========

Project P15 Job J75

Master wucci-014.wucon.wustl.edu Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 267316

MAIN PID 267316

instance_testing.run cryosparc_compute.jobs.jobregister

========= monitor process now waiting for main process

2022-10-04 10:33:07.883113: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/blobio:/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib:/home/wucci/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/cuda/lib64:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib

2022-10-04 10:33:07.883231: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

2022-10-04 10:33:09.185174: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set

2022-10-04 10:33:09.185301: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcuda.so.1

2022-10-04 10:33:09.186850: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 0 with properties:

pciBusID: 0000:18:00.0 name: NVIDIA GeForce RTX 2080 Ti computeCapability: 7.5

coreClock: 1.545GHz coreCount: 68 deviceMemorySize: 10.76GiB deviceMemoryBandwidth: 573.69GiB/s

2022-10-04 10:33:09.188052: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 1 with properties:

pciBusID: 0000:3b:00.0 name: NVIDIA GeForce RTX 2080 Ti computeCapability: 7.5

coreClock: 1.545GHz coreCount: 68 deviceMemorySize: 10.76GiB deviceMemoryBandwidth: 573.69GiB/s

2022-10-04 10:33:09.189212: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 2 with properties:

pciBusID: 0000:86:00.0 name: NVIDIA GeForce RTX 2080 Ti computeCapability: 7.5

coreClock: 1.545GHz coreCount: 68 deviceMemorySize: 10.76GiB deviceMemoryBandwidth: 573.69GiB/s

2022-10-04 10:33:09.190381: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1720] Found device 3 with properties:

pciBusID: 0000:af:00.0 name: NVIDIA GeForce RTX 2080 Ti computeCapability: 7.5

coreClock: 1.545GHz coreCount: 68 deviceMemorySize: 10.76GiB deviceMemoryBandwidth: 573.69GiB/s

2022-10-04 10:33:09.190627: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/blobio:/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib:/home/wucci/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/cuda/lib64:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib

2022-10-04 10:33:09.190780: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcublas.so.11'; dlerror: libcublas.so.11: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/blobio:/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib:/home/wucci/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/cuda/lib64:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib

2022-10-04 10:33:09.190915: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcublasLt.so.11'; dlerror: libcublasLt.so.11: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/blobio:/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib:/home/wucci/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/cuda/lib64:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib

2022-10-04 10:33:09.190959: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcufft.so.10

2022-10-04 10:33:09.191007: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcurand.so.10

2022-10-04 10:33:09.232231: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcusolver.so.10

2022-10-04 10:33:09.232696: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcusparse.so.11'; dlerror: libcusparse.so.11: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/wucci/cryosparc/cryosparc_worker/cryosparc_compute/blobio:/home/wucci/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib:/home/wucci/cryosparc/cryosparc_worker/deps/external/cudnn/lib:/usr/local/cuda/lib64:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib:/home/wucci/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib

2022-10-04 10:33:09.234134: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudnn.so.8

2022-10-04 10:33:09.234208: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1757] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.

Skipping registering GPU devices...

***************************************************************

**** handle exception rc

set status to failed

========= main process now complete.

========= monitor process now complete.

I’m running CUDA 10.2, and I know that version 11 is needed for the Deep Picker. Maybe that’s why I’m getting this error? Let me try running a job (not Deep Picker) that uses GPUs and I’ll get back to you in a minute. Thank you so much for your help!

Job has launched, started, and is running just fine! Thanks again, @stephan!

Hi @kbasore,

Glad to see it’s running properly. Did you mean the Deep Picker jobs are running fine? It would be odd for Tensorflow to fail in the worker test but not in the Deep Picker job itself.

Hi @stephan,

I ran a NU refinement job.

Okay, that makes sense then. Yes, based on this error, you’ll most likely need to install CUDA 11 to get the Deep Picker jobs to work.
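To see which of the CUDA 11 libraries from the job log are actually visible, something like the following could help. It is a rough sketch: the function name `missing_cuda_libs` is invented, the library list is copied from the "Could not load dynamic library" warnings in the log above, and it only looks in the directories of a colon-separated search path (it does not consult the system linker cache).

```shell
# Library names taken from the "Could not load dynamic library" warnings above.
CUDA11_LIBS="libcudart.so.11.0 libcublas.so.11 libcublasLt.so.11 libcusparse.so.11"

# Print each library from CUDA11_LIBS that is not found in any directory
# of the given search path.
# $1: colon-separated directories (defaults to the current LD_LIBRARY_PATH)
missing_cuda_libs() (
    search_path="${1-${LD_LIBRARY_PATH-}}"
    for lib in $CUDA11_LIBS; do
        found=no
        IFS=':'   # split the search path on colons (local to this subshell)
        for dir in $search_path; do
            if [ -n "$dir" ] && [ -e "$dir/$lib" ]; then
                found=yes
                break
            fi
        done
        if [ "$found" = no ]; then
            echo "$lib"
        fi
    done
)
```

Since the job log shows the worker running with its own LD_LIBRARY_PATH, passing that path explicitly, for example `missing_cuda_libs "/usr/local/cuda/lib64"`, is probably closer to what the job sees than the login shell's default.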

I also just had an issue after upgrading on CentOS 7: the master and worker had different LICENSE_ID values, and making them match fixed everything. Is this some sort of bug?

Hi @donaldb,

This isn’t related to CentOS7. It’s also not a bug: the master and worker packages are expected to have the same License ID. This is now enforced in v4, as the authentication layer relies on this.

You can read more about it here: CryoSPARC Architecture and System Requirements - CryoSPARC Guide

Thanks for that. Could there be a way to add a warning output when they don’t match for easier troubleshooting?

Hi @donaldb,

Thanks for the suggestion, we’ll add this in our next release.