I am trying to run a job on another worker node. I ran the following ./bin/cryosparcw connect command, and this is its output:

./bin/cryosparcw connect --worker yu-desktop --master 13900k.lan --port 61000 --ssdpath /home/yu/cryosparc/ssd_cache --update --sshstr yu@192.168.0.165

---------------------------------------------------------------

CRYOSPARC CONNECT --------------------------------------------

---------------------------------------------------------------

Attempting to register worker yu-desktop to command 13900k.lan:61002

Connecting as unix user yu

Will register using ssh string: yu@192.168.0.165

If this is incorrect, you should re-run this command with the flag --sshstr <ssh string>

---------------------------------------------------------------

Connected to master.

---------------------------------------------------------------

Current connected workers:

13900k.lan

yu-desktop

---------------------------------------------------------------

Worker will be registered with 16 CPUs.

---------------------------------------------------------------

Updating target yu-desktop

Current configuration:

cache_path : /home/yu/cryosparc/ssd_cache

cache_quota_mb : None

cache_reserve_mb : 10000

desc : None

gpus : [{'id': 0, 'mem': 8325693440, 'name': 'NVIDIA GeForce RTX 3060 Ti'}]

hostname : yu-desktop

lane : default

monitor_port : None

name : yu-desktop

resource_fixed : {'SSD': True}

resource_slots : {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15], 'GPU': [0], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7]}

ssh_str : yu@yu-desktop

title : Worker node yu-desktop

type : node

worker_bin_path : /home/yu/cryosparc/cryosparc_worker/bin/cryosparcw

---------------------------------------------------------------

SSH connection string will be updated to yu@192.168.0.165

SSD will be enabled.

Worker will be registered with SSD cache location /home/yu/cryosparc/ssd_cache

SSD path will be updated to /home/yu/cryosparc/ssd_cache

---------------------------------------------------------------

Updating..

Done.

---------------------------------------------------------------

Final configuration for yu-desktop

cache_path : /home/yu/cryosparc/ssd_cache

cache_quota_mb : None

cache_reserve_mb : 10000

desc : None

gpus : [{'id': 0, 'mem': 8325693440, 'name': 'NVIDIA GeForce RTX 3060 Ti'}]

hostname : yu-desktop

lane : default

monitor_port : None

name : yu-desktop

resource_fixed : {'SSD': True}

resource_slots : {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15], 'GPU': [0], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7]}

ssh_str : yu@192.168.0.165

title : Worker node yu-desktop

type : node

worker_bin_path : /home/yu/cryosparc/cryosparc_worker/bin/cryosparcw

---------------------------------------------------------------

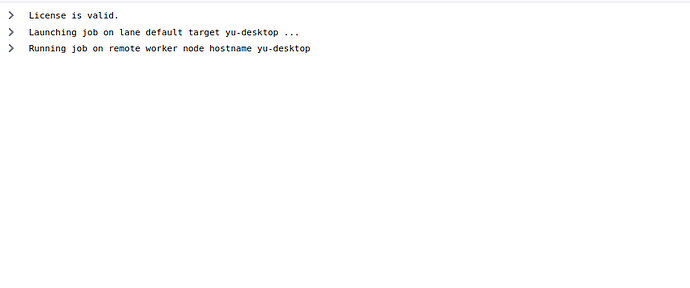

When I tried to run the job, it stayed stuck at ‘Running job on remote worker node hostname yu-desktop’ and never progressed.