Hi,

I’ve noticed an occasional weird bug (?), or at least unexpected behavior, when performing heterogeneous refinement with non-identical references.

Let’s say I have one good reference, and a bunch of bad ones, either random density, or generated from bad/contaminant 2D classes.

Sometimes, with default parameters, zero particles are assigned to the “good” class after iteration 0, leading to a 100 Å garbage volume in place of my nice ab initio volume.

This sometimes somehow corrects itself by iteration 20, but often it does not. If I set “random initial assignment orientations for identical references” to 0, this behaviour goes away completely.

According to this post, this parameter shouldn’t affect anything if no reference pairs are identical, but it definitely seems to make a difference. Is this a bug?

Cheers

Oli


Hey @olibclarke – investigating this! Do you mind sharing the parameters (any non-default?) of the hetero refine job where this happened?

One lead: if all the initial volumes are distinct, the “Number of initial random assignment iterations” parameter controls the number of initial iterations during which we perform ‘hard’ classification (without randomizing). Perhaps this hard classification is what’s causing this behaviour initially.
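To make the ‘hard’ classification idea concrete, here is a toy NumPy sketch contrasting a winner-take-all assignment with a randomized one. It is not cryoSPARC’s implementation: the scores are synthetic, the function names are hypothetical, and the -3 offset on class 0 is an assumption standing in for a good reference that happens to match poorly in early iterations.

```python
import numpy as np


def hard_assign(scores: np.ndarray) -> np.ndarray:
    """Winner-take-all: each particle goes only to its best-scoring class."""
    return np.argmax(scores, axis=1)


def random_assign(n_particles: int, n_classes: int, rng: np.random.Generator) -> np.ndarray:
    """Ignore the scores and draw each particle's class uniformly at random."""
    return rng.integers(0, n_classes, size=n_particles)


def class_fractions(labels: np.ndarray, n_classes: int) -> np.ndarray:
    """Fraction of particles assigned to each class."""
    return np.bincount(labels, minlength=n_classes) / labels.size


rng = np.random.default_rng(seed=0)
n_particles, n_classes = 10_000, 4

# Synthetic per-particle, per-class scores (higher = better match).
# Assumption for illustration only: the "good" reference (class 0)
# scores systematically worse than the junk references early on.
scores = rng.normal(size=(n_particles, n_classes))
scores[:, 0] -= 3.0

hard_frac = class_fractions(hard_assign(scores), n_classes)
rand_frac = class_fractions(random_assign(n_particles, n_classes, rng), n_classes)

print("hard:  ", np.round(hard_frac, 3))
print("random:", np.round(rand_frac, 3))
```

Under these assumptions the hard assignment leaves class 0 with only a tiny fraction of the particles, while the randomized assignment gives every class roughly a quarter regardless of the scores, which is one way a single early hard pass could starve a class.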

Hi Valentin,

Sure, nothing unusual: the only non-default parameter was 5 final full iterations rather than the default of 2. There were 12 classes: 8 genuine species identified by ab initio, and 4 random-density volumes.

One thing I have noticed is that the initial class distribution is kind of weird when using the defaults:

-- Class 0: 2.00%
-- Class 1: 2.06%
-- Class 2: 16.17%
-- Class 3: 28.55%
-- Class 4: 2.92%
-- Class 5: 2.97%
-- Class 6: 13.15%
-- Class 7: 5.62%
-- Class 8: 17.77%
-- Class 9: 1.14%
-- Class 10: 7.43%
-- Class 11: 0.23%

This is weird because class 0 is the “good” class, and the one with the largest population at the end of classification. When I do zero initial random assignment iterations, this is what I get:

-- Class 0: 7.97%
-- Class 1: 8.01%
-- Class 2: 9.82%
-- Class 3: 10.33%
-- Class 4: 8.12%
-- Class 5: 7.57%
-- Class 6: 7.91%
-- Class 7: 8.47%
-- Class 8: 9.41%
-- Class 9: 7.45%
-- Class 10: 8.70%
-- Class 11: 6.24%
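For a quick quantitative comparison of the two logs, the snippet below measures how far each initial distribution sits from an even 1/12 split. It is plain arithmetic on the percentages quoted above; the even split is only a reference point, not a claim about what hetero refine should produce, and the helper name is hypothetical.

```python
# Initial class distributions (%) as quoted in the two hetero refine logs above.
defaults = [2.00, 2.06, 16.17, 28.55, 2.92, 2.97, 13.15, 5.62, 17.77, 1.14, 7.43, 0.23]
zero_random_iters = [7.97, 8.01, 9.82, 10.33, 8.12, 7.57, 7.91, 8.47, 9.41, 7.45, 8.70, 6.24]


def max_deviation_from_uniform(pct: list[float]) -> float:
    """Largest gap (in percentage points) between any class and an even split."""
    uniform = 100.0 / len(pct)  # 8.33% for 12 classes
    return max(abs(p - uniform) for p in pct)


for name, pct in [("defaults", defaults), ("0 random-assignment iterations", zero_random_iters)]:
    print(
        f"{name}: class 0 = {pct[0]:.2f}%, "
        f"range {min(pct):.2f}-{max(pct):.2f}%, "
        f"max deviation from uniform = {max_deviation_from_uniform(pct):.2f} points"
    )
```

The default run spans 0.23% to 28.55%, while the run with zero random-assignment iterations stays within about 2 percentage points of an even split.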

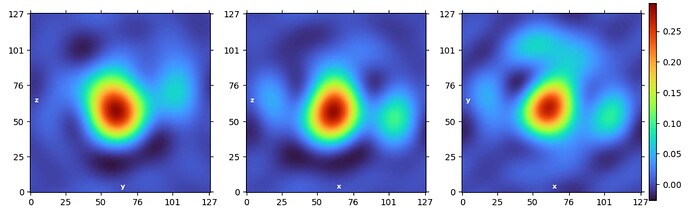

So my current theory is that with the defaults, the class assignment is a bit unstable, and sometimes we end up with ~zero particles being assigned to the “good” class, resulting in an iteration 0 volume that looks like this:

Hey @olibclarke – a quick note on this older thread: this should be fixed in v4.4. Let us know if you see this behaviour again! Thanks, and happy holidays.
