Hi all,

We just upgraded our CS from 4.3 to 4.4.

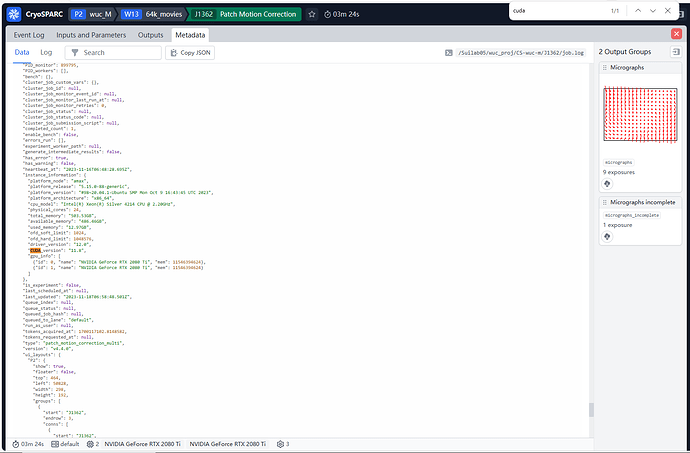

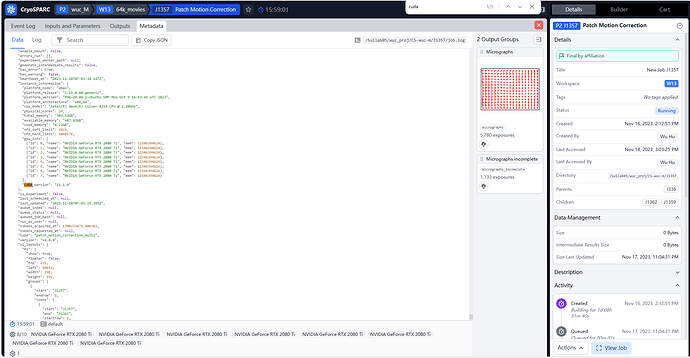

I tried to run Extensive validation, but already the 3rd job (Patch Motion Correction) fails with:

RuntimeError: Could not allocate GPU array: CUDA_ERROR_OUT_OF_MEMORY

I cloned this job, selected ‘Low-memory mode’, and got the same result. During job execution I can see GPU RAM usage rise above 99%.
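For anyone who wants to reproduce the GPU RAM observation, here is a minimal sketch of how per-GPU memory usage could be polled while the job runs. It assumes `nvidia-smi` is on the `PATH`. The helper names `parse_memory_csv` and `poll_gpu_memory` are hypothetical and not part of cryoSPARC.

```python
import subprocess
import time

# nvidia-smi query that prints one CSV line per GPU: index, used MiB, total MiB
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(text):
    """Parse 'index, used, total' CSV lines (values in MiB) into dicts."""
    rows = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        index, used, total = (int(field.strip()) for field in line.split(","))
        rows.append({
            "gpu": index,
            "used_mib": used,
            "total_mib": total,
            "percent": 100.0 * used / total,
        })
    return rows


def poll_gpu_memory(interval_s=1.0, samples=10):
    """Print memory usage of every GPU `samples` times, `interval_s` apart."""
    for _ in range(samples):
        out = subprocess.run(
            QUERY, capture_output=True, text=True, check=True
        ).stdout
        for row in parse_memory_csv(out):
            print(
                f"GPU {row['gpu']}: {row['used_mib']} / {row['total_mib']} MiB "
                f"({row['percent']:.1f}%)"
            )
        time.sleep(interval_s)
```

Calling `poll_gpu_memory()` in a second terminal while the job runs should show which GPU fills up and how quickly.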

This was not happening before the update.

Basic info:

- single workstation
- Current cryoSPARC version: v4.4.0
- uname -a && free -g

Linux thorin-d11 4.15.0-128-generic #131-Ubuntu SMP Wed Dec 9 06:57:35 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

total used free shared buff/cache available

Mem: 187 5 115 1 66 175

Swap: 0 0 0

nvidia-smi

Fri Nov 10 17:04:48 2023

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 530.41.03 Driver Version: 530.41.03 CUDA Version: 12.1 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA GeForce GTX 1080 Off| 00000000:18:00.0 Off | N/A |

| 33% 36C P8 5W / 200W| 0MiB / 8192MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA GeForce GTX 1080 Off| 00000000:3B:00.0 Off | N/A |

| 33% 30C P8 10W / 200W| 0MiB / 8192MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 2 NVIDIA GeForce GTX 1080 Off| 00000000:86:00.0 Off | N/A |

| 33% 27C P8 6W / 200W| 0MiB / 8192MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

| 3 NVIDIA GeForce GTX 1080 Off| 00000000:AF:00.0 Off | N/A |

| 33% 28C P8 9W / 200W| 0MiB / 8192MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+

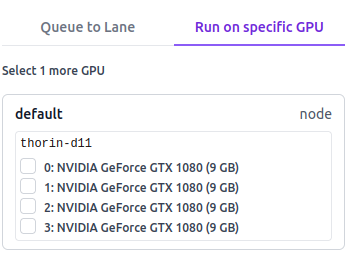

- no other tasks were running on the GPUs at the time

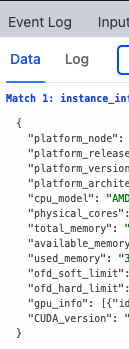

One thing that may be relevant, although I am not sure how it looked before the update: CS reports the correct GPU model, but the GPU RAM is rounded up. See the screenshot:

The nvidia-smi output above shows that each card has 8192 MiB of RAM.
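One possible reading of the apparent rounding, offered only as an assumption since how CS computes the displayed figure has not been checked here: the 8192 reported by nvidia-smi is in MiB, which is exactly 8 GiB but about 8.59 GB in decimal units. The short sketch below just works through that arithmetic. The function names are illustrative.

```python
MIB = 1024 ** 2  # bytes in one mebibyte


def mib_to_gib(mib):
    """Convert MiB to binary gigabytes (GiB, 1024**3 bytes)."""
    return mib * MIB / 1024 ** 3


def mib_to_gb(mib):
    """Convert MiB to decimal gigabytes (GB, 1000**3 bytes)."""
    return mib * MIB / 1000 ** 3


print(mib_to_gib(8192))           # 8.0
print(round(mib_to_gb(8192), 2))  # 8.59
```

If the value in the screenshot is close to 8.59, the difference would be a matter of units and not an actual change in detected memory.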

Both the workstation and cryoSPARC have been restarted since the update.

Note the order of events, though: I first updated CS to 4.4, which reported the error about the unsupported version. I then updated the Nvidia drivers, restarted the workstation, and ended up with the error I am reporting here.

Any ideas?

Thanks!