Hi @nfrasser,

It was indeed a problem with the submission file. Using the script below, I can now run the job, but I’ve hit another problem: a connection error. I suspect the worker can’t connect to the master, even though I have a ~/.ssh/config file in my home directory on the cluster. The base port is 36000, and the worker tries to reach the master on port 36002. Do you know how to solve this? Thank you.

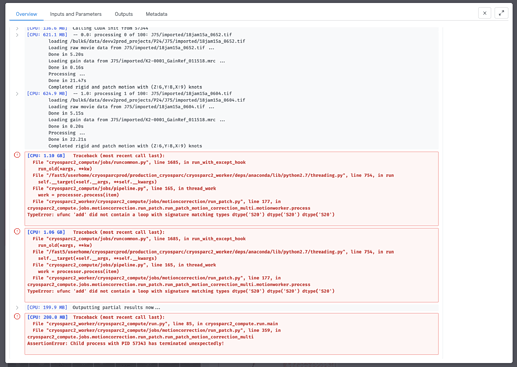

Here’s the job.log:

```
================= CRYOSPARCW ======= 2020-05-12 17:46:02.451091 =========
Project P1 Job J2
Master graham.computecanada.ca Port 36002
===========================================================================
========= monitor process now starting main process
MAINPROCESS PID 6211
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 1 of 3
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 2 of 3
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 3 of 3
Traceback (most recent call last):
  File "<string>", line 1, in <module>
Process Process-1:
Traceback (most recent call last):
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/multiprocessing/process.py", line 267, in _bootstrap
    self.run()
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/multiprocessing/process.py", line 114, in run
    self._target(*self._args, **self._kwargs)
  File "cryosparc2_worker/cryosparc2_compute/run.py", line 31, in cryosparc2_compute.run.main
  File "cryosparc2_worker/cryosparc2_compute/run.py", line 158, in cryosparc2_compute.run.run
  File "cryosparc2_compute/jobs/runcommon.py", line 89, in connect
  File "cryosparc2_compute/jobs/runcommon.py", line 89, in connect
    cli = client.CommandClient(master_hostname, int(master_command_core_port))
  File "cryosparc2_compute/client.py", line 33, in __init__
    self._reload()
  File "cryosparc2_compute/client.py", line 61, in _reload
    system = self._get_callable('system.describe')()
  File "cryosparc2_compute/client.py", line 49, in func
    r = requests.post(self.url, data = json.dumps(data, cls=NumpyEncoder), headers = header, timeout=self.timeout)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/api.py", line 116, in post
    cli = client.CommandClient(master_hostname, int(master_command_core_port))
  File "cryosparc2_compute/client.py", line 33, in __init__
    self._reload()
  File "cryosparc2_compute/client.py", line 61, in _reload
    system = self._get_callable('system.describe')()
  File "cryosparc2_compute/client.py", line 49, in func
    r = requests.post(self.url, data = json.dumps(data, cls=NumpyEncoder), headers = header, timeout=self.timeout)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/api.py", line 116, in post
    return request('post', url, data=data, json=json, **kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/api.py", line 60, in request
    return request('post', url, data=data, json=json, **kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/api.py", line 60, in request
    return session.request(method=method, url=url, **kwargs)
    return session.request(method=method, url=url, **kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/sessions.py", line 533, in request
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/sessions.py", line 533, in request
    resp = self.send(prep, **send_kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/sessions.py", line 646, in send
    resp = self.send(prep, **send_kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/sessions.py", line 646, in send
    r = adapter.send(request, **kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/adapters.py", line 516, in send
    r = adapter.send(request, **kwargs)
  File "/home/user/cryosparc/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/requests/adapters.py", line 516, in send
    raise ConnectionError(e, request=request)
requests.exceptions.ConnectionError: HTTPConnectionPool(host='graham.computecanada.ca', port=36002): Max retries exceeded with url: /api (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x2aeaa7a94150>: Failed to establish a new connection: [Errno 110] Connection timed out',))
    raise ConnectionError(e, request=request)
ConnectionError: HTTPConnectionPool(host='graham.computecanada.ca', port=36002): Max retries exceeded with url: /api (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x2aeaa7a95190>: Failed to establish a new connection: [Errno 110] Connection timed out',))
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 1 of 3
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 2 of 3
*** client.py: command (http://graham.computecanada.ca:36002/api) did not reply within timeout of 300 seconds, attempt 3 of 3
```

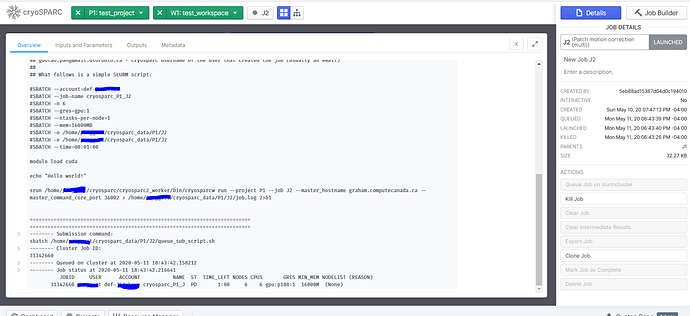
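The traceback shows the worker making a plain HTTP request (`requests.post`) to `graham.computecanada.ca:36002`, which does not go through SSH, so `~/.ssh/config` would not affect it. A minimal sketch for checking, from inside a job on a compute node, whether that port is reachable at all; it assumes Python 2.7 or 3 is available on the node, and `can_connect` / `check_port.py` are hypothetical names, not part of cryoSPARC:

```python
import socket
import sys


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    This is the same kind of connection the worker needs for the master's
    command port; it is a plain TCP connection, not an SSH session.
    """
    try:
        conn = socket.create_connection((host, port), timeout=timeout)
    except socket.error as exc:
        # A timeout (like the log's Errno 110) usually means packets are being
        # dropped, e.g. by a firewall; "connection refused" usually means the
        # host answered but nothing is listening on that port.
        print("cannot reach %s:%d -> %s" % (host, port, exc))
        return False
    conn.close()
    return True


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python check_port.py graham.computecanada.ca 36002
    ok = can_connect(sys.argv[1], int(sys.argv[2]))
    print("reachable" if ok else "NOT reachable")
    sys.exit(0 if ok else 1)
```

Running it once on the login node where the master runs and once inside a Slurm job could show whether the block is specific to compute nodes.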

Here’s the submission file I’m using.

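```bash
#!/bin/bash
# Slurm resources: 1 GPU, 16000 MB of RAM, 10-minute limit, 4 CPU cores
#SBATCH --account=def-supervisor
#SBATCH --gres=gpu:1
#SBATCH --mem=16000M
#SBATCH --time=0-00:10
#SBATCH --cpus-per-task=4

# Run job J2 of project P1 on this node, reporting back to the master's
# command port (36002); all output goes to the job log.
/home/user/cryosparc/cryosparc2_worker/bin/cryosparcw run \
    --project P1 --job J2 \
    --master_hostname graham.computecanada.ca \
    --master_command_core_port 36002 \
    > /home/user/cryosparc_data/P1/J2/job.log 2>&1
```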
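The script passes `--master_hostname graham.computecanada.ca` to the worker. If that name happens to be an alias that resolves to several machines (an assumption worth checking, not something the log shows), the worker could be contacting a host where the master isn't running. A minimal sketch that lists every address a name resolves to from inside a job; `resolve_all` and `resolve_master.py` are hypothetical names:

```python
import socket
import sys


def resolve_all(host, port):
    """Return the sorted, de-duplicated IP addresses that `host` resolves to."""
    # getaddrinfo returns one (family, type, proto, canonname, sockaddr) tuple
    # per result; restricting to SOCK_STREAM avoids per-protocol duplicates.
    infos = socket.getaddrinfo(host, port, 0, socket.SOCK_STREAM)
    # sockaddr[0] is the IP string for both IPv4 and IPv6 entries.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (from inside a job): python resolve_master.py graham.computecanada.ca 36002
    addresses = resolve_all(sys.argv[1], int(sys.argv[2]))
    for addr in addresses:
        print(addr)
    if len(addresses) > 1:
        # Hypothesis to check, not a confirmed cause: several addresses means the
        # name may point at more than one machine, only one of which runs the master.
        print("note: %s resolves to %d addresses" % (sys.argv[1], len(addresses)))
```

Comparing this output with the address of the node where `cryosparcm` was started could show whether `--master_hostname` points at the right machine.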