Hi, I have another question about RAM for cryoSPARC. On the Queue page, it only shows 32 GB. I have much more than that. How do I increase the RAM? Thanks, Lan

Please can you post:

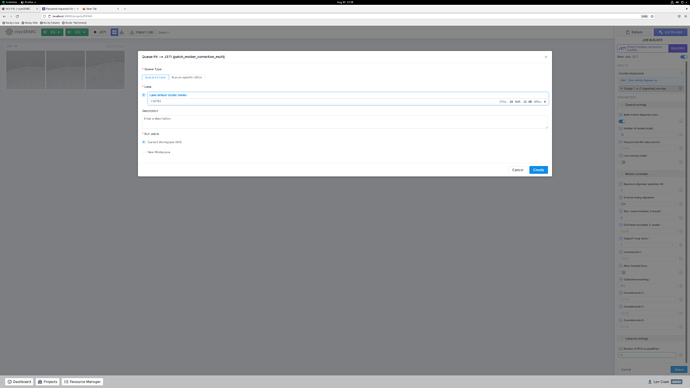

- a screenshot of the queue page

- the outputs of shell commands:

free -g

cryosparcm cli "get_scheduler_targets()"

Hi,

free -g

total used free shared buff/cache available

Mem: 376Gi 3.7Gi 369Gi 36Mi 2.9Gi 370Gi

Swap: 9Gi 0B 9Gi

[cryosparc_user@r16763 ~]$ free -g

total used free shared buff/cache available

Mem: 376 5 41 0 328 368

Swap: 9 0 9

cryosparcm cli "get_scheduler_targets()"

[cryosparc_user@r16763 ~]$ cryosparcm cli “get_schedular_targets()”

Traceback (most recent call last):

File “/home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.7/runpy.py”, line 193, in _run_module_as_main

“main”, mod_spec)

File “/home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.7/runpy.py”, line 85, in _run_code

exec(code, run_globals)

File “/home/cryosparc_user/software/cryosparc/cryosparc_master/cryosparc_compute/client.py”, line 92, in

print(eval(“cli.”+command))

File “”, line 1, in

AttributeError: ‘CommandClient’ object has no attribute ‘get_schedular_targets’

[cryosparc_user@r16763 ~]$ cryosparcm cli “get_scheduler_targets()”

[{‘cache_path’: ‘/scr/cryosparc_cache’, ‘cache_quota_mb’: None, ‘cache_reserve_mb’: 10000, ‘desc’: None, ‘gpus’: [{‘id’: 0, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 1, ‘mem’: 8366784512, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 2, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 3, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}], ‘hostname’: ‘r16763’, ‘lane’: ‘default’, ‘monitor_port’: None, ‘name’: ‘r16763’, ‘resource_fixed’: {‘SSD’: True}, ‘resource_slots’: {‘CPU’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19], ‘GPU’: [0, 1, 2, 3], ‘RAM’: [0, 1, 2, 3]}, ‘ssh_str’: ‘cryosparc_user@r16763’, ‘title’: ‘Worker node r16763’, ‘type’: ‘node’, ‘worker_bin_path’: ‘/home/cryosparc_user/software/cryosparc/cryosparc_worker/bin/cryosparcw’}]

The RAM number on the Queue page is only 32 GB, but I have much more than that.

The RAM specification is defined during cryoSPARC installation.

The RAM on your computer may have been upgraded after cryoSPARC installation, or the full RAM was not detected during installation.
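The 32 GB on the Queue page matches the four RAM slots (`'RAM': [0, 1, 2, 3]`) in the `get_scheduler_targets()` output above, provided each slot stands for 8 GB. That per-slot size is an assumption inferred from the numbers in this thread, not a documented value, and the function name `ram_slots_to_gb` is made up for illustration. A minimal sketch of the arithmetic:

```shell
#!/usr/bin/env bash
# Assumption: one RAM slot represents 8 GB (inferred from this thread only).
GB_PER_SLOT=8

# Convert a number of registered RAM slots into gigabytes.
ram_slots_to_gb() {
    local slots="$1"
    echo $(( slots * GB_PER_SLOT ))
}

# Four slots, as listed under 'RAM' in the scheduler target above.
ram_slots_to_gb 4
```

If the assumption holds, a worker registered with the full memory of this machine should list far more than four RAM slots.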

You may try the following (take care to type the quote characters exactly as shown, both double and single):

- “un-register” the cryoSPARC worker (docs):

cryosparcm cli "remove_scheduler_target_node('r16763')"

- re-connect the cryoSPARC worker (docs, note backslash after first line of two-line command):

/home/cryosparc_user/software/cryosparc/cryosparc_worker/bin/cryosparcw connect --worker r16763 \
--master 127.0.0.1 --ssdpath /scr/cryosparc_cache --port 39000

What was the output of the second command?

CRYOSPARC CONNECT --------------------------------------------

Attempting to register worker r16763 to command 127.0.0.1:39002

Connecting as unix user cryosparc_user

Will register using ssh string: cryosparc_user@r16763

If this is incorrect, you should re-run this command with the flag --sshstr

Connected to master.

Current connected workers:

Autodetecting available GPUs…

Detected 4 CUDA devices.

id pci-bus name

0 0000:18:00.0 NVIDIA GeForce RTX 3070

1 0000:3B:00.0 NVIDIA GeForce RTX 3070

2 0000:86:00.0 NVIDIA GeForce RTX 3070

3 0000:AF:00.0 NVIDIA GeForce RTX 3070

All devices will be enabled now.

This can be changed later using --update

Worker will be registered with SSD cache location /scr/cryosparc_cache

Autodetecting the amount of RAM available…

This machine has 385.19GB RAM .

Registering worker…

Done.

You can now launch jobs on the master node and they will be scheduled

on to this worker node if resource requirements are met.

Final configuration for r16763

cache_path : /scr/cryosparc_cache

cache_quota_mb : None

cache_reserve_mb : 10000

desc : None

gpus : [{‘id’: 0, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 1, ‘mem’: 8366784512, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 2, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}, {‘id’: 3, ‘mem’: 8369799168, ‘name’: ‘NVIDIA GeForce RTX 3070’}]

hostname : r16763

lane : default

monitor_port : None

name : r16763

resource_fixed : {‘SSD’: True}

resource_slots : {‘CPU’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39], ‘GPU’: [0, 1, 2, 3], ‘RAM’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47]}

ssh_str : cryosparc_user@r16763

title : Worker node r16763

type : node

worker_bin_path : /home/cryosparc_user/software/cryosparc/cryosparc_worker/bin/cryosparcw

spawn errors

I tried to start cryoSPARC:

[cryosparc_user@r16763 ~]$ cryosparcm start

Starting cryoSPARC System master process…

CryoSPARC is not already running.

database: ERROR (spawn error)

[cryosparc_user@r16763 ~]$

This can happen for various reasons. Does the database log indicate a specific error?

cryosparcm log database

Are there any “runaway” cryoSPARC processes running?

To find out, please shut down cryoSPARC:

cryosparcm stop

Then:

ps aux | grep -e cryosparc -e mongo

and

ls -l /tmp/*sock
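To make leftover processes easier to spot in a long `ps aux` listing, the output can be reduced to the relevant PIDs. The sketch below assumes the master's process manager and database appear as `supervisord` and `mongod` in the process list. The helper name `leftover_pids` is hypothetical. It only lists PIDs and does not stop anything.

```shell
#!/usr/bin/env bash
# Read `ps aux`-style lines on stdin and print the PID (second column) of
# every line that mentions supervisord or mongod, skipping the grep process.
# Assumption: these are the process names used by the cryoSPARC master.
leftover_pids() {
    awk '/supervisord|mongod/ && !/grep/ { print $2 }'
}

# Usage, after `cryosparcm stop`:
#   ps aux | leftover_pids
```

Any PID printed after `cryosparcm stop` has returned belongs to a process that is still running and may still hold the database.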

Hi, I killed the running job and stopped the program before following your suggestions.

cryosparcm log database (it is long, so I am pasting only part of it here)

2022-09-07T13:48:38.966-0500 I CONTROL [initandlisten] options: { net: { port: 39001 }, replication: { oplogSizeMB: 64, replSet: “meteor” }, storage: { dbPath: “/home/cryosparc_user/software/cryosparc/cryosparc_database”, journal: { enabled: false }, wiredTiger: { engineConfig: { cacheSizeGB: 4.0 } } } }

2022-09-07T13:48:38.966-0500 I STORAGE [initandlisten] exception in initAndListen: 98 Unable to lock file: /home/cryosparc_user/software/cryosparc/cryosparc_database/mongod.lock Resource temporarily unavailable. Is a mongod instance already running?, terminating

2022-09-07T13:48:38.966-0500 I NETWORK [initandlisten] shutdown: going to close listening sockets…

2022-09-07T13:48:38.966-0500 I NETWORK [initandlisten] shutdown: going to flush diaglog…

2022-09-07T13:48:38.966-0500 I CONTROL [initandlisten] now exiting

2022-09-07T13:48:38.966-0500 I CONTROL [initandlisten] shutting down with code:100

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] MongoDB starting : pid=543815 port=39001 dbpath=/home/cryosparc_user/software/cryosparc/cryosparc_database 64-bit host=r16763

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] db version v3.4.10

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] git version: 078f28920cb24de0dd479b5ea6c66c644f6326e9

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] allocator: tcmalloc

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] modules: none

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] build environment:

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] distarch: x86_64

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] target_arch: x86_64

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] options: { net: { port: 39001 }, replication: { oplogSizeMB: 64, replSet: “meteor” }, storage: { dbPath: “/home/cryosparc_user/software/cryosparc/cryosparc_database”, journal: { enabled: false }, wiredTiger: { engineConfig: { cacheSizeGB: 4.0 } } } }

2022-09-07T13:48:40.999-0500 I STORAGE [initandlisten] exception in initAndListen: 98 Unable to lock file: /home/cryosparc_user/software/cryosparc/cryosparc_database/mongod.lock Resource temporarily unavailable. Is a mongod instance already running?, terminating

2022-09-07T13:48:40.999-0500 I NETWORK [initandlisten] shutdown: going to close listening sockets…

2022-09-07T13:48:40.999-0500 I NETWORK [initandlisten] shutdown: going to flush diaglog…

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] now exiting

2022-09-07T13:48:40.999-0500 I CONTROL [initandlisten] shutting down with code:100

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] MongoDB starting : pid=543818 port=39001 dbpath=/home/cryosparc_user/software/cryosparc/cryosparc_database 64-bit host=r16763

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] db version v3.4.10

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] git version: 078f28920cb24de0dd479b5ea6c66c644f6326e9

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] allocator: tcmalloc

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] modules: none

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] build environment:

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] distarch: x86_64

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] target_arch: x86_64

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] options: { net: { port: 39001 }, replication: { oplogSizeMB: 64, replSet: “meteor” }, storage: { dbPath: “/home/cryosparc_user/software/cryosparc/cryosparc_database”, journal: { enabled: false }, wiredTiger: { engineConfig: { cacheSizeGB: 4.0 } } } }

2022-09-07T13:48:44.033-0500 I STORAGE [initandlisten] exception in initAndListen: 98 Unable to lock file: /home/cryosparc_user/software/cryosparc/cryosparc_database/mongod.lock Resource temporarily unavailable. Is a mongod instance already running?, terminating

2022-09-07T13:48:44.033-0500 I NETWORK [initandlisten] shutdown: going to close listening sockets…

2022-09-07T13:48:44.033-0500 I NETWORK [initandlisten] shutdown: going to flush diaglog…

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] now exiting

2022-09-07T13:48:44.033-0500 I CONTROL [initandlisten] shutting down with code:100

Any advice? Thanks!

Lan
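Since the database log is long, it can help to keep only the lines that explain why startup failed. The filter below is a rough sketch that matches the two message fragments visible in the excerpt above. The helper name `db_start_failures` is hypothetical, and other failures may use different wording.

```shell
#!/usr/bin/env bash
# Keep only the log lines that report a startup exception or the exit code.
# The patterns are taken from the log excerpt in this thread.
db_start_failures() {
    grep -E 'exception in initAndListen|shutting down with code'
}

# Usage, with the log text saved to a file first (the file name is an example):
#   db_start_failures < database_log.txt
```

On the excerpt above, this would leave the repeated "Unable to lock file ... mongod.lock" lines and the "shutting down with code:100" lines, which suggest that another mongod instance still holds the lock.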

[cryosparc_user@r16763 ~]$ cryosparcm stop

CryoSPARC is not already running.

If you would like to restart, use cryosparcm restart

[cryosparc_user@r16763 ~]$ ps aux | grep -e cryosparc -e mongo

cryospa+ 9921 0.0 0.0 258132 4792 ? Ss Aug20 5:16 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 9923 0.5 0.6 4367084 2487596 ? Sl Aug20 144:48 mongod --dbpath /home/cryosparc_user/software/cryosparc/cryosparc_database --port 39001 --oplogSize 64 --replSet meteor --nojournal --wiredTigerCacheSizeGB 4

cryospa+ 10032 0.1 0.0 1522444 190852 ? Sl Aug20 30:02 python -c import cryosparc_command.command_core as serv; serv.start(port=39002)

cryospa+ 10069 0.1 0.0 1780604 67368 ? Sl Aug20 43:32 python -c import cryosparc_command.command_vis as serv; serv.start(port=39003)

cryospa+ 10099 0.2 0.0 1227760 57108 ? Sl Aug20 60:19 python -c import cryosparc_command.command_rtp as serv; serv.start(port=39005)

cryospa+ 10137 0.1 0.0 1404372 142212 ? Sl Aug20 30:57 /home/cryosparc_user/software/cryosparc/cryosparc_master/cryosparc_webapp/nodejs/bin/node ./bundle/main.js

cryospa+ 10162 0.0 0.0 1090568 112896 ? Sl Aug20 20:46 /home/cryosparc_user/software/cryosparc/cryosparc_master/cryosparc_app/api/nodejs/bin/node ./bundle/main.js

cryospa+ 542325 0.0 0.0 257876 19748 ? Ss 13:32 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 542418 0.0 0.0 257876 19808 ? Ss 13:33 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 542480 0.0 0.0 257876 19680 ? Ss 13:33 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 542557 0.0 0.0 257876 19644 ? Ss 13:34 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 543084 0.0 0.0 257876 19704 ? Ss 13:38 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 543253 0.0 0.0 257876 19724 ? Ss 13:39 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 543527 0.0 0.0 257876 19644 ? Ss 13:45 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 543801 0.0 0.0 257876 19804 ? Ss 13:48 0:00 python /home/cryosparc_user/software/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc_user/software/cryosparc/cryosparc_master/supervisord.conf

cryospa+ 545248 0.0 0.0 221936 1104 pts/0 S+ 14:31 0:00 grep --color=auto -e cryosparc -e mongo

[cryosparc_user@r16763 ~]$ ls -l /tmp/*sock

srwx------. 1 cryosparc_user cryosparc_user 0 Aug 20 17:17 /tmp/mongodb-39001.sock

[cryosparc_user@r16763 ~]$ cryosparcm start

Starting cryoSPARC System master process…

CryoSPARC is not already running.

database: ERROR (spawn error)

Hi, the problem was solved by restarting the computer. Thank you very much. Lan