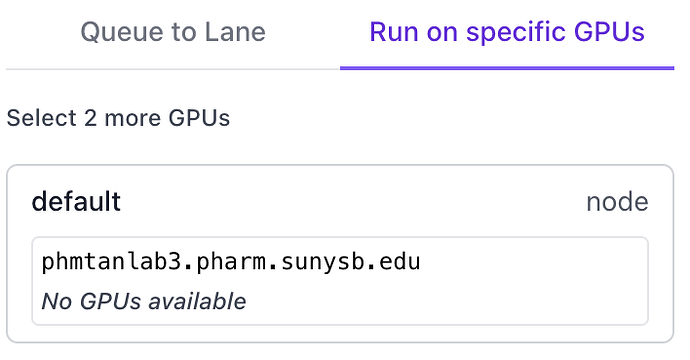

Hi, I need help figuring out why the GPUs are not available for cryoSPARC. I read the previous posts on the same topic but couldn’t identify the cause myself. I also tried upgrading cryoSPARC to 4.6, reconnecting the worker, and restarting cryoSPARC, but nothing worked.

The errors from the output of “cryosparcm log command_core” are pasted below.

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | Failed to get GPU info on lab3.pharm.sunysb.edu

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | Traceback (most recent call last):

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/cryosparc_command/command_core/__init__.py", line 1520, in get_gpu_info_run

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | value = subprocess.check_output(full_command, stderr=subprocess.STDOUT, shell=shell, timeout=JOB_LAUNCH_TIMEOUT_SECONDS).decode()

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.10/subprocess.py", line 421, in check_output

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.10/subprocess.py", line 526, in run

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | raise CalledProcessError(retcode, process.args,

2024-09-30 16:10:41,193 get_gpu_info_run ERROR | subprocess.CalledProcessError: Command '['bash -c "eval $(/mnt/data0/cryosparc_v2/cryosparc2_worker/bin/cryosparcw env); python /mnt/data0/cryosparc_v2/cryosparc2_worker/cryosparc_compute/get_gpu_info.py"']' returned non-zero exit status 2.

2024-09-30 16:10:51,176 update_all_job_sizes_run INFO | Finished updating all job sizes (0 jobs updated, 0 projects updated)

........

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | Failed to get GPU info on llab3.pharm.sunysb.edu

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | Traceback (most recent call last):

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/cryosparc_command/command_core/__init__.py", line 1520, in get_gpu_info_run

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | value = subprocess.check_output(full_command, stderr=subprocess.STDOUT, shell=shell, timeout=JOB_LAUNCH_TIMEOUT_SECONDS).decode()

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.10/subprocess.py", line 421, in check_output

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | File "/mnt/data0/cryosparc_v2/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.10/subprocess.py", line 526, in run

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | raise CalledProcessError(retcode, process.args,

2024-09-30 16:25:23,647 get_gpu_info_run ERROR | subprocess.CalledProcessError: Command '['bash -c "eval $(/mnt/data0/cryosparc_v2/cryosparc2_worker/bin/cryosparcw env); python /mnt/data0/cryosparc_v2/cryosparc2_worker/cryosparc_compute/get_gpu_info.py"']' returned non-zero exit status 2.
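
The traceback above reports only the exit status (2), not what the failing command printed. Because the call uses `stderr=subprocess.STDOUT`, that text should be attached to the exception as its `output` attribute. Below is a minimal sketch of how it could be captured by hand. The helper name `run_and_capture` and the stand-in demo command are hypothetical and not part of cryoSPARC. To inspect the real failure, the `bash -c "eval $(...cryosparcw env); python .../get_gpu_info.py"` string from the log would be passed instead, with `shell=True`.

```python
import subprocess
import sys


def run_and_capture(command, shell=False, timeout=60):
    """Run a command and return (exit_status, combined stdout+stderr text).

    Hypothetical helper for illustration; it mirrors the check_output call
    shown in the traceback but keeps the output when the command fails.
    """
    try:
        output = subprocess.check_output(
            command, stderr=subprocess.STDOUT, shell=shell, timeout=timeout
        )
        return 0, output.decode()
    except subprocess.CalledProcessError as err:
        # err.output holds whatever the command printed before exiting non-zero
        return err.returncode, err.output.decode()


# Stand-in command (an assumption for the demo) that prints a message and
# exits with status 2, like the failing worker command in the log.
demo_command = [
    sys.executable,
    "-c",
    "import sys; print('simulated failure'); sys.exit(2)",
]

status, text = run_and_capture(demo_command)
print("exit status:", status)
print("captured output:", text.strip())
```

Running the equivalent command directly in a terminal on the workstation would show the same message without any Python wrapper.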

The output of cryosparcm cli “get_scheduler_targets()” is below.

[{‘cache_path’: ‘/home/xxx/cryosparc2_cache’, ‘cache_quota_mb’: None, ‘cache_reserve_mb’: 100urce_fixed’: {‘SSD’: True}, ‘resource_slots’: {‘CPU’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], ‘GPU’: [title’: ‘Worker node lab3.pharm.sunysb.edu’, ‘type’: ‘node’, ‘worker_bin_path’: '/mnt/d

This is a single workstation running cryoSPARC v4.6.0.

Thanks in advance!