Hi CryoSPARC Team,

Operating system: Red Hat. CryoSPARC version: 4.6.0. I have installed the master on the head node and the worker on the worker node, and the connection succeeded. Everything looks fine, but jobs are not running on the worker node. When I run top on the worker node, I can't see any python process, which should be there if jobs were actually running.

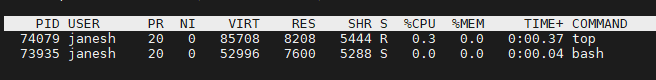

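- I can see only the following two processes running on the worker node:

| PID | USER | PR | NI | VIRT | RES | SHR | S | %CPU | %MEM | TIME+ | COMMAND |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 74156 | janesh | 20 | 0 | 85708 | 8208 | 5444 | R | 5.9 | 0.0 | 0:00.02 | top |
| 73935 | janesh | 20 | 0 | 52996 | 7600 | 5288 | S | 0.0 | 0.0 | 0:00.04 | bash |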
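Since `top` only shows what fits on one screen, the full process list can also be filtered for Python commands. Below is a minimal sketch of such a check, assuming `ps` is available on the worker node. The helper names `current_process_table` and `find_python_processes` are hypothetical and not part of CryoSPARC.

```python
import subprocess


def current_process_table():
    """Return the output of `ps -eo pid,user,args` (all processes, full command line)."""
    result = subprocess.run(
        ["ps", "-eo", "pid,user,args"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def find_python_processes(ps_output):
    """Return (pid, user, command) tuples for rows whose command mentions python."""
    matches = []
    for line in ps_output.splitlines()[1:]:  # skip the header row
        parts = line.split(None, 2)  # pid, user, rest of the command line
        if len(parts) == 3 and "python" in parts[2].lower():
            matches.append((parts[0], parts[1], parts[2]))
    return matches
```

Calling `find_python_processes(current_process_table())` on the worker node while a job is supposed to be running returns an empty list if no Python process exists at all.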

- Output of "get_scheduler_targets()":

./cryosparcm cli "get_scheduler_targets()"

[{'cache_path': None, 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 1, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 2, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 3, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}], 'hostname': 'r04gn04', 'lane': 'default', 'monitor_port': None, 'name': 'r04gn04', 'resource_fixed': {'SSD': False}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128]}, 'ssh_str': 'janesh@r04gn04', 'title': 'Worker node r04gn04', 'type': 'node', 'worker_bin_path': '/home/janesh/cryosparc/cryosparc_worker/bin/cryosparcw'},
{'cache_path': None, 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 1, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 2, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}, {'id': 3, 'mem': 84987740160, 'name': 'NVIDIA A100-SXM4-80GB'}], 'hostname': 'r05gn06', 'lane': 'default', 'monitor_port': None, 'name': 'r05gn06', 'resource_fixed': {'SSD': False}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128]}, 'ssh_str': 'janesh@r05gn06', 'title': 'Worker node r05gn06', 'type': 'node', 'worker_bin_path': '/home/janesh/cryosparc/cryosparc_worker/bin/cryosparcw'}]
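Because the raw output is hard to read, here is a minimal Python sketch that condenses it to the fields that matter for scheduling. It assumes the printed output is a valid Python literal once any curly quotes from copy-pasting are normalized. The function name `summarize_targets` is hypothetical and not part of CryoSPARC.

```python
import ast


def summarize_targets(targets_text):
    """Parse the printed list of scheduler targets and return one summary dict per target."""
    # Pasted output may contain curly quotes; turn them back into straight ones.
    cleaned = (
        targets_text
        .replace("\u2018", "'").replace("\u2019", "'")
        .replace("\u201c", '"').replace("\u201d", '"')
    )
    targets = ast.literal_eval(cleaned)  # safe parsing of literals only
    return [
        {
            "hostname": t["hostname"],
            "lane": t["lane"],
            "type": t["type"],
            "num_gpus": len(t["gpus"]),
            "num_cpu_slots": len(t["resource_slots"]["CPU"]),
            "cache_path": t["cache_path"],
        }
        for t in targets
    ]
```

Passing the output above as a string gives one entry per registered worker, which makes it easier to compare hostnames, lanes, and GPU counts between the two nodes.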

- Two worker nodes are registered (r04gn04 and r05gn06):

cryosparc_worker]$ ./bin/cryosparcw gpulist

Detected 4 CUDA devices.

| id | pci-bus | name |
|---|---|---|
| 0 | 1 | NVIDIA A100-SXM4-80GB |
| 1 | 65 | NVIDIA A100-SXM4-80GB |
| 2 | 129 | NVIDIA A100-SXM4-80GB |
| 3 | 193 | NVIDIA A100-SXM4-80GB |

Please help!

Regards,

Aparna