Hello,

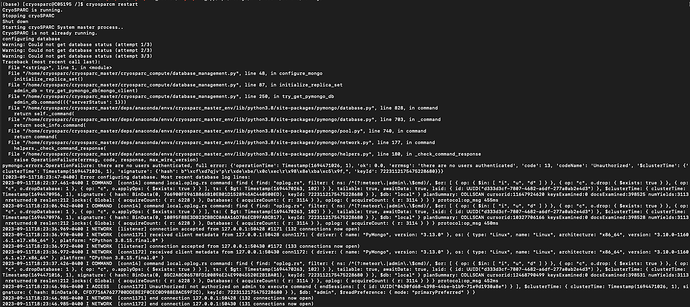

I just updated our cryoSPARC to v. 4.3.1. When I try to restart (i.e. cryosparcm restart), I get the following error:

I looked through the forums a bit to see if others were having similar issues, and was wondering if my issue was related to this thread. I therefore ran the suggested command to look for orphaned processes, and this was the output:

78222 ? Ss 0:00 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

78871 ? Ss 0:00 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

81211 ? Ss 0:00 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

86162 ? Ss 0:00 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

87487 pts/2 S+ 0:00 grep --color=auto -e cryosparc -e mongo

118382 ? Ss 14:54 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

220020 ? Ss 28:40 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf

220157 ? Sl 2542:22 mongod --auth --dbpath /home/cryosparc/cryosparc_database --port 39001 --oplogSize 64 --replSet meteor --nojournal --wiredTigerCacheSizeGB 4 --bind_ip_all

220292 ? Sl 326:03 python -c import cryosparc_command.command_core as serv; serv.start(port=39002)

220347 ? Sl 184:48 python -c import cryosparc_command.command_vis as serv; serv.start(port=39003)

220388 ? Sl 581:22 python -c import cryosparc_command.command_rtp as serv; serv.start(port=39005)

220477 ? Sl 31:43 /home/cryosparc/cryosparc_master/cryosparc_app/custom-server/nodejs/bin/node dist/server/index.js

220497 ? Sl 221:11 /home/cryosparc/cryosparc_master/cryosparc_app/api/nodejs/bin/node ./bundle/main.js
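
In case it helps to pull out just the supervisord entries from a listing like the one above, here is a minimal sketch. It assumes the listing has the `PID TTY STAT TIME COMMAND` column layout shown above; the function name `list_supervisord_pids` is hypothetical and not part of cryoSPARC. It only prints PIDs and does not stop or modify any process.

```shell
# Hypothetical helper (not a cryoSPARC command): read a process listing on
# stdin and print the PID (first column) of every supervisord entry,
# skipping the grep process itself.
list_supervisord_pids() {
    awk '/supervisord -c/ && !/grep/ { print $1 }'
}

# Two lines copied from the listing above, used as sample input.
sample='78222 ? Ss 0:00 python /home/cryosparc/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /home/cryosparc/cryosparc_master/supervisord.conf
87487 pts/2 S+ 0:00 grep --color=auto -e cryosparc -e mongo'

printf '%s\n' "$sample" | list_supervisord_pids
```

On a live system the same function could presumably be fed from `ps -ax` instead of the sample text; the exact `ps` flags are an assumption, since only the `grep -e cryosparc -e mongo` part of the original command is visible in the output.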

Can anyone please advise how to move forward so that cryoSPARC can be restarted properly and we can begin using it again? Thanks in advance for your help!

Best,

Kyle