Hi, I installed cryoSPARC v2.15 on an AWS EC2 instance running Ubuntu 16.04.23, with a 4TB EBS volume as the project directory and a 2TB SSD. The original movies were stored in an S3 bucket.

The installation ran to completion and I was able to run jobs remotely, but nothing was written to the ssd/cryosparc_cache directory. I don’t know where the tmp files were written. When I compared the cryosparc2_database on AWS with the one on my workstation, there was an additional thumbnail directory under each job. Removing them doesn’t seem to prevent jobs from running. How can I make cryoSPARC recognize the ssd/ drive? Thanks.

Hi @Ricky,

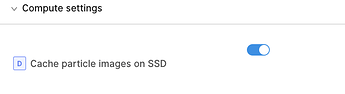

The configured SSD cache is only used by jobs that read particle stacks, such as 2D Classification, Ab Initio, etc. These jobs have an option to use the cache under their compute settings in the job builder.

Other jobs do not use the cache.
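If it helps to confirm this, a quick check after running one of those particle-stack jobs is to see whether the cache directory exists, is writable by the cryoSPARC user, and actually contains files. The snippet below is only a rough sketch using the Python standard library; `cache_status` is a hypothetical helper name, not part of cryoSPARC, and you would pass it whatever cache path you configured.

```python
import os
import shutil


def cache_status(cache_dir):
    """Summarize a directory: does it exist, can we write to it, what does it hold?

    Hypothetical helper for illustration only; it does not call cryoSPARC.
    """
    exists = os.path.isdir(cache_dir)
    # Write + execute permission is needed to create files inside a directory.
    writable = bool(exists and os.access(cache_dir, os.W_OK | os.X_OK))

    used_bytes = 0
    file_count = 0
    if exists:
        # Walk the whole tree and add up the size of every regular file.
        for root, _dirs, files in os.walk(cache_dir):
            for name in files:
                path = os.path.join(root, name)
                if os.path.isfile(path):
                    used_bytes += os.path.getsize(path)
                    file_count += 1

    # Free space on the filesystem that holds the directory (None if missing).
    free_bytes = shutil.disk_usage(cache_dir).free if exists else None

    return {
        "exists": exists,
        "writable": writable,
        "file_count": file_count,
        "used_bytes": used_bytes,
        "free_bytes": free_bytes,
    }
```

Calling `cache_status(...)` with your cache path, as the same user that runs the cryoSPARC worker, before and after a 2D Classification job should show `file_count` and `used_bytes` increase if the cache is being used. If `writable` is `False`, permissions on the SSD mount would be the first thing to look at.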

I’m not sure what you mean by this:

Could you please elaborate?

Nick