I ran into problems after installing CryoSPARC v4.7 and am unsure how to resolve them. My server runs CentOS 7 with four NVIDIA RTX 2080 Ti GPUs, driver version 535.230.02, and CUDA 12.2. After installing CryoSPARC, I tried to run motion correction on the GPUs, which produced the first error shown below. At that point, the cryosparcw gpulist command could still detect my GPUs. When I ran motion correction again, however, the error message changed, and the system could no longer detect the local GPUs.

The first error message (while some movies could still proceed with motion correction normally):

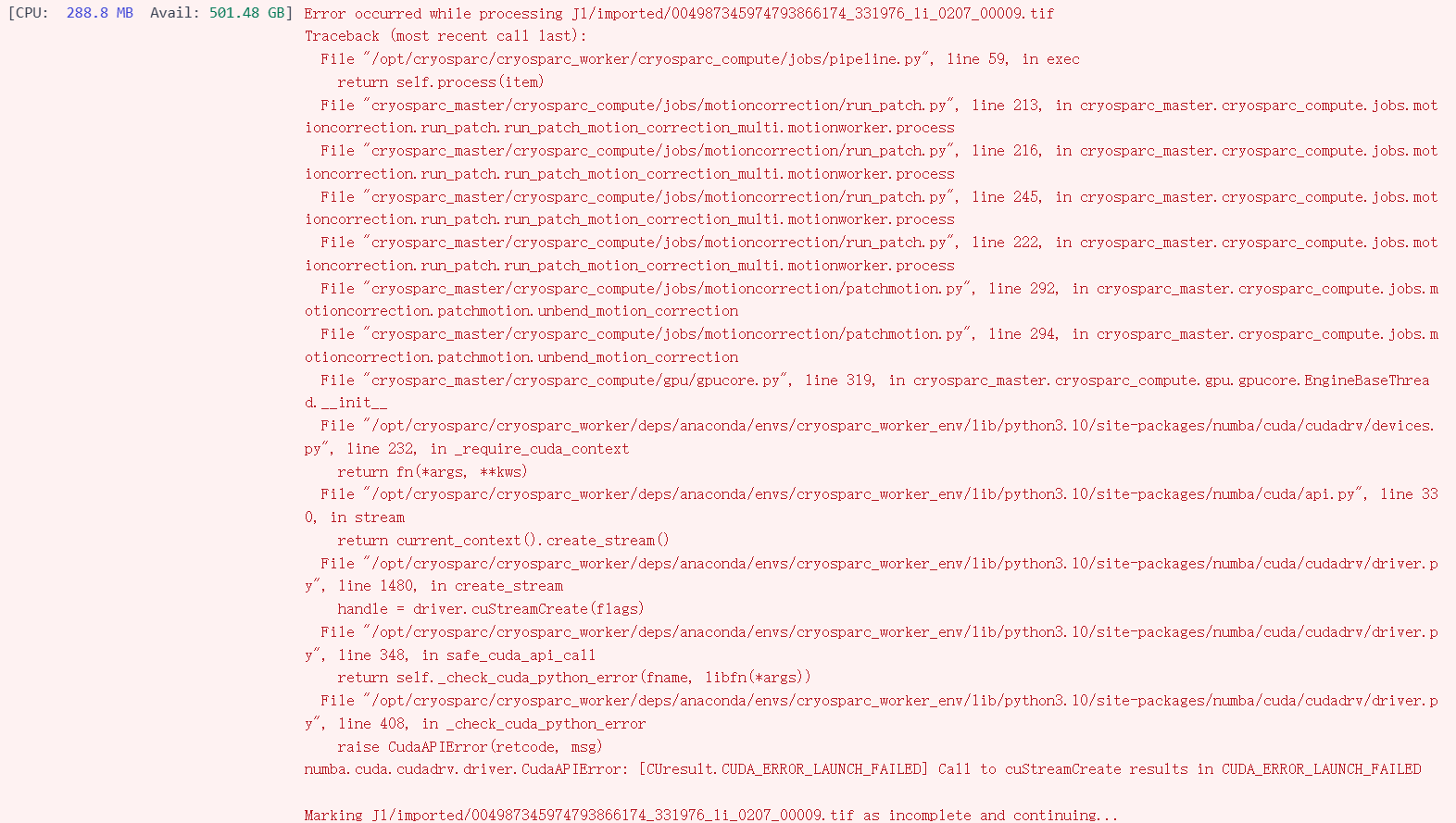

[CPU: 288.8 MB Avail: 501.48 GB]

Error occurred while processing J1/imported/004987345974793866174_331976_li_0207_00009.tif

Traceback (most recent call last):
  File "/opt/cryosparc/cryosparc_worker/cryosparc_compute/jobs/pipeline.py", line 59, in exec
    return self.process(item)
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 213, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 216, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 245, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 222, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.motionworker.process
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/patchmotion.py", line 292, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.patchmotion.unbend_motion_correction
  File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/patchmotion.py", line 294, in cryosparc_master.cryosparc_compute.jobs.motioncorrection.patchmotion.unbend_motion_correction
  File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 319, in cryosparc_master.cryosparc_compute.gpu.gpucore.EngineBaseThread.__init__
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/devices.py", line 232, in _require_cuda_context
    return fn(*args, **kws)
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/api.py", line 330, in stream
    return current_context().create_stream()
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 1480, in create_stream
    handle = driver.cuStreamCreate(flags)
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 348, in safe_cuda_api_call
    return self._check_cuda_python_error(fname, libfn(*args))
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 408, in _check_cuda_python_error
    raise CudaAPIError(retcode, msg)
numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_LAUNCH_FAILED] Call to cuStreamCreate results in CUDA_ERROR_LAUNCH_FAILED

Marking J1/imported/004987345974793866174_331976_li_0207_00009.tif as incomplete and continuing…

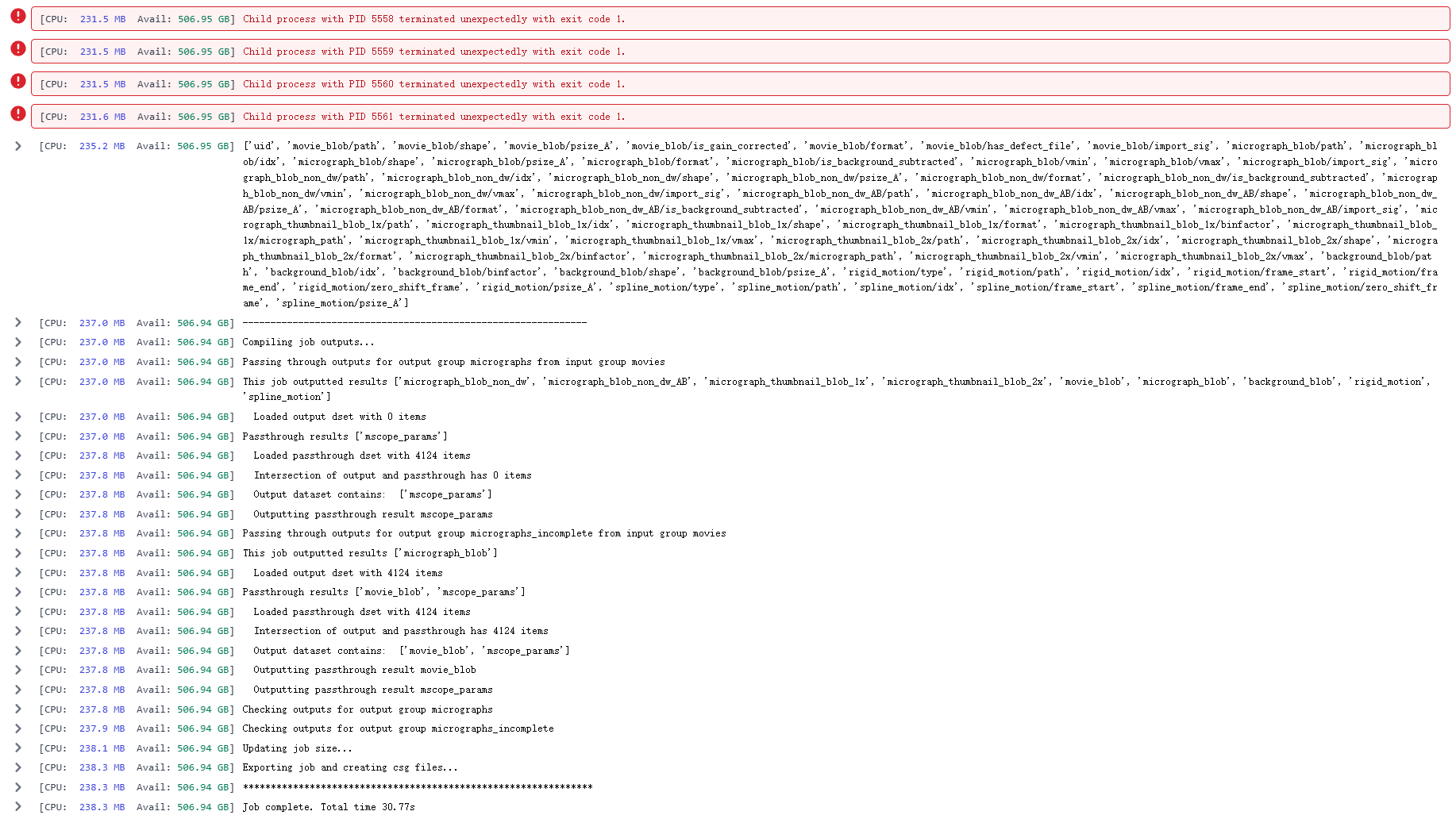

[CPU: 231.5 MB Avail: 506.95 GB]

Child process with PID 5558 terminated unexpectedly with exit code 1.

[CPU: 231.5 MB Avail: 506.95 GB]

Child process with PID 5559 terminated unexpectedly with exit code 1.

[CPU: 231.5 MB Avail: 506.95 GB]

Child process with PID 5560 terminated unexpectedly with exit code 1.

[CPU: 231.6 MB Avail: 506.95 GB]

Child process with PID 5561 terminated unexpectedly with exit code 1.

[CPU: 235.2 MB Avail: 506.95 GB]

['uid', 'movie_blob/path', 'movie_blob/shape', 'movie_blob/psize_A', 'movie_blob/is_gain_corrected', 'movie_blob/format', 'movie_blob/has_defect_file', 'movie_blob/import_sig', 'micrograph_blob/path', 'micrograph_blob/idx', 'micrograph_blob/shape', 'micrograph_blob/psize_A', 'micrograph_blob/format', 'micrograph_blob/is_background_subtracted', 'micrograph_blob/vmin', 'micrograph_blob/vmax', 'micrograph_blob/import_sig', 'micrograph_blob_non_dw/path', 'micrograph_blob_non_dw/idx', 'micrograph_blob_non_dw/shape', 'micrograph_blob_non_dw/psize_A', 'micrograph_blob_non_dw/format', 'micrograph_blob_non_dw/is_background_subtracted', 'micrograph_blob_non_dw/vmin', 'micrograph_blob_non_dw/vmax', 'micrograph_blob_non_dw/import_sig', 'micrograph_blob_non_dw_AB/path', 'micrograph_blob_non_dw_AB/idx', 'micrograph_blob_non_dw_AB/shape', 'micrograph_blob_non_dw_AB/psize_A', 'micrograph_blob_non_dw_AB/format', 'micrograph_blob_non_dw_AB/is_background_subtracted', 'micrograph_blob_non_dw_AB/vmin', 'micrograph_blob_non_dw_AB/vmax', 'micrograph_blob_non_dw_AB/import_sig', 'micrograph_thumbnail_blob_1x/path', 'micrograph_thumbnail_blob_1x/idx', 'micrograph_thumbnail_blob_1x/shape', 'micrograph_thumbnail_blob_1x/format', 'micrograph_thumbnail_blob_1x/binfactor', 'micrograph_thumbnail_blob_1x/micrograph_path', 'micrograph_thumbnail_blob_1x/vmin', 'micrograph_thumbnail_blob_1x/vmax', 'micrograph_thumbnail_blob_2x/path', 'micrograph_thumbnail_blob_2x/idx', 'micrograph_thumbnail_blob_2x/shape', 'micrograph_thumbnail_blob_2x/format', 'micrograph_thumbnail_blob_2x/binfactor', 'micrograph_thumbnail_blob_2x/micrograph_path', 'micrograph_thumbnail_blob_2x/vmin', 'micrograph_thumbnail_blob_2x/vmax', 'background_blob/path', 'background_blob/idx', 'background_blob/binfactor', 'background_blob/shape', 'background_blob/psize_A', 'rigid_motion/type', 'rigid_motion/path', 'rigid_motion/idx', 'rigid_motion/frame_start', 'rigid_motion/frame_end', 'rigid_motion/zero_shift_frame', 'rigid_motion/psize_A', 'spline_motion/type', 'spline_motion/path', 'spline_motion/idx', 'spline_motion/frame_start', 'spline_motion/frame_end', 'spline_motion/zero_shift_frame', 'spline_motion/psize_A']

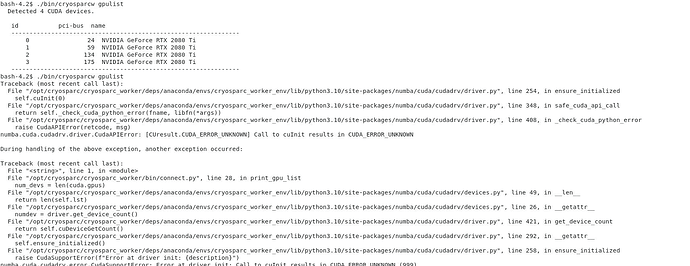

bash-4.2$ ./bin/cryosparcw gpulist

Detected 4 CUDA devices.

id pci-bus name

0 24 NVIDIA GeForce RTX 2080 Ti

1 59 NVIDIA GeForce RTX 2080 Ti

2 134 NVIDIA GeForce RTX 2080 Ti

3 175 NVIDIA GeForce RTX 2080 Ti

bash-4.2$ ./bin/cryosparcw gpulist

Traceback (most recent call last):
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 254, in ensure_initialized
    self.cuInit(0)
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 348, in safe_cuda_api_call
    return self._check_cuda_python_error(fname, libfn(*args))
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 408, in _check_cuda_python_error
    raise CudaAPIError(retcode, msg)
numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_UNKNOWN] Call to cuInit results in CUDA_ERROR_UNKNOWN

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "", line 1, in
  File "/opt/cryosparc/cryosparc_worker/bin/connect.py", line 28, in print_gpu_list
    num_devs = len(cuda.gpus)
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/devices.py", line 49, in __len__
    return len(self.lst)
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/devices.py", line 26, in __getattr__
    numdev = driver.get_device_count()
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 421, in get_device_count
    return self.cuDeviceGetCount()
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 292, in __getattr__
    self.ensure_initialized()
  File "/opt/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.10/site-packages/numba/cuda/cudadrv/driver.py", line 258, in ensure_initialized
    raise CudaSupportError(f"Error at driver init: {description}")
numba.cuda.cudadrv.error.CudaSupportError: Error at driver init: Call to cuInit results in CUDA_ERROR_UNKNOWN (999)

Welcome to the forum @xyf_123.

Could you please run these commands on the CryoSPARC worker computer and post their output?

cd $(mktemp -d)

/opt/cryosparc/cryosparc_worker/bin/cryosparcw call python -m venv $(pwd)/cudatest

. cudatest/bin/activate

pip install cuda-python~=11.8.0

python <<EOF
try:
    # Try to import the CUDA driver API
    from cuda.bindings import driver as cuda
    # Try to initialize CUDA
    result = cuda.cuInit(0)[0]
    if result == cuda.CUresult.CUDA_SUCCESS:
        print("CUDA initialized successfully!")
        device_count = cuda.cuDeviceGetCount()[1]
        print(f"Found {device_count} CUDA device(s)")
    else:
        print(f"CUDA initialization failed with error code: {result}")
except ImportError:
    print("Failed to import CUDA libraries. Make sure they are installed and in your PYTHONPATH.")
except Exception as e:
    print(f"Error during CUDA initialization: {e}")
EOF

(The python <<EOF and EOF lines enclose a Python script in which the indentation, that is, the number of leading spaces on each line, matters.)
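If installing cuda-python with pip is not an option on the worker (for example, because it has no internet access), a rough alternative is to call the driver library directly through Python's built-in ctypes module. This is only a sketch under assumptions: the function name probe_cuda and its status strings are made up for illustration, and it assumes the NVIDIA driver library can be found under the name libcuda.so.1. It performs the same two driver calls as the script above, cuInit and cuDeviceGetCount.

```python
import ctypes


def probe_cuda(libname="libcuda.so.1"):
    """Return (status, detail) describing whether the CUDA driver initializes.

    probe_cuda and the status strings are hypothetical names for this sketch;
    the default library name is an assumption about the worker's setup.
    """
    try:
        libcuda = ctypes.CDLL(libname)
    except OSError as exc:
        # The driver library could not be found or loaded at all.
        return ("library-not-loaded", str(exc))

    # CUresult cuInit(unsigned int Flags); a return value of 0 is CUDA_SUCCESS.
    libcuda.cuInit.argtypes = [ctypes.c_uint]
    libcuda.cuInit.restype = ctypes.c_int
    rc = libcuda.cuInit(0)
    if rc != 0:
        # For example, 999 is CUDA_ERROR_UNKNOWN, as in the gpulist traceback.
        return ("cuInit-failed", rc)

    # CUresult cuDeviceGetCount(int *count)
    count = ctypes.c_int(0)
    libcuda.cuDeviceGetCount.argtypes = [ctypes.POINTER(ctypes.c_int)]
    libcuda.cuDeviceGetCount.restype = ctypes.c_int
    rc = libcuda.cuDeviceGetCount(ctypes.byref(count))
    if rc != 0:
        return ("cuDeviceGetCount-failed", rc)

    return ("ok", count.value)


if __name__ == "__main__":
    print(probe_cuda())
```

Saved to a file, this could be run with any Python 3 interpreter on the worker. A result of ("cuInit-failed", 999) would correspond to the CUDA_ERROR_UNKNOWN reported by cryosparcw gpulist, and would suggest the problem lies with the driver or hardware state rather than with the CryoSPARC Python environment.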