Hello,

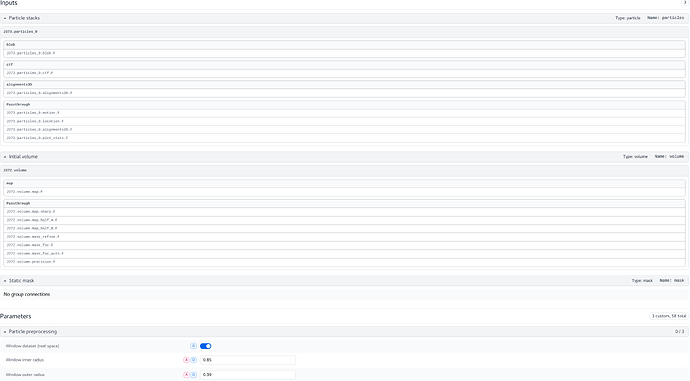

I have been trying to use Reference Based Motion Correction. However, after running the job and using the resulting particles in a Homogeneous Refinement, I get the following error:

[CPU: 37.15 GB Avail: 352.05 GB]

====== Initial Model ======

[CPU: 37.15 GB Avail: 352.05 GB]

Resampling initial model to specified volume representation size and pixel-size...

[CPU: 53.94 GB Avail: 336.52 GB]

Traceback (most recent call last):

File "cryosparc_master/cryosparc_compute/run.py", line 95, in cryosparc_master.cryosparc_compute.run.main

File "cryosparc_master/cryosparc_compute/jobs/refine/newrun.py", line 356, in cryosparc_master.cryosparc_compute.jobs.refine.newrun.run_homo_refine

File "/spshared/apps/cryosparc3/cryosparc_worker/cryosparc_compute/newfourier.py", line 417, in resample_resize_real

return ZT( ifft( ZT(fft(x, stack=stack), N_resample, stack=stack), stack=stack), M, stack=stack), psize_final

File "/spshared/apps/cryosparc3/cryosparc_worker/cryosparc_compute/newfourier.py", line 122, in ifft

return ifftcenter3(X, fft_threads)

File "/spshared/apps/cryosparc3/cryosparc_worker/cryosparc_compute/newfourier.py", line 95, in ifftcenter3

v = fftmod.irfftn(tmp, threads=th)

File "/spshared/apps/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/pyfftw/interfaces/numpy_fft.py", line 293, in irfftn

return _Xfftn(a, s, axes, overwrite_input, planner_effort,

File "/spshared/apps/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/pyfftw/interfaces/_utils.py", line 128, in _Xfftn

FFTW_object = getattr(builders, calling_func)(*planner_args)

File "/spshared/apps/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/pyfftw/builders/builders.py", line 543, in irfftn

return _Xfftn(a, s, axes, overwrite_input, planner_effort,

File "/spshared/apps/cryosparc3/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/pyfftw/builders/_utils.py", line 260, in _Xfftn

FFTW_object = pyfftw.FFTW(input_array, output_array, axes, direction,

File "pyfftw/pyfftw.pyx", line 1223, in pyfftw.pyfftw.FFTW.__cinit__

ValueError: ('Strides of the output array must be less than ', '2147483647')

This happens only with the particles from Reference Based Motion Correction. Running the same refinement with the original particles does not produce this error.
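For context, the 2147483647 in the message is 2^31 - 1, the largest signed 32-bit integer. Below is a minimal sketch of how one might check whether a given volume shape would cross that limit. It assumes the check is applied to the per-axis strides of a C-contiguous array. Whether pyFFTW counts strides in bytes or in elements is an assumption here, so both are computed. The helper names and the example box sizes are hypothetical and are not taken from CryoSPARC or pyFFTW.

```python
INT32_MAX = 2**31 - 1  # 2147483647, the limit quoted in the error message


def c_contiguous_strides(shape, itemsize):
    """Return (element_strides, byte_strides) for a C-contiguous array.

    Hypothetical helper: mirrors how a row-major array lays out its axes,
    where the last axis is contiguous and each earlier axis steps over
    the product of all later dimensions.
    """
    elem = []
    step = 1
    for dim in reversed(shape):
        elem.append(step)
        step *= dim
    elem.reverse()
    return tuple(elem), tuple(s * itemsize for s in elem)


def exceeds_limit(shape, itemsize=4):
    """Return (elements_exceed, bytes_exceed) for the largest stride.

    itemsize=4 assumes float32 data; this is an assumption, not something
    stated in the log above.
    """
    elem, byts = c_contiguous_strides(shape, itemsize)
    return max(elem) >= INT32_MAX, max(byts) >= INT32_MAX


if __name__ == "__main__":
    # Example box sizes are illustrative only.
    for n in (512, 30000, 50000):
        print(n, exceeds_limit((n, n, n)))
```

If the resampled volume implied by the new particles' box size and pixel size is far larger than the one from the original particles, a check like this would flag it, which may help narrow down whether the particle metadata from the motion correction job is involved.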

Thanks for your help.