Hi everyone,

I’m trying to set up a fresh installation of cryoSPARC 2.15 on my cluster, but I can’t get it working. Can anyone suggest where I could look next?

Many thanks!

Andrew

Symptom:

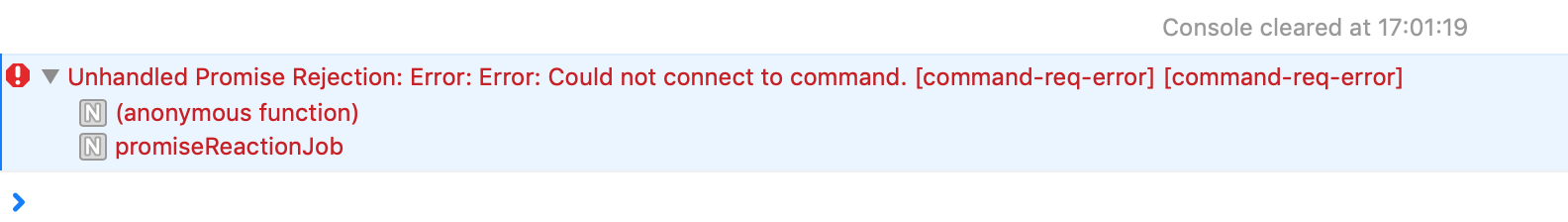

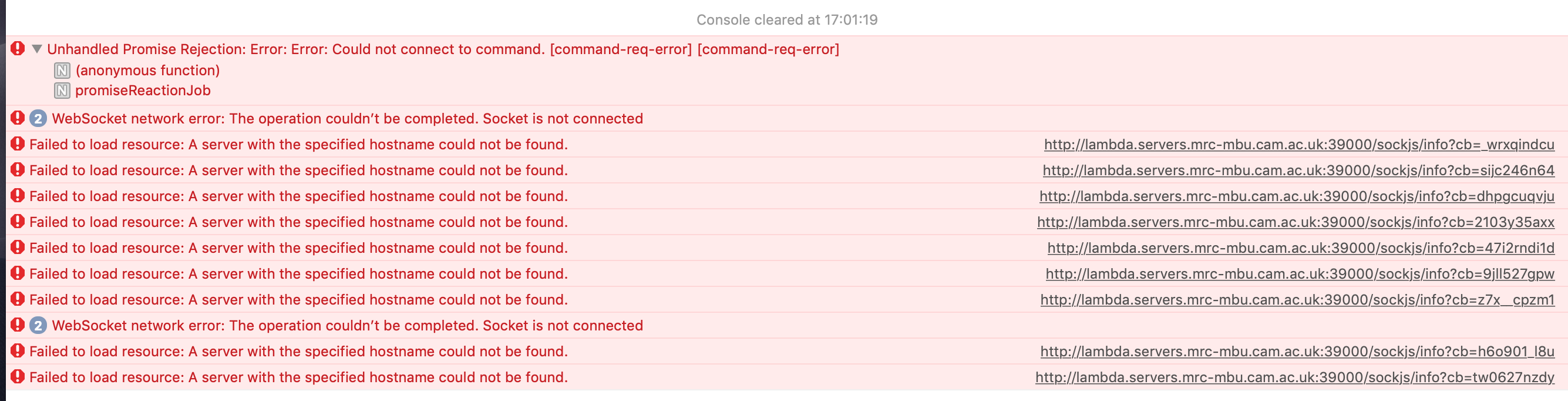

Installation of the cryosparc2_master and cryosparc2_worker packages went OK, but when I log in to the GUI, most actions are greyed out - I presume this is because I haven’t yet accepted the license agreement. When I click on “Accept” I get a notification saying “Cannot connect to command”.

Environment:

- Both the master and worker nodes are running CentOS 7.6

- (The firewall is currently turned off)

- The worker nodes have CUDA 10.1 installed

- The filesystems containing the installation and the database are NFS mounted on all nodes from the cluster’s file-server.

cryoSPARC config:

$ cryosparcm status

CryoSPARC System master node installed at

/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master

Current cryoSPARC version: v2.15.0

cryosparcm process status:

app STOPPED Not started

app_dev STOPPED Not started

command_core RUNNING pid 40836, uptime 0:13:23

command_proxy RUNNING pid 40866, uptime 0:13:20

command_rtp STOPPED Not started

command_vis RUNNING pid 40859, uptime 0:13:21

database RUNNING pid 40387, uptime 0:13:25

watchdog_dev STOPPED Not started

webapp RUNNING pid 40870, uptime 0:13:18

webapp_dev STOPPED Not started

global config variables:

export CRYOSPARC_LICENSE_ID="***********"

export CRYOSPARC_MASTER_HOSTNAME="lambda.servers.mrc-mbu.cam.ac.uk"

#export CRYOSPARC_MASTER_HOSTNAME="lambda.maas"

#export CRYOSPARC_HOSTNAME_CHECK="lambda.servers.mrc-mbu.cam.ac.uk"

export CRYOSPARC_FORCE_HOSTNAME=true

export CRYOSPARC_DB_PATH="/usr/mbu/cryosparc/database2.15"

export CRYOSPARC_BASE_PORT=39000

export CRYOSPARC_DEVELOP=false

export CRYOSPARC_INSECURE=false

export CRYOSPARC_CLICK_WRAP=true

$ cat cryosparc2_worker/config.sh

export CRYOSPARC_LICENSE_ID="**************"

export CRYOSPARC_USE_GPU=true

export CRYOSPARC_CUDA_PATH="/usr/local/cuda-10.1"

export CRYOSPARC_DEVELOP=false
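
Given this config, one quick thing to rule out is basic reachability of the master's ports, both from the master itself and from a worker node. The sketch below is a minimal Python check and not part of cryoSPARC; `port_open` is a hypothetical helper name. The ports to try are taken from the logs in this post: 39002 serves `/api` and 39004 is where `command_proxy` listens.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Name-resolution failures, refused connections and timeouts all raise
    OSError (or a subclass), so each of them is reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `port_open("lambda.servers.mrc-mbu.cam.ac.uk", 39002)` and `port_open("lambda.maas", 39002)` should both return `True` if the name resolves and something is listening. A `False` result would point at DNS, routing, or the service itself rather than at the web GUI.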

cryoSPARC log files (I’ve tried to show only the messages after my most recent start, at 15:02:21 today):

Database log:

2020-10-23 15:02:21,260 INFO RPC interface ‘supervisor’ initialized

2020-10-23 15:02:21,260 CRIT Server ‘unix_http_server’ running without any HTTP authentication checking

2020-10-23 15:02:21,261 INFO daemonizing the supervisord process

2020-10-23 15:02:21,268 INFO supervisord started with pid 40385

2020-10-23 15:02:21,491 INFO spawned: ‘database’ with pid 40387

2020-10-23 15:02:22,718 INFO success: database entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:23,654 INFO spawned: ‘command_core’ with pid 40836

2020-10-23 15:02:25,267 INFO success: command_core entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:25,560 INFO spawned: ‘command_vis’ with pid 40859

2020-10-23 15:02:26,561 INFO success: command_vis entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:26,812 INFO spawned: ‘command_proxy’ with pid 40866

2020-10-23 15:02:28,133 INFO success: command_proxy entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:28,371 INFO spawned: ‘webapp’ with pid 40870

2020-10-23 15:02:29,432 INFO success: webapp entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

Webapp log:

$ cryosparcm log webapp

“key”: “licenseAccepted”,

“value”: true

},

“id”: “3v4ftHJH967RrLSz6”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.maas:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “pWNTNoqNSXtmQKCw2”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.maas:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “R3NcZJ79F5pQJyiwi”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.maas:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “MQzwQ2uNoAZEXxhGX”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.maas:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “kgRJLHgZ3yjTGLbBA”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.maas:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “8LqJdKqGXRn3qhFGi”

},

“json”: true

}

cryoSPARC v2

(node:40870) DeprecationWarning: current Server Discovery and Monitoring engine is deprecated, and will be removed in a future version. To use the new Server Discover and Monitoring engine, pass option { useUnifiedTopology: true } to the MongoClient constructor.

Ready to serve GridFS

==== [projects] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [workspace] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [jobs] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [projects] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [projects] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [workspace] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [jobs] project query user 5f91c87ed1c4c8ca207b020c IT Support true

==== [projects] project query user 5f91c87ed1c4c8ca207b020c IT Support true

utility.getLatestVersion ERROR: { RequestError: Error: tunneling socket could not be established, cause=connect EINVAL 0.0.12.56:80 - Local (0.0.0.0:0)

at new RequestError (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/request-promise-core/lib/errors.js:14:15)

at Request.plumbing.callback (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/request-promise-core/lib/plumbing.js:87:29)

at Request.RP$callback [as _callback] (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/request-promise-core/lib/plumbing.js:46:31)

at self.callback (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/request/request.js:185:22)

at emitOne (events.js:116:13)

at Request.emit (events.js:211:7)

at Request.onRequestError (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/request/request.js:881:8)

at emitOne (events.js:116:13)

at ClientRequest.emit (events.js:211:7)

at ClientRequest.onError (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/tunnel-agent/index.js:179:21)

at Object.onceWrapper (events.js:315:30)

at emitOne (events.js:116:13)

at ClientRequest.emit (events.js:211:7)

at Socket.socketErrorListener (_http_client.js:387:9)

at emitOne (events.js:116:13)

at Socket.emit (events.js:211:7)

at emitErrorNT (internal/streams/destroy.js:64:8)

at _combinedTickCallback (internal/process/next_tick.js:138:11)

at process._tickDomainCallback (internal/process/next_tick.js:218:9)

=> awaited here:

at Function.Promise.await (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/meteor/promise/node_modules/meteor-promise/promise_server.js:56:12)

at Promise.asyncApply (imports/api/Utility/server/methods.js:24:15)

at /nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/meteor/promise/node_modules/meteor-promise/fiber_pool.js:43:40

name: ‘RequestError’,

message: ‘Error: tunneling socket could not be established, cause=connect EINVAL 0.0.12.56:80 - Local (0.0.0.0:0)’,

cause: { Error: tunneling socket could not be established, cause=connect EINVAL 0.0.12.56:80 - Local (0.0.0.0:0)

at ClientRequest.onError (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/tunnel-agent/index.js:177:17)

at Object.onceWrapper (events.js:315:30)

at emitOne (events.js:116:13)

at ClientRequest.emit (events.js:211:7)

at Socket.socketErrorListener (_http_client.js:387:9)

at emitOne (events.js:116:13)

at Socket.emit (events.js:211:7)

at emitErrorNT (internal/streams/destroy.js:64:8)

at _combinedTickCallback (internal/process/next_tick.js:138:11)

at process._tickDomainCallback (internal/process/next_tick.js:218:9) code: ‘ECONNRESET’ },

error: { Error: tunneling socket could not be established, cause=connect EINVAL 0.0.12.56:80 - Local (0.0.0.0:0)

at ClientRequest.onError (/nfs4/suffolk/MBU/software/cryosparc/cryosparc2.15/cryosparc2_master/cryosparc2_webapp/bundle/programs/server/npm/node_modules/tunnel-agent/index.js:177:17)

at Object.onceWrapper (events.js:315:30)

at emitOne (events.js:116:13)

at ClientRequest.emit (events.js:211:7)

at Socket.socketErrorListener (_http_client.js:387:9)

at emitOne (events.js:116:13)

at Socket.emit (events.js:211:7)

at emitErrorNT (internal/streams/destroy.js:64:8)

at _combinedTickCallback (internal/process/next_tick.js:138:11)

at process._tickDomainCallback (internal/process/next_tick.js:218:9) code: ‘ECONNRESET’ },

options:

{ uri: ‘https://get.cryosparc.com/get_update_tag/xxxxxxxxx/v2.15.0’,

callback: [Function: RP$callback],

transform: undefined,

simple: true,

resolveWithFullResponse: false,

transform2xxOnly: false },

response: undefined }

{

“method”: “POST”,

“uri”: “http://lambda.servers.mrc-mbu.cam.ac.uk:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “hdeD2K4uZXRE6cZaz”

},

“json”: true

}

{

“method”: “POST”,

“uri”: “http://lambda.servers.mrc-mbu.cam.ac.uk:39002/api”,

“headers”: {

“CRYOSPARC-USER”: “5f91c87ed1c4c8ca207b020c”

},

“body”: {

“jsonrpc”: “2.0”,

“method”: “set_user_state_var”,

“params”: {

“user_id”: “5f91c87ed1c4c8ca207b020c”,

“key”: “licenseAccepted”,

“value”: true

},

“id”: “5owJ9wwoFtdWiaNeL”

},

“json”: true

}
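
A note on the `utility.getLatestVersion` error in the webapp log above: the address it tries to reach, `0.0.12.56`, is what the integer 3128 looks like when written as an IPv4 address, and 3128 is a common HTTP proxy port (it is Squid's default). That may mean a proxy environment variable on the master is being parsed so that the port ends up where the host should be. This is only a reading of the address, not something the log confirms. The sketch below shows the arithmetic and lists whichever proxy variables are set; `ipv4_as_int` and `proxy_settings` are hypothetical helper names.

```python
import ipaddress
import os


def ipv4_as_int(addr: str) -> int:
    """Interpret a dotted-quad IPv4 address as a single 32-bit integer."""
    return int(ipaddress.IPv4Address(addr))


def proxy_settings(environ=None) -> dict:
    """Return the proxy-related environment variables that are set.

    Both lower- and upper-case spellings are checked because different
    tools read different ones.
    """
    env = os.environ if environ is None else environ
    names = (
        "http_proxy", "https_proxy", "no_proxy",
        "HTTP_PROXY", "HTTPS_PROXY", "NO_PROXY",
    )
    return {name: env[name] for name in names if name in env}


# 0*2**24 + 0*2**16 + 12*256 + 56 == 3128
print(ipv4_as_int("0.0.12.56"))
print(proxy_settings())
```

Run as the user that owns the cryoSPARC processes, the second line of output shows which proxy variables those processes are likely to inherit.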

command_vis log:

2020-10-23 15:02:29,120 VIS.MAIN INFO === STARTED ===

*** client.py: command (http://lambda.servers.mrc-mbu.cam.ac.uk:39002/api) did not reply within timeout of 300 seconds, attempt 1 of 3
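
The timeout above shows `command_vis` getting no reply from the `/api` URL even though `command_core` is reported as RUNNING. One way to narrow this down is to request the same URL twice, once with the environment's proxy settings and once with proxies disabled. This assumes proxy environment variables might be involved, which the logs do not prove. `fetch_status` is a hypothetical helper built on Python's standard `urllib`; it is not a cryoSPARC tool, and it sends a plain GET, so any HTTP status at all counts as "the service answered".

```python
import urllib.error
import urllib.request


def fetch_status(url: str, use_env_proxies: bool, timeout: float = 5.0) -> str:
    """Request url and describe the outcome as a short string.

    ProxyHandler(None) picks up proxies from the environment, while
    ProxyHandler({}) disables proxying for this opener.
    """
    handler = urllib.request.ProxyHandler(None if use_env_proxies else {})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(url, timeout=timeout) as resp:
            return f"HTTP {resp.status}"
    except urllib.error.HTTPError as exc:
        # The server replied, just not with a 2xx status.
        return f"HTTP {exc.code}"
    except (urllib.error.URLError, OSError) as exc:
        return f"failed: {exc}"
```

Calling `fetch_status("http://lambda.servers.mrc-mbu.cam.ac.uk:39002/api", use_env_proxies=True)` and then the same with `use_env_proxies=False` on the master would show whether the two paths behave differently. If only the direct request gets an HTTP answer, the proxy settings are the likely culprit.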

command_proxy log:

(34065) wsgi starting up on http://0.0.0.0:39004

(46370) wsgi starting up on http://0.0.0.0:39004

(49466) wsgi starting up on http://0.0.0.0:39004

(51779) wsgi starting up on http://0.0.0.0:39004

(8390) wsgi starting up on http://0.0.0.0:39004

(12250) wsgi starting up on http://0.0.0.0:39004

(13572) wsgi starting up on http://0.0.0.0:39004

(16192) wsgi starting up on http://0.0.0.0:39004

(16192) accepted (‘192.168.101.2’, 54869)

(1423) wsgi starting up on http://0.0.0.0:39004

(961) wsgi starting up on http://0.0.0.0:39004

(14487) wsgi starting up on http://0.0.0.0:39004

(39108) wsgi starting up on http://0.0.0.0:39004

(40866) wsgi starting up on http://0.0.0.0:39004

command_core log:

COMMAND CORE STARTED === 2020-10-23 15:02:24.265767 ==========================

*** BG WORKER START

supervisord log:

2020-10-23 15:02:21,260 INFO RPC interface ‘supervisor’ initialized

2020-10-23 15:02:21,260 CRIT Server ‘unix_http_server’ running without any HTTP authentication checking

2020-10-23 15:02:21,261 INFO daemonizing the supervisord process

2020-10-23 15:02:21,268 INFO supervisord started with pid 40385

2020-10-23 15:02:21,491 INFO spawned: ‘database’ with pid 40387

2020-10-23 15:02:22,718 INFO success: database entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:23,654 INFO spawned: ‘command_core’ with pid 40836

2020-10-23 15:02:25,267 INFO success: command_core entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:25,560 INFO spawned: ‘command_vis’ with pid 40859

2020-10-23 15:02:26,561 INFO success: command_vis entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:26,812 INFO spawned: ‘command_proxy’ with pid 40866

2020-10-23 15:02:28,133 INFO success: command_proxy entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)

2020-10-23 15:02:28,371 INFO spawned: ‘webapp’ with pid 40870

2020-10-23 15:02:29,432 INFO success: webapp entered RUNNING state, process has stayed up for > than 1 seconds (startsecs)