After updating our cluster installation of cryoSPARC to v4.4.1, I ran the Benchmark job (under Instance Testing Utilities), but it failed to complete. No other jobs were running at the time.

This message appeared three times in the event log:

```
Failed to complete GPU benchmark on GPU 0: [CUresult.CUDA_ERROR_OUT_OF_MEMORY] Call to cuMemAlloc results in CUDA_ERROR_OUT_OF_MEMORY
```

The following traceback appeared at the end of the log:

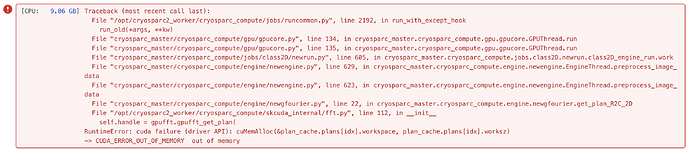

```
[CPU: 9.06 GB] Traceback (most recent call last):
  File "/opt/cryosparc2_worker/cryosparc_compute/jobs/runcommon.py", line 2192, in run_with_except_hook
    run_old(*args, **kw)
  File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 134, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run
  File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 135, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run
  File "cryosparc_master/cryosparc_compute/jobs/class2D/newrun.py", line 605, in cryosparc_master.cryosparc_compute.jobs.class2D.newrun.class2D_engine_run.work
  File "cryosparc_master/cryosparc_compute/engine/newengine.py", line 629, in cryosparc_master.cryosparc_compute.engine.newengine.EngineThread.preprocess_image_data
  File "cryosparc_master/cryosparc_compute/engine/newengine.py", line 623, in cryosparc_master.cryosparc_compute.engine.newengine.EngineThread.preprocess_image_data
  File "cryosparc_master/cryosparc_compute/engine/newgfourier.py", line 22, in cryosparc_master.cryosparc_compute.engine.newgfourier.get_plan_R2C_2D
  File "/opt/cryosparc2_worker/cryosparc_compute/skcuda_internal/fft.py", line 112, in __init__
    self.handle = gpufft.gpufft_get_plan(
RuntimeError: cuda failure (driver API): cuMemAlloc(&plan_cache.plans[idx].workspace, plan_cache.plans[idx].worksz)
-> CUDA_ERROR_OUT_OF_MEMORY out of memory
```

I have not had any issues running other jobs on this version of cryoSPARC so far, although I have not tested it thoroughly. I would like to understand the root cause of this error and any potential solutions. Please let me know if any additional details about the system would help. Thanks!