Hi,

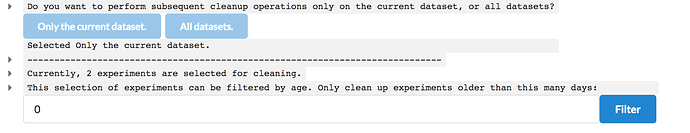

When I use the disk cleanup tool, it successfully removes orphaned jobs. However, at the step where the user can filter which jobs are selected for cleaning, clicking the “Filter” button has no effect, regardless of the value entered in the text field.

Cheers

Oli

Hi @olibclarke, is this still happening? Does the status of the job still report running when it’s in the stuck state? What browser are you using?

Hi @apunjani, yes, this still happens with 0.5.3. I am using Firefox, which seems to work fine for everything else, though I know Chrome is recommended. I’ll test it in Chrome and see whether the same thing happens.

Thanks!

Oli

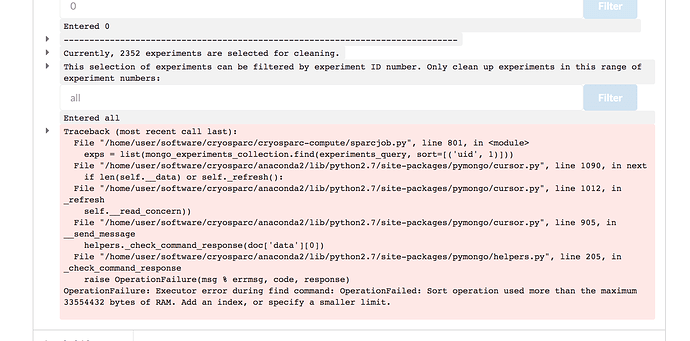

@apunjani, I just realized I never followed up on this. It gets further in Chrome but then fails with a different error, complaining about a lack of RAM.

I’d really like to clean up the database because it’s sitting at 5 TB. Any suggestions?

Cheers

Oli

When I try to use a more limited range, it works, but it doesn’t seem to do what I want.

I have noticed that when I delete a dataset in the GUI, the database size doesn’t seem to change. I presume some phantom jobs associated with datasets deleted from the Datasets tab are still hanging around, but I have no way of deleting them, and the names of the job folders in the sparcdata folder are not human-readable. Is there something I’m missing?
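In the meantime, one way to at least see which job folders are taking up the space is to measure them directly on disk. The following is a minimal sketch using only the Python standard library, assuming the job folders sit as immediate subdirectories of a single parent directory (the path in the usage line is a placeholder). It does not talk to cryoSPARC or its database, does not map folder names back to datasets, and deletes nothing; it only reports sizes.

```python
import os
from pathlib import Path


def dir_size_bytes(path: Path) -> int:
    """Sum the sizes of all regular files under `path`, skipping symlinks."""
    total = 0
    # os.walk does not descend into symlinked directories by default.
    for root, _dirs, files in os.walk(path):
        for name in files:
            fp = os.path.join(root, name)
            try:
                if not os.path.islink(fp):
                    total += os.path.getsize(fp)
            except OSError:
                # File vanished or is unreadable; skip it.
                pass
    return total


def largest_subdirs(parent: Path, top: int = 10) -> list[tuple[str, int]]:
    """Return (folder_name, size_in_bytes) for the `top` largest immediate subdirectories."""
    sizes = [
        (entry.name, dir_size_bytes(entry))
        for entry in parent.iterdir()
        if entry.is_dir() and not entry.is_symlink()
    ]
    sizes.sort(key=lambda item: item[1], reverse=True)
    return sizes[:top]
```

Usage, with a hypothetical path: `for name, size in largest_subdirs(Path("/path/to/sparcdata")): print(f"{size / 1e9:.2f} GB  {name}")`. Matching the largest folders to jobs would still have to be done from the GUI.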

Cheers

Oli