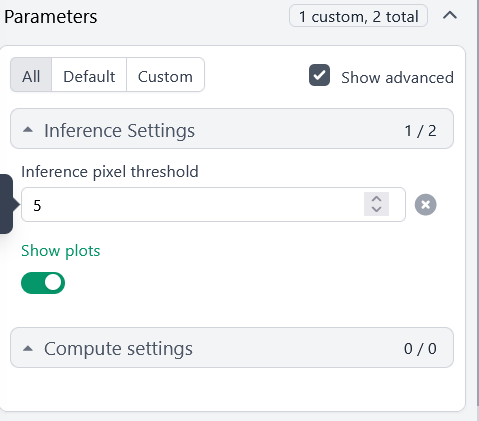

Hello @mbs, sorry to hear that. None of the suggestions on the forum have helped me so far. I am also missing some of the settings from the menu (see the picture). Earlier, there were some issues with the training itself, which we solved based on this discussion. I am wondering whether the errors are related.

Note: I also tried it without the plots.