Thanks for your solution.

I had 6 project directories that failed to attach. Your solution recovered 3 of them, but the others could not be recovered. Here is the output of **cryosparcm filterlog command_core -l ERROR** for one of them. Another failed project produces the same error log.

2024-01-19 17:34:55,066 wrapper ERROR | JSONRPC ERROR at set_user_viewed_project

2024-01-19 17:34:55,066 wrapper ERROR | Traceback (most recent call last):

2024-01-19 17:34:55,066 wrapper ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/commandcommon.py", line 195, in wrapper

2024-01-19 17:34:55,066 wrapper ERROR | res = func(*args, **kwargs)

2024-01-19 17:34:55,066 wrapper ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/command_core/__init__.py", line 1196, in set_user_viewed_project

2024-01-19 17:34:55,066 wrapper ERROR | update_project(project_uid, {'last_accessed' : {'name' : get_username_by_id(user_id), 'accessed_at' : datetime.datetime.utcnow()}}, operation='$set', export=False)

2024-01-19 17:34:55,066 wrapper ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/commandcommon.py", line 186, in wrapper

2024-01-19 17:34:55,066 wrapper ERROR | return func(*args, **kwargs)

2024-01-19 17:34:55,066 wrapper ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/commandcommon.py", line 261, in wrapper

2024-01-19 17:34:55,066 wrapper ERROR | assert not project['detached'], f"validation error: project {project_uid} is detached"

2024-01-19 17:34:55,066 wrapper ERROR | AssertionError: validation error: project P19 is detached

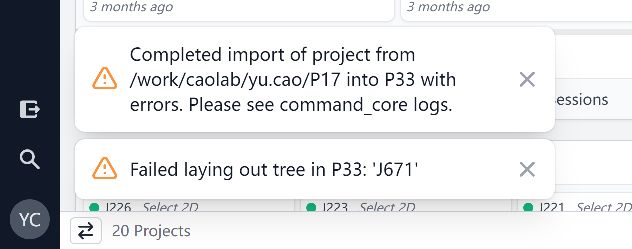

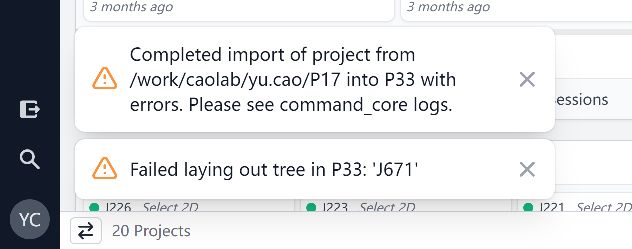

And this screenshot shows a message that disappeared quickly.

P33 is the re-attachment of P19.

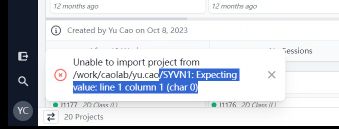

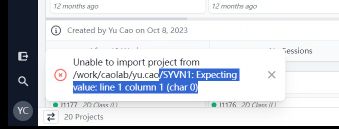

The last failed project produced a different error log:

**cryosparcm filterlog command_core -l ERROR**

2024-01-19 19:52:33,552 import_project_run ERROR | Unable to import project from /work/caolab/yu.cao/SYVN1

2024-01-19 19:52:33,552 import_project_run ERROR | Traceback (most recent call last):

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/command_core/__init__.py", line 4479, in import_project_run

2024-01-19 19:52:33,552 import_project_run ERROR | warning = import_jobs(jobs_manifest, abs_path_export_project_dir, new_project_uid, owner_user_id, notification_id) or warning

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/command_core/__init__.py", line 4722, in import_jobs

2024-01-19 19:52:33,552 import_project_run ERROR | job_doc_data = json.load(openfile, object_hook=json_util.object_hook)

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/__init__.py", line 293, in load

2024-01-19 19:52:33,552 import_project_run ERROR | return loads(fp.read(),

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/__init__.py", line 370, in loads

2024-01-19 19:52:33,552 import_project_run ERROR | return cls(**kw).decode(s)

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/decoder.py", line 337, in decode

2024-01-19 19:52:33,552 import_project_run ERROR | obj, end = self.raw_decode(s, idx=_w(s, 0).end())

2024-01-19 19:52:33,552 import_project_run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/decoder.py", line 355, in raw_decode

2024-01-19 19:52:33,552 import_project_run ERROR | raise JSONDecodeError("Expecting value", s, err.value) from None

2024-01-19 19:52:33,552 import_project_run ERROR | json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

2024-01-19 19:52:33,626 run ERROR | POST-RESPONSE-THREAD ERROR at import_project_run

2024-01-19 19:52:33,626 run ERROR | Traceback (most recent call last):

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/commandcommon.py", line 72, in run

2024-01-19 19:52:33,626 run ERROR | self.target(*self.args)

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/command_core/__init__.py", line 4479, in import_project_run

2024-01-19 19:52:33,626 run ERROR | warning = import_jobs(jobs_manifest, abs_path_export_project_dir, new_project_uid, owner_user_id, notification_id) or warning

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/cryosparc_command/command_core/__init__.py", line 4722, in import_jobs

2024-01-19 19:52:33,626 run ERROR | job_doc_data = json.load(openfile, object_hook=json_util.object_hook)

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/__init__.py", line 293, in load

2024-01-19 19:52:33,626 run ERROR | return loads(fp.read(),

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/__init__.py", line 370, in loads

2024-01-19 19:52:33,626 run ERROR | return cls(**kw).decode(s)

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/decoder.py", line 337, in decode

2024-01-19 19:52:33,626 run ERROR | obj, end = self.raw_decode(s, idx=_w(s, 0).end())

2024-01-19 19:52:33,626 run ERROR | File "/cm/shared/apps/cryosparc/cylab/cryosparc_master/deps/anaconda/envs/cryosparc_master_env/lib/python3.8/json/decoder.py", line 355, in raw_decode

2024-01-19 19:52:33,626 run ERROR | raise JSONDecodeError("Expecting value", s, err.value) from None

2024-01-19 19:52:33,626 run ERROR | json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)
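As far as I understand, `JSONDecodeError: Expecting value: line 1 column 1 (char 0)` usually means that the file passed to `json.load` is empty or does not begin with valid JSON. The traceback does not say which file `import_jobs` was reading, so below is a minimal sketch for finding candidates. It is not part of CryoSPARC: the helper name `find_unparseable_json` is hypothetical, and scanning every `*.json` file under the project directory is my assumption about where the file in question lives. It uses the plain `json` module rather than the `json_util.object_hook` seen in the traceback, which should be enough to spot empty or truncated files.

```python
import json
from pathlib import Path


def find_unparseable_json(project_dir):
    """Return (path, reason) pairs for each *.json file that cannot be parsed.

    Hypothetical helper: it walks project_dir recursively and tries to load
    every *.json file with the standard json module.
    """
    bad = []
    for path in sorted(Path(project_dir).rglob("*.json")):
        if not path.is_file():
            continue
        try:
            with open(path, "r", encoding="utf-8") as fh:
                json.load(fh)
        except (json.JSONDecodeError, UnicodeDecodeError, OSError) as exc:
            # An empty file fails with "Expecting value: line 1 column 1 (char 0)".
            bad.append((str(path), str(exc)))
    return bad


# Example call (the path is the one from the log above):
# for path, reason in find_unparseable_json("/work/caolab/yu.cao/SYVN1"):
#     print(path, "->", reason)
```

Any file this reports would be worth checking by hand, for example for a size of 0 bytes, before retrying the attachment.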

And also the screenshot

By the way, can I delete the old detached projects from the database?