Hello,

I just upgraded our labs’ workstations to cryoSPARC v4.4 after hearing about it (looking forward to trying out all the new features!). However, when I run an Extract From Micrographs job, I get the following error:

```
Error occurred while processing micrograph J2/motioncorrected/011693211652979670157_20211206_1006_A002_G000_H100_D001_patch_aligned_doseweighted.mrc
Traceback (most recent call last):
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/cudadrv/driver.py", line 3007, in add_ptx
    driver.cuLinkAddData(self.handle, input_ptx, ptx, len(ptx),
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/cudadrv/driver.py", line 352, in safe_cuda_api_call
    return self._check_cuda_python_error(fname, libfn(*args))
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/cudadrv/driver.py", line 412, in _check_cuda_python_error
    raise CudaAPIError(retcode, msg)
numba.cuda.cudadrv.driver.CudaAPIError: [CUresult.CUDA_ERROR_UNSUPPORTED_PTX_VERSION] Call to cuLinkAddData results in CUDA_ERROR_UNSUPPORTED_PTX_VERSION

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/home/cryosparc/cryosparc_worker/cryosparc_compute/jobs/pipeline.py", line 61, in exec
    return self.process(item)
  File "/home/cryosparc/cryosparc_worker/cryosparc_compute/jobs/extract/run.py", line 508, in process
    result = extraction_gpu.do_extract_particles_single_mic_gpu(mic=mic, bg_bin=bg_bin,
  File "/home/cryosparc/cryosparc_worker/cryosparc_compute/jobs/extract/extraction_gpu.py", line 161, in do_extract_particles_single_mic_gpu
    ET.patches_gpu.fill(0, stream=stream)
  File "/home/cryosparc/cryosparc_worker/cryosparc_compute/gpu/gpuarray.py", line 110, in fill
    from .elementwise.fill import fill
  File "/home/cryosparc/cryosparc_worker/cryosparc_compute/gpu/elementwise/fill.py", line 24, in <module>
    def fill(arr, x, out):
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/np/ufunc/decorators.py", line 203, in wrap
    guvec.add(fty)
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/np/ufunc/deviceufunc.py", line 475, in add
    kernel = self._compile_kernel(fnobj, sig=tuple(outertys))
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/vectorizers.py", line 241, in _compile_kernel
    return cuda.jit(sig)(fnobj)
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/decorators.py", line 133, in _jit
    disp.compile(argtypes)
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/dispatcher.py", line 928, in compile
    kernel.bind()
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/dispatcher.py", line 207, in bind
    self._codelibrary.get_cufunc()
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/codegen.py", line 184, in get_cufunc
    cubin = self.get_cubin(cc=device.compute_capability)
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/codegen.py", line 159, in get_cubin
    linker.add_ptx(ptx.encode())
  File "/home/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numba/cuda/cudadrv/driver.py", line 3010, in add_ptx
    raise LinkerError("%s\n%s" % (e, self.error_log))
numba.cuda.cudadrv.driver.LinkerError: [CUresult.CUDA_ERROR_UNSUPPORTED_PTX_VERSION] Call to cuLinkAddData results in CUDA_ERROR_UNSUPPORTED_PTX_VERSION
ptxas application ptx input, line 9; fatal : Unsupported .version 7.8; current version is '7.3'

Marking J2/motioncorrected/011693211652979670157_20211206_1006_A002_G000_H100_D001_patch_aligned_doseweighted.mrc as incomplete and continuing...
```

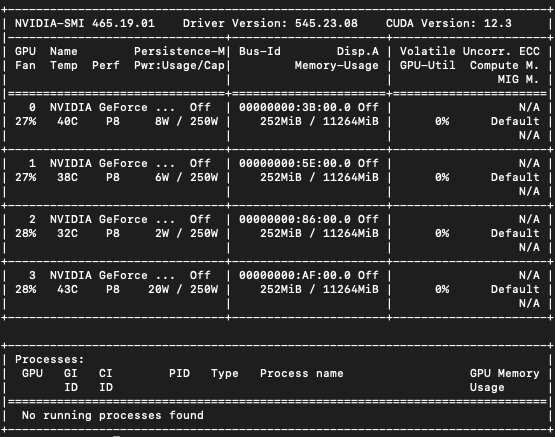

The error repeats for every micrograph the job tries to extract particles from. It looks like a CUDA incompatibility introduced by the update. Should I just try updating CUDA, or is there another solution? Thanks for the help!
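To double-check my reading of the `ptxas` line, here is a small Python sketch (my own helper, not part of cryoSPARC or Numba) that decodes it by mapping PTX ISA versions to the CUDA release that introduced each one. The `PTX_TO_CUDA` table and the `diagnose_ptx_error` name are my own and only cover CUDA 11.0 through 12.3. If I'm interpreting it correctly, the v4.4 worker emits PTX 7.8 (CUDA 11.8), while the driver's JIT linker accepts at most PTX 7.3 (CUDA 11.3). That would suggest the NVIDIA driver, rather than the CUDA toolkit, is what's too old.

```python
import re

# PTX ISA version -> CUDA toolkit release that introduced it.
# Assumption: only CUDA 11.0-12.3 are covered; extend the table as needed.
PTX_TO_CUDA = {
    (7, 0): "11.0", (7, 1): "11.1", (7, 2): "11.2", (7, 3): "11.3",
    (7, 4): "11.4", (7, 5): "11.5", (7, 6): "11.6", (7, 7): "11.7",
    (7, 8): "11.8", (8, 0): "12.0", (8, 1): "12.1", (8, 2): "12.2",
    (8, 3): "12.3",
}

# Matches the ptxas message from the log above, e.g.
# "fatal : Unsupported .version 7.8; current version is '7.3'"
PTXAS_RE = re.compile(
    r"Unsupported \.version (\d+)\.(\d+); current version is '(\d+)\.(\d+)'"
)


def diagnose_ptx_error(message):
    """Return (cuda_needed_by_ptx, cuda_max_for_driver), or None if no match."""
    m = PTXAS_RE.search(message)
    if m is None:
        return None
    major, minor, cur_major, cur_minor = (int(g) for g in m.groups())
    needed = PTX_TO_CUDA.get((major, minor), "unknown")
    supported = PTX_TO_CUDA.get((cur_major, cur_minor), "unknown")
    return needed, supported


if __name__ == "__main__":
    log = ("ptxas application ptx input, line 9; fatal : "
           "Unsupported .version 7.8; current version is '7.3'")
    needed, supported = diagnose_ptx_error(log)
    print(f"PTX needs CUDA {needed}; driver supports up to CUDA {supported}.")
```

Run on the line from my log, it prints `PTX needs CUDA 11.8; driver supports up to CUDA 11.3.`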

Best,

Kyle