Hi team.

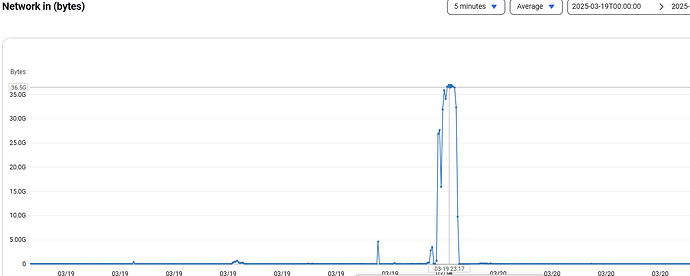

We are currently running CryoSPARC on AWS ParallelCluster, and CryoSPARC occasionally crashes. When I checked the monitoring data, I noticed a peak in incoming network traffic (network-in) of approximately 35 GB/s. During these incidents, the database log shows the following entries:

```text
2025-03-19T22:27:49.275+0000 I NETWORK [conn5669] end connection :43206 (184 connections now open)
2025-03-19T22:27:49.275+0000 I NETWORK [conn5667] end connection :43190 (183 connections now open)
2025-03-19T22:29:42.117+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 7ms
2025-03-19T22:31:45.585+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 5ms
2025-03-19T22:34:00.337+0000 I NETWORK [listener] connection accepted from #5673 (184 connections now open)
2025-03-19T22:34:00.337+0000 I NETWORK [listener] connection accepted from :49304 #5674 (185 connections now open)
2025-03-19T22:34:00.337+0000 I NETWORK [conn5673] received client metadata from :49292 conn5673: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:00.337+0000 I NETWORK [conn5674] received client metadata from :49304 conn5674: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:00.338+0000 I NETWORK [listener] connection accepted from :49314 #5675 (186 connections now open)
2025-03-19T22:34:00.338+0000 I NETWORK [listener] connection accepted from :49320 #5676 (187 connections now open)
2025-03-19T22:34:00.338+0000 I NETWORK [conn5675] received client metadata from :49314 conn5675: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:00.338+0000 I NETWORK [conn5676] received client metadata from :49320 conn5676: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:00.342+0000 I ACCESS [conn5675] Successfully authenticated as principal cryosparc_user on admin from client :49314
2025-03-19T22:34:00.342+0000 I ACCESS [conn5676] Successfully authenticated as principal cryosparc_user on admin from client :49320
2025-03-19T22:34:12.624+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 4ms
2025-03-19T22:34:27.600+0000 I NETWORK [listener] connection accepted from :37404 #5677 (188 connections now open)
2025-03-19T22:34:27.600+0000 I NETWORK [listener] connection accepted from :37418 #5678 (189 connections now open)
2025-03-19T22:34:27.601+0000 I NETWORK [conn5677] received client metadata from :37404 conn5677: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:27.601+0000 I NETWORK [conn5678] received client metadata from :37418 conn5678: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:27.601+0000 I NETWORK [listener] connection accepted from :37434 #5679 (190 connections now open)
2025-03-19T22:34:27.601+0000 I NETWORK [listener] connection accepted from :37446 #5680 (191 connections now open)
2025-03-19T22:34:27.601+0000 I NETWORK [conn5679] received client metadata from :37434 conn5679: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:27.601+0000 I NETWORK [conn5680] received client metadata from :37446 conn5680: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:34:27.605+0000 I ACCESS [conn5679] Successfully authenticated as principal cryosparc_user on admin from client :37434
2025-03-19T22:34:27.605+0000 I ACCESS [conn5680] Successfully authenticated as principal cryosparc_user on admin from client :37446
2025-03-19T22:34:48.709+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 2ms
2025-03-19T22:35:24.369+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 5ms
2025-03-19T22:35:41.732+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 6ms
2025-03-19T22:35:59.853+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 4ms
2025-03-19T22:36:16.984+0000 I NETWORK [listener] connection accepted from :42606 #5681 (192 connections now open)
2025-03-19T22:36:16.986+0000 I NETWORK [conn5681] received client metadata from :42606 conn5681: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:36:16.987+0000 I NETWORK [listener] connection accepted from :42620 #5682 (193 connections now open)
2025-03-19T22:36:16.987+0000 I NETWORK [conn5682] received client metadata from :42620 conn5682: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:36:16.997+0000 I ACCESS [conn5682] Successfully authenticated as principal cryosparc_user on admin from client :42620
2025-03-19T22:36:17.575+0000 I NETWORK [conn5682] end connection :42620 (191 connections now open)
2025-03-19T22:36:17.575+0000 I NETWORK [conn5681] end connection :42606 (192 connections now open)
2025-03-19T22:37:43.292+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 8ms
2025-03-19T22:39:24.280+0000 I COMMAND [conn45] command meteor.workspaces command: find { find: "workspaces", filter: { project_uid: "P76", session_uid: "S3" }, projection: { exposure_groups: 1, file_engine_last_run: 1 }, limit: 1, singleBatch: true, lsid: { id: UUID("6d5362ab-f538-437d-8ebd-58942377ac40") }, $clusterTime: { clusterTime: Timestamp(1742423964, 3), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } planSummary: IXSCAN { project_uid: 1, session_uid: 1 } keysExamined:1 docsExamined:1 cursorExhausted:1 numYields:1 nreturned:1 reslen:880 locks:{ Global: { acquireCount: { r: 4 } }, Database: { acquireCount: { r: 2 } }, Collection: { acquireCount: { r: 2 } } } protocol:op_msg 140ms
2025-03-19T22:39:25.604+0000 I COMMAND [conn5633] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423958, 4), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 142ms
2025-03-19T22:39:27.642+0000 I COMMAND [conn30] command local.oplog.rs command: getMore { getMore: 8615417076, collection: "oplog.rs", batchSize: 1000, lsid: { id: UUID("") }, $clusterTime: { clusterTime: Timestamp(1742423963, 46), signature: { hash: BinData(0, ), keyId: } }, $db: "local" } originatingCommand: { find: "oplog.rs", filter: { ns: /^(?:meteor\.|admin\.\$cmd)/, $or: [ { op: { $in: [ "i", "u", "d" ] } }, { op: "c", o.drop: { $exists: true } }, { op: "c", o.dropDatabase: 1 }, { op: "c", o.applyOps: { $exists: true } } ], ts: { $gt: Timestamp(1739901706, 11) } }, tailable: true, oplogReplay: true, awaitData: true, lsid: { id: UUID("838be17a-cf97-4841-843f-872a5f3052e6") }, $clusterTime: { clusterTime: Timestamp(1739901710, 80), signature: { hash: BinData(0, 64C3AFDB895F18A43778BFAE149236D529255727), keyId: } }, $db: "local" } planSummary: COLLSCAN cursorid:8615417076 keysExamined:0 docsExamined:8 numYields:2 nreturned:8 reslen:4344 locks:{ Global: { acquireCount: { r: 6 } }, Database: { acquireCount: { r: 3 } }, oplog: { acquireCount: { r: 3 } } } protocol:op_msg 1053ms
2025-03-19T22:39:27.735+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 12, after asserts: 12, after backgroundFlushing: 12, after connections: 12, after dur: 12, after extra_info: 22, after globalLock: 32, after locks: 42, after logicalSessionRecordCache: 42, after network: 52, after opLatencies: 72, after opReadConcernCounters: 72, after opcounters: 72, after opcountersRepl: 72, after oplogTruncation: 127, after repl: 258, after storageEngine: 641, after tcmalloc: 1160, after transactions: 1379, after wiredTiger: 2312, at end: 2425 }
2025-03-19T22:39:27.875+0000 I COMMAND [conn5576] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423956, 6), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 159ms
2025-03-19T22:39:27.875+0000 I COMMAND [conn5575] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423964, 3), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 185ms
2025-03-19T22:39:28.500+0000 I COMMAND [conn2017] command meteor.sched_queued command: find { find: "sched_queued", filter: {}, projection: { queued_job_hash: 1, last_scheduled_at: 1 }, lsid: { id: UUID("e52e100a-4951-4451-9596-0f10c946b735") }, $clusterTime: { clusterTime: Timestamp(1742423963, 35), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } planSummary: COLLSCAN keysExamined:0 docsExamined:0 cursorExhausted:1 numYields:1 nreturned:0 reslen:217 locks:{ Global: { acquireCount: { r: 4 } }, Database: { acquireCount: { r: 2 } }, Collection: { acquireCount: { r: 2 } } } protocol:op_msg 364ms
2025-03-19T22:39:28.964+0000 I COMMAND [conn5675] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 5000, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 3535ms
2025-03-19T22:39:28.964+0000 I WRITE [conn5638] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 0 THR 1 NUM 3500 TOTAL 39.232657 ELAPSED 218.80523 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 numYields:2 locks:{ Global: { acquireCount: { r: 5, w: 5 } }, Database: { acquireCount: { w: 5 } }, Collection: { acquireCount: { w: 4 } }, oplog: { acquireCount: { w: 1 } } } 3560ms
2025-03-19T22:39:28.965+0000 I COMMAND [conn5679] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 5274, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 3535ms
2025-03-19T22:39:30.761+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 15, after asserts: 70, after backgroundFlushing: 81, after connections: 81, after dur: 81, after extra_info: 91, after globalLock: 101, after locks: 111, after logicalSessionRecordCache: 157, after network: 303, after opLatencies: 352, after opReadConcernCounters: 352, after opcounters: 379, after opcountersRepl: 379, after oplogTruncation: 389, after repl: 527, after storageEngine: 537, after tcmalloc: 547, after transactions: 547, after wiredTiger: 601, at end: 2091 }
2025-03-19T22:39:30.876+0000 I COMMAND [conn5678] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423958, 10), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 1250ms
2025-03-19T22:39:30.876+0000 I COMMAND [conn5580] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423963, 11), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 1250ms
2025-03-19T22:39:30.876+0000 I COMMAND [conn5408] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423957, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 1315ms
2025-03-19T22:39:30.876+0000 I COMMAND [conn5673] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 1315ms
2025-03-19T22:39:31.024+0000 I COMMAND [conn5638] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 6612, $clusterTime: { clusterTime: Timestamp(1742423958, 4), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 5, w: 5 } }, Database: { acquireCount: { w: 5 } }, Collection: { acquireCount: { w: 4 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 5555ms
2025-03-19T22:39:31.024+0000 I COMMAND [conn5581] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 50097, $clusterTime: { clusterTime: Timestamp(1742423963, 11), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 3121ms
2025-03-19T22:39:31.157+0000 I WRITE [conn5664] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 1 THR 0 NUM 2500 TOTAL 28.977865 ELAPSED 214.20418 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 numYields:2 locks:{ Global: { acquireCount: { r: 5, w: 5 } }, Database: { acquireCount: { w: 5 } }, Collection: { acquireCount: { w: 4 } }, oplog: { acquireCount: { w: 1 } } } 3288ms
2025-03-19T22:39:32.761+0000 I COMMAND [conn5632] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423959, 139), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 276ms
2025-03-19T22:39:32.761+0000 I COMMAND [conn5579] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423958, 16), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 276ms
2025-03-19T22:39:33.957+0000 I COMMAND [conn5664] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 258, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 5, w: 5 } }, Database: { acquireCount: { w: 5 } }, Collection: { acquireCount: { w: 4 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 5775ms
2025-03-19T22:39:34.148+0000 I COMMAND [conn5659] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 104ms
2025-03-19T22:39:34.148+0000 I COMMAND [conn2001] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423963, 35), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 585ms
2025-03-19T22:39:34.148+0000 I COMMAND [conn5674] command admin.$cmd command: isMaster { ismaster: 1, helloOk: true, $clusterTime: { clusterTime: Timestamp(1742423961, 6), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 211ms
2025-03-19T22:39:34.206+0000 I WRITE [conn5635] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 1 THR 1 NUM 3500 TOTAL 39.447960 ELAPSED 218.77935 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:2 numYields:4 locks:{ Global: { acquireCount: { r: 7, w: 7 } }, Database: { acquireCount: { w: 7 } }, Collection: { acquireCount: { w: 6 } }, oplog: { acquireCount: { w: 1 } } } 8845ms
2025-03-19T22:39:34.240+0000 I WRITE [conn5660] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 0 THR 1 NUM 2500 TOTAL 27.690359 ELAPSED 214.20617 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:1 numYields:3 locks:{ Global: { acquireCount: { r: 6, w: 6 } }, Database: { acquireCount: { w: 6 } }, Collection: { acquireCount: { w: 5 } }, oplog: { acquireCount: { w: 1 } } } 6462ms
2025-03-19T22:39:34.271+0000 I WRITE [conn5638] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 0 THR 1 NUM 4000 TOTAL 41.080067 ELAPSED 227.69644 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 numYields:1 locks:{ Global: { acquireCount: { r: 4, w: 4 } }, Database: { acquireCount: { w: 4 } }, Collection: { acquireCount: { w: 3 } }, oplog: { acquireCount: { w: 1 } } } 143ms
2025-03-19T22:39:34.271+0000 I WRITE [conn5663] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 1 THR 1 NUM 3000 TOTAL 27.802154 ELAPSED 216.42389 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:2 numYields:4 locks:{ Global: { acquireCount: { r: 7, w: 7 } }, Database: { acquireCount: { w: 7 } }, Collection: { acquireCount: { w: 6 } }, oplog: { acquireCount: { w: 1 } } } 3427ms
2025-03-19T22:39:34.271+0000 I WRITE [conn5662] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 0 THR 0 NUM 2500 TOTAL 28.242600 ELAPSED 214.18415 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:4 numYields:6 locks:{ Global: { acquireCount: { r: 9, w: 9 } }, Database: { acquireCount: { w: 9 } }, Collection: { acquireCount: { w: 8 } }, oplog: { acquireCount: { w: 1 } } } 6493ms
2025-03-19T22:39:34.271+0000 I WRITE [conn5637] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 0 THR 0 NUM 4000 TOTAL 38.821593 ELAPSED 218.79305 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:4 numYields:6 locks:{ Global: { acquireCount: { r: 9, w: 9 } }, Database: { acquireCount: { w: 9 } }, Collection: { acquireCount: { w: 8 } }, oplog: { acquireCount: { w: 1 } } } 8918ms
2025-03-19T22:39:34.271+0000 I WRITE [conn5636] update meteor.events command: { q: { _id: ObjectId('') }, u: { $set: { text: "-- DEV 1 THR 0 NUM 3500 TOTAL 37.079320 ELAPSED 218.80479 --\n" } }, multi: false, upsert: false } planSummary: IDHACK keysExamined:1 docsExamined:1 nMatched:1 nModified:1 writeConflicts:5 numYields:7 locks:{ Global: { acquireCount: { r: 10, w: 10 } }, Database: { acquireCount: { w: 10 } }, Collection: { acquireCount: { w: 9 } }, oplog: { acquireCount: { w: 1 } } } 8918ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5638] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 6613, $clusterTime: { clusterTime: Timestamp(1742423967, 3), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 4, w: 4 } }, Database: { acquireCount: { w: 4 } }, Collection: { acquireCount: { w: 3 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 166ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5577] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 50504, $clusterTime: { clusterTime: Timestamp(1742423967, 1), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 5344ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5636] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 8531, $clusterTime: { clusterTime: Timestamp(1742423958, 4), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 10, w: 10 } }, Database: { acquireCount: { w: 10 } }, Collection: { acquireCount: { w: 9 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 8965ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5635] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 6254, $clusterTime: { clusterTime: Timestamp(1742423958, 4), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 7, w: 7 } }, Database: { acquireCount: { w: 7 } }, Collection: { acquireCount: { w: 6 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 8965ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5662] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 154, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 9, w: 9 } }, Database: { acquireCount: { w: 9 } }, Collection: { acquireCount: { w: 8 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 6518ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5663] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 716, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 7, w: 7 } }, Database: { acquireCount: { w: 7 } }, Collection: { acquireCount: { w: 6 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 5344ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5637] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 10660, $clusterTime: { clusterTime: Timestamp(1742423958, 4), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 9, w: 9 } }, Database: { acquireCount: { w: 9 } }, Collection: { acquireCount: { w: 8 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 8965ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5660] command meteor.$cmd command: update { update: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 2140, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } numYields:0 reslen:229 locks:{ Global: { acquireCount: { r: 6, w: 6 } }, Database: { acquireCount: { w: 6 } }, Collection: { acquireCount: { w: 5 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 6518ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5675] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 5001, $clusterTime: { clusterTime: Timestamp(1742423967, 3), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 3159ms
2025-03-19T22:39:34.283+0000 I COMMAND [conn5679] command meteor.events command: insert { insert: "events", ordered: true, lsid: { id: UUID("") }, txnNumber: 5275, $clusterTime: { clusterTime: Timestamp(1742423967, 3), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } ninserted:1 keysInserted:2 numYields:0 reslen:214 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { w: 3 } }, Collection: { acquireCount: { w: 2 } }, oplog: { acquireCount: { w: 1 } } } protocol:op_msg 3159ms
2025-03-19T22:39:34.284+0000 I COMMAND [conn45] command meteor.workspaces command: find { find: "workspaces", filter: { $or: [ { rtp_childs: { $exists: true, $ne: [] } }, { rtp_workers: { $exists: true, $ne: {} } } ] }, projection: { project_uid: 1, session_uid: 1, rtp_childs: 1, rtp_workers: 1 }, lsid: { id: UUID("") }, $clusterTime: { clusterTime: Timestamp(1742423964, 8), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } planSummary: IXSCAN { rtp_childs: 1 }, IXSCAN { rtp_workers: 1 } keysExamined:376 docsExamined:374 cursorExhausted:1 numYields:10 nreturned:18 reslen:2823 locks:{ Global: { acquireCount: { r: 22 } }, Database: { acquireCount: { r: 11 } }, Collection: { acquireCount: { r: 11 } } } protocol:op_msg 9871ms
2025-03-19T22:39:34.298+0000 I COMMAND [conn10] command admin.$cmd command: isMaster { ismaster: true, $clusterTime: { clusterTime: Timestamp(1742423956, 6), signature: { hash: BinData(0, ), keyId: } }, $db: "admin" } numYields:0 reslen:639 locks:{} protocol:op_msg 130ms
2025-03-19T22:39:34.435+0000 I NETWORK [listener] connection accepted from 127.0.0.1:49824 #5683 (192 connections now open)
2025-03-19T22:39:34.486+0000 I NETWORK [conn5683] received client metadata from 127.0.0.1:49824 conn5683: { driver: { name: "PyMongo", version: "4.8.0" }, os: { type: "Linux", name: "Linux", architecture: "x86_64", version: "5.15.0-1062-aws" }, platform: "CPython 3.10.14.final.0" }
2025-03-19T22:39:34.512+0000 I COMMAND [conn39] command meteor.exposures command: aggregate { aggregate: "exposures", pipeline: [ { $match: { project_uid: "P76", session_uid: "S3", stage: { $in: [ "thumbs", "ctf", "pick", "extract", "extract_manual", "ready" ] } } }, { $project: { _id: 1 } }, { $count: "total_thumbs" } ], cursor: {}, lsid: { id: UUID("") }, $clusterTime: { clusterTime: Timestamp(1742423974, 28), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } planSummary: IXSCAN { project_uid: 1, session_uid: 1 } keysExamined:1909 docsExamined:1909 cursorExhausted:1 numYields:15 nreturned:1 reslen:240 locks:{ Global: { acquireCount: { r: 34 } }, Database: { acquireCount: { r: 17 } }, Collection: { acquireCount: { r: 17 } } } protocol:op_msg 141ms
2025-03-19T22:39:34.613+0000 I ACCESS [conn5683] Successfully authenticated as principal cryosparc_user on admin from client 127.0.0.1:49824
2025-03-19T22:39:34.683+0000 I STORAGE [WT RecordStoreThread: local.oplog.rs] WiredTiger record store oplog truncation finished in: 28ms
2025-03-19T22:39:34.690+0000 I COMMAND [conn39] command meteor.exposures command: aggregate { aggregate: "exposures", pipeline: [ { $match: { project_uid: "P76", session_uid: "S3", in_progress: true } }, { $project: { _id: 1 } }, { $count: "in_progress" } ], cursor: {}, lsid: { id: UUID("") }, $clusterTime: { clusterTime: Timestamp(1742423974, 92), signature: { hash: BinData(0, ), keyId: } }, $db: "meteor" } planSummary: IXSCAN { project_uid: 1, session_uid: 1 } keysExamined:1909 docsExamined:1909 cursorExhausted:1 numYields:14 nreturned:1 reslen:239 locks:{ Global: { acquireCount: { r: 32 } }, Database: { acquireCount: { r: 16 } }, Collection: { acquireCount: { r: 16 } } } protocol:op_msg 128ms
2025-03-19T22:39:39.333+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 97, after asserts: 333, after backgroundFlushing: 486, after connections: 701, after dur: 895, after extra_info: 1102, after globalLock: 1291, after locks: 1519, after logicalSessionRecordCache: 1567, after network: 1711, after opLatencies: 1991, after opReadConcernCounters: 2115, after opcounters: 2322, after opcountersRepl: 2424, after oplogTruncation: 3124, after repl: 3815, after storageEngine: 3882, after tcmalloc: 3933, after transactions: 3943, after wiredTiger: 3980, at end: 4062 }
```
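The three `serverStatus was very slow` entries from the `[ftdc]` thread can be broken down a little further. Below is a minimal Python sketch, assuming (as the monotonically increasing numbers suggest) that each `after <section>` value is the cumulative number of milliseconds since `serverStatus` collection started, so the time spent in one section is its value minus the previous one. The function names `section_costs` and `slowest_sections` are hypothetical helpers, not part of CryoSPARC or MongoDB.

```python
import re
from collections.abc import Iterator

# Matches the "after <section>: <ms>" and "at end: <ms>" pairs printed in the
# [ftdc] "serverStatus was very slow" log message.
TIMING_RE = re.compile(r"(after [A-Za-z_]+|at end): (\d+)")


def section_costs(line: str) -> list[tuple[str, int]]:
    """Return (section, milliseconds spent in that section) for one log line.

    Assumes every logged value is cumulative time since serverStatus started,
    so a section's own cost is its value minus the previous value.
    """
    costs = []
    previous = 0
    for label, value in TIMING_RE.findall(line):
        cumulative = int(value)
        costs.append((label.removeprefix("after "), cumulative - previous))
        previous = cumulative
    return costs


def slowest_sections(log_path: str, top: int = 3) -> Iterator[tuple[str, list[tuple[str, int]]]]:
    """Yield (timestamp, the `top` most expensive sections) for each slow serverStatus entry."""
    with open(log_path, encoding="utf-8") as log:
        for line in log:
            if "serverStatus was very slow" not in line:
                continue
            timestamp = line.split(" ", 1)[0]
            ranked = sorted(section_costs(line), key=lambda c: c[1], reverse=True)
            yield timestamp, ranked[:top]
```

Pointed at a copy of the database log (any path works), `slowest_sections` attributes the 22:39:27.735 entry mostly to `wiredTiger` (933 ms) and `tcmalloc` (519 ms). In the 22:39:39.333 entry, the 4062 ms total is instead spread across nearly every section, with the largest being `oplogTruncation` (700 ms) and `repl` (691 ms). That pattern would be more consistent with the whole `mongod` process being stalled than with a single subsystem being slow.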

Has anyone encountered a similar issue, or does anyone have a solution for this problem?
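A note on the ~35 GB/s figure: if the monitoring graph comes from the EC2 `NetworkIn` metric in CloudWatch (an assumption; the post does not say), AWS reports that metric as the number of bytes received during each period, not as a per-second rate. AWS's documentation suggests dividing the `Sum` statistic by 300 for basic (five-minute) monitoring, or by 60 for detailed (one-minute) monitoring. At a five-minute period, a 35 GB datapoint would therefore be roughly 117 MB/s. Below is a minimal boto3 sketch under that assumption; the helper names `fetch_network_in` and `to_bytes_per_second` are hypothetical.

```python
from datetime import datetime


def to_bytes_per_second(datapoints: list[dict], period_s: int) -> list[tuple[datetime, float]]:
    """Turn CloudWatch NetworkIn "Sum" datapoints (bytes per period) into bytes/s, sorted by time."""
    return sorted((point["Timestamp"], point["Sum"] / period_s) for point in datapoints)


def fetch_network_in(instance_id: str, start: datetime, end: datetime, period_s: int = 300) -> list[dict]:
    """Fetch NetworkIn Sum datapoints for one EC2 instance (requires AWS credentials)."""
    import boto3  # imported lazily so to_bytes_per_second works without boto3 installed

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="NetworkIn",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=start,
        EndTime=end,
        Period=period_s,
        Statistics=["Sum"],
    )
    return response["Datapoints"]
```

For example, call `to_bytes_per_second(fetch_network_in(instance_id, start, end, 300), 300)` with the ID of the instance running the CryoSPARC master and timezone-aware `datetime` bounds around 2025-03-19 22:39 UTC. If detailed monitoring is enabled, pass 60 for both periods instead.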