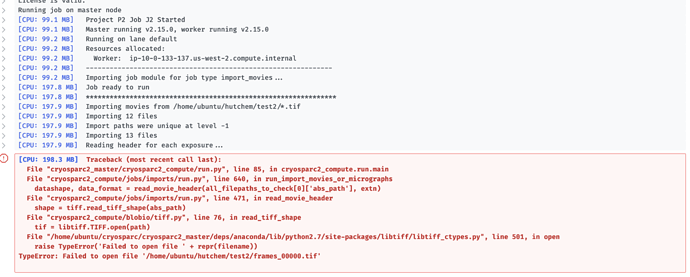

Hi, I am trying to set up cryoSPARC in the AWS cloud on a p2.xlarge EC2 instance with a 10 TB EBS volume attached and the raw data stored remotely in an S3 bucket. The installation ran to completion, and I was able to open the cryoSPARC web app and build an import movies job. But it seems that cryoSPARC automatically unmounts the S3 bucket, and at different stages: either while reading the *.tif files or while reading the gain reference file. I did make sure the bucket was mounted prior to queuing the job. I am not sure whether it is a cryoSPARC problem or an AWS s3fs problem. Here is an attachment from the cryoSPARC log view. Thank you so much for looking into the matter.

Hi @Ricky,

Thanks for reporting.

How did you find out that the S3 bucket was unmounted?

Can you try opening the file manually outside of cryoSPARC on the system?
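
For example, a quick check along these lines could tell you whether the mount point is still mounted and whether a file on it can be read. This is only a minimal sketch: the function name `check_mounted_file` and the paths `/mnt/s3bucket` and `/mnt/s3bucket/movies/example.tif` are hypothetical placeholders, so substitute your own s3fs mount point and one of your movie or gain reference files.

```python
import os


def check_mounted_file(mount_point, file_path, nbytes=1024):
    """Return (is_mounted, bytes_read, error) for a file under a mount point.

    bytes_read is None if the file could not be read; error is None on success.
    """
    # os.path.ismount reports whether the directory is currently a mount point.
    is_mounted = os.path.ismount(mount_point)
    try:
        # Read only the first few bytes; enough to prove the file is reachable.
        with open(file_path, "rb") as fh:
            data = fh.read(nbytes)
        return is_mounted, len(data), None
    except OSError as exc:
        # Covers missing files and I/O errors from a dropped FUSE mount.
        return is_mounted, None, str(exc)


if __name__ == "__main__":
    # Hypothetical paths: replace with your s3fs mount point and a real file.
    mounted, nread, err = check_mounted_file(
        "/mnt/s3bucket", "/mnt/s3bucket/movies/example.tif"
    )
    print(f"mounted={mounted} bytes_read={nread} error={err}")
```

Running it once before queuing the job and again after the job fails should show whether the bucket dropped in between.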

Hi @stephan, thanks for checking. The issue is resolved. It was caused by a bug in the newest version of s3fs.

After applying the patch, the problem went away.

Bug in the new s3fs version: https://github.com/s3fs-fuse/s3fs-fuse/issues/1245.
