Hi, the last command you provided showed us that the worker had not updated. Following the “Manual Cluster Updates” section of the installation instructions seems to have resolved the issue. I am checking with the user whether any problems remain, and I will update this thread if there are further problems that we either resolve or need more support on. I have copied the previously requested details below, even though they may no longer be necessary, to make this thread more searchable for future users experiencing this problem.

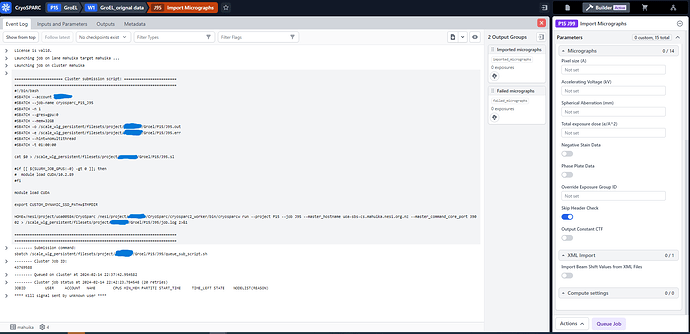

Here is an image from the user showing the empty “Compute settings” near the bottom right corner.

It seems I can’t upload text files, so here are the logs for one of the jobs that failed after the update:

slurmd-logs

Common labels: {"app":"slurmd","cluster":"mahuika","node":"wbg002"}

Line limit: 1000

Total bytes processed: "207 kB"

2024-02-13T13:01:34+13:00 [43721996.extern] done with job

2024-02-13T13:01:34+13:00 [43721996.extern] Sent signal 15 to StepId=43721996.extern

2024-02-13T13:01:34+13:00 [43721996.extern] Sent signal 18 to StepId=43721996.extern

2024-02-13T13:01:34+13:00 [43721996.batch] done with job

2024-02-13T13:01:34+13:00 [43721996.batch] job 43721996 completed with slurm_rc = 0, job_rc = 256

2024-02-13T13:01:34+13:00 [43721996.batch] task 0 (48431) exited with exit code 1.

2024-02-13T13:01:12+13:00 [43721996.batch] task 0 (48431) started 2024-02-13T00:01:12

2024-02-13T13:01:12+13:00 [43721996.batch] starting 1 tasks

2024-02-13T13:01:12+13:00 [43721996.batch] debug levels are stderr='error', logfile='verbose', syslog='verbose'

2024-02-13T13:01:12+13:00 [43721996.batch] task/cgroup: _memcg_initialize: step: alloc=8192MB mem.limit=8192MB memsw.limit=8192MB job_swappiness=18446744073709551614

2024-02-13T13:01:12+13:00 [43721996.batch] task/cgroup: _memcg_initialize: job: alloc=8192MB mem.limit=8192MB memsw.limit=8192MB job_swappiness=18446744073709551614

2024-02-13T13:01:12+13:00 [43721996.batch] topology/tree: init: topology tree plugin loaded

2024-02-13T13:01:12+13:00 [43721996.batch] route/topology: init: route topology plugin loaded

2024-02-13T13:01:12+13:00 [43721996.batch] cred/munge: init: Munge credential signature plugin loaded

2024-02-13T13:01:12+13:00 [43721996.batch] task/affinity: init: task affinity plugin loaded with CPU mask 0xffffffffffffffffff

2024-02-13T13:01:12+13:00 Launching batch job 43721996 for UID 66500573

2024-02-13T13:01:12+13:00 [43721996.extern] task/cgroup: _memcg_initialize: step: alloc=8192MB mem.limit=8192MB memsw.limit=8192MB job_swappiness=18446744073709551614

2024-02-13T13:01:12+13:00 [43721996.extern] task/cgroup: _memcg_initialize: job: alloc=8192MB mem.limit=8192MB memsw.limit=8192MB job_swappiness=18446744073709551614

2024-02-13T13:01:12+13:00 [43721996.extern] topology/tree: init: topology tree plugin loaded

2024-02-13T13:01:12+13:00 [43721996.extern] route/topology: init: route topology plugin loaded

2024-02-13T13:01:12+13:00 [43721996.extern] cred/munge: init: Munge credential signature plugin loaded

2024-02-13T13:01:12+13:00 [43721996.extern] task/affinity: init: task affinity plugin loaded with CPU mask 0xffffffffffffffffff

2024-02-13T13:01:12+13:00 task/affinity: batch_bind: job 43721996 CPU final HW mask for node: 0x0003C00000003C0000

2024-02-13T13:01:12+13:00 task/affinity: batch_bind: job 43721996 CPU input mask for node: 0x0000000FF000000000

2024-02-13T13:01:12+13:00 task/affinity: task_p_slurmd_batch_request: task_p_slurmd_batch_request: 43721996

slurmctld-logs

Common labels: {"app":"slurmctld","cluster":"mahuika"}

Line limit: 1000

Total bytes processed: "1.36 MB"

2024-02-13T13:01:34+13:00 _job_complete: JobId=43721996 done

2024-02-13T13:01:34+13:00 _job_complete: JobId=43721996 WEXITSTATUS 1

2024-02-13T13:01:12+13:00 sched: Allocate JobId=43721996 NodeList=wbg002 #CPUs=8 Partition=gpu

2024-02-13T13:01:11+13:00 _slurm_rpc_submit_batch_job: JobId=43721996 InitPrio=1452 usec=5980
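For anyone reading the two logs side by side: slurmd's "job_rc = 256" and slurmctld's "WEXITSTATUS 1" appear to describe the same failure, since a POSIX wait status keeps the exit code in its second byte. Here is a small Python sketch of that arithmetic. The helper name is my own and is not something from Slurm.

```python
def exit_code_from_wait_status(status: int) -> int:
    """Extract the exit code from a raw POSIX wait status.

    Hypothetical helper for illustration only.
    """
    # The low 7 bits are non-zero when the process was signalled or stopped,
    # in which case there is no ordinary exit code to report.
    if status & 0x7F:
        raise ValueError("process did not exit normally")
    # For a normal exit, the exit code is stored in bits 8-15.
    return (status >> 8) & 0xFF


# job_rc value reported by slurmd for job 43721996
print(exit_code_from_wait_status(256))  # prints 1
```

So the batch script itself exited with code 1, which matches the "task 0 (48431) exited with exit code 1" line in the slurmd log.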

And here are the outputs of the requested commands (note: the third command you requested hangs with “Waiting for data... (interrupt to abort)” at the end):

[User ~]$ /nesi/project/PROJECTCODE/CryoSparc/cryosparc2_master/bin/cryosparcm cli "get_scheduler_targets()"

[{'cache_path': '/dev/shm/jobs/43615220', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'custom_var_names': [], 'desc': None, 'hostname': 'mahuika', 'lane': 'mahuika', 'name': 'mahuika', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#SBATCH --account PROJECTCODE\n#SBATCH --job-name cryosparc_{{ project_uid }}_{{ job_uid }}\n#SBATCH -n {{ num_cpu }}\n#SBATCH --gres=gpu:{{ num_gpu }}\n#SBATCH --mem={{ (ram_gb)|int }}GB\n#SBATCH -o {{ job_dir_abs }}.out\n#SBATCH -e {{ job_dir_abs }}.err\n#SBATCH --hint=nomultithread\n#SBATCH -t 01:00:00\n\ncat $0 > {{ job_dir_abs }}.sl\n\n#if [[ ${SLURM_JOB_GPUS:-0} -gt 0 ]]; then\n# module load CUDA/10.2.89\n#fi\n\nmodule load CUDA\n\nexport CUSTOM_DYNAMIC_SSD_PATH=$TMPDIR\n\nHOME=/nesi/project/PROJECTCODE/CryoSparc {{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'mahuika', 'tpl_vars': ['command', 'cluster_job_id', 'run_cmd', 'job_uid', 'project_uid', 'job_dir_abs', 'num_gpu', 'num_cpu', 'ram_gb'], 'type': 'cluster', 'worker_bin_path': '/nesi/project/PROJECTCODE/CryoSparc/cryosparc2_worker/bin/cryosparcw'}]

[User ~]$ /nesi/project/PROJECTCODE/CryoSparc/cryosparc2_master/bin/cryosparcm cli "get_job('P15', 'J89', 'version', 'job_type', 'instance_information', 'cluster_job_id', 'instance_information', 'resources_allocated')"

{'_id': '65caadb7e2b78fba11a17c82', 'cluster_job_id': '43721591', 'instance_information': {}, 'job_type': 'volume_tools', 'project_uid': 'P15', 'resources_allocated': {'fixed': {'SSD': False}, 'hostname': 'mahuika', 'lane': 'mahuika', 'lane_type': 'cluster', 'license': False, 'licenses_acquired': 0, 'slots': {'CPU': [0, 1], 'GPU': [], 'RAM': [0]}, 'target': {'cache_path': '/dev/shm/jobs/43615220', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'custom_var_names': [], 'desc': None, 'hostname': 'mahuika', 'lane': 'mahuika', 'name': 'mahuika', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#SBATCH --account PROJECTCODE\n#SBATCH --job-name cryosparc_{{ project_uid }}_{{ job_uid }}\n#SBATCH -n {{ num_cpu }}\n#SBATCH --gres=gpu:{{ num_gpu }}\n#SBATCH --mem={{ (ram_gb)|int }}GB\n#SBATCH -o {{ job_dir_abs }}.out\n#SBATCH -e {{ job_dir_abs }}.err\n#SBATCH --hint=nomultithread\n#SBATCH -t 01:00:00\n\ncat $0 > {{ job_dir_abs }}.sl\n\n#if [[ ${SLURM_JOB_GPUS:-0} -gt 0 ]]; then\n# module load CUDA/10.2.89\n#fi\n\nmodule load CUDA\n\nexport CUSTOM_DYNAMIC_SSD_PATH=$TMPDIR\n\nHOME=/nesi/project/PROJECTCODE/CryoSparc {{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'mahuika', 'tpl_vars': ['command', 'cluster_job_id', 'run_cmd', 'job_uid', 'project_uid', 'job_dir_abs', 'num_gpu', 'num_cpu', 'ram_gb'], 'type': 'cluster', 'worker_bin_path': '/nesi/project/PROJECTCODE/CryoSparc/cryosparc2_worker/bin/cryosparcw'}}, 'uid': 'J89', 'version': 'v4.4.1'}

[User ~]$ /nesi/project/PROJECTCODE/CryoSparc/cryosparc2_master/bin/cryosparcm joblog P15 J89

================= CRYOSPARCW ======= 2024-02-12 23:46:59.830681 =========

Project P15 Job J89

Master uoa-sbs-cs.mahuika.nesi.org.nz Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 25341

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "cryosparc_worker/cryosparc_compute/run.py", line 160, in cryosparc_compute.run.run

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/cryosparc_compute/jobs/runcommon.py", line 93, in connect

assert cli.test_connection(), "Job could not connect to master instance at %s:%s" % (master_hostname, str(master_command_core_port))

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/cryosparc_compute/client.py", line 59, in func

assert False, res['error']

AssertionError: {'code': 403, 'data': None, 'message': 'ServerError: Authentication failed - License-ID request header missing.\n This may indicate that cryosparc_worker did not update,\n cryosparc_worker/config.sh is missing a CRYOSPARC_LICENSE_ID entry,\n or CRYOSPARC_LICENSE_ID is not present in your environment.\n See https://guide.cryosparc.com/setup-configuration-and-management/hardware-and-system-requirements#command-api-security for more details.\n', 'name': 'ServerError'}

Process Process-1:

Traceback (most recent call last):

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 297, in _bootstrap

self.run()

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 99, in run

self._target(*self._args, **self._kwargs)

File "cryosparc_worker/cryosparc_compute/run.py", line 31, in cryosparc_compute.run.main

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/cryosparc_compute/jobs/runcommon.py", line 93, in connect

assert cli.test_connection(), "Job could not connect to master instance at %s:%s" % (master_hostname, str(master_command_core_port))

File "/scale_wlg_persistent/filesets/project/PROJECTCODE/CryoSparc/cryosparc2_worker/cryosparc_compute/client.py", line 59, in func

assert False, res['error']

AssertionError: {'code': 403, 'data': None, 'message': 'ServerError: Authentication failed - License-ID request header missing.\n This may indicate that cryosparc_worker did not update,\n cryosparc_worker/config.sh is missing a CRYOSPARC_LICENSE_ID entry,\n or CRYOSPARC_LICENSE_ID is not present in your environment.\n See https://guide.cryosparc.com/setup-configuration-and-management/hardware-and-system-requirements#command-api-security for more details.\n', 'name': 'ServerError'}

Waiting for data... (interrupt to abort)
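The 403 message above lists a missing CRYOSPARC_LICENSE_ID entry in cryosparc_worker/config.sh as one possible cause. In case it helps anyone landing here, below is a rough Python sketch for checking that. It assumes the entry is written as a shell line of the form `export CRYOSPARC_LICENSE_ID="..."`. The function name and the sample text are made up for illustration, and none of this is part of CryoSPARC itself.

```python
def has_license_id(config_text: str) -> bool:
    """Return True if an uncommented line sets a non-empty CRYOSPARC_LICENSE_ID.

    Hypothetical helper; assumes simple `NAME=value` or `export NAME=value` lines.
    """
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        # Skip commented-out entries.
        if line.startswith("#"):
            continue
        # Drop an optional leading `export`.
        if line.startswith("export "):
            line = line[len("export "):].lstrip()
        name, sep, value = line.partition("=")
        if sep and name == "CRYOSPARC_LICENSE_ID":
            # Treat empty or quote-only values as missing.
            if value.strip().strip("\"'"):
                return True
    return False


# Made-up sample contents, not taken from a real installation.
sample = 'export CRYOSPARC_USE_GPU=true\n# export CRYOSPARC_LICENSE_ID="placeholder"\n'
print(has_license_id(sample))  # prints False
```

To try it on a real installation, pass in the text of the worker's config.sh, for example with `pathlib.Path(...).read_text()`. A True result only rules out one of the three causes named in the error message. In our case the cause was the worker not having updated.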