Hi @wtempel. Here is the output of the log and the get_scheduler_targets.

By the way, the command cryosparcm cli “get_scheduler_targets()” only worked when I put in the quotation marks.

================= CRYOSPARCW ======= 2022-12-08 10:54:16.126383 =========

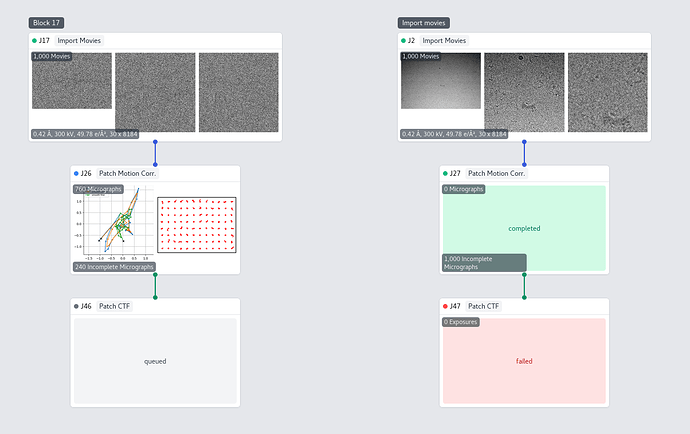

Project P1 Job J27

Master execute-001.mortimer.hpc Port 40002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 439871

MAIN PID 439871

motioncorrection.run_patch cryosparc_compute.jobs.jobregister

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "cryosparc_worker/cryosparc_compute/run.py", line 181, in cryosparc_compute.run.run

File "/tank/data/Programs/cryosparc/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 2070, in get_gpu_info

import pycuda.driver as cudrv

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/pycuda/driver.py", line 62, in <module>

from pycuda._driver import * # noqa

ImportError: libcurand.so.10: cannot open shared object file: No such file or directory

***************************************************************

Running job on hostname %s GPU_node2

Allocated Resources : {'fixed': {'SSD': False}, 'hostname': 'GPU_node2', 'lane': 'GPU_node2', 'lane_type': 'cluster', 'license': True, 'licenses_acquired': 1, 'slots': {'CPU': [0, 1, 2, 3, 4, 5], 'GPU': [0], 'RAM': [0, 1]}, 'target': {'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'hostname': 'GPU_node2', 'lane': 'GPU_node2', 'name': 'GPU_node2', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name {{ job_uid }}_{{ project_uid }}\n#SBATCH -n {{ num_cpu }}\n##SBATCH -G {{ num_gpu }}\n#SBATCH --partition=gpu\n##SBATCH --partition=batch\n#SBATCH --exclude=execute-3000\n#SBATCH --mem={{ (ram_gb*1000)|int }}MB \n#SBATCH -o {{ job_dir_abs }}/output\n#SBATCH -e {{ job_dir_abs }}/error\n\navailable_devs=""\nfor devidx in $(seq 0 15);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'GPU_node2', 'type': 'cluster', 'worker_bin_path': '/home/Programs/cryosparc/cryosparc_worker/bin/cryosparcw'}}

Process Process-1:1:

Traceback (most recent call last):

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 297, in _bootstrap

self.run()

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/process.py", line 99, in run

self._target(*self._args, **self._kwargs)

File "/tank/data/Programs/cryosparc/cryosparc_worker/cryosparc_compute/jobs/pipeline.py", line 200, in process_work_simple

process_setup(proc_idx) # do any setup you want on a per-process basis

File "cryosparc_worker/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 81, in cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.process_setup

File "/tank/data/Programs/cryosparc/cryosparc_worker/cryosparc_compute/engine/__init__.py", line 8, in <module>

from .engine import * # noqa

File "cryosparc_worker/cryosparc_compute/engine/engine.py", line 9, in init cryosparc_compute.engine.engine

File "cryosparc_worker/cryosparc_compute/engine/cuda_core.py", line 4, in init cryosparc_compute.engine.cuda_core

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/pycuda/driver.py", line 62, in <module>

from pycuda._driver import * # noqa

ImportError: libcurand.so.10: cannot open shared object file: No such file or directory

***************************************************************

Traceback (most recent call last):

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/queues.py", line 242, in _feed

send_bytes(obj)

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/connection.py", line 404, in _send_bytes

self._send(header + buf)

File "/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

# This error block repeats 992 times.

BrokenPipeError: [Errno 32] Broken pipe

/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/numpy/core/fromnumeric.py:3373: RuntimeWarning: Mean of empty slice.

out=out, **kwargs)

/tank/data/Programs/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.7/site-packages/numpy/core/_methods.py:170: RuntimeWarning: invalid value encountered in double_scalars

ret = ret.dtype.type(ret / rcount)

#

#

#

#

[user@execute-001 cryosparc_worker]$ cryosparcm cli "get_scheduler_targets()

[{'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 42314694656, 'name': 'NVIDIA A100-PCIE-40GB'}, {'id': 1, 'mem': 42314694656, 'name': 'NVIDIA A100-PCIE-40GB'}], 'hostname': 'execute-001.mortimer.hpc', 'lane': 'default', 'monitor_port': None, 'name': 'execute-001.mortimer.hpc', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'user@execute-001.mortimer.hpc', 'title': 'Worker node execute-001.mortimer.hpc', 'type': 'node', 'worker_bin_path': '/tank/data/LS/user/Programs/cryosparc/cryosparc_worker/bin/cryosparcw'}, {'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'hostname': 'GPU_node', 'lane': 'GPU_node', 'name': 'GPU_node', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name {{ job_uid }}_{{ project_uid }}\n##SBATCH -n {{ num_cpu }}\n#SBATCH -n 32\n##SBATCH -G {{ num_gpu }}\n#SBATCH --partition=gpu\n##SBATCH --partition=batch\n#SBATCH --mem={{ (ram_gb*1000)|int }}MB \n#SBATCH -o {{ job_dir_abs }}/output\n#SBATCH -e {{ job_dir_abs }}/error\n\navailable_devs=""\nfor devidx in $(seq 0 15);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'GPU_node', 'type': 'cluster', 'worker_bin_path': '/tank/data/Programscryosparc/cryosparc_worker/bin/cryosparcw'}, {'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'hostname': 'CPU_node', 'lane': 'CPU_node', 'name': 'CPU_node', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name cryo_{{ project_uid }}_{{ job_uid }}\n#SBATCH -n {{ num_cpu }}\n##SBATCH --partition=gpu\n#SBATCH --partition=batch,highmem\n#SBATCH --mem={{ (ram_gb*1000)|int }}MB \n#SBATCH -o {{ job_dir_abs }}/output\n#SBATCH -e {{ job_dir_abs }}/error\n\navailable_devs=""\nfor devidx in $(seq 0 15);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'CPU_node', 'type': 'cluster', 'worker_bin_path': '/tank/data/Programscryosparc/cryosparc_worker/bin/cryosparcw'}, {'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'hostname': 'GPU_node1', 'lane': 'GPU_node1', 'name': 'GPU_node1', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name {{ job_uid }}_{{ project_uid }}\n#SBATCH -n {{ num_cpu }}\n##SBATCH -G {{ num_gpu }}\n#SBATCH --partition=gpu\n##SBATCH --partition=batch\n#SBATCH --exclude=execute-3001\n#SBATCH --mem={{ (ram_gb*1000)|int }}MB \n#SBATCH -o {{ job_dir_abs }}/output\n#SBATCH -e {{ job_dir_abs }}/error\n\navailable_devs=""\nfor devidx in $(seq 0 15);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'GPU_node1', 'type': 'cluster', 'worker_bin_path': '/tank/data/Programscryosparc/cryosparc_worker/bin/cryosparcw'}, {'cache_path': '/tmp', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'hostname': 'GPU_node2', 'lane': 'GPU_node2', 'name': 'GPU_node2', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name {{ job_uid }}_{{ project_uid }}\n#SBATCH -n {{ num_cpu }}\n##SBATCH -G {{ num_gpu }}\n#SBATCH --partition=gpu\n##SBATCH --partition=batch\n#SBATCH --exclude=execute-3000\n#SBATCH --mem={{ (ram_gb*1000)|int }}MB \n#SBATCH -o {{ job_dir_abs }}/output\n#SBATCH -e {{ job_dir_abs }}/error\n\navailable_devs=""\nfor devidx in $(seq 0 15);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'GPU_node2', 'type': 'cluster', 'worker_bin_path': '/tank/data/Programscryosparc/cryosparc_worker/bin/cryosparcw'}]