I am also facing the same problem, and I am new to the computational biology field. I am not good with Linux and its commands. Can you explain in detail how I can solve this problem and what causes it?

Welcome to the forum @Lalit123.

To avoid any confusion, please can you paste, as text, any error messages that you have encountered, and mention where (which logs, which parts of the user interface) and when (for example, during submission of a job of which type) they appeared?

Does the job log (accessible via Metadata|Log) include any useful information that could indicate the cause of the termination? A gentle reminder: Please paste error messages and warnings as text so that this discussion can be found by interested forum visitors with a text search.

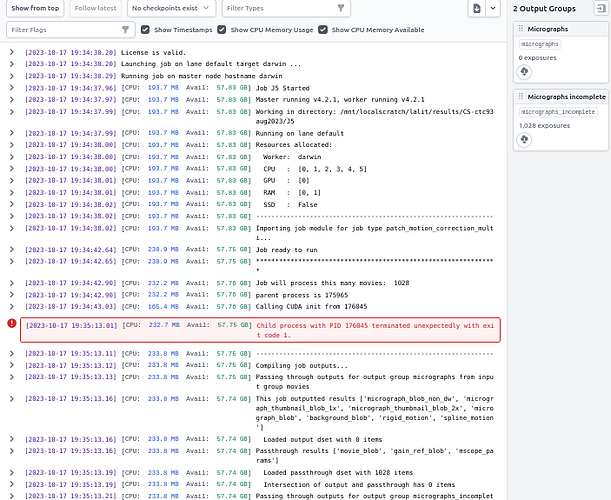

When I checked, I found a runtime error (no CUDA-capable device detected) and broken pipe errors. Below are the error messages from my job log.

================= CRYOSPARCW ======= 2023-10-17 19:34:31.426748 =========

Project P15 Job J5

Master darwin Port 39002

===========================================================================

========= monitor process now starting main process at 2023-10-17 19:34:31.426816

MAINPROCESS PID 175965

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "cryosparc_master/cryosparc_compute/run.py", line 184, in cryosparc_compute.run.run

File "/home/radha/cryosparc/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 2179, in get_gpu_info

cudrv.init()

pycuda._driver.RuntimeError: cuInit failed: no CUDA-capable device is detected

MAIN PID 175965

motioncorrection.run_patch cryosparc_compute.jobs.jobregister

***************************************************************

Running job on hostname %s darwin

Allocated Resources : {'fixed': {'SSD': False}, 'hostname': 'darwin', 'lane': 'default', 'lane_type': 'node', 'license': True, 'licenses_acquired': 1, 'slots': {'CPU': [0, 1, 2, 3, 4, 5], 'GPU': [0], 'RAM': [0, 1]}, 'target': {'cache_path': '/mnt/ssd1/cryosparc_cache', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 8513716224, 'name': 'NVIDIA GeForce GTX 1070'}, {'id': 1, 'mem': 8514109440, 'name': 'NVIDIA GeForce GTX 1070'}], 'hostname': 'darwin', 'lane': 'default', 'monitor_port': None, 'name': 'darwin', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7]}, 'ssh_str': 'radha@darwin', 'title': 'Worker node darwin', 'type': 'node', 'worker_bin_path': '/home/radha/cryosparc/cryosparc_worker/bin/cryosparcw'}}

Process Process-1:1:

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/process.py", line 315, in _bootstrap

self.run()

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/process.py", line 108, in run

self._target(*self._args, **self._kwargs)

File "/home/radha/cryosparc/cryosparc_worker/cryosparc_compute/jobs/pipeline.py", line 200, in process_work_simple

process_setup(proc_idx) # do any setup you want on a per-process basis

File "cryosparc_master/cryosparc_compute/jobs/motioncorrection/run_patch.py", line 83, in cryosparc_compute.jobs.motioncorrection.run_patch.run_patch_motion_correction_multi.process_setup

File "cryosparc_master/cryosparc_compute/engine/cuda_core.py", line 29, in cryosparc_compute.engine.cuda_core.initialize

pycuda._driver.RuntimeError: cuInit failed: no CUDA-capable device is detected

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/radha/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

Please can you post the output of these commands in a new shell:

eval $(/home/radha/cryosparc/cryosparc_worker/bin/cryosparcw env)

nvidia-smi --query-gpu=index,name,driver_version --format=csv

nvcc -V

which nvcc

cryosparcw gpulist

echo $CUDA_VISIBLE_DEVICES
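One way to read the output of the nvidia-smi query above is to compare it with the GPUs that the job log lists for the worker target (ids 0 and 1, both NVIDIA GeForce GTX 1070). The following is only a minimal sketch: the helper names `parse_gpu_csv`, `query_gpus` and `missing_gpus` are hypothetical, and the sample output, including its driver version, is made up for illustration.

```python
import csv
import io
import subprocess


def parse_gpu_csv(text):
    """Parse the CSV printed by nvidia-smi --query-gpu=... --format=csv."""
    reader = csv.DictReader(io.StringIO(text), skipinitialspace=True)
    return [
        {key.strip(): (value or "").strip() for key, value in row.items() if key is not None}
        for row in reader
    ]


def query_gpus():
    """Run the same nvidia-smi query as in the post above and parse its output."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,name,driver_version", "--format=csv"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


def missing_gpus(registered, detected):
    """Return ids of GPUs registered for the worker that the driver does not report."""
    detected_ids = {int(gpu["index"]) for gpu in detected}
    return [gpu["id"] for gpu in registered if gpu["id"] not in detected_ids]


# GPUs listed under 'target' -> 'gpus' in the job log above.
registered = [
    {"id": 0, "name": "NVIDIA GeForce GTX 1070"},
    {"id": 1, "name": "NVIDIA GeForce GTX 1070"},
]

# Hypothetical nvidia-smi output; the driver version is invented for this example.
sample_output = """index, name, driver_version
0, NVIDIA GeForce GTX 1070, 535.00
1, NVIDIA GeForce GTX 1070, 535.00
"""

detected = parse_gpu_csv(sample_output)
print(missing_gpus(registered, detected))
```

If nvidia-smi itself fails, `query_gpus()` raises an exception, which already suggests that the problem lies with the driver rather than with CryoSPARC.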

I am also facing a similar issue in the Patch Motion Correction step.

Here is the error log:

License is valid.

Launching job on lane default target radha-HP-Z8-G5-Workstation-Desktop-PC …

Running job on master node hostname radha-HP-Z8-G5-Workstation-Desktop-PC

[CPU: 90.2 MB Avail: 247.42 GB]

Job J14 Started

[CPU: 90.5 MB Avail: 247.42 GB]

Master running v4.6.2, worker running v4.6.2

[CPU: 90.5 MB Avail: 247.42 GB]

Working in directory: /media/radha/b8dc5185-0b16-4b59-96fa-327edda01813/Dataprocessing2024/CS-1882/J14

[CPU: 90.5 MB Avail: 247.42 GB]

Running on lane default

[CPU: 90.5 MB Avail: 247.42 GB]

Resources allocated:

[CPU: 90.5 MB Avail: 247.41 GB]

Worker: radha-HP-Z8-G5-Workstation-Desktop-PC

[CPU: 90.5 MB Avail: 247.41 GB]

CPU : [6, 7, 8, 9, 10, 11]

[CPU: 90.5 MB Avail: 247.42 GB]

GPU : [1]

[CPU: 90.5 MB Avail: 247.42 GB]

RAM : [2, 3]

[CPU: 90.5 MB Avail: 247.41 GB]

SSD : False

[CPU: 90.5 MB Avail: 247.40 GB]

[CPU: 90.5 MB Avail: 247.40 GB]

Importing job module for job type patch_motion_correction_multi…

[CPU: 248.0 MB Avail: 247.43 GB]

Job ready to run

[CPU: 248.0 MB Avail: 247.43 GB]

[CPU: 251.5 MB Avail: 247.43 GB]

Job will process this many movies: 5049

[CPU: 251.5 MB Avail: 247.43 GB]

Job will output denoiser training data for this many movies: 200

[CPU: 251.5 MB Avail: 247.43 GB]

Random seed: 242369941

[CPU: 251.5 MB Avail: 247.43 GB]

parent process is 1381085

[CPU: 204.6 MB Avail: 247.31 GB]

Calling CUDA init from 1381112

[CPU: 287.5 MB Avail: 247.46 GB]

Child process with PID 1381112 terminated unexpectedly with exit code 1.

[CPU: 291.6 MB Avail: 247.46 GB]

[‘uid’, ‘movie_blob/path’, ‘movie_blob/shape’, ‘movie_blob/psize_A’, ‘movie_blob/is_gain_corrected’, ‘movie_blob/format’, ‘movie_blob/has_defect_file’, ‘movie_blob/import_sig’, ‘micrograph_blob/path’, ‘micrograph_blob/idx’, ‘micrograph_blob/shape’, ‘micrograph_blob/psize_A’, ‘micrograph_blob/format’, ‘micrograph_blob/is_background_subtracted’, ‘micrograph_blob/vmin’, ‘micrograph_blob/vmax’, ‘micrograph_blob/import_sig’, ‘micrograph_blob_non_dw/path’, ‘micrograph_blob_non_dw/idx’, ‘micrograph_blob_non_dw/shape’, ‘micrograph_blob_non_dw/psize_A’, ‘micrograph_blob_non_dw/format’, ‘micrograph_blob_non_dw/is_background_subtracted’, ‘micrograph_blob_non_dw/vmin’, ‘micrograph_blob_non_dw/vmax’, ‘micrograph_blob_non_dw/import_sig’, ‘micrograph_blob_non_dw_AB/path’, ‘micrograph_blob_non_dw_AB/idx’, ‘micrograph_blob_non_dw_AB/shape’, ‘micrograph_blob_non_dw_AB/psize_A’, ‘micrograph_blob_non_dw_AB/format’, ‘micrograph_blob_non_dw_AB/is_background_subtracted’, ‘micrograph_blob_non_dw_AB/vmin’, ‘micrograph_blob_non_dw_AB/vmax’, ‘micrograph_blob_non_dw_AB/import_sig’, ‘micrograph_thumbnail_blob_1x/path’, ‘micrograph_thumbnail_blob_1x/idx’, ‘micrograph_thumbnail_blob_1x/shape’, ‘micrograph_thumbnail_blob_1x/format’, ‘micrograph_thumbnail_blob_1x/binfactor’, ‘micrograph_thumbnail_blob_1x/micrograph_path’, ‘micrograph_thumbnail_blob_1x/vmin’, ‘micrograph_thumbnail_blob_1x/vmax’, ‘micrograph_thumbnail_blob_2x/path’, ‘micrograph_thumbnail_blob_2x/idx’, ‘micrograph_thumbnail_blob_2x/shape’, ‘micrograph_thumbnail_blob_2x/format’, ‘micrograph_thumbnail_blob_2x/binfactor’, ‘micrograph_thumbnail_blob_2x/micrograph_path’, ‘micrograph_thumbnail_blob_2x/vmin’, ‘micrograph_thumbnail_blob_2x/vmax’, ‘background_blob/path’, ‘background_blob/idx’, ‘background_blob/binfactor’, ‘background_blob/shape’, ‘background_blob/psize_A’, ‘rigid_motion/type’, ‘rigid_motion/path’, ‘rigid_motion/idx’, ‘rigid_motion/frame_start’, ‘rigid_motion/frame_end’, ‘rigid_motion/zero_shift_frame’, ‘rigid_motion/psize_A’, 
‘spline_motion/type’, ‘spline_motion/path’, ‘spline_motion/idx’, ‘spline_motion/frame_start’, ‘spline_motion/frame_end’, ‘spline_motion/zero_shift_frame’, ‘spline_motion/psize_A’]

[CPU: 288.0 MB Avail: 247.46 GB]

[CPU: 288.0 MB Avail: 247.46 GB]

Compiling job outputs…

[CPU: 288.0 MB Avail: 247.46 GB]

Passing through outputs for output group micrographs from input group movies

[CPU: 288.0 MB Avail: 247.46 GB]

This job outputted results [‘micrograph_blob_non_dw’, ‘micrograph_blob_non_dw_AB’, ‘micrograph_thumbnail_blob_1x’, ‘micrograph_thumbnail_blob_2x’, ‘movie_blob’, ‘micrograph_blob’, ‘background_blob’, ‘rigid_motion’, ‘spline_motion’]

[CPU: 288.0 MB Avail: 247.46 GB]

Loaded output dset with 0 items

[CPU: 288.0 MB Avail: 247.46 GB]

Passthrough results [‘gain_ref_blob’, ‘mscope_params’]

[CPU: 290.7 MB Avail: 247.46 GB]

Loaded passthrough dset with 5049 items

[CPU: 290.7 MB Avail: 247.46 GB]

Intersection of output and passthrough has 0 items

[CPU: 290.7 MB Avail: 247.46 GB]

Output dataset contains: [‘gain_ref_blob’, ‘mscope_params’]

[CPU: 290.7 MB Avail: 247.46 GB]

Outputting passthrough result gain_ref_blob

[CPU: 290.7 MB Avail: 247.46 GB]

Outputting passthrough result mscope_params

[CPU: 290.7 MB Avail: 247.46 GB]

Passing through outputs for output group micrographs_incomplete from input group movies

[CPU: 290.7 MB Avail: 247.46 GB]

This job outputted results [‘micrograph_blob’]

[CPU: 290.7 MB Avail: 247.46 GB]

Loaded output dset with 5049 items

[CPU: 290.7 MB Avail: 247.46 GB]

Passthrough results [‘movie_blob’, ‘gain_ref_blob’, ‘mscope_params’]

[CPU: 292.2 MB Avail: 247.46 GB]

Loaded passthrough dset with 5049 items

[CPU: 292.2 MB Avail: 247.46 GB]

Intersection of output and passthrough has 5049 items

[CPU: 292.6 MB Avail: 247.46 GB]

Output dataset contains: [‘gain_ref_blob’, ‘movie_blob’, ‘mscope_params’]

[CPU: 292.6 MB Avail: 247.46 GB]

Outputting passthrough result movie_blob

[CPU: 292.6 MB Avail: 247.46 GB]

Outputting passthrough result gain_ref_blob

[CPU: 292.6 MB Avail: 247.46 GB]

Outputting passthrough result mscope_params

[CPU: 292.6 MB Avail: 247.46 GB]

Checking outputs for output group micrographs

[CPU: 292.6 MB Avail: 247.46 GB]

Checking outputs for output group micrographs_incomplete

[CPU: 294.6 MB Avail: 247.46 GB]

Updating job size…

[CPU: 294.6 MB Avail: 247.46 GB]

Exporting job and creating csg files…

[CPU: 294.6 MB Avail: 247.46 GB]

[CPU: 294.6 MB Avail: 247.46 GB]

@aswathy Please can you post the output of the command

cryosparcm joblog P99 J199 | tail -n 50

where P99 and J199 have been replaced with the relevant project and job IDs, respectively.
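When reading such a job log, it can help to list the distinct exceptions in order of first appearance. In the first log in this thread, the `cuInit failed` error comes first, and the repeated `BrokenPipeError` tracebacks are likely a consequence of the child process having already exited. Below is a minimal sketch of that idea; the function name `summarize_exceptions` is hypothetical, and the sample lines are copied from the log earlier in this thread.

```python
import re
from collections import Counter

# Matches lines such as "BrokenPipeError: [Errno 32] Broken pipe".
EXC_LINE = re.compile(r"^([\w.]+(?:Error|Exception)): (.*)$")


def summarize_exceptions(log_text):
    """Return (exception line, count) pairs in order of first appearance."""
    counts = Counter()
    for raw in log_text.splitlines():
        line = raw.strip()
        if EXC_LINE.match(line):
            counts[line] += 1
    return list(counts.items())


sample_log = """\
cudrv.init()
pycuda._driver.RuntimeError: cuInit failed: no CUDA-capable device is detected
n = write(self._handle, buf)
BrokenPipeError: [Errno 32] Broken pipe
n = write(self._handle, buf)
BrokenPipeError: [Errno 32] Broken pipe
"""

for line, count in summarize_exceptions(sample_log):
    print(count, line)
```

The first entry in the summary is usually the most useful one to quote when asking for help.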

I am also facing the same issue

License is valid.

Launching job on lane default target iiser-X10DAi …

Running job on master node hostname iiser-X10DAi

[CPU: 213.1 MB Avail: 116.55 GB]

Job J17 Started

[CPU: 213.1 MB Avail: 116.55 GB]

Master running v4.4.1, worker running v4.4.1

[CPU: 213.1 MB Avail: 116.55 GB]

Working in directory: /home/iiser/cryosparcuser/Sivang/FtsZ-SepF/CS-ftsz-sepf/J17

[CPU: 213.1 MB Avail: 116.55 GB]

Running on lane default

[CPU: 213.1 MB Avail: 116.55 GB]

Resources allocated:

[CPU: 213.1 MB Avail: 116.55 GB]

Worker: iiser-X10DAi

[CPU: 213.1 MB Avail: 116.55 GB]

CPU : [0, 1, 2, 3, 4, 5]

[CPU: 213.1 MB Avail: 116.55 GB]

GPU : [0]

[CPU: 213.1 MB Avail: 116.55 GB]

RAM : [0, 1]

[CPU: 213.1 MB Avail: 116.55 GB]

SSD : False

[CPU: 213.1 MB Avail: 116.55 GB]

[CPU: 213.1 MB Avail: 116.55 GB]

Importing job module for job type patch_motion_correction_multi…

[CPU: 239.2 MB Avail: 116.56 GB]

Job ready to run

[CPU: 239.2 MB Avail: 116.56 GB]

[CPU: 240.3 MB Avail: 116.56 GB]

Job will process this many movies: 459

[CPU: 240.3 MB Avail: 116.56 GB]

Random seed: 498764590

[CPU: 240.3 MB Avail: 116.56 GB]

parent process is 1211379

[CPU: 163.6 MB Avail: 116.55 GB]

Calling CUDA init from 1211429

[CPU: 240.3 MB Avail: 116.66 GB]

Child process with PID 1211429 terminated unexpectedly with exit code 1.

[CPU: 241.1 MB Avail: 116.66 GB]

[CPU: 241.1 MB Avail: 116.66 GB]

Compiling job outputs…

[CPU: 241.1 MB Avail: 116.66 GB]

Passing through outputs for output group micrographs from input group movies

[CPU: 241.1 MB Avail: 116.66 GB]

This job outputted results [‘micrograph_blob_non_dw’, ‘micrograph_thumbnail_blob_1x’, ‘micrograph_thumbnail_blob_2x’, ‘micrograph_blob’, ‘background_blob’, ‘rigid_motion’, ‘spline_motion’]

[CPU: 241.1 MB Avail: 116.66 GB]

Loaded output dset with 0 items

[CPU: 241.1 MB Avail: 116.66 GB]

Passthrough results [‘movie_blob’, ‘mscope_params’]

[CPU: 241.1 MB Avail: 116.66 GB]

Loaded passthrough dset with 459 items

[CPU: 241.1 MB Avail: 116.66 GB]

Intersection of output and passthrough has 0 items

[CPU: 241.1 MB Avail: 116.66 GB]

Passing through outputs for output group micrographs_incomplete from input group movies

[CPU: 241.1 MB Avail: 116.65 GB]

This job outputted results [‘micrograph_blob’]

[CPU: 241.1 MB Avail: 116.65 GB]

Loaded output dset with 459 items

[CPU: 241.1 MB Avail: 116.65 GB]

Passthrough results [‘movie_blob’, ‘mscope_params’]

[CPU: 241.1 MB Avail: 116.65 GB]

Loaded passthrough dset with 459 items

[CPU: 241.1 MB Avail: 116.65 GB]

Intersection of output and passthrough has 459 items

[CPU: 241.1 MB Avail: 116.65 GB]

Checking outputs for output group micrographs

[CPU: 241.1 MB Avail: 116.65 GB]

Checking outputs for output group micrographs_incomplete

[CPU: 241.1 MB Avail: 116.65 GB]

Updating job size…

[CPU: 241.1 MB Avail: 116.65 GB]

Exporting job and creating csg files…

[CPU: 241.1 MB Avail: 116.65 GB]

[CPU: 241.1 MB Avail: 116.65 GB]

Job complete. Total time 30.61s

@shivang1307 Please can you post the output of these commands:

tail -n 50 /home/iiser/cryosparcuser/Sivang/FtsZ-SepF/CS-ftsz-sepf/J17/job.log

sudo journalctl | grep -i oom

(the latter command requires admin access to the computer)
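A case-insensitive search for "oom" can also match service messages from systemd-oomd that do not report a killed process. The sketch below narrows the journal text down to lines that look like kernel OOM killer events. It is an illustration only: the marker strings are assumptions based on common kernel log wording, the function name `kernel_oom_lines` is hypothetical, the last sample line is invented, and kills performed by systemd-oomd itself are not covered.

```python
# Assumed substrings of kernel OOM killer messages; adjust for your system.
KERNEL_OOM_MARKERS = ("invoked oom-killer", "Out of memory:")


def kernel_oom_lines(journal_text):
    """Keep only journal lines that contain one of the assumed kernel markers."""
    return [
        line
        for line in journal_text.splitlines()
        if any(marker in line for marker in KERNEL_OOM_MARKERS)
    ]


sample_journal = """\
Apr 25 10:03:33 iiser-X10DAi systemd[1]: Stopped Userspace Out-Of-Memory (OOM) Killer.
Apr 25 10:03:33 iiser-X10DAi systemd[1]: Started Userspace Out-Of-Memory (OOM) Killer.
Apr 25 10:05:00 examplehost kernel: Out of memory: Killed process 1234 (python)
"""

# The third sample line is hypothetical and only shows what a match looks like.
for line in kernel_oom_lines(sample_journal):
    print(line)
```

An empty result would suggest that the kernel OOM killer was not involved, although it does not rule out other memory problems.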

tail: cannot open ‘home/iiser/cryosparcuser/Sivang/FtsZ-SepF/CS-ftsz-sepf/J17/job.log’ for reading: No such file or directory

This is what I get for the first command.

The / in front of home/ may have been missing when the tail command was run.
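The difference matters because a path without the leading / is interpreted relative to the current working directory. A minimal sketch of that rule follows; the function name `resolve_against` and the working directory `/home/iiser` are assumptions made for illustration.

```python
from pathlib import PurePosixPath


def resolve_against(cwd, path):
    """Show where a path points when used from the directory cwd."""
    candidate = PurePosixPath(path)
    if candidate.is_absolute():
        return str(candidate)
    # A relative path is appended to the current working directory.
    return str(PurePosixPath(cwd) / candidate)


job_log = "home/iiser/cryosparcuser/Sivang/FtsZ-SepF/CS-ftsz-sepf/J17/job.log"

# Without the leading slash, the path is looked up below the working directory.
print(resolve_against("/home/iiser", job_log))
# With the leading slash, the working directory does not matter.
print(resolve_against("/home/iiser", "/" + job_log))
```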

tail: cannot open ‘/home/iiser/cryosparcuser/Sivang/FtsZ-SepF/CS-ftsz-sepf/J17/job.log’ for reading: No such file or directory

I took the path from your error message.

Does the directory exist? Has the job been cleared or deleted?

Yes, the directory exists; I just checked. I don’t know what is happening.

Sorry, I deleted the job, so the directory was deleted with it.

I ran the job again:

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

Traceback (most recent call last):

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/queues.py", line 245, in _feed

send_bytes(obj)

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 200, in send_bytes

self._send_bytes(m[offset:offset + size])

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 411, in _send_bytes

self._send(header + buf)

***************************************************************

File "/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/multiprocessing/connection.py", line 368, in _send

n = write(self._handle, buf)

BrokenPipeError: [Errno 32] Broken pipe

/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numpy/core/fromnumeric.py:3440: RuntimeWarning: Mean of empty slice.

return _methods._mean(a, axis=axis, dtype=dtype,

/home/iiser/cryosparcuser/cryosparc/cryosparc_worker/deps/anaconda/envs/cryosparc_worker_env/lib/python3.8/site-packages/numpy/core/_methods.py:189: RuntimeWarning: invalid value encountered in double_scalars

ret = ret.dtype.type(ret / rcount)

Thanks @shivang1307. Please can you also post the outputs of these commands:

sudo journalctl | grep -i oom

nvidia-smi

Apr 25 10:03:33 iiser-X10DAi systemd-oomd[1522]: Failed to connect to /run/systemd/io.system.ManagedOOM: Connection refused

Apr 25 10:03:33 iiser-X10DAi systemd-oomd[1522]: Failed to acquire varlink connection: Connection refused

Apr 25 10:03:33 iiser-X10DAi systemd-oomd[1522]: Event loop failed: Connection refused

Apr 25 10:03:33 iiser-X10DAi systemd[1]: systemd-oomd.service: Main process exited, code=exited, status=1/FAILURE

Apr 25 10:03:33 iiser-X10DAi systemd[1]: systemd-oomd.service: Failed with result ‘exit-code’.

Apr 25 10:03:33 iiser-X10DAi systemd[1]: systemd-oomd.service: Consumed 46min 56.246s CPU time.

Apr 25 10:03:33 iiser-X10DAi systemd[1]: systemd-oomd.service: Scheduled restart job, restart counter is at 1.

Apr 25 10:03:33 iiser-X10DAi systemd[1]: Stopped Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:03:33 iiser-X10DAi systemd[1]: systemd-oomd.service: Consumed 46min 56.246s CPU time.

Apr 25 10:03:33 iiser-X10DAi systemd[1]: Starting Userspace Out-Of-Memory (OOM) Killer…

Apr 25 10:03:33 iiser-X10DAi systemd[1]: Started Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:04:41 iiser-X10DAi systemd-oomd[925917]: Failed to connect to /run/systemd/io.system.ManagedOOM: Connection refused

Apr 25 10:04:41 iiser-X10DAi systemd-oomd[925917]: Failed to acquire varlink connection: Connection refused

Apr 25 10:04:41 iiser-X10DAi systemd-oomd[925917]: Event loop failed: Connection refused

Apr 25 10:04:42 iiser-X10DAi systemd[1]: systemd-oomd.service: Main process exited, code=exited, status=1/FAILURE

Apr 25 10:04:42 iiser-X10DAi systemd[1]: systemd-oomd.service: Failed with result ‘exit-code’.

Apr 25 10:04:42 iiser-X10DAi systemd[1]: systemd-oomd.service: Scheduled restart job, restart counter is at 2.

Apr 25 10:04:42 iiser-X10DAi systemd[1]: Stopped Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:04:42 iiser-X10DAi systemd[1]: Starting Userspace Out-Of-Memory (OOM) Killer…

Apr 25 10:04:42 iiser-X10DAi systemd[1]: Started Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:04:47 iiser-X10DAi systemd-oomd[928995]: Failed to connect to /run/systemd/io.system.ManagedOOM: Connection refused

Apr 25 10:04:47 iiser-X10DAi systemd-oomd[928995]: Failed to acquire varlink connection: Connection refused

Apr 25 10:04:47 iiser-X10DAi systemd-oomd[928995]: Event loop failed: Connection refused

Apr 25 10:04:47 iiser-X10DAi systemd[1]: systemd-oomd.service: Main process exited, code=exited, status=1/FAILURE

Apr 25 10:04:47 iiser-X10DAi systemd[1]: systemd-oomd.service: Failed with result ‘exit-code’.

Apr 25 10:04:47 iiser-X10DAi systemd[1]: systemd-oomd.service: Scheduled restart job, restart counter is at 3.

Apr 25 10:04:47 iiser-X10DAi systemd[1]: Stopped Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:04:47 iiser-X10DAi systemd[1]: Starting Userspace Out-Of-Memory (OOM) Killer…

Apr 25 10:04:47 iiser-X10DAi systemd[1]: Started Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:10:41 iiser-X10DAi systemd[1]: Stopping Userspace Out-Of-Memory (OOM) Killer…

Apr 25 10:10:41 iiser-X10DAi systemd[1]: systemd-oomd.service: Deactivated successfully.

Apr 25 10:10:41 iiser-X10DAi systemd[1]: Stopped Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:10:41 iiser-X10DAi systemd[1]: Starting Userspace Out-Of-Memory (OOM) Killer…

Apr 25 10:10:41 iiser-X10DAi systemd[1]: Started Userspace Out-Of-Memory (OOM) Killer.

Apr 25 10:10:47 iiser-X10DAi containerd[934985]: time=“2025-04-25T10:10:47.856850114+05:30” level=info msg=“Start cri plugin with config {PluginConfig:{ContainerdConfig:{Snapshotter:overlayfs DefaultRuntimeName:runc DefaultRuntime:{Type: Path: Engine: PodAnnotations: ContainerAnnotations: Root: Options:map PrivilegedWithoutHostDevices:false PrivilegedWithoutHostDevicesAllDevicesAllowed:false BaseRuntimeSpec: NetworkPluginConfDir: NetworkPluginMaxConfNum:0 Snapshotter: SandboxMode:} UntrustedWorkloadRuntime:{Type: Path: Engine: PodAnnotations: ContainerAnnotations: Root: Options:map PrivilegedWithoutHostDevices:false PrivilegedWithoutHostDevicesAllDevicesAllowed:false BaseRuntimeSpec: NetworkPluginConfDir: NetworkPluginMaxConfNum:0 Snapshotter: SandboxMode:} Runtimes:map[runc:{Type:io.containerd.runc.v2 Path: Engine: PodAnnotations: ContainerAnnotations: Root: Options:map[BinaryName: CriuImagePath: CriuPath: CriuWorkPath: IoGid:0 IoUid:0 NoNewKeyring:false NoPivotRoot:false Root: ShimCgroup: SystemdCgroup:false] PrivilegedWithoutHostDevices:false PrivilegedWithoutHostDevicesAllDevicesAllowed:false BaseRuntimeSpec: NetworkPluginConfDir: NetworkPluginMaxConfNum:0 Snapshotter: SandboxMode:podsandbox}] NoPivot:false DisableSnapshotAnnotations:true DiscardUnpackedLayers:false IgnoreBlockIONotEnabledErrors:false IgnoreRdtNotEnabledErrors:false} CniConfig:{NetworkPluginBinDir:/opt/cni/bin NetworkPluginConfDir:/etc/cni/net.d NetworkPluginMaxConfNum:1 NetworkPluginSetupSerially:false NetworkPluginConfTemplate: IPPreference:} Registry:{ConfigPath: Mirrors:map Configs:map Auths:map Headers:map} ImageDecryption:{KeyModel:node} DisableTCPService:true StreamServerAddress:127.0.0.1 StreamServerPort:0 StreamIdleTimeout:4h0m0s EnableSelinux:false SelinuxCategoryRange:1024 SandboxImage:registry.k8s.io/pause:3.8 StatsCollectPeriod:10 SystemdCgroup:false EnableTLSStreaming:false X509KeyPairStreaming:{TLSCertFile: TLSKeyFile:} MaxContainerLogLineSize:16384 DisableCgroup:false 
DisableApparmor:false RestrictOOMScoreAdj:false MaxConcurrentDownloads:3 DisableProcMount:false UnsetSeccompProfile: TolerateMissingHugetlbController:true DisableHugetlbController:true DeviceOwnershipFromSecurityContext:false IgnoreImageDefinedVolumes:false NetNSMountsUnderStateDir:false EnableUnprivilegedPorts:false EnableUnprivilegedICMP:false EnableCDI:false CDISpecDirs:[/etc/cdi /var/run/cdi] ImagePullProgressTimeout:5m0s DrainExecSyncIOTimeout:0s ImagePullWithSyncFs:false IgnoreDeprecationWarnings:} ContainerdRootDir:/var/lib/containerd ContainerdEndpoint:/run/containerd/containerd.sock RootDir:/var/lib/containerd/io.containerd.grpc.v1.cri StateDir:/run/containerd/io.containerd.grpc.v1.cri}”

Failed to initialize NVML: Driver/library version mismatch

NVML library version: 550.163

In this state, a GPU-accelerated CryoSPARC job is expected to fail, but I am not sure whether it would fail with the errors you observed.

You may want to try the following:

- reboot the computer

- then confirm that the command nvidia-smi displays meaningful information on the installed NVIDIA GPUs

- then retry the Patch Motion Correction job.
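A "Driver/library version mismatch" message commonly means that the NVIDIA kernel module currently loaded differs from the user-space library version, for example after a driver package update without a reboot. The sketch below compares the two versions. It assumes the usual layout of `/proc/driver/nvidia/version`; the helper names and the sample kernel module line (including version 550.120) are hypothetical, while 550.163 is the NVML library version reported above.

```python
import re


def kernel_module_version(proc_text):
    """Extract the driver version from the text of /proc/driver/nvidia/version."""
    for line in proc_text.splitlines():
        if line.startswith("NVRM version:"):
            match = re.search(r"\b(\d+\.\d+(?:\.\d+)?)\b", line)
            return match.group(1) if match else None
    return None


def read_kernel_module_version(path="/proc/driver/nvidia/version"):
    """Read the version of the loaded kernel module on a Linux host."""
    with open(path) as handle:
        return kernel_module_version(handle.read())


def versions_match(kernel_version, library_version):
    """True only when both versions are known and identical."""
    return kernel_version is not None and kernel_version == library_version


# Hypothetical file content; the version and date are invented for illustration.
sample_proc = (
    "NVRM version: NVIDIA UNIX x86_64 Kernel Module  550.120  Fri Sep 13 10:10:01 UTC 2024\n"
    "GCC version:  gcc version 11.4.0\n"
)

loaded = kernel_module_version(sample_proc)
print(loaded, versions_match(loaded, "550.163"))
```

If the two versions differ, a reboot normally loads the kernel module that belongs to the newly installed driver.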