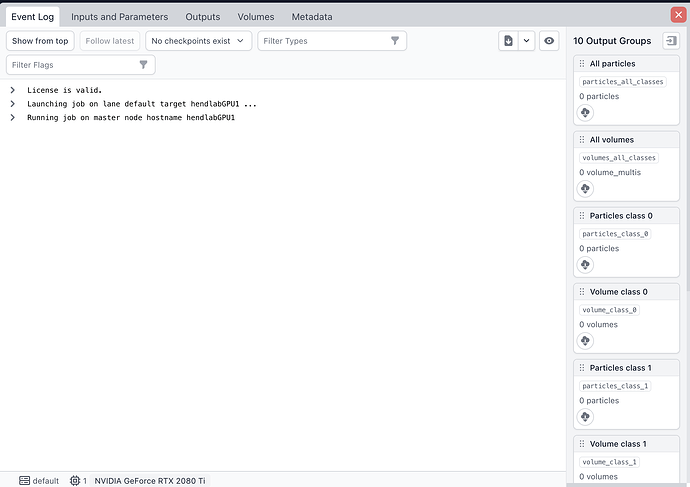

I reinstalled CryoSPARC and tried to run a previous job, but it got stuck and didn’t run.

Here is the log it shows. It only shows “launched” but never runs.

Please can you post the outputs of the following commands:

cryosparcm cli "get_job('P99', 'J199', 'job_type', 'version', 'instance_information', 'status', 'killed_at', 'started_at', 'params_spec', 'cloned_from')"

cryosparcm cli "get_scheduler_targets()"

where you replace P99 and J199 with the failed job’s actual project and job IDs, respectively.

Hi @wtempel,

Here is the result:

input: cryosparcm cli "get_job('P21', 'J717', 'job_type', 'version', 'instance_information', 'status', 'killed_at', 'started_at', 'params_spec', 'cloned_from')"

output: {'_id': '66fabb68f7542510ff4ad864', 'cloned_from': None, 'instance_information': {}, 'job_type': 'hetero_refine', 'killed_at': None, 'params_spec': {}, 'project_uid': 'P21', 'started_at': None, 'status': 'launched', 'uid': 'J717', 'version': 'v4.6.0'}

input: cryosparcm cli "get_scheduler_targets()"

output: [{'cache_path': '/media/hendlab/SCRATCH1/cryosparc_cache/', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 11543379968, 'name': 'NVIDIA GeForce RTX 2080 Ti'}], 'hostname': 'hendlabGPU1', 'lane': 'default', 'name': 'hendlabGPU1', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31], 'GPU': [0], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]}, 'ssh_str': 'hendlab@hendlabGPU1', 'title': 'Worker node hendlabGPU1', 'type': 'node', 'worker_bin_path': '/home/hendlab/software/cryosparc2/cryosparc2_worker/bin/cryosparcw'}]

Thanks @robinbinchen for this info.

What are the outputs of the commands

ls -l /home/hendlab/software/cryosparc2/cryosparc2_worker/config.sh

cat /home/hendlab/software/cryosparc2/cryosparc2_worker/version

?

Under the cryosparc2 folder there is only a cryosparc_worker folder, not a cryosparc2_worker folder:

ls -l /home/hendlab/software/cryosparc2/cryosparc_worker/config.sh

-rw-rw-r-- 1 hendlab hendlab 98 Oct 1 11:01 /home/hendlab/software/cryosparc2/cryosparc_worker/config.sh

cat /home/hendlab/software/cryosparc2/cryosparc_worker/version

v4.6.0

@robinbinchen There seems to be a mismatch between the absolute path to the cryosparc_worker/ directory on disk and the worker configuration in the database.
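One way to confirm such a mismatch is to test whether the worker_bin_path reported by get_scheduler_targets() actually exists on disk. The following is a minimal sketch only: check_worker_bin is a hypothetical helper (not a CryoSPARC command), and the second path is an assumption inferred from the ls output above.

```shell
#!/bin/sh
# Hypothetical helper (not part of CryoSPARC): report whether a given
# cryosparcw path exists and is executable.
check_worker_bin() {
    path=$1
    if [ -x "$path" ]; then
        echo "OK: $path"
    else
        echo "MISSING: $path"
        return 1
    fi
}

# Path stored in the database, as reported by get_scheduler_targets().
check_worker_bin /home/hendlab/software/cryosparc2/cryosparc2_worker/bin/cryosparcw || true

# Assumed on-disk location, inferred from the cryosparc_worker/config.sh listing.
check_worker_bin /home/hendlab/software/cryosparc2/cryosparc_worker/bin/cryosparcw || true
```

If the first path is reported as MISSING while the second is OK, the worker record in the database points at a directory that no longer exists.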

If you still need help with this problem, please can you post the outputs of the following commands, run in a fresh shell:

eval $(cryosparcm env) # load the CryoSPARC environment

echo $CRYOSPARC_MASTER_HOSTNAME

echo $CRYOSPARC_BASE_PORT

host $CRYOSPARC_MASTER_HOSTNAME

Please exit the shell after recording the outputs to avoid running commands inadvertently in the CryoSPARC shell.

Hi @wtempel ,

Thank you for your reply.

I only have a single workstation, so I didn’t install the worker. I had a previous CryoSPARC database, so I edited config.sh in the master folder to point to that database directory.

So which configuration needs to be changed?

Here is the result:

echo $CRYOSPARC_MASTER_HOSTNAME

hendlabGPU1

echo $CRYOSPARC_BASE_PORT

39000

host $CRYOSPARC_MASTER_HOSTNAME

hendlabGPU1 has address 127.0.1.1

A “single workstation” installation still requires an installation of cryosparc_worker/, but it is possible that this installation occurred automatically if you used the cryosparc_master/install.sh --standalone option.

If all of

- cryosparc_worker/ has been installed automatically
- you are trying to use a new installation of CryoSPARC with an old database
- you still want to cache particles under /media/hendlab/SCRATCH1/cryosparc_cache

are true, the following sequence of commands may help (ref 1, substitute the actual path to the cryosparc_worker/bin/cryosparcw command):

cryosparcm cli "remove_scheduler_lane('default')"

/path/to/cryosparc_worker/bin/cryosparcw connect --worker hendlabGPU1 --master hendlabGPU1 --port 39000 --ssdpath /media/hendlab/SCRATCH1/cryosparc_cache
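After reconnecting, it may be worth checking that the database now records the new worker path. A rough sketch, assuming the get_scheduler_targets() output uses plain single quotes as in a Python dict printout; extract_worker_bin_path is a hypothetical helper, not a CryoSPARC command:

```shell
#!/bin/sh
# Hypothetical helper (not part of CryoSPARC): read get_scheduler_targets()
# output on stdin and print each worker_bin_path value, one per line.
# Assumes the output is a Python-style printout with single-quoted strings.
extract_worker_bin_path() {
    grep -o "'worker_bin_path': '[^']*'" |
        sed "s/^'worker_bin_path': '//; s/'\$//"
}

# Intended usage on the CryoSPARC master (left commented out here):
#   cryosparcm cli "get_scheduler_targets()" | extract_worker_bin_path
```

The printed path should match the cryosparcw location used in the connect command.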

Does this work?

Thank you so much! It works now.