Dear all,

We sometimes find that jobs can't be queued.

You create a job, build it, and queue it, but after selecting a lane or a specific GPU you are returned to the build state without any message.

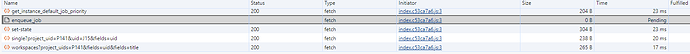

In Edge I can see the following:

The enqueue_job request stays “pending” forever.

Perhaps related: in the app_api log I can see this:

app_api log

cryoSPARC Application API server running

getJobError P86 J1711

<--- Last few GCs --->

[28400:0x65a5640] 1476002597 ms: Scavenge 528.1 (547.1) -> 523.5 (552.8) MB, 65.8 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure

[28400:0x65a5640] 1476002732 ms: Scavenge 534.1 (553.1) -> 529.3 (558.8) MB, 69.7 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure

[28400:0x65a5640] 1476002870 ms: Scavenge 540.2 (559.3) -> 535.5 (564.8) MB, 71.8 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure

<--- JS stacktrace --->

FATAL ERROR: Zone Allocation failed - process out of memory

1: 0xa3aaf0 node::Abort() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

2: 0x970199 node::FatalError(char const*, char const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

3: 0xbba42e v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

4: 0xbba7a7 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

5: 0x126068e v8::internal::Zone::NewExpand(unsigned long) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

6: 0xe45145 void std::vector<unsigned char, v8::internal::ZoneAllocator<unsigned char> >::emplace_back<unsigned char>(unsigned char&&) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

7: 0xe451d6 v8::internal::interpreter::BytecodeArrayWriter::EmitBytecode(v8::internal::interpreter::BytecodeNode const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

8: 0xe3dec5 v8::internal::interpreter::BytecodeArrayBuilder::ToString() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

9: 0xe54df8 v8::internal::interpreter::BytecodeGenerator::VisitTemplateLiteral(v8::internal::TemplateLiteral*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

10: 0xe53068 v8::internal::interpreter::BytecodeGenerator::VisitForAccumulatorValue(v8::internal::Expression*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

11: 0xe530d7 v8::internal::interpreter::BytecodeGenerator::VisitReturnStatement(v8::internal::ReturnStatement*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

12: 0xe51856 v8::internal::interpreter::BytecodeGenerator::VisitIfStatement(v8::internal::IfStatement*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

13: 0xe51d04 v8::internal::interpreter::BytecodeGenerator::VisitStatements(v8::internal::ZoneList<v8::internal::Statement*> const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

14: 0xe51e5b v8::internal::interpreter::BytecodeGenerator::VisitBlockDeclarationsAndStatements(v8::internal::Block*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

15: 0xe51ed7 v8::internal::interpreter::BytecodeGenerator::VisitBlock(v8::internal::Block*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

16: 0xe51856 v8::internal::interpreter::BytecodeGenerator::VisitIfStatement(v8::internal::IfStatement*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

17: 0xe5181b v8::internal::interpreter::BytecodeGenerator::VisitIfStatement(v8::internal::IfStatement*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

18: 0xe51d04 v8::internal::interpreter::BytecodeGenerator::VisitStatements(v8::internal::ZoneList<v8::internal::Statement*> const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

19: 0xe51e5b v8::internal::interpreter::BytecodeGenerator::VisitBlockDeclarationsAndStatements(v8::internal::Block*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

20: 0xe51ed7 v8::internal::interpreter::BytecodeGenerator::VisitBlock(v8::internal::Block*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

21: 0xe51856 v8::internal::interpreter::BytecodeGenerator::VisitIfStatement(v8::internal::IfStatement*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

22: 0xe51d04 v8::internal::interpreter::BytecodeGenerator::VisitStatements(v8::internal::ZoneList<v8::internal::Statement*> const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

23: 0xe5249a v8::internal::interpreter::BytecodeGenerator::GenerateBytecodeBody() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

24: 0xe527c9 v8::internal::interpreter::BytecodeGenerator::GenerateBytecode(unsigned long) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

25: 0xe64ee0 v8::internal::interpreter::InterpreterCompilationJob::ExecuteJobImpl() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

26: 0xc8238b [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

27: 0xc88598 [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

28: 0xc8b59e v8::internal::Compiler::Compile(v8::internal::Handle<v8::internal::SharedFunctionInfo>, v8::internal::Compiler::ClearExceptionFlag, v8::internal::IsCompiledScope*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

29: 0xc8d6bc v8::internal::Compiler::Compile(v8::internal::Handle<v8::internal::JSFunction>, v8::internal::Compiler::ClearExceptionFlag, v8::internal::IsCompiledScope*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

30: 0x108658a v8::internal::Runtime_CompileLazy(int, unsigned long*, v8::internal::Isolate*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

31: 0x1448df9 [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/api/nodejs/bin/node]

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

cryoSPARC Application API server running

<--- Last few GCs --->

[9574:0x4c933e0] 1656316547 ms: Scavenge 628.8 (647.9) -> 624.1 (653.7) MB, 59.4 / 0.0 ms (average mu = 0.976, current mu = 0.963) allocation failure

[9574:0x4c933e0] 1656316674 ms: Scavenge 634.7 (653.9) -> 630.0 (659.2) MB, 62.7 / 0.0 ms (average mu = 0.976, current mu = 0.963) allocation failure

[9574:0x4c933e0] 1656316803 ms: Scavenge 640.7 (659.7) -> 636.0 (665.4) MB, 64.2 / 0.0 ms (average mu = 0.976, current mu = 0.963) allocation failure

<--- JS stacktrace --->

FATAL ERROR: Zone Allocation failed - process out of memory

1: 0xa3ad50 node::Abort() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

2: 0x970199 node::FatalError(char const*, char const*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

3: 0xbba90e v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

4: 0xbbac87 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

5: 0x1260bfe v8::internal::Zone::NewExpand(unsigned long) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

6: 0xc030ce v8::internal::AstValueFactory::NewConsString() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

7: 0xfc116e v8::internal::ParseInfo::GetOrCreateAstValueFactory() [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

8: 0xfc3473 v8::internal::Parser::Parser(v8::internal::ParseInfo*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

9: 0xfe6e7c v8::internal::parsing::ParseFunction(v8::internal::ParseInfo*, v8::internal::Handle<v8::internal::SharedFunctionInfo>, v8::internal::Isolate*, v8::internal::parsing::ReportErrorsAndStatisticsMode) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

10: 0xfe72b5 v8::internal::parsing::ParseAny(v8::internal::ParseInfo*, v8::internal::Handle<v8::internal::SharedFunctionInfo>, v8::internal::Isolate*, v8::internal::parsing::ReportErrorsAndStatisticsMode) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

11: 0xc8ba30 v8::internal::Compiler::Compile(v8::internal::Handle<v8::internal::SharedFunctionInfo>, v8::internal::Compiler::ClearExceptionFlag, v8::internal::IsCompiledScope*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

12: 0xc8db9c v8::internal::Compiler::Compile(v8::internal::Handle<v8::internal::JSFunction>, v8::internal::Compiler::ClearExceptionFlag, v8::internal::IsCompiledScope*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

13: 0x1086afa v8::internal::Runtime_CompileLazy(int, unsigned long*, v8::internal::Isolate*) [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

14: 0x1449379 [/opt/cryoSPARC2/cryosparc2_master/cryosparc_app/nodejs/bin/node]

cryoSPARC Application API server running

cryoSPARC Application API server running

Is there a way to recover from this state without restarting the entire cryoSPARC instance?
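
In case it helps with diagnosis, here is a rough sketch of how the heap figures could be pulled out of the Scavenge lines in the app_api log to see how quickly memory grows before each crash. This is only an illustration: the function names `heap_samples` and `growth_mb_per_s` are my own and not part of cryoSPARC, the sample text is copied from the first crash above, and I am assuming the number before "ms" is the time since the node process started.

```python
import re

# Matches node GC trace lines such as
#   [28400:0x65a5640] 1476002597 ms: Scavenge 528.1 (547.1) -> 523.5 (552.8) MB, ...
# and accepts either "->" or the "→" character that some renderers substitute.
SCAVENGE = re.compile(
    r"\[(?P<pid>\d+):0x[0-9a-f]+\]\s+(?P<ms>\d+) ms: Scavenge "
    r"(?P<before>[\d.]+) \([\d.]+\) (?:->|→) (?P<after>[\d.]+) \([\d.]+\) MB"
)


def heap_samples(log_text):
    """Return (pid, time_ms, heap_mb_after_gc) for every Scavenge line found."""
    return [
        (int(m["pid"]), int(m["ms"]), float(m["after"]))
        for m in SCAVENGE.finditer(log_text)
    ]


def growth_mb_per_s(samples):
    """Heap growth rate between the first and last sample, in MB per second."""
    (_, t0, h0), (_, t1, h1) = samples[0], samples[-1]
    return (h1 - h0) / ((t1 - t0) / 1000.0)


# Excerpt copied from the first crash in the log above.
SAMPLE_LOG = """
[28400:0x65a5640] 1476002597 ms: Scavenge 528.1 (547.1) -> 523.5 (552.8) MB, 65.8 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure
[28400:0x65a5640] 1476002732 ms: Scavenge 534.1 (553.1) -> 529.3 (558.8) MB, 69.7 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure
[28400:0x65a5640] 1476002870 ms: Scavenge 540.2 (559.3) -> 535.5 (564.8) MB, 71.8 / 0.0 ms (average mu = 0.998, current mu = 0.949) allocation failure
"""

if __name__ == "__main__":
    samples = heap_samples(SAMPLE_LOG)
    for pid, ms, heap in samples:
        print(f"pid {pid}: {heap:.1f} MB after GC at {ms} ms")
    print(f"growth: {growth_mb_per_s(samples):.1f} MB/s")
```

Running the same functions over the whole app_api log would show whether the heap climbs steadily over days or only jumps shortly before each "out of memory" abort.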