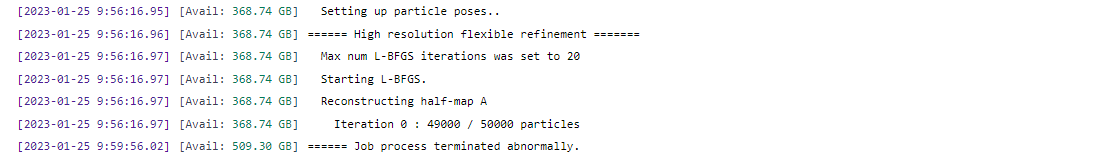

My 3D Flex Reconstruction job keeps stalling at iteration 0.

It shows “Job process terminated abnormally” and stalls just before iteration 0 completes.

I did try reducing the number of particles used for the reconstruction, but it still fails at this step.

It would be great if somebody could help me out.

Welcome to the forum @Justus. Please post any error messages (like those in your screenshot) as text.

Does the job.log file inside the job’s directory contain any additional hints about the job’s failure?

This is probably the same issue that @Flow and I describe here: 3D Flex Reconstruc fails at iteration 0 - #5 by Flow. There is nothing useful in the logs.

Hi @bsobol,

Could you please trigger this bug and then have a look at dmesg? Depending on your OS distro and version, there may be some additional information about why the crash happened.

Specifically, I’d be interested in a dmesg entry that looks vaguely like this:

[76771.355512] python[39968]: segfault at 854 ip 00007f66deb53b65 sp 00007f66beffb1b0 error 4 in blobio_native.so[7f66deb4a000+2f000]

[76771.355533] Code: 00 00 0f 29 9c 24 90 00 00 00 0f 29 a4 24 a0 00 00 00 0f 29 ac 24 b0 00 00 00 0f 29 b4 24 c0 00 00 00 0f 29 bc 24 d0 00 00 00 <48> 63 bb 54 08 00 00 4c 8d 63 54 48 8d 84 24 10 01 00 00 c7 04 24

Hi @wtempel and @hsnyder

My job log file looks like this…

================= CRYOSPARCW ======= 2023-03-07 17:10:04.817857 =========

Project P4 Job J583

Master csir Port 39002

========= monitor process now starting main process at 2023-03-07 17:10:04.817899

MAINPROCESS PID 58221

MAIN PID 58221

flex_refine.run_highres cryosparc_compute.jobs.jobregister

========= monitor process now waiting for main process

========= sending heartbeat at 2023-03-07 17:10:18.206409

========= sending heartbeat at 2023-03-07 17:10:28.250628

========= sending heartbeat at 2023-03-07 17:10:38.269944

========= sending heartbeat at 2023-03-07 17:10:48.289628

========= sending heartbeat at 2023-03-07 17:10:58.309331

========= sending heartbeat at 2023-03-07 17:11:08.328144

========= sending heartbeat at 2023-03-07 17:11:18.347817

========= sending heartbeat at 2023-03-07 17:11:28.366976

========= sending heartbeat at 2023-03-07 17:11:38.386573

========= sending heartbeat at 2023-03-07 17:11:48.406711

========= sending heartbeat at 2023-03-07 17:11:58.426201

========= sending heartbeat at 2023-03-07 17:12:08.445632

========= sending heartbeat at 2023-03-07 17:12:18.465494

========= sending heartbeat at 2023-03-07 17:12:28.483415

========= sending heartbeat at 2023-03-07 17:12:38.502939

========= sending heartbeat at 2023-03-07 17:12:48.522522

========= sending heartbeat at 2023-03-07 17:12:58.541806

========= sending heartbeat at 2023-03-07 17:13:08.561357

========= sending heartbeat at 2023-03-07 17:13:18.580471

========= sending heartbeat at 2023-03-07 17:13:28.599939

========= sending heartbeat at 2023-03-07 17:13:38.619642

========= sending heartbeat at 2023-03-07 17:13:48.638557

========= sending heartbeat at 2023-03-07 17:13:58.658072

========= sending heartbeat at 2023-03-07 17:14:08.677265

========= sending heartbeat at 2023-03-07 17:14:18.696897

========= sending heartbeat at 2023-03-07 17:14:28.716216

========= sending heartbeat at 2023-03-07 17:14:38.735152

========= sending heartbeat at 2023-03-07 17:14:48.754832

========= sending heartbeat at 2023-03-07 17:14:58.774575

========= sending heartbeat at 2023-03-07 17:15:08.792497

========= sending heartbeat at 2023-03-07 17:15:18.811966

========= sending heartbeat at 2023-03-07 17:15:28.831184

========= sending heartbeat at 2023-03-07 17:15:38.850861

========= sending heartbeat at 2023-03-07 17:15:48.870341

========= sending heartbeat at 2023-03-07 17:15:58.888923

========= sending heartbeat at 2023-03-07 17:16:08.908471

========= sending heartbeat at 2023-03-07 17:16:18.927831

========= sending heartbeat at 2023-03-07 17:16:28.949852

========= sending heartbeat at 2023-03-07 17:16:38.969392

========= sending heartbeat at 2023-03-07 17:16:48.987903

========= sending heartbeat at 2023-03-07 17:16:59.007366

========= sending heartbeat at 2023-03-07 17:17:09.026713

========= sending heartbeat at 2023-03-07 17:17:19.045956

========= sending heartbeat at 2023-03-07 17:17:29.065569

========= sending heartbeat at 2023-03-07 17:17:39.084251

========= sending heartbeat at 2023-03-07 17:17:49.103967

========= sending heartbeat at 2023-03-07 17:17:59.127503

========= sending heartbeat at 2023-03-07 17:18:09.146900

========= sending heartbeat at 2023-03-07 17:18:19.167214

========= sending heartbeat at 2023-03-07 17:18:29.186675

========= sending heartbeat at 2023-03-07 17:18:39.204050

========= sending heartbeat at 2023-03-07 17:18:49.222803

========= sending heartbeat at 2023-03-07 17:18:59.241755

========= sending heartbeat at 2023-03-07 17:19:09.260283

========= sending heartbeat at 2023-03-07 17:19:19.279170

========= sending heartbeat at 2023-03-07 17:19:29.298099

========= sending heartbeat at 2023-03-07 17:19:39.316821

========= sending heartbeat at 2023-03-07 17:19:49.336750

========= sending heartbeat at 2023-03-07 17:19:59.357680

========= sending heartbeat at 2023-03-07 17:20:09.377122

========= sending heartbeat at 2023-03-07 17:20:19.398057

========= sending heartbeat at 2023-03-07 17:20:29.410627

========= sending heartbeat at 2023-03-07 17:20:39.430742

========= sending heartbeat at 2023-03-07 17:20:49.450155

========= sending heartbeat at 2023-03-07 17:20:59.469832

========= sending heartbeat at 2023-03-07 17:21:09.489566

========= sending heartbeat at 2023-03-07 17:21:19.511450

========= sending heartbeat at 2023-03-07 17:21:29.530981

========= sending heartbeat at 2023-03-07 17:21:39.550584

========= sending heartbeat at 2023-03-07 17:21:49.560344

========= sending heartbeat at 2023-03-07 17:21:59.578905

========= sending heartbeat at 2023-03-07 17:22:09.598467

========= sending heartbeat at 2023-03-07 17:22:19.617388

========= sending heartbeat at 2023-03-07 17:22:29.638622

========= sending heartbeat at 2023-03-07 17:22:39.657272

========= sending heartbeat at 2023-03-07 17:22:49.677209

========= sending heartbeat at 2023-03-07 17:22:59.697509

========= sending heartbeat at 2023-03-07 17:23:09.718399

========= sending heartbeat at 2023-03-07 17:23:19.736916

========= sending heartbeat at 2023-03-07 17:23:29.767839

========= sending heartbeat at 2023-03-07 17:23:39.787753

========= sending heartbeat at 2023-03-07 17:23:49.808114

========= sending heartbeat at 2023-03-07 17:23:59.834213

========= sending heartbeat at 2023-03-07 17:24:09.852565

========= sending heartbeat at 2023-03-07 17:24:19.871538

========= sending heartbeat at 2023-03-07 17:24:29.899195

========= sending heartbeat at 2023-03-07 17:24:39.917097

========= sending heartbeat at 2023-03-07 17:24:49.936630

========= sending heartbeat at 2023-03-07 17:24:59.956419

========= sending heartbeat at 2023-03-07 17:25:10.008030

========= sending heartbeat at 2023-03-07 17:25:20.027601

========= sending heartbeat at 2023-03-07 17:25:30.058146

========= sending heartbeat at 2023-03-07 17:25:40.077335

========= sending heartbeat at 2023-03-07 17:25:50.114601

========= sending heartbeat at 2023-03-07 17:26:00.134531

========= sending heartbeat at 2023-03-07 17:26:10.191224

========= sending heartbeat at 2023-03-07 17:26:20.212472

========= sending heartbeat at 2023-03-07 17:26:30.247825

========= sending heartbeat at 2023-03-07 17:26:40.267190

========= sending heartbeat at 2023-03-07 17:26:50.299234

========= sending heartbeat at 2023-03-07 17:27:00.310700

========= sending heartbeat at 2023-03-07 17:27:10.348891

========= sending heartbeat at 2023-03-07 17:27:20.369498

========= sending heartbeat at 2023-03-07 17:27:30.405754

========= sending heartbeat at 2023-03-07 17:27:40.425335

========= sending heartbeat at 2023-03-07 17:27:50.455254

========= sending heartbeat at 2023-03-07 17:28:00.473219

========= sending heartbeat at 2023-03-07 17:28:10.491421

========= sending heartbeat at 2023-03-07 17:28:20.510282

========= sending heartbeat at 2023-03-07 17:28:30.544708

========= sending heartbeat at 2023-03-07 17:28:40.564607

========= sending heartbeat at 2023-03-07 17:28:50.596674

========= sending heartbeat at 2023-03-07 17:29:00.615914

========= sending heartbeat at 2023-03-07 17:29:10.645354

========= sending heartbeat at 2023-03-07 17:29:20.664575

========= sending heartbeat at 2023-03-07 17:29:30.684000

========= sending heartbeat at 2023-03-07 17:29:40.703416

========= sending heartbeat at 2023-03-07 17:29:50.722337

========= sending heartbeat at 2023-03-07 17:30:00.741321

========= sending heartbeat at 2023-03-07 17:30:10.758570

========= sending heartbeat at 2023-03-07 17:30:20.776725

========= sending heartbeat at 2023-03-07 17:30:30.796285

========= sending heartbeat at 2023-03-07 17:30:40.815539

========= sending heartbeat at 2023-03-07 17:30:50.856850

========= sending heartbeat at 2023-03-07 17:31:00.878079

Running job J583 of type flex_highres

Running job on hostname %s csir

Allocated Resources : {‘fixed’: {‘SSD’: False}, ‘hostname’: ‘csir’, ‘lane’: ‘default’, ‘lane_type’: ‘node’, ‘license’: True, ‘licenses_acquired’: 1, ‘slots’: {‘CPU’: [0, 1, 2, 3], ‘GPU’: [0], ‘RAM’: [0, 1, 2, 3, 4, 5, 6, 7]}, ‘target’: {‘cache_path’: ‘/home/scratch’, ‘cache_quota_mb’: None, ‘cache_reserve_mb’: 10000, ‘desc’: None, ‘gpus’: [{‘id’: 0, ‘mem’: 25434324992, ‘name’: ‘NVIDIA RTX A5000’}, {‘id’: 1, ‘mem’: 25434324992, ‘name’: ‘NVIDIA RTX A5000’}, {‘id’: 2, ‘mem’: 25434324992, ‘name’: ‘NVIDIA RTX A5000’}, {‘id’: 3, ‘mem’: 25434324992, ‘name’: ‘NVIDIA RTX A5000’}], ‘hostname’: ‘csir’, ‘lane’: ‘default’, ‘monitor_port’: None, ‘name’: ‘csir’, ‘resource_fixed’: {‘SSD’: True}, ‘resource_slots’: {‘CPU’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111], ‘GPU’: [0, 1, 2, 3], ‘RAM’: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63]}, ‘ssh_str’: ‘cryosparcuser@csir’, ‘title’: ‘Worker node csir’, ‘type’: ‘node’, ‘worker_bin_path’: ‘/home/cryosparcuser/software/cryosparc/cryosparc_worker/bin/cryosparcw’}}

========= sending heartbeat at 2023-03-07 17:31:10.897306

========= sending heartbeat at 2023-03-07 17:31:20.915110

========= sending heartbeat at 2023-03-07 17:31:30.935954

========= sending heartbeat at 2023-03-07 17:31:40.953789

========= sending heartbeat at 2023-03-07 17:31:50.970527

========= sending heartbeat at 2023-03-07 17:32:00.990508

========= sending heartbeat at 2023-03-07 17:32:11.009385

========= sending heartbeat at 2023-03-07 17:32:21.028438

========= sending heartbeat at 2023-03-07 17:32:31.043106

========= sending heartbeat at 2023-03-07 17:32:41.062436

========= sending heartbeat at 2023-03-07 17:32:51.081353

========= sending heartbeat at 2023-03-07 17:33:01.101028

========= sending heartbeat at 2023-03-07 17:33:11.111506

========= sending heartbeat at 2023-03-07 17:33:21.130824

========= sending heartbeat at 2023-03-07 17:33:31.149827

========= sending heartbeat at 2023-03-07 17:33:41.168829

========= sending heartbeat at 2023-03-07 17:33:51.188067

========= sending heartbeat at 2023-03-07 17:34:01.206616

========= sending heartbeat at 2023-03-07 17:34:11.265377

========= sending heartbeat at 2023-03-07 17:34:21.337108

========= sending heartbeat at 2023-03-07 17:34:31.356937

========= sending heartbeat at 2023-03-07 17:34:41.376524

========= sending heartbeat at 2023-03-07 17:34:51.395858

========= sending heartbeat at 2023-03-07 17:35:01.415576

========= sending heartbeat at 2023-03-07 17:35:11.435576

========= sending heartbeat at 2023-03-07 17:35:21.459402

========= sending heartbeat at 2023-03-07 17:35:31.477519

========= sending heartbeat at 2023-03-07 17:35:41.496825

========= sending heartbeat at 2023-03-07 17:35:51.515903

========= sending heartbeat at 2023-03-07 17:36:01.534551

========= sending heartbeat at 2023-03-07 17:36:11.618282

========= sending heartbeat at 2023-03-07 17:36:21.634625

========= sending heartbeat at 2023-03-07 17:36:31.680260

========= sending heartbeat at 2023-03-07 17:36:41.699473

========= sending heartbeat at 2023-03-07 17:36:51.719056

========= sending heartbeat at 2023-03-07 17:37:01.737165

========= sending heartbeat at 2023-03-07 17:37:11.756474

========= sending heartbeat at 2023-03-07 17:37:21.775084

========= sending heartbeat at 2023-03-07 17:37:31.794470

========= sending heartbeat at 2023-03-07 17:37:41.813419

========= main process now complete at 2023-03-07 17:37:48.711001.

========= monitor process now complete at 2023-03-07 17:37:48.920223.

Hi @wtempel and @hsnyder

Running dmesg gives me the following “python” and “Code” lines:

[16290.813729] python[58221]: segfault at 7eec718d7e70 ip 00007f71f9b74ff8 sp 00007ffdb4ff73b0 error 6 in _lbfgsb.cpython-38-x86_64-linux-gnu.so[7f71f9b65000+17000]

[16290.813744] Code: 48 8b bc 24 c8 00 00 00 8b 07 66 44 0f 28 da 4c 8b 4c 24 08 4c 8b 2c 24 66 44 0f 28 d2 f2 44 0f 59 da 66 44 0f 57 d7 49 63 39 45 0f 11 54 cd f8 f2 41 0f 5c cb 85 ff 0f 8e 04 02 00 00 48 8b
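The segfault line itself can be decoded. Below is a minimal Python sketch, assuming the x86-64 Linux kernel format seen in this thread: the number after "error" is the page-fault error code (bit 0 set means protection violation, clear means page not present; bit 1 means write rather than read; bit 2 means the fault happened in user mode), and the bracketed pair after the library name is its load base and mapping size. Segfault and parse_segfault are hypothetical helper names, not part of CryoSPARC.

```python
import re
from dataclasses import dataclass

# Kernel segfault line, e.g.
# "python[58221]: segfault at ADDR ip IP sp SP error 6 in lib.so[BASE+SIZE]"
SEGFAULT_RE = re.compile(
    r"(?P<comm>\S+?)\[(?P<pid>\d+)\]: segfault at (?P<addr>[0-9a-f]+) "
    r"ip (?P<ip>[0-9a-f]+) sp (?P<sp>[0-9a-f]+) error (?P<err>\d+) "
    r"in (?P<lib>\S+?)\[(?P<base>[0-9a-f]+)\+(?P<size>[0-9a-f]+)\]"
)


@dataclass
class Segfault:
    pid: int
    library: str
    offset: int      # instruction pointer relative to the library's load base
    access: str      # "write" or "read" (bit 1 of the error code)
    cause: str       # "protection violation" or "page not present" (bit 0)
    user_mode: bool  # bit 2 of the error code


def parse_segfault(line: str) -> Segfault:
    m = SEGFAULT_RE.search(line)
    if m is None:
        raise ValueError(f"not a kernel segfault line: {line!r}")
    err = int(m["err"])
    return Segfault(
        pid=int(m["pid"]),
        library=m["lib"],
        offset=int(m["ip"], 16) - int(m["base"], 16),
        access="write" if err & 0b010 else "read",
        cause="protection violation" if err & 0b001 else "page not present",
        user_mode=bool(err & 0b100),
    )


if __name__ == "__main__":
    line = ("[16290.813729] python[58221]: segfault at 7eec718d7e70 ip 00007f71f9b74ff8 "
            "sp 00007ffdb4ff73b0 error 6 in "
            "_lbfgsb.cpython-38-x86_64-linux-gnu.so[7f71f9b65000+17000]")
    fault = parse_segfault(line)
    print(fault.library, hex(fault.offset), fault.access, fault.cause, fault.user_mode)
```

For the line above, error 6 decodes to a user-mode write to a page that is not mapped, at offset 0xfff8 into _lbfgsb.cpython-38-x86_64-linux-gnu.so (the module name matches SciPy's compiled L-BFGS-B extension). The _lbfgsb segfault posted later in this thread (PID 56544) decodes to the same offset, which suggests, but does not prove, that both crashes hit the same instruction.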

Dear @wtempel and @hsnyder

Please suggest any way to fix this so that the 3D Flex job doesn’t terminate abruptly.

P.S. I have already updated CryoSPARC to v4.2.0 and am still getting the same error.

Thank you

Justus

We get the same error on v4.2.1+230403 (latest as of today). We are running on CentOS 7.9 (3.10.0-1160.83.1.el7) with slurm 22.05.8. The node running the job is a 64 physical core (AMD EPYC 7452) node with 1TB RAM and 2 x 40GB A100 GPUs. We are running NVIDIA driver 530.30.02 and have CryoSPARC configured to use CUDA 11.8.

abrt reason:

python3.8 killed by SIGSEGV

dmesg:

python[56544]: segfault at 2bf658007270 ip 00002b73180afff8 sp 00007fff351dad60 error 6 in _lbfgsb.cpython-38-x86_64-linux-gnu.so[2b73180a0000+17000]

job.log

================= CRYOSPARCW ======= 2023-04-13 11:46:08.204537 =========

Project P28 Job J1032

Master ??????????? Port 39002

===========================================================================

========= monitor process now starting main process at 2023-04-13 11:46:08.204591

MAINPROCESS PID 56544

MAIN PID 56544

flex_refine.run_highres cryosparc_compute.jobs.jobregister

========= monitor process now waiting for main process

========= sending heartbeat at 2023-04-13 11:46:22.674796

========= sending heartbeat at 2023-04-13 11:46:32.700129

========= sending heartbeat at 2023-04-13 11:46:42.718306

========= sending heartbeat at 2023-04-13 11:46:52.735723

========= sending heartbeat at 2023-04-13 11:47:02.759967

========= sending heartbeat at 2023-04-13 11:47:12.776408

========= sending heartbeat at 2023-04-13 11:47:22.793579

========= sending heartbeat at 2023-04-13 11:47:32.811300

========= sending heartbeat at 2023-04-13 11:47:42.841504

========= sending heartbeat at 2023-04-13 11:47:53.023129

========= sending heartbeat at 2023-04-13 11:48:03.096584

========= sending heartbeat at 2023-04-13 11:48:13.113060

========= sending heartbeat at 2023-04-13 11:48:23.139763

========= sending heartbeat at 2023-04-13 11:48:33.196175

========= sending heartbeat at 2023-04-13 11:48:43.212891

========= sending heartbeat at 2023-04-13 11:48:53.230247

========= sending heartbeat at 2023-04-13 11:49:03.246836

========= sending heartbeat at 2023-04-13 11:49:13.263209

========= sending heartbeat at 2023-04-13 11:49:23.279980

========= sending heartbeat at 2023-04-13 11:49:33.295508

========= sending heartbeat at 2023-04-13 11:49:43.312774

========= sending heartbeat at 2023-04-13 11:49:53.381856

========= sending heartbeat at 2023-04-13 11:50:03.400003

========= sending heartbeat at 2023-04-13 11:50:13.417564

========= sending heartbeat at 2023-04-13 11:50:23.433831

========= sending heartbeat at 2023-04-13 11:50:33.450860

========= sending heartbeat at 2023-04-13 11:50:43.467245

========= sending heartbeat at 2023-04-13 11:50:53.494777

========= sending heartbeat at 2023-04-13 11:51:03.510514

========= sending heartbeat at 2023-04-13 11:51:13.594883

========= sending heartbeat at 2023-04-13 11:51:23.620676

========= sending heartbeat at 2023-04-13 11:51:33.638073

========= sending heartbeat at 2023-04-13 11:51:43.654994

========= sending heartbeat at 2023-04-13 11:51:53.671701

========= sending heartbeat at 2023-04-13 11:52:03.689341

========= sending heartbeat at 2023-04-13 11:52:13.720759

========= sending heartbeat at 2023-04-13 11:52:23.736615

========= sending heartbeat at 2023-04-13 11:52:33.772518

========= sending heartbeat at 2023-04-13 11:52:43.789690

========= sending heartbeat at 2023-04-13 11:52:53.805636

========= sending heartbeat at 2023-04-13 11:53:03.823106

========= sending heartbeat at 2023-04-13 11:53:13.842358

========= sending heartbeat at 2023-04-13 11:53:23.860117

========= sending heartbeat at 2023-04-13 11:53:33.874605

========= sending heartbeat at 2023-04-13 11:53:43.892513

========= sending heartbeat at 2023-04-13 11:53:53.910756

========= sending heartbeat at 2023-04-13 11:54:03.928158

========= sending heartbeat at 2023-04-13 11:54:13.945392

========= sending heartbeat at 2023-04-13 11:54:23.961885

========= sending heartbeat at 2023-04-13 11:54:34.013508

========= sending heartbeat at 2023-04-13 11:54:44.030040

========= sending heartbeat at 2023-04-13 11:54:54.048886

========= sending heartbeat at 2023-04-13 11:55:04.100822

========= sending heartbeat at 2023-04-13 11:55:14.116350

========= sending heartbeat at 2023-04-13 11:55:24.134546

***************************************************************

Running job J1032 of type flex_highres

Running job on hostname %s bs2

Allocated Resources : {'fixed': {'SSD': False}, 'hostname': 'bs2', 'lane': 'bs2', 'lane_type': 'cluster', 'license': True, 'licenses_acquired': 1, 'slots': {'CPU': [0, 1, 2, 3], 'GPU': [0], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7]}, 'target': {'cache_path': '?????????/cryosparc-scratch', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'custom_var_names': ['ram_multiplier', 'command', 'cpu_multiplier'], 'custom_vars': {}, 'desc': None, 'hostname': 'bs2', 'lane': 'bs2', 'name': 'bs2', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qstat_code_cmd_tpl': None, 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/usr/bin/env bash\n#### cryoSPARC cluster submission script template for SLURM\n## Available built-in variables:\n## {{ run_cmd }} - the complete command string to run the job\n## {{ num_cpu }} - the number of CPUs needed\n## {{ num_gpu }} - the number of GPUs needed. \n## Note: the code will use this many GPUs starting from dev id 0\n## the cluster scheduler or this script have the responsibility\n## of setting CUDA_VISIBLE_DEVICES so that the job code ends up\n## using the correct cluster-allocated GPUs.\n## {{ ram_gb }} - the amount of RAM needed in GB\n## {{ job_dir_abs }} - absolute path to the job directory\n## {{ project_dir_abs }} - absolute path to the project dir\n## {{ job_log_path_abs }} - absolute path to the log file for the job\n## {{ worker_bin_path }} - absolute path to the cryosparc worker command\n## {{ run_args }} - arguments to be passed to cryosparcw run\n## {{ project_uid }} - uid of the project\n## {{ job_uid }} - uid of the job\n## {{ job_creator }} - name of the user that created the job (may contain spaces)\n## {{ cryosparc_username }} - cryosparc username of the user that created the job (usually an email)\n## {{ job_type }} - CryoSPARC job type\n##\n## Available custom variables\n## {{ ram_multiplier }} Multiple the job types ram_gb by this\n## {{ 
cpu_multiplier }} Multiple the job types num_cpu by this\n##\n## What follows is a simple SLURM script:\n\n#SBATCH --job-name "cryosparcprd_{{ project_uid }}_{{ job_uid }}_{{ job_creator }}"\n#SBATCH --nodes=1\n#SBATCH --ntasks-per-node=1\n#SBATCH --gres=gpu:{%if num_gpu > 2%}2{%else%}{{ num_gpu }}{%endif%}\n#SBATCH --constraint="a100"\n#SBATCH -p gpu,int\n#SBATCH --cpus-per-task={{ (num_cpu|float * cpu_multiplier|float)|int }}\n#SBATCH --mem={{ (ram_gb|float * ram_multiplier|float)|int }}G\n#SBATCH --output=slurm_logs/%x-{{ cryosparc_username }}-%N-%j-stdout.log\n#SBATCH --error=slurm_logs/%x-{{ cryosparc_username }}-%N-%j-stderr.log\n\nsrun {{ run_cmd }}\n\n\n', 'send_cmd_tpl': '{{ command }}', 'title': 'bs2', 'tpl_vars': ['cluster_job_id', 'worker_bin_path', 'num_gpu', 'job_creator', 'num_cpu', 'job_log_path_abs', 'job_uid', 'project_uid', 'ram_gb', 'ram_multiplier', 'run_cmd', 'project_dir_abs', 'command', 'run_args', 'job_type', 'cryosparc_username', 'cpu_multiplier', 'job_dir_abs'], 'type': 'cluster', 'worker_bin_path': '???????/Software/CryoSPARCv2/cryosparc2_worker/bin/cryosparcw'}}

========= sending heartbeat at 2023-04-13 11:55:34.152144

========= sending heartbeat at 2023-04-13 11:55:44.169943

========= sending heartbeat at 2023-04-13 11:55:54.186681

========= sending heartbeat at 2023-04-13 11:56:04.205055

========= sending heartbeat at 2023-04-13 11:56:14.222398

========= sending heartbeat at 2023-04-13 11:56:24.240349

========= sending heartbeat at 2023-04-13 11:56:34.256364

========= sending heartbeat at 2023-04-13 11:56:44.273065

========= sending heartbeat at 2023-04-13 11:56:54.288502

========= sending heartbeat at 2023-04-13 11:57:04.370883

========= sending heartbeat at 2023-04-13 11:57:14.388254

========= sending heartbeat at 2023-04-13 11:57:24.405597

========= sending heartbeat at 2023-04-13 11:57:34.416041

========= sending heartbeat at 2023-04-13 11:57:44.474340

========= sending heartbeat at 2023-04-13 11:57:54.492260

========= sending heartbeat at 2023-04-13 11:58:04.509395

========= sending heartbeat at 2023-04-13 11:58:14.527337

========= sending heartbeat at 2023-04-13 11:58:24.545284

========= sending heartbeat at 2023-04-13 11:58:34.562547

========= sending heartbeat at 2023-04-13 11:58:44.579647

========= sending heartbeat at 2023-04-13 11:58:54.664636

========= sending heartbeat at 2023-04-13 11:59:04.682558

========= sending heartbeat at 2023-04-13 11:59:14.699935

========= sending heartbeat at 2023-04-13 11:59:24.717139

========= sending heartbeat at 2023-04-13 11:59:34.735425

========= sending heartbeat at 2023-04-13 11:59:44.753497

========= sending heartbeat at 2023-04-13 11:59:54.769506

========= sending heartbeat at 2023-04-13 12:00:04.788649

========= sending heartbeat at 2023-04-13 12:00:14.854318

========= sending heartbeat at 2023-04-13 12:00:24.862506

========= sending heartbeat at 2023-04-13 12:00:34.879693

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 134217728 M = 10

========= sending heartbeat at 2023-04-13 12:00:44.896620

This problem is unconstrained.

At X0 0 variables are exactly at the bounds

At iterate 0 f= 4.30635D+10 |proj g|= 1.34557D+04

========= sending heartbeat at 2023-04-13 12:00:54.927548

========= sending heartbeat at 2023-04-13 12:01:04.945495

========= sending heartbeat at 2023-04-13 12:01:14.960488

========= main process now complete at 2023-04-13 12:01:21.516402.

========= monitor process now complete at 2023-04-13 12:01:21.546583.
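The L-BFGS-B banner in this log allows a quick size check. A short Python calculation, assuming the optimizer works in float64 (the reported machine precision of 2.220D-16 is the float64 epsilon) and using the standard property of limited-memory BFGS that it keeps M pairs of correction vectors, each of length N:

```python
N = 134_217_728  # "N =" from the L-BFGS-B banner above
M = 10           # "M =" from the same banner

box = round(N ** (1 / 3))
assert box ** 3 == N, "N is not a perfect cube"

bytes_per_vector = N * 8                  # one float64 vector of length N
history_bytes = 2 * M * bytes_per_vector  # M correction pairs (s, y)

print(f"N = {box}^3")
print(f"one float64 vector: {bytes_per_vector / 2**30:.1f} GiB")
print(f"L-BFGS history (2*M vectors): {history_bytes / 2**30:.1f} GiB")
```

N is exactly 512³, which lines up with the 512-pixel box reported to fail later in this thread; each length-N float64 vector takes 1 GiB, so the correction history alone is about 20 GiB. Reading N as one variable per voxel is an inference from the numbers, not something the log states.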

Event Log

[CPU: 4.84 GB] ====== Load particle data =======

[CPU: 4.84 GB] Reading in all particle data...

[CPU: 4.84 GB] Reading file 67 of 67 (J1027/J1027_particles_fullres_batch_00066.mrc)

[CPU: 341.90 GB] Reading in all particle CTF data...

[CPU: 341.90 GB] Reading file 67 of 67 (J1027/J1027_particles_fullres_batch_00066_ctf.mrc)

[CPU: 504.65 GB] Parameter "Force re-do GS split" was off. Using input split..

[CPU: 504.65 GB] Split A contains 166000 particles

[CPU: 504.65 GB] Split B contains 166000 particles

[CPU: 504.65 GB] Setting up particle poses..

[CPU: 504.65 GB] ====== High resolution flexible refinement =======

[CPU: 504.65 GB] Max num L-BFGS iterations was set to 20

[CPU: 504.65 GB] Starting L-BFGS.

[CPU: 504.65 GB] Reconstructing half-map A

[CPU: 504.65 GB] Iteration 0 : 165000 / 166000 particles

[CPU: 174.7 MB] ====== Job process terminated abnormally.
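The event log shows resident memory rising from 4.84 GB to 341.90 GB while the particle images are read. A rough Python estimate, assuming a 512-pixel box (as the banner's N = 512³ suggests), particle images held as float32 (4 bytes per pixel), and ignoring CTF data and other overhead; particle_stack_gib is a hypothetical helper:

```python
BYTES_PER_PIXEL = 4  # ASSUMPTION: images held in memory as float32


def particle_stack_gib(n_particles: int, box: int) -> float:
    """Approximate memory for n_particles images of box x box float32 pixels, in GiB."""
    return n_particles * box * box * BYTES_PER_PIXEL / 2**30


n_particles = 166_000 + 166_000  # split A + split B, from the event log
for box in (360, 512):
    print(f"box {box}: {particle_stack_gib(n_particles, box):.1f} GiB")
```

For 332,000 particles at box 512 this gives about 324 GiB, comparable to the ~337 GB increase in the log, and the estimate scales with the square of the box size, so a 360-pixel box needs roughly half as much.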

ulimits

cat limits

| Limit | Soft Limit | Hard Limit | Units |
|---|---|---|---|
| Max cpu time | unlimited | unlimited | seconds |
| Max file size | unlimited | unlimited | bytes |
| Max data size | unlimited | unlimited | bytes |
| Max stack size | unlimited | unlimited | bytes |
| Max core file size | 0 | unlimited | bytes |
| Max resident set | 962072674304 | 962072674304 | bytes |
| Max processes | 16384 | 4125793 | processes |
| Max open files | 131072 | 131072 | files |
| Max locked memory | unlimited | unlimited | bytes |
| Max address space | unlimited | unlimited | bytes |
| Max file locks | unlimited | unlimited | locks |
| Max pending signals | 4125793 | 4125793 | signals |
| Max msgqueue size | 819200 | 819200 | bytes |
| Max nice priority | 0 | 0 | |
| Max realtime priority | 0 | 0 | |
| Max realtime timeout | unlimited | unlimited | us |
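The same limits can be read from inside a Python process with the standard-library resource module (Unix only), which helps confirm what a process launched under Slurm actually sees, for example the soft core-file-size limit of 0 in the table above. A minimal sketch; the LIMITS mapping is an illustrative selection of rows, not an exhaustive one:

```python
import resource

# A few rows of the limits table above and the matching resource constants.
# Note: RLIMIT_RSS has had no effect since Linux 2.4.30, so "Max resident set"
# is informational only on modern kernels.
LIMITS = {
    "Max stack size": resource.RLIMIT_STACK,
    "Max core file size": resource.RLIMIT_CORE,
    "Max resident set": resource.RLIMIT_RSS,
    "Max open files": resource.RLIMIT_NOFILE,
    "Max address space": resource.RLIMIT_AS,
}


def fmt(value: int) -> str:
    return "unlimited" if value == resource.RLIM_INFINITY else str(value)


def current_limits() -> dict:
    """Return {row name: (soft, hard)} as seen by the calling process."""
    return {name: resource.getrlimit(res) for name, res in LIMITS.items()}


if __name__ == "__main__":
    for name, (soft, hard) in current_limits().items():
        print(f"{name:<20} {fmt(soft):>14} {fmt(hard):>14}")
```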

seff 936631

Job ID: 936631

Cluster: bs2

User/Group: cryo1/cryo1

State: COMPLETED (exit code 0)

Nodes: 1

Cores per node: 64

CPU Utilized: 00:14:14

CPU Efficiency: 1.45% of 16:19:12 core-walltime

Job Wall-clock time: 00:15:18

Memory Utilized: 510.20 GB

Memory Efficiency: 56.94% of 896.00 GB
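The seff percentages follow directly from the raw numbers, which makes it easy to see what they mean here. A small Python check; hms_to_seconds is a hypothetical helper:

```python
def hms_to_seconds(hms: str) -> int:
    """Convert an HH:MM:SS string (as printed by seff) to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


cores = 64                                # "Cores per node: 64"
wall = hms_to_seconds("00:15:18")         # "Job Wall-clock time"
cpu_used = hms_to_seconds("00:14:14")     # "CPU Utilized"
core_walltime = cores * wall              # 58752 s, i.e. 16:19:12
cpu_efficiency = 100 * cpu_used / core_walltime
mem_efficiency = 100 * 510.20 / 896.00    # memory utilized / memory allocated
busy_cores = cpu_used / wall              # average number of busy cores

print(f"core-walltime: {core_walltime} s")
print(f"CPU efficiency: {cpu_efficiency:.2f}%")     # 1.45%
print(f"memory efficiency: {mem_efficiency:.2f}%")  # 56.94%
print(f"average busy cores: {busy_cores:.2f}")
```

The core-walltime is 64 cores × 15:18 = 16:19:12, and 14:14 of CPU time over a 15:18 wall clock averages out to slightly less than one busy core, so most of the 64 allocated cores sat idle.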

Hi @Justus and @andrew-niaid ,

Sorry for the slow response on my part. Does this error occur on every dataset, or only on some datasets? We have looked into this issue but haven’t been able to reproduce it (we also use the same BFGS algorithm in many other places in CryoSPARC), which makes me wonder whether it is triggered by some property of the data.

—Harris

Hi @hsnyder,

Here is what my user (@hansenbry) is reporting:

“With the smaller box size it’s working. Haven’t gone into depth testing, but 512 regularly fails and I’ve been able to have success at up to 360.”

Thanks,

-Andrew

@hsnyder that’s correct. I’ve seen it on multiple datasets too, so if it were a property of the data, it would be common to our collection strategy, which would also be good to know. Thanks for your help.

Bryan
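One hypothesis worth checking against these box-size observations (it is not confirmed anywhere in this thread): the Fortran L-BFGS-B code typically indexes its work array with default 32-bit integers, so a work array longer than 2^31 - 1 elements could overflow an index. The sketch below assumes the work-array length formula 2mn + 5n + 11m² + 8m used by older SciPy wrappers, m = 10 from the job log, and n = box³ (as N = 512³ in the job log suggests); the function names are hypothetical.

```python
INT32_MAX = 2**31 - 1
M = 10  # "M = 10" in the L-BFGS-B banner of the job.log


def lbfgsb_workspace_len(n: int, m: int = M) -> int:
    # ASSUMPTION: float64 work-array length that older SciPy versions
    # allocate for the Fortran L-BFGS-B routine (2mn + 5n + 11m^2 + 8m).
    return 2 * m * n + 5 * n + 11 * m * m + 8 * m


def largest_box_fitting_int32() -> int:
    """Largest cubic box size whose workspace length still fits in int32."""
    box = 1
    while lbfgsb_workspace_len((box + 1) ** 3) <= INT32_MAX:
        box += 1
    return box


for box in (128, 360, 512):
    length = lbfgsb_workspace_len(box ** 3)
    status = "exceeds" if length > INT32_MAX else "fits in"
    print(f"box {box}: workspace length {length:,} {status} int32")
print("largest box that fits:", largest_box_fitting_int32())  # 441
```

Under these assumptions the cutoff falls at a box of 441 pixels, between the 360-pixel boxes that worked and the 512-pixel boxes that failed; runs just below and just above that size would be a direct way to test the idea.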

That’s very helpful information. It makes sense that the issue might be related to box size, as box size is a powerful determinant of overall memory footprint. I will look into this further and reply with what I discover. In the meantime, any additional information is of course welcome.

@andrew-niaid or @hansenbry, if you could trigger this one more time and paste the dmesg output, including the line that looks like the following, that would be helpful:

[16290.813744] Code: 48 8b bc 24 c8 00 00 00 8b 07 66 44 0f 28 da 4c 8b 4c 24 08 4c 8b 2c 24 66 44 0f 28 d2 f2 44 0f 59 da 66 44 0f 57 d7 49 63 39 45 0f 11 54 cd f8 f2 41 0f 5c cb 85 ff 0f 8e 04 02 00 00 48 8b

I have started to experience a similar problem on one of our workstations, with the job terminating abnormally and nothing useful in the logs.

Please post:

- CryoSPARC version and patch info
- error information: any outputs that indicated that there is a problem, including lines above “Job process terminated abnormally.”
- job information:
  - job type
  - particle count
  - box size
- worker information: Before You Post: Troubleshooting Guidelines

This will be

CS v4.2.1+230427

[2023-06-21 10:36:08.11] [CPU: 6.25 GB Avail: 449.80 GB] ====== Load particle data =======

[2023-06-21 10:36:08.11] [CPU: 6.25 GB Avail: 449.80 GB] Reading in all particle data...

[2023-06-21 10:36:08.12] [CPU: 6.25 GB Avail: 449.78 GB] Reading file 3 of 3 (J221/J221_particles_fullres_batch_00002.mrc)

[2023-06-21 10:36:43.36] [CPU: 23.83 GB Avail: 427.95 GB] Reading in all particle CTF data...

[2023-06-21 10:36:43.36] [CPU: 23.83 GB Avail: 427.95 GB] Reading file 3 of 3 (J221/J221_particles_fullres_batch_00002_ctf.mrc)

[2023-06-21 10:36:59.68] [CPU: 28.26 GB Avail: 424.55 GB] Parameter "Force re-do GS split" was on. Splitting particles..

[2023-06-21 10:36:59.69] [CPU: 28.26 GB Avail: 424.55 GB] Split A contains 7500 particles

[2023-06-21 10:36:59.70] [CPU: 28.26 GB Avail: 424.52 GB] Split B contains 7500 particles

[2023-06-21 10:36:59.73] [CPU: 28.26 GB Avail: 424.48 GB] Setting up particle poses..

[2023-06-21 10:36:59.73] [CPU: 28.26 GB Avail: 424.46 GB] ====== High resolution flexible refinement =======

[2023-06-21 10:36:59.74] [CPU: 28.26 GB Avail: 424.46 GB] Max num L-BFGS iterations was set to 20

[2023-06-21 10:36:59.74] [CPU: 28.26 GB Avail: 424.45 GB] Starting L-BFGS.

[2023-06-21 10:36:59.74] [CPU: 28.26 GB Avail: 424.45 GB] Reconstructing half-map A

[2023-06-21 10:36:59.75] [CPU: 28.26 GB Avail: 424.44 GB] Iteration 0 : 7000 / 7500 particles

[2023-06-21 10:42:39.25] [CPU: 173.3 MB Avail: 448.21 GB] ====== Job process terminated abnormally.

- job type: Flex Reconstruction
- particle count: 15K
- box size: 128

Thanks @Bassem. Please can you

- confirm there are no errors or Tracebacks inside the job log (under Metadata|Log)
- post the output of

sudo dmesg | grep '] code:'
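The example kernel lines earlier in this thread print "Code:" with a capital C, and grep is case-sensitive by default, so grep '] code:' would not match them (grep -i would). A minimal Python filter that picks out both the segfault and Code lines regardless of case; crash_lines and read_dmesg are hypothetical helper names, and reading the kernel log usually requires root, as with sudo dmesg:

```python
import subprocess
from typing import List


def crash_lines(dmesg_text: str) -> List[str]:
    """Return kernel log lines that report a segfault or dump the faulting code."""
    hits = []
    for line in dmesg_text.splitlines():
        lowered = line.lower()  # match "Code:" and "code:" alike
        if "segfault at" in lowered or "] code:" in lowered:
            hits.append(line)
    return hits


def read_dmesg() -> str:
    # Usually needs root (compare "sudo dmesg" above).
    return subprocess.run(["dmesg"], capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    try:
        for line in crash_lines(read_dmesg()):
            print(line)
    except (OSError, subprocess.CalledProcessError) as exc:
        print(f"could not read the kernel log: {exc}")
```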

1- no errors

================ CRYOSPARCW ======= 2023-06-21 10:35:19.510704 =========

Project P10 Job J227

Master c114718 Port 39002

===========================================================================

========= monitor process now starting main process at 2023-06-21 10:35:19.510759

MAINPROCESS PID 38973

========= monitor process now waiting for main process

MAIN PID 38973

flex_refine.run_highres cryosparc_compute.jobs.jobregister

========= sending heartbeat at 2023-06-21 10:35:33.515079

========= sending heartbeat at 2023-06-21 10:35:43.558367

========= sending heartbeat at 2023-06-21 10:35:53.600417

========= sending heartbeat at 2023-06-21 10:36:03.641399

========= sending heartbeat at 2023-06-21 10:36:13.683001

========= sending heartbeat at 2023-06-21 10:36:24.482194

========= sending heartbeat at 2023-06-21 10:36:34.524314

========= sending heartbeat at 2023-06-21 10:36:44.566137

========= sending heartbeat at 2023-06-21 10:36:54.608952

========= sending heartbeat at 2023-06-21 10:37:04.644403

========= sending heartbeat at 2023-06-21 10:37:14.687673

========= sending heartbeat at 2023-06-21 10:37:24.728688

========= sending heartbeat at 2023-06-21 10:37:35.166084

========= sending heartbeat at 2023-06-21 10:37:45.208182

========= sending heartbeat at 2023-06-21 10:37:55.519640

========= sending heartbeat at 2023-06-21 10:38:05.583763

========= sending heartbeat at 2023-06-21 10:38:15.627336

========= sending heartbeat at 2023-06-21 10:38:25.671943

========= sending heartbeat at 2023-06-21 10:38:35.711318

========= sending heartbeat at 2023-06-21 10:38:45.752334

========= sending heartbeat at 2023-06-21 10:38:55.793178

========= sending heartbeat at 2023-06-21 10:39:05.835326

========= sending heartbeat at 2023-06-21 10:39:15.874933

========= sending heartbeat at 2023-06-21 10:39:25.909384

========= sending heartbeat at 2023-06-21 10:39:35.952221

========= sending heartbeat at 2023-06-21 10:39:45.994874

========= sending heartbeat at 2023-06-21 10:39:56.035497

***************************************************************

Running job J227 of type flex_highres

Running job on hostname %s c114718

Allocated Resources : {'fixed': {'SSD': False}, 'hostname': 'c114718', 'lane': 'default', 'lane_type': 'node', 'license': True, 'licenses_acquired': 1, 'slots': {'CPU': [8, 9, 10, 11], 'GPU': [2], 'RAM': [6, 7, 8, 9, 10, 11, 12, 13]}, 'target': {'cache_path': '/scr/cryosparc_cache', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 51050250240, 'name': 'NVIDIA RTX A6000'}, {'id': 1, 'mem': 51049857024, 'name': 'NVIDIA RTX A6000'}, {'id': 2, 'mem': 51050250240, 'name': 'NVIDIA RTX A6000'}, {'id': 3, 'mem': 51050250240, 'name': 'NVIDIA RTX A6000'}], 'hostname': 'c114718', 'lane': 'default', 'monitor_port': None, 'name': 'c114718', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63]}, 'ssh_str': 'cryosparc_user@c114718', 'title': 'Worker node c114718', 'type': 'node', 'worker_bin_path': '/home/cryosparc_user/software/cryosparc/cryosparc_worker/bin/cryosparcw'}}

========= sending heartbeat at 2023-06-21 10:40:06.069453

========= sending heartbeat at 2023-06-21 10:40:16.109892

========= sending heartbeat at 2023-06-21 10:40:26.149341

========= sending heartbeat at 2023-06-21 10:40:36.190342

========= sending heartbeat at 2023-06-21 10:40:46.235192

========= sending heartbeat at 2023-06-21 10:40:56.277434

========= sending heartbeat at 2023-06-21 10:41:06.319691

========= sending heartbeat at 2023-06-21 10:41:16.361603

========= sending heartbeat at 2023-06-21 10:41:26.401363

========= sending heartbeat at 2023-06-21 10:41:36.441073

========= sending heartbeat at 2023-06-21 10:41:46.475600

========= sending heartbeat at 2023-06-21 10:41:56.518215

========= sending heartbeat at 2023-06-21 10:42:06.558335

========= sending heartbeat at 2023-06-21 10:42:16.599405

========= sending heartbeat at 2023-06-21 10:42:26.642306

========= sending heartbeat at 2023-06-21 10:42:36.684330

========= main process now complete at 2023-06-21 10:42:39.120748.

========= monitor process now complete at 2023-06-21 10:42:39.261764.
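You can check which GPU the job was given against the `nvidia-smi` process list. The GPU slot is in the `Allocated Resources` line of job.log, and a short helper can pull it out. This is a minimal sketch that assumes the log line format shown above. `allocated_gpus` is a hypothetical helper name, not part of cryoSPARC:

```shell
# bash sketch: print the GPU slot(s) assigned to a cryoSPARC job, read from the
# "Allocated Resources" line of its job.log (assumes the format shown above).
allocated_gpus() {
  # Capture the list inside "'slots': {... 'GPU': [ ... ]". The leading quote in
  # "'slots'" keeps this from matching the worker's "'resource_slots'" entry.
  sed -n "s/^Allocated Resources.*'slots': {[^}]*'GPU': \[\([0-9, ]*\)].*/\1/p" "$1"
}
```

Usage: `allocated_gpus /path/to/project/J227/job.log` (placeholder path). For the log above this should print `2`, i.e. the job ran on GPU 2.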

2- The command did not return any output.
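For reference, the kind of kernel-log entry requested earlier in the thread is a `python[PID]: segfault at ... in <library>.so` line. A short helper can filter it out of `dmesg` output. This is only a sketch. `find_segfaults` is a hypothetical name, and reading the kernel ring buffer may require root on some systems:

```shell
# bash sketch: filter kernel log text (piped in, e.g. from dmesg) for user-space
# segfault entries such as
#   python[39968]: segfault at 854 ip ... error 4 in blobio_native.so[...]
find_segfaults() {
  # -E: extended regex; "\[" matches a literal bracket around the PID
  grep -E '[[:alnum:]_.-]+\[[0-9]+\]: segfault at ' || echo "no segfault entries found"
}
```

Usage: `sudo dmesg -T | find_segfaults` (`-T` prints human-readable timestamps). The kernel ring buffer has a fixed size, so run this soon after the job fails, before older entries are overwritten.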

Thanks. Could you please also post the output of these commands, run on the GPU node:

nvidia-smi
uname -a

Also, is there a risk of other GPU workloads “colliding” with the 3DFlex reconstruction job by running on the same GPU?
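To check for colliding workloads, you can list the compute processes on the GPU the job was assigned. Below is a rough sketch that parses the process table of plain `nvidia-smi` output, using the layout posted in this thread. `compute_procs_on_gpu` is a hypothetical helper. The human-readable layout is not a stable interface, so `nvidia-smi --query-compute-apps=... --format=csv` is the more robust option for scripting:

```shell
# bash sketch: list compute ("C" or "C+G") processes on one GPU by parsing the
# process table of plain `nvidia-smi` output. Assumes the columns shown in this
# thread: GPU, GI, CI, PID, Type, Process name, GPU Memory.
compute_procs_on_gpu() {
  local gpu="$1"
  awk -v gpu="$gpu" '
    # Process rows look like: |  2  N/A  N/A  38973  C  python  3073MiB |
    # GPU summary rows are skipped because their 5th field is not a PID.
    $1 == "|" && $2 ~ /^[0-9]+$/ && $5 ~ /^[0-9]+$/ && $6 ~ /^C(\+G)?$/ && $2 == gpu {
      print $5, $7, $(NF-1)   # PID, process name (first word only), memory usage
    }'
}
```

Usage: `nvidia-smi | compute_procs_on_gpu 2`. Process names that contain spaces are cut to their first word by this sketch.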

1- nvidia-smi

Wed Jun 21 10:36:42 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 510.60.02    Driver Version: 510.60.02    CUDA Version: 11.6     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA RTX A6000    Off  | 00000000:18:00.0 Off |                  Off |
| 38%   66C    P2    91W / 300W |   1008MiB / 49140MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA RTX A6000    Off  | 00000000:3B:00.0  On |                  Off |
| 53%   80C    P2   244W / 300W |   5890MiB / 49140MiB |     31%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  NVIDIA RTX A6000    Off  | 00000000:86:00.0 Off |                  Off |
| 30%   51C    P8    19W / 300W |   3076MiB / 49140MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  NVIDIA RTX A6000    Off  | 00000000:AF:00.0 Off |                  Off |
| 30%   40C    P8    21W / 300W |      3MiB / 49140MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A     36621      C   python                           1005MiB |
|    1   N/A  N/A      2523      G   /usr/bin/X                        229MiB |
|    1   N/A  N/A      4045      G   /usr/bin/gnome-shell               44MiB |
|    1   N/A  N/A      5039      G   /usr/lib64/firefox/firefox        222MiB |
|    1   N/A  N/A     37003      G   /usr/lib64/firefox/firefox         88MiB |
|    1   N/A  N/A     37638      C   python                           4871MiB |
|    1   N/A  N/A     82692      G   /usr/lib64/firefox/firefox          4MiB |
|    1   N/A  N/A    106666      G   /usr/lib64/firefox/firefox         88MiB |
|    1   N/A  N/A    117873      G   /usr/lib64/firefox/firefox          4MiB |
|    1   N/A  N/A    118039      G   /usr/lib64/firefox/firefox         90MiB |
|    1   N/A  N/A    119616      G   /usr/lib64/firefox/firefox         88MiB |
|    1   N/A  N/A    154365      G   /usr/lib64/firefox/firefox          4MiB |
|    1   N/A  N/A    159823      G   /usr/lib64/firefox/firefox         97MiB |
|    1   N/A  N/A    241697      G   ...mera64-1.16/bin/python2.7       44MiB |
|    2   N/A  N/A     38973      C   python                           3073MiB |
+-----------------------------------------------------------------------------+

2- uname -a

Linux c114718 3.10.0-1160.59.1.el7.x86_64 #1 SMP Wed Feb 23 16:47:03 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

I do not think we have been overloading the GPU, but to make sure, I will schedule the job during a quiet period and see whether it makes any difference.

Given the kernel version, you may also try defining

export CRYOSPARC_NO_PAGELOCK="true"

inside /path/to/cryosparc_worker/config.sh (details) and see whether this change affects the job’s behavior.
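If you prefer to script the change, here is a minimal sketch. It adds the line only when `CRYOSPARC_NO_PAGELOCK` is not already defined, and it saves a backup copy of config.sh first. `enable_no_pagelock` is a hypothetical helper name, and `/path/to/cryosparc_worker/config.sh` is a placeholder for your actual install path:

```shell
# bash sketch: append CRYOSPARC_NO_PAGELOCK="true" to a cryosparc_worker
# config.sh, but only if the variable is not already exported there.
enable_no_pagelock() {
  local config="$1"   # e.g. /path/to/cryosparc_worker/config.sh (placeholder)
  local line='export CRYOSPARC_NO_PAGELOCK="true"'
  if grep -Eq '^[[:space:]]*export[[:space:]]+CRYOSPARC_NO_PAGELOCK=' "$config"; then
    echo "CRYOSPARC_NO_PAGELOCK is already defined in $config; check its value"
  else
    cp "$config" "$config.bak"                          # backup before editing
    # If the file does not end in a newline, add one so the new line stays separate
    [ -n "$(tail -c 1 "$config")" ] && printf '\n' >> "$config"
    printf '%s\n' "$line" >> "$config"
    echo "added: $line"
  fi
}
```

Usage: `enable_no_pagelock /path/to/cryosparc_worker/config.sh`, then re-run the 3D Flex Reconstruction job. If the variable already exists with a different value, the sketch reports it instead of overwriting it.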