Hello @wtempel,

Thanks a lot for reaching out again; I appreciate it.

Please find below the output of the commands:

\[sdaou@quad3 cryoDB\]$ cd /cryoDB/cryosparc_master

\[sdaou@quad3 cryosparc_master\]$ csprojectid='P19'

csjobid='J220'

./bin/cryosparcm cli "get_job('$csprojectid', '$csjobid', 'job_type', 'version', 'instance_information', 'status', 'params_spec', 'errors_run', 'input_slot_groups', 'started_at')"

./bin/cryosparcm eventlog $csprojectid $csjobid | tail -n 40

./bin/cryosparcm joblog $csprojectid $csjobid | tail -n 20

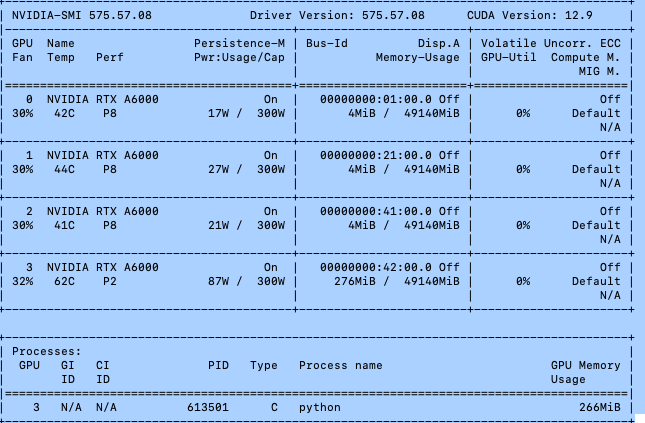

{'\_id': '690633ee1b25f930335eeec0', 'errors_run': \[{'message': 'Subprocess exited with status 1 (/programs/x86_64-linux/topaz/0.2.5-2/bin/topaz train --train-images /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/image_list_train.txt --train-targets /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/topaz_parti…)', 'warning': False}\], 'input_slot_groups': \[{'connections': \[{'group_name': 'exposures_accepted', 'job_uid': 'J219', 'slots': \[{'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'micrograph_blob', 'result_type': 'exposure.micrograph_blob', 'slot_name': 'micrograph_blob', 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'mscope_params', 'result_type': 'exposure.mscope_params', 'slot_name': 'mscope_params', 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'movie_blob', 'result_type': 'exposure.movie_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'background_blob', 'result_type': 'exposure.stat_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'micrograph_thumbnail_blob_1x', 'result_type': 'exposure.thumbnail_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'micrograph_thumbnail_blob_2x', 'result_type': 'exposure.thumbnail_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'ctf', 'result_type': 'exposure.ctf', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'ctf_stats', 'result_type': 'exposure.ctf_stats', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'rigid_motion', 'result_type': 'exposure.motion', 'slot_name': None, 'version': 'F'}, 
{'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'spline_motion', 'result_type': 'exposure.motion', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'micrograph_blob_non_dw', 'result_type': 'exposure.micrograph_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'micrograph_blob_non_dw_AB', 'result_type': 'exposure.micrograph_blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'exposures_accepted', 'job_uid': 'J219', 'result_name': 'gain_ref_blob', 'result_type': 'exposure.gain_ref_blob', 'slot_name': None, 'version': 'F'}\]}\], 'count_max': inf, 'count_min': 1, 'description': 'Micrographs for training Topaz', 'name': 'micrographs', 'repeat_allowed': False, 'slots': \[{'description': '', 'name': 'micrograph_blob', 'optional': False, 'title': 'Raw micrograph data', 'type': 'exposure.micrograph_blob'}, {'description': '', 'name': 'micrograph_blob_denoised', 'optional': True, 'title': 'Denoised micrograph data', 'type': 'exposure.micrograph_blob'}, {'description': '', 'name': 'mscope_params', 'optional': True, 'title': 'Microscope parameters for identifying negatively stained data', 'type': 'exposure.mscope_params'}\], 'title': 'Micrographs', 'type': 'exposure'}, {'connections': \[{'group_name': 'particles_accepted', 'job_uid': 'J219', 'slots': \[{'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'location', 'result_type': 'particle.location', 'slot_name': 'location', 'version': 'F'}, {'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'pick_stats', 'result_type': 'particle.pick_stats', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'alignments3D', 'result_type': 'particle.alignments3D', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'ctf', 'result_type': 
'particle.ctf', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'blob', 'result_type': 'particle.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles_accepted', 'job_uid': 'J219', 'result_name': 'alignments2D', 'result_type': 'particle.alignments2D', 'slot_name': None, 'version': 'F'}\]}\], 'count_max': inf, 'count_min': 1, 'description': 'Particle locations for training Topaz', 'name': 'particles', 'repeat_allowed': False, 'slots': \[{'description': '', 'name': 'location', 'optional': False, 'title': 'Particle locations', 'type': 'particle.location'}\], 'title': 'Particles', 'type': 'particle'}\], 'instance_information': {'CUDA_version': '11.8', 'available_memory': '117.76GB', 'cpu_model': 'AMD Ryzen Threadripper PRO 5965WX 24-Cores', 'driver_version': '12.9', 'gpu_info': \[{'id': 0, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:01:00'}, {'id': 1, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:21:00'}, {'id': 2, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:41:00'}, {'id': 3, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:42:00'}\], 'ofd_hard_limit': 524288, 'ofd_soft_limit': 1024, 'physical_cores': 24, 'platform_architecture': 'x86_64', 'platform_node': 'quad3.mshri.on.ca', 'platform_release': '5.14.0-570.28.1.el9_6.x86_64', 'platform_version': '#1 SMP PREEMPT_DYNAMIC Thu Jul 24 10:32:22 UTC 2025', 'total_memory': '125.13GB', 'used_memory': '6.17GB'}, 'job_type': 'topaz_train', 'params_spec': {'compute_num_workers': {'value': 1}, 'exec_path': {'value': '/programs/x86_64-linux/topaz/0.2.5-2/bin/topaz'}, 'num_distribute': {'value': 1}, 'num_particles': {'value': 2000}, 'par_diam': {'value': 130}, 'pretrained': {'value': True}}, 'project_uid': 'P19', 'started_at': 'Sun, 02 Nov 2025 21:14:30 GMT', 'status': 'failed', 'uid': 'J220', 'version': 'v4.5.3'}

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/main.py", line 148, in main

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] args.func(args)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/commands/train.py", line 695, in main

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] , save_prefix=save_prefix, use_cuda=use_cuda, output=output)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/commands/train.py", line 577, in fit_epochs

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] , use_cuda=use_cuda, output=output)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/commands/train.py", line 557, in fit_epoch

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] metrics = step_method.step(X, Y)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/methods.py", line 103, in step

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] score = self.model(X).view(-1)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in \__call_\_

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] result = self.forward(\*input, \*\*kwargs)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/model/classifier.py", line 28, in forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] z = self.features(x)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in \__call_\_

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] result = self.forward(\*input, \*\*kwargs)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/model/features/resnet.py", line 54, in forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] z = self.features(x)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in \__call_\_

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] result = self.forward(\*input, \*\*kwargs)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/container.py", line 100, in forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] input = module(input)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in \__call_\_

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] result = self.forward(\*input, \*\*kwargs)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/topaz/model/features/resnet.py", line 270, in forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] y = self.conv(x)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/module.py", line 532, in \__call_\_

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] result = self.forward(\*input, \*\*kwargs)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 345, in forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] return self.conv2d_forward(input, self.weight)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] File "/programs/x86_64-linux/topaz/0.2.5-2/topaz_extlib/miniconda3-4.8.2-b5qb/envs/topaz/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 342, in conv2d_forward

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] self.padding, self.dilation, self.groups)

\[Sun, 02 Nov 2025 21:58:26 GMT\] \[CPU RAM used: 326 MB\] RuntimeError: cuDNN error: CUDNN_STATUS_MAPPING_ERROR

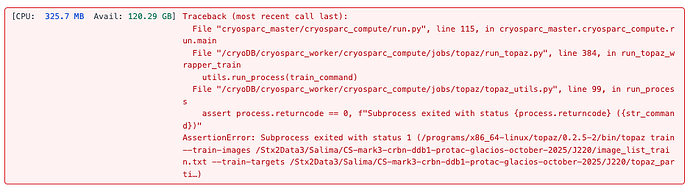

\[Sun, 02 Nov 2025 21:58:27 GMT\] \[CPU RAM used: 326 MB\] Traceback (most recent call last):

File "cryosparc_master/cryosparc_compute/run.py", line 115, in cryosparc_master.cryosparc_compute.run.main

File "/cryoDB/cryosparc_worker/cryosparc_compute/jobs/topaz/run_topaz.py", line 384, in run_topaz_wrapper_train

utils.run_process(train_command)

File "/cryoDB/cryosparc_worker/cryosparc_compute/jobs/topaz/topaz_utils.py", line 99, in run_process

assert process.returncode == 0, f"Subprocess exited with status {process.returncode} ({str_command})"

AssertionError: Subprocess exited with status 1 (/programs/x86_64-linux/topaz/0.2.5-2/bin/topaz train --train-images /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/image_list_train.txt --train-targets /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/topaz_parti…)

========= sending heartbeat at 2025-11-02 16:57:36.636152

========= sending heartbeat at 2025-11-02 16:57:46.650376

========= sending heartbeat at 2025-11-02 16:57:56.664358

========= sending heartbeat at 2025-11-02 16:58:06.678569

========= sending heartbeat at 2025-11-02 16:58:16.692996

========= sending heartbeat at 2025-11-02 16:58:26.709436

\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*\*

Running job on hostname %s quad3.mshri.on.ca

Allocated Resources : {'fixed': {'SSD': False}, 'hostname': 'quad3.mshri.on.ca', 'lane': 'default', 'lane_type': 'node', 'license': False, 'licenses_acquired': 0, 'slots': {'CPU': \[0\], 'GPU': \[0\], 'RAM': \[0\]}, 'target': {'cache_path': '/ssd_cache', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': \[{'id': 0, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000'}, {'id': 1, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000'}, {'id': 2, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000'}, {'id': 3, 'mem': 50897289216, 'name': 'NVIDIA RTX A6000'}\], 'hostname': 'quad3.mshri.on.ca', 'lane': 'default', 'monitor_port': None, 'name': 'quad3.mshri.on.ca', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': \[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47\], 'GPU': \[0, 1, 2, 3\], 'RAM': \[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15\]}, 'ssh_str': 'cryspc@quad3.mshri.on.ca', 'title': 'Worker node quad3.mshri.on.ca', 'type': 'node', 'worker_bin_path': '/cryoDB/cryosparc_worker/bin/cryosparcw'}}

\*\*\*\* handle exception rc

Traceback (most recent call last):

File "cryosparc_master/cryosparc_compute/run.py", line 115, in cryosparc_master.cryosparc_compute.run.main

File "/cryoDB/cryosparc_worker/cryosparc_compute/jobs/topaz/run_topaz.py", line 384, in run_topaz_wrapper_train

utils.run_process(train_command)

File "/cryoDB/cryosparc_worker/cryosparc_compute/jobs/topaz/topaz_utils.py", line 99, in run_process

assert process.returncode == 0, f"Subprocess exited with status {process.returncode} ({str_command})"

AssertionError: Subprocess exited with status 1 (/programs/x86_64-linux/topaz/0.2.5-2/bin/topaz train --train-images /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/image_list_train.txt --train-targets /Stx2Data3/Salima/CS-mark3-crbn-ddb1-protac-glacios-october-2025/J220/topaz_parti…)

set status to failed

========= main process now complete at 2025-11-02 16:58:36.724043.

========= monitor process now complete at 2025-11-02 16:58:36.728125.

Thanks a lot,

Salima