I have trained a Topaz model off 50 micrographs but when I go to use this model on the entire dataset (3600 micrographs) it only extracts the same particles from those original 50 micrographs.

This shouldn’t happen. It might help to post part of the log to figure out what is going wrong. If you look in the log for Topaz extract, does it say it is processing the correct (3600) number of micrographs, or only 50?

It was 3477 micrographs to be exact, and it does mention that it is processing the full set. (I took some of the lines out that are identity sensitive in case you notice some output locations missing)

[CPU: 199.4 MB] Starting Topaz process using version 0.2.5a…

[CPU: 200.5 MB] --------------------------------------------------------------

[CPU: 200.5 MB] Starting preprocessing…

[CPU: 200.5 MB] Starting micrograph preprocessing by running command ]

[CPU: 200.5 MB] Preprocessing over 8 processes…

[CPU: 201.1 MB] Preprocessing command complete.

[CPU: 201.1 MB] Preprocessing done in 2681.313s.

[CPU: 201.1 MB] Inverting negative staining…

[CPU: 202.0 MB] Inverting negative staining complete.

[CPU: 202.0 MB] --------------------------------------------------------------

[CPU: 202.1 MB] Starting extraction…

[CPU: 202.1 MB] Starting extraction by running command /hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/bin/topaz extract --radius 7 --threshold -6 --up-scale 16 --assignment-radius -1 --min-radius 5 --max-radius 100 --step-radius 5 --num-workers 8 --device 0 --model

[MICROGRAPH PATHS EXCLUDED FOR LEGIBILITY]

[CPU: 202.2 MB] UserWarning: The given NumPy array is not writeable, and PyTorch does not support non-writeable tensors. This means you can write to the underlying (supposedly non-writeable) NumPy array using the tensor. You may want to copy the array to protect its data or make it writeable before converting it to a tensor. This type of warning will be suppressed for the rest of this program. (Triggered internally at /opt/conda/conda-bld/pytorch_1607370120218/work/torch/csrc/utils/tensor_numpy.cpp:141.)

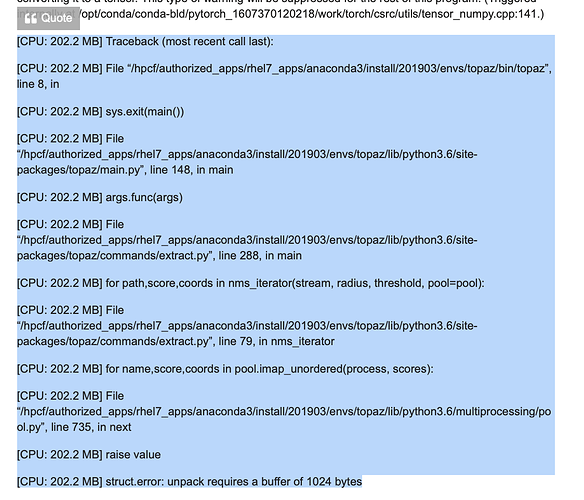

[CPU: 202.2 MB] Traceback (most recent call last):

[CPU: 202.2 MB] File “/hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/bin/topaz”, line 8, in

[CPU: 202.2 MB] sys.exit(main())

[CPU: 202.2 MB] File “/hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/lib/python3.6/site-packages/topaz/main.py”, line 148, in main

[CPU: 202.2 MB] args.func(args)

[CPU: 202.2 MB] File “/hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/lib/python3.6/site-packages/topaz/commands/extract.py”, line 288, in main

[CPU: 202.2 MB] for path,score,coords in nms_iterator(stream, radius, threshold, pool=pool):

[CPU: 202.2 MB] File “/hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/lib/python3.6/site-packages/topaz/commands/extract.py”, line 79, in nms_iterator

[CPU: 202.2 MB] for name,score,coords in pool.imap_unordered(process, scores):

[CPU: 202.2 MB] File “/hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/lib/python3.6/multiprocessing/pool.py”, line 735, in next

[CPU: 202.2 MB] raise value

[CPU: 202.2 MB] struct.error: unpack requires a buffer of 1024 bytes

[CPU: 202.2 MB] Extraction command complete.

[CPU: 202.2 MB] Starting particle pick thresholding by running command /hpcf/authorized_apps/rhel7_apps/anaconda3/install/201903/envs/topaz/bin/topaz convert -t 0 -o

[CPU: 202.2 MB] Particle pick thresholding command complete.

[CPU: 477.2 MB] Extraction done in 26.010s.

[CPU: 477.2 MB] --------------------------------------------------------------

[CPU: 477.2 MB] Finished Topaz process in 2707.42s

[CPU: 206.5 MB] --------------------------------------------------------------

[CPU: 206.5 MB] Compiling job outputs…

[CPU: 206.5 MB] Passing through outputs for output group micrographs from input group micrographs

[CPU: 206.6 MB] This job outputted results [‘micrograph_blob’]

[CPU: 206.6 MB] Loaded output dset with 3477 items

[CPU: 206.6 MB] Passthrough results [‘ctf’, ‘mscope_params’, ‘ctf_stats’, ‘micrograph_blob_non_dw’]

[CPU: 209.5 MB] Loaded passthrough dset with 3477 items

[CPU: 209.7 MB] Intersection of output and passthrough has 3477 items

[CPU: 207.1 MB] Checking outputs for output group micrographs

[CPU: 207.6 MB] Updating job size…

[CPU: 207.6 MB] Exporting job and creating csg files…

[CPU: 207.6 MB] ***************************************************************

[CPU: 207.6 MB] Job complete. Total time 2736.95s

The reason I suspect it is processing the same 50 micrographs as the training set is that it produces the exact same number of particles, and leads to the exact same 2D classes.

This bit looks a bit odd - don’t normally see this. Not sure what it means though. @alexjamesnoble might know?