- Here are the outputs.

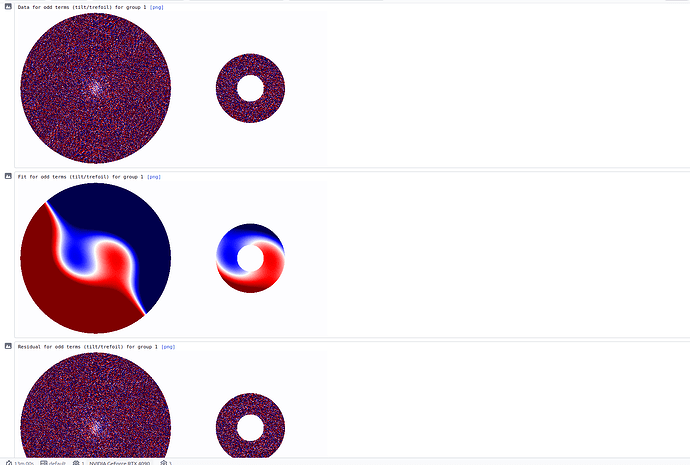

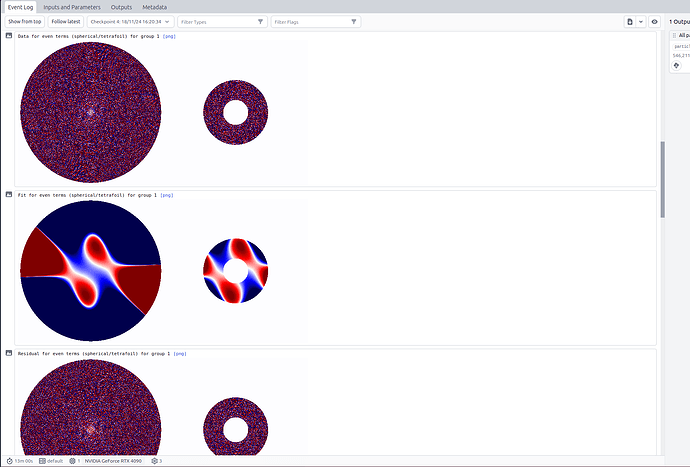

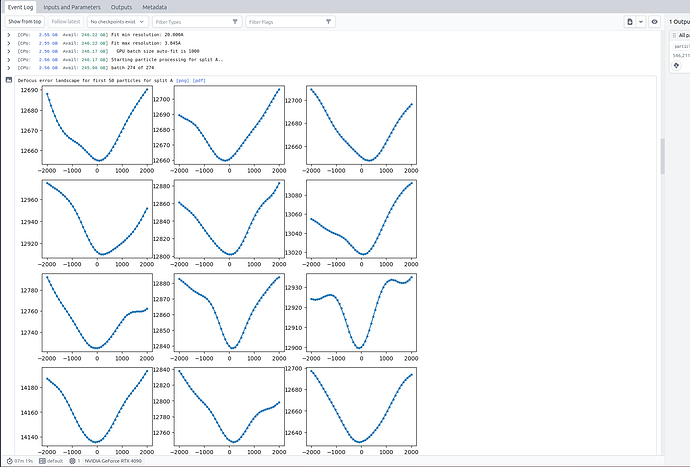

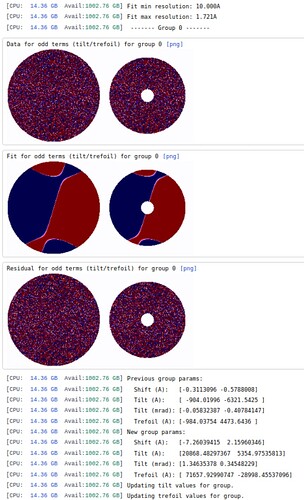

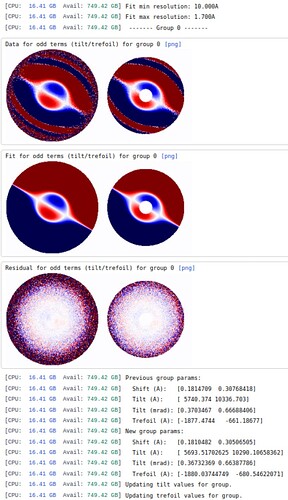

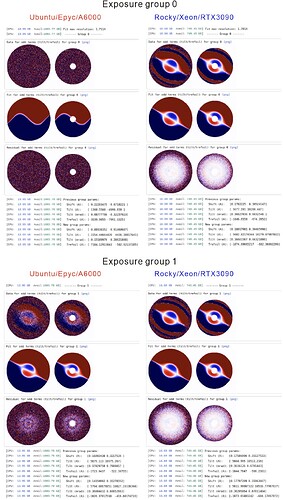

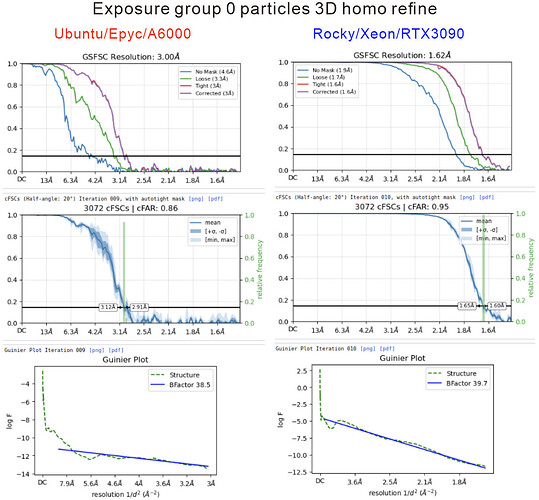

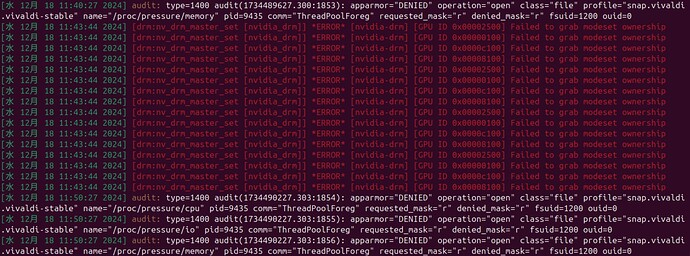

Aberrant job run on the Ubuntu node:

{'_id': '675f71eab6d3b9a9b3c174fe', 'input_slot_groups': [{'connections': [{'group_name': 'particles', 'job_uid': 'J25', 'slots': [{'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'blob', 'result_type': 'particle.blob', 'slot_name': 'blob', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'ctf', 'result_type': 'particle.ctf', 'slot_name': 'ctf', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'alignments3D', 'result_type': 'particle.alignments3D', 'slot_name': 'alignments3D', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'location', 'result_type': 'particle.location', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'alignments2D', 'result_type': 'particle.alignments2D', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'pick_stats', 'result_type': 'particle.pick_stats', 'slot_name': None, 'version': 'F'}]}], 'count_max': inf, 'count_min': 1, 'description': 'Particle stacks to use. 
Multiple stacks will be concatenated.', 'name': 'particles', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'blob', 'optional': False, 'title': 'Particle data blobs', 'type': 'particle.blob'}, {'description': '', 'name': 'ctf', 'optional': False, 'title': 'Particle ctf parameters', 'type': 'particle.ctf'}, {'description': '', 'name': 'alignments3D', 'optional': True, 'title': 'Particle 3D alignments (optional)', 'type': 'particle.alignments3D'}], 'title': 'Particle stacks', 'type': 'particle'}, {'connections': [{'group_name': 'volume', 'job_uid': 'J22', 'slots': [{'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map', 'result_type': 'volume.blob', 'slot_name': 'map', 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_sharp', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_half_A', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_half_B', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_refine', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_fsc', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_fsc_auto', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'precision', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}]}], 'count_max': 1, 'count_min': 1, 'description': '', 'name': 'volume', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'map', 'optional': False, 'title': 'Initial volume raw data', 'type': 'volume.blob'}], 'title': 'Initial volume', 'type': 'volume'}, {'connections': [], 'count_max': 1, 
'count_min': 0, 'description': '', 'name': 'mask', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'mask', 'optional': False, 'title': 'Static mask', 'type': 'volume.blob'}], 'title': 'Static mask', 'type': 'mask'}], 'instance_information': {'CUDA_version': '11.8', 'available_memory': '995.96GB', 'cpu_model': 'AMD EPYC 7713 64-Core Processor', 'driver_version': '12.7', 'gpu_info': [{'id': 0, 'mem': 50925535232, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:01:00'}, {'id': 1, 'mem': 50925535232, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:25:00'}, {'id': 2, 'mem': 50925535232, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:81:00'}, {'id': 3, 'mem': 50925535232, 'name': 'NVIDIA RTX A6000', 'pcie': '0000:c1:00'}], 'ofd_hard_limit': 1048576, 'ofd_soft_limit': 1024, 'physical_cores': 128, 'platform_architecture': 'x86_64', 'platform_node': 'rado-tyan', 'platform_release': '6.8.0-49-generic', 'platform_version': '#49~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Wed Nov 6 17:42:15 UTC 2', 'total_memory': '1007.64GB', 'used_memory': '6.73GB'}, 'job_type': 'homo_refine_new', 'params_spec': {'crg_num_plots': {'value': 10}, 'crg_plot_binfactor': {'value': 2}, 'crl_df_range': {'value': 300}, 'prepare_window_dataset': {'value': False}, 'refine_ctf_global_refine': {'value': True}, 'refine_defocus_refine': {'value': True}, 'refine_do_ews_correct': {'value': True}, 'refine_ews_zsign': {'value': 'positive'}, 'refine_num_final_iterations': {'value': 1}, 'refine_scale_min': {'value': True}, 'refine_symmetry': {'value': 'O'}}, 'project_uid': 'P44', 'status': 'completed', 'uid': 'J36', 'version': 'v4.6.2'}

Good job on the Rocky Linux node:

{'_id': '675f71e8b6d3b9a9b3c16d88', 'input_slot_groups': [{'connections': [{'group_name': 'particles', 'job_uid': 'J25', 'slots': [{'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'blob', 'result_type': 'particle.blob', 'slot_name': 'blob', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'ctf', 'result_type': 'particle.ctf', 'slot_name': 'ctf', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'alignments3D', 'result_type': 'particle.alignments3D', 'slot_name': 'alignments3D', 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'location', 'result_type': 'particle.location', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'alignments2D', 'result_type': 'particle.alignments2D', 'slot_name': None, 'version': 'F'}, {'group_name': 'particles', 'job_uid': 'J25', 'result_name': 'pick_stats', 'result_type': 'particle.pick_stats', 'slot_name': None, 'version': 'F'}]}], 'count_max': inf, 'count_min': 1, 'description': 'Particle stacks to use. 
Multiple stacks will be concatenated.', 'name': 'particles', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'blob', 'optional': False, 'title': 'Particle data blobs', 'type': 'particle.blob'}, {'description': '', 'name': 'ctf', 'optional': False, 'title': 'Particle ctf parameters', 'type': 'particle.ctf'}, {'description': '', 'name': 'alignments3D', 'optional': True, 'title': 'Particle 3D alignments (optional)', 'type': 'particle.alignments3D'}], 'title': 'Particle stacks', 'type': 'particle'}, {'connections': [{'group_name': 'volume', 'job_uid': 'J22', 'slots': [{'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map', 'result_type': 'volume.blob', 'slot_name': 'map', 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_sharp', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_half_A', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'map_half_B', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_refine', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_fsc', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'mask_fsc_auto', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}, {'group_name': 'volume', 'job_uid': 'J22', 'result_name': 'precision', 'result_type': 'volume.blob', 'slot_name': None, 'version': 'F'}]}], 'count_max': 1, 'count_min': 1, 'description': '', 'name': 'volume', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'map', 'optional': False, 'title': 'Initial volume raw data', 'type': 'volume.blob'}], 'title': 'Initial volume', 'type': 'volume'}, {'connections': [], 'count_max': 1, 
'count_min': 0, 'description': '', 'name': 'mask', 'repeat_allowed': False, 'slots': [{'description': '', 'name': 'mask', 'optional': False, 'title': 'Static mask', 'type': 'volume.blob'}], 'title': 'Static mask', 'type': 'mask'}], 'instance_information': {'CUDA_version': '11.8', 'available_memory': '747.68GB', 'cpu_model': 'Intel(R) Xeon(R) Gold 6226R CPU @ 2.90GHz', 'driver_version': '12.3', 'gpu_info': [{'id': 0, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090', 'pcie': '0000:3b:00'}, {'id': 1, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090', 'pcie': '0000:5e:00'}, {'id': 2, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090', 'pcie': '0000:86:00'}, {'id': 3, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090', 'pcie': '0000:af:00'}], 'ofd_hard_limit': 262144, 'ofd_soft_limit': 1024, 'physical_cores': 32, 'platform_architecture': 'x86_64', 'platform_node': 'sv21', 'platform_release': '4.18.0-348.2.1.el8_5.x86_64', 'platform_version': '#1 SMP Mon Nov 15 20:49:28 UTC 2021', 'total_memory': '754.58GB', 'used_memory': '2.05GB'}, 'job_type': 'homo_refine_new', 'params_spec': {'crg_num_plots': {'value': 10}, 'crg_plot_binfactor': {'value': 2}, 'crl_df_range': {'value': 300}, 'prepare_window_dataset': {'value': False}, 'refine_ctf_global_refine': {'value': True}, 'refine_defocus_refine': {'value': True}, 'refine_do_ews_correct': {'value': True}, 'refine_ews_zsign': {'value': 'positive'}, 'refine_num_final_iterations': {'value': 1}, 'refine_scale_min': {'value': True}, 'refine_symmetry': {'value': 'O'}}, 'project_uid': 'P44', 'status': 'completed', 'uid': 'J32', 'version': 'v4.6.2'}
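To make the comparison between the two job documents easier, here is a minimal sketch of a recursive dictionary diff. The helper name `diff_dicts` is hypothetical, and the two dictionaries are only small excerpts copied from the dumps above, not the full documents.

```python
_MISSING = object()  # sentinel for keys present on only one side


def diff_dicts(a, b, prefix=""):
    """Yield (path, value_a, value_b) for every leaf value that differs."""
    for key in sorted(set(a) | set(b)):
        path = f"{prefix}.{key}" if prefix else str(key)
        va, vb = a.get(key, _MISSING), b.get(key, _MISSING)
        if isinstance(va, dict) and isinstance(vb, dict):
            # Descend into nested dictionaries
            yield from diff_dicts(va, vb, path)
        elif va != vb:
            yield path, va, vb


# Excerpts from the two job documents above (J36 on Ubuntu, J32 on Rocky Linux)
ubuntu_job = {
    "instance_information": {
        "CUDA_version": "11.8",
        "driver_version": "12.7",
        "platform_release": "6.8.0-49-generic",
    },
    "job_type": "homo_refine_new",
    "version": "v4.6.2",
}
rocky_job = {
    "instance_information": {
        "CUDA_version": "11.8",
        "driver_version": "12.3",
        "platform_release": "4.18.0-348.2.1.el8_5.x86_64",
    },
    "job_type": "homo_refine_new",
    "version": "v4.6.2",
}

differences = list(diff_dicts(ubuntu_job, rocky_job))
for path, va, vb in differences:
    print(f"{path}: {va!r} != {vb!r}")
```

On these excerpts only the driver version and kernel release differ; the job type, CryoSPARC version, and CUDA version are identical.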

Scheduler targets:

[{'cache_path': '/scr/cs', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090'}, {'id': 1, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090'}, {'id': 2, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090'}, {'id': 3, 'mem': 25438126080, 'name': 'NVIDIA GeForce RTX 3090'}], 'hostname': 'sv21', 'lane': 'default', 'monitor_port': None, 'name': 'sv21', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96]}, 'ssh_str': 'cryosparc@sv21', 'title': 'Worker node sv21', 'type': 'node', 'worker_bin_path': '/home/cryosparc/cryosparc_worker/bin/cryosparcw'}, {'cache_path': '/scratch', 'cache_quota_mb': None, 'cache_reserve_mb': 10000, 'desc': None, 'gpus': [{'id': 0, 'mem': 50928943104, 'name': 'NVIDIA RTX A6000'}, {'id': 1, 'mem': 50928943104, 'name': 'NVIDIA RTX A6000'}, {'id': 2, 'mem': 50928943104, 'name': 'NVIDIA RTX A6000'}, {'id': 3, 'mem': 50928943104, 'name': 'NVIDIA RTX A6000'}], 'hostname': '192.168.51.61', 'lane': 'rado', 'monitor_port': None, 'name': '192.168.51.61', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 
86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184, 185, 186, 187, 188, 189, 190, 191, 192, 193, 194, 195, 196, 197, 198, 199, 200, 201, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219, 220, 221, 222, 223, 224, 225, 226, 227, 228, 229, 230, 231, 232, 233, 234, 235, 236, 237, 238, 239, 240, 241, 242, 243, 244, 245, 246, 247, 248, 249, 250, 251, 252, 253, 254, 255], 'GPU': [0, 1, 2, 3], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128]}, 'ssh_str': 'cryosparc@192.168.51.61', 'title': 'Worker node 192.168.51.61', 'type': 'node', 'worker_bin_path': '/home/cryosparc/cryosparc_worker/bin/cryosparcw'}]

-

Both jobs were under the same project and used the same project storage.

-

Each workstation has its own cache. I did consider the possibility of a corrupt cache, so I cleaned up the cache on the Ubuntu node to force the particles to be copied again. This did not change the outcome. I also checked the SMART values of the cache drive and found no abnormalities.
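A more direct way to rule out a bad cache copy would be to compare checksums of a particle file in project storage against its cached copy. The following is only a sketch: `sha256_of` and `files_match` are hypothetical helpers, and the temporary files stand in for real particle stacks, since I am not assuming anything about how the cache directory is laid out.

```python
import hashlib
import os
import tempfile


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def files_match(source_path, cached_path):
    """True if both files have identical contents (by SHA-256)."""
    return sha256_of(source_path) == sha256_of(cached_path)


# Demo with stand-in files; replace with a real source/cache pair to use it.
with tempfile.TemporaryDirectory() as tmp:
    source = os.path.join(tmp, "source.mrc")
    good_copy = os.path.join(tmp, "good_copy.mrc")
    bad_copy = os.path.join(tmp, "bad_copy.mrc")
    payload = bytes(range(256)) * 1024
    with open(source, "wb") as fh:
        fh.write(payload)
    with open(good_copy, "wb") as fh:
        fh.write(payload)
    with open(bad_copy, "wb") as fh:
        fh.write(payload[:-1] + b"\x00")  # last byte altered on purpose
    good_result = files_match(source, good_copy)
    bad_result = files_match(source, bad_copy)

print(good_result, bad_result)
```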

-

I have not tried running the job with the cache disabled. Unfortunately, I have already cleaned up the project, so I cannot try that right away. I plan to upgrade the Ubuntu distribution and reinstall the NVIDIA drivers on the workstation. Afterwards, I will re-run the jobs and see whether disabling the cache makes any difference.