Hi,

I encountered a very strange error that never happened before the update to 4.5.1. Extraction finishes successfully, but when I then run 2D classification, it fails after a varying number of iterations with:

[CPU: 4.16 GB Avail: 244.36 GB]

Traceback (most recent call last):
  File "/home/cryosparc_user/cryosparc_gpu1/cryosparc_worker/cryosparc_compute/jobs/runcommon.py", line 2294, in run_with_except_hook
    run_old(*args, **kw)
  File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 134, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run
  File "cryosparc_master/cryosparc_compute/gpu/gpucore.py", line 135, in cryosparc_master.cryosparc_compute.gpu.gpucore.GPUThread.run
  File "cryosparc_master/cryosparc_compute/jobs/class2D/newrun.py", line 619, in cryosparc_master.cryosparc_compute.jobs.class2D.newrun.class2D_engine_run.work
  File "cryosparc_master/cryosparc_compute/engine/newengine.py", line 550, in cryosparc_master.cryosparc_compute.engine.newengine.EngineThread.read_image_data
  File "/home/cryosparc_user/cryosparc_gpu1/cryosparc_worker/cryosparc_compute/particles.py", line 34, in get_original_real_data
    data = self.blob.view()
  File "/home/cryosparc_user/cryosparc_gpu1/cryosparc_worker/cryosparc_compute/blobio/mrc.py", line 145, in view
    return self.get()
  File "/home/cryosparc_user/cryosparc_gpu1/cryosparc_worker/cryosparc_compute/blobio/mrc.py", line 140, in get
    _, data, total_time = prefetch.synchronous_native_read(self.fname, idx_start = self.page, idx_limit = self.page+1)
  File "cryosparc_master/cryosparc_compute/blobio/prefetch.py", line 82, in cryosparc_master.cryosparc_compute.blobio.prefetch.synchronous_native_read
OSError:

IO request details:
Error ocurred (Invalid argument) at line 680 in mrc_readmic (1)
The requested frame/particle cannot be accessed. The file may be corrupt, or there may be a mismatch between the file and its associated metadata (i.e. cryosparc .cs file).
filename: /mnt/scratch/cryosparc/instance_10.0.90.83:39001/links/P4-J1327-1715432654/f18c26f6c0c700292a4ee93b729ae367572998e0.mrc
filetype: 0
header_only: 0
idx_start: 83
idx_limit: 84
eer_upsampfactor: 2
eer_numfractions: 40
num_threads: 6
buffer: (nil)
buffer_sz: 0
nx, ny, nz: 0 0 0
dtype: 0
total_time: -1.000000
io_time: 0.000000

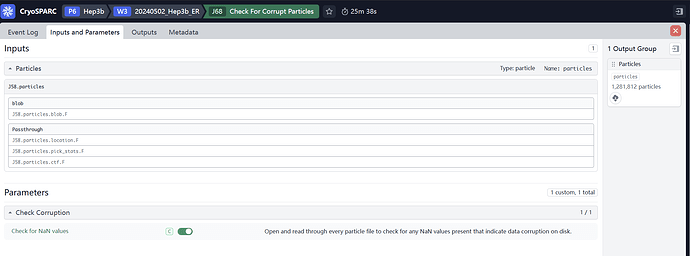

I also ran the Check for Corrupt Particles job, but it did not report any errors. I have used this dataset before, and everything worked. A quick investigation suggests that the problem lies with extraction rather than with the SSD cache copy, since the run without SSD caching also fails. I would appreciate any ideas on how to fix it.
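Since the failing request asks for particle index 83 (`idx_start: 83`, `idx_limit: 84`), one independent check is to compare every particle index referenced in the .cs file against the number of frames recorded in the header of the corresponding MRC stack. Below is a minimal sketch, assuming a little-endian MRC2014 header (NX, NY, NZ as the first three 32-bit integers) and that the (path, index) pairs come from the `blob/path` and `blob/idx` fields of the particle .cs file. Both the field names and the helper functions are assumptions for illustration, not part of CryoSPARC.

```python
import struct
from collections import defaultdict

MRC_HEADER_BYTES = 1024

def mrc_frame_count(path):
    """Return NZ (the number of frames/particles) from an MRC header.

    Assumes a little-endian MRC2014 header whose first three 32-bit
    integers are NX, NY and NZ.
    """
    with open(path, "rb") as f:
        header = f.read(MRC_HEADER_BYTES)
    if len(header) < MRC_HEADER_BYTES:
        raise ValueError(f"{path}: shorter than an MRC header")
    _nx, _ny, nz = struct.unpack("<3i", header[:12])
    return nz

def find_out_of_range(refs):
    """Check (path, idx) pairs against each stack's frame count.

    Returns {path: (frame_count, sorted_bad_indices)} for every stack
    that is referenced with an index outside 0..frame_count-1.
    """
    by_path = defaultdict(list)
    for path, idx in refs:
        if isinstance(path, bytes):  # .cs paths may be stored as bytes
            path = path.decode()
        by_path[path].append(int(idx))
    bad = {}
    for path, indices in by_path.items():
        nz = mrc_frame_count(path)
        out = sorted(i for i in indices if i < 0 or i >= nz)
        if out:
            bad[path] = (nz, out)
    return bad
```

If the .cs file loads as a NumPy structured array, the pairs could be built with something like `zip(cs["blob/path"], cs["blob/idx"])`, after joining each stored path with the project directory (again an assumption about how paths are recorded).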

PS: The file itself is not empty:

-rw-rw-r-- 1 cryosparc_user domain_users 2.6M May 11 00:41 /mnt/scratch/cryosparc/instance_10.0.90.83:39001//store-v2/f1/f18c26f6c0c700292a4ee93b729ae367572998e0

and seems to have correct MRC data in it:

>>> mrcfile.open('/mnt/scratch/cryosparc/instance_10.0.90.83:39001//store-v2/f1/f18c26f6c0c700292a4ee93b729ae367572998e0').data
array([[[-0.545 , 0.6787 , -0.906 , ..., 0.8677 , 0.2632 , -0.171 ],
        [ 0.00933 , -0.1229 , -0.429 , ..., -0.6714 , -0.2866 , -0.03214 ],
        [ 0.373 , 0.2352 , 0.4573 , ..., 0.9834 , -0.656 , 0.2996 ],
        ...,
        [-0.1368 , -0.7676 , 0.0905 , ..., 0.2947 , 0.1769 , 0.2515 ],
        [-1.277 , 0.01749 , -0.1985 , ..., -0.1833 , 0.02 , -0.1722 ],
        [-0.568 , -0.7456 , -0.0936 , ..., 0.1059 , 0.4585 , 0.3828 ]],

       [[-0.1036 , -0.00622 , 1.105 , ..., 1.425 , 0.4885 , -0.437 ],
        [ 0.2231 , 0.5845 , -0.8555 , ..., -0.5776 , -0.6157 , 0.06976 ],
        [-0.5815 , 0.1168 , -0.2161 , ..., -0.02008 , 0.3801 , -0.1897 ],
        ...,
        [-0.569 , -0.08795 , -0.4204 , ..., -0.4531 , -0.03506 , -0.01866 ],
        [ 0.784 , -0.682 , -0.0538 , ..., -0.433 , 0.1461 , -0.2615 ],
        [-0.7603 , 0.11676 , -0.10974 , ..., 0.3801 , -0.1593 , 0.4434 ]],

       [[ 0.5557 , -1.033 , 0.5127 , ..., 0.3188 , 0.115 , -0.2216 ],
        [-0.5664 , -0.001858, 0.2634 , ..., 0.1373 , -0.1454 , 0.7183 ],
        [ 0.0503 , 0.2732 , -1.192 , ..., -0.3132 , -0.1333 , 0.5625 ],
        ...,
        [ 1.128 , 0.0885 , -0.1423 , ..., 0.3215 , -0.5073 , 0.539 ],
        [-0.264 , -0.653 , 0.3455 , ..., 0.3254 , 0.869 , 0.2727 ],
        [-0.412 , 0.3765 , -0.10205 , ..., 0.6997 , 0.0564 , 0.77 ]],

       ...,

       [[ 0.0524 , 0.01288 , 0.714 , ..., -0.1938 , -0.9307 , 0.633 ],
        [ 0.3123 , 0.2301 , -0.2915 , ..., 0.405 , -0.2133 , 0.4438 ],
        [ 0.3967 , 0.5156 , -0.3208 , ..., 0.1609 , 0.516 , -0.0394 ],
        ...,
        [ 0.03418 , 0.245 , -0.2365 , ..., 0.4922 , -0.343 , 0.014336],
        [-0.9146 , -0.02989 , -0.01746 , ..., -0.4229 , 0.1776 , 0.1112 ],
        [ 0.361 , 0.7344 , -0.4753 , ..., 0.462 , -0.3662 , 0.5327 ]],

       [[ 1.13 , -0.02214 , 1.055 , ..., -0.5215 , 0.442 , 0.3564 ],
        [-0.527 , 0.587 , -0.3025 , ..., -0.5864 , -0.1426 , -0.2998 ],
        [ 0.08997 , 0.3374 , -0.3252 , ..., 0.407 , 0.2761 , -0.003357],
        ...,
        [-0.007282, -0.3916 , 0.2644 , ..., -0.12396 , 0.2969 , 0.3967 ],
        [-0.05515 , 0.4001 , 0.1183 , ..., 0.1626 , 0.321 , 0.8013 ],
        [-0.292 , 0.6875 , -0.00401 , ..., 0.2888 , 0.16 , 0.02405 ]],

       [[ 0.318 , -0.6143 , -0.4092 , ..., -0.307 , 1.173 , 0.1466 ],
        [ 0.01765 , 0.381 , 1.221 , ..., -0.1182 , -0.03516 , 0.4124 ],
        [-0.0908 , -0.1321 , -0.2008 , ..., 0.3044 , 0.651 , 0.293 ],
        ...,
        [ 0.4333 , 0.2445 , -0.3245 , ..., -0.4429 , -0.2135 , 0.1926 ],
        [-0.4136 , 0.2413 , 0.5254 , ..., 0.2388 , 0.8677 , 0.00493 ],
        [-0.4097 , 0.563 , -0.2517 , ..., 0.345 , -0.5933 , 0.05396 ]]], dtype=float16)
>>> mrcfile.open('/mnt/scratch/cryosparc/instance_10.0.90.83:39001//store-v2/f1/f18c26f6c0c700292a4ee93b729ae367572998e0').data.shape
(83, 128, 128)
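The shape is consistent with the file size. Assume a 1024-byte header with no extended header and MRC mode 12 (float16, 2 bytes per pixel). Then 83 particles of 128 × 128 pixels take 1024 + 83 × 32768 = 2,720,768 bytes, about 2.6 MiB, which matches the `ls` output. Valid indices are 0 to 82, so the requested index 83 (the 84th particle) would begin exactly at the end of the file. The sketch below reproduces that arithmetic. The mode table covers only a few common MRC modes, and the helper names are hypothetical.

```python
# Bytes per voxel for common MRC2014 modes (0: int8, 1: int16, 2: float32,
# 6: uint16, 12: float16). Other modes are omitted in this sketch.
BYTES_PER_VOXEL = {0: 1, 1: 2, 2: 4, 6: 2, 12: 2}
MRC_HEADER_BYTES = 1024

def frame_byte_range(idx, nx, ny, mode, ext_header_bytes=0):
    """Return the (start, end) byte offsets of frame `idx` in an MRC stack."""
    frame_bytes = nx * ny * BYTES_PER_VOXEL[mode]
    start = MRC_HEADER_BYTES + ext_header_bytes + idx * frame_bytes
    return start, start + frame_bytes

def expected_file_size(nx, ny, nz, mode, ext_header_bytes=0):
    """Size of a stack with nz frames: the offset where frame nz would begin."""
    return frame_byte_range(nz, nx, ny, mode, ext_header_bytes)[0]

size = expected_file_size(128, 128, 83, mode=12)
start, end = frame_byte_range(83, 128, 128, mode=12)
print(size, round(size / 2**20, 2))  # 2720768 2.59
print(start >= size)                 # True: frame 83 begins at end of file
```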

PS2: Removing the particles that belong to the faulty MRC file from the .cs files fixed the problem. Interestingly, the same error occurred three times (twice after re-running the Extract job on the CPU and once with GPU extraction). Previously, these same micrographs never caused an error after extraction.
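Removing those particles can also be scripted. The sketch below assumes that the .cs file is a plain NumPy structured array readable with `np.load` and that it stores paths in a `blob/path` field. The helper names are hypothetical. The file name shown in the error appears to be a cache link rather than the original stack name, so the original path would need to be looked up first. Passing the full file name avoids accidental partial matches. Writing to a new file is safer than overwriting the original.

```python
import numpy as np

def drop_particles_from_file(cs, path_suffix, path_field="blob/path"):
    """Return a copy of structured array `cs` without the rows whose
    path field ends with `path_suffix`. `path_field` is an assumed name."""
    paths = cs[path_field]
    # Paths are often stored as fixed-width bytes ("S" dtype); match the type.
    suffix = path_suffix.encode() if paths.dtype.kind == "S" else path_suffix
    mask = np.char.endswith(paths, suffix)
    return cs[~mask]

def rewrite_cs(src, dst, path_suffix):
    """Load src, drop matching particles, save to dst; return rows removed."""
    cs = np.load(src)
    kept = drop_particles_from_file(cs, path_suffix)
    # Saving through a file handle keeps the ".cs" name (np.save would
    # otherwise append ".npy" to a filename without that extension).
    with open(dst, "wb") as f:
        np.save(f, kept)
    return len(cs) - len(kept)
```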

Best,

Dawid