Hello,

I have a few suggestions on some small aspects of the cryoSPARC system:

- Periodically output meta files for long-running tasks.

These include, but are not limited to:

a) “exposures.bson” for live sessions (not too important, because they are in the database too)

b) “particles.cs”, “exposures.cs”, etc., for jobs dealing with movies (patch motion, local motion, ref-mo, etc.). This is the important one. If everything else would take too long, please at least implement this.

For example, with the local motion job, it seems to me that the meta info of the extracted particles is held only in the memory of the worker. If the job dies (due to a server restart, running out of storage, running out of memory, etc.), we won’t be able to use the particles that were already extracted. (The number of extracted particles is often different from the input stack due to rejection, so trying to relink with the input particles.cs is troublesome.)

These files could also be used by “mark job as completed”. I realize that it might be too much work to implement “Continue from a certain point” for many job types. But the ability to rescue what has already been finished would be quite nice.

(Or maybe I just haven’t found where that info is saved while the job is running?)
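To make the periodic output idea concrete, here is a minimal sketch in plain Python. It is not cryoSPARC code: `write_checkpoint`, `process_with_checkpoints`, the JSON format and the `every` parameter are all hypothetical stand-ins (a real job would presumably write a .cs file instead). The point is only that metadata for finished items is written atomically at intervals, so whatever was completed before a crash remains usable.

```python
import json
import os
import tempfile


def write_checkpoint(path, records):
    """Atomically write `records` to `path` (temp file first, then rename)."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as handle:
            json.dump(records, handle)
            handle.flush()
            os.fsync(handle.fileno())  # make sure the bytes reach the disk
        os.replace(tmp_path, path)  # atomic when both paths share a filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def process_with_checkpoints(items, process_one, checkpoint_path, every=100):
    """Process items one by one, saving finished metadata every `every` results.

    `process_one` returns a metadata dict, or None when the item is rejected.
    """
    done = []
    for item in items:
        result = process_one(item)
        if result is None:  # rejected item: nothing to record
            continue
        done.append(result)
        if len(done) % every == 0:
            write_checkpoint(checkpoint_path, done)
    write_checkpoint(checkpoint_path, done)  # final, complete output
    return done
```

Because the checkpoint only ever lists items that were actually kept, rejected particles never need to be relinked against the input stack after a failure.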

- Local log on the worker computer. Jobs sometimes die due to unexpected data. Therefore I suggest that the jobs save some sort of text log locally on the worker computer. Maybe a “cryosparc_run” dir in /tmp? The reason to save on the worker computer is that it avoids losing the info due to network or master problems. A “last millisecond” message can be saved this way just before the job fails. This should help troubleshooting.

(Not quite related and not a request, just a wild thought: can we keep the job running on the worker during a master restart? My impression is that sometimes jobs die or get marked as failed simply because heartbeats were missed.)
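For the local worker log suggested above, a rough sketch of how it could behave, again in plain Python and not based on any cryoSPARC internals. `LocalJobLog` and `run_with_local_log` are hypothetical names, and the “cryosparc_run” directory under the system temp dir is just the location proposed above. Each line is flushed and fsynced right away, so the last message written before a failure is already on the worker's disk.

```python
import os
import tempfile
import time


class LocalJobLog:
    """Append-only text log stored on the worker's local disk."""

    def __init__(self, job_uid, root=None):
        # Default location is an assumption: <system temp dir>/cryosparc_run
        root = root or os.path.join(tempfile.gettempdir(), "cryosparc_run")
        os.makedirs(root, exist_ok=True)
        self.path = os.path.join(root, f"{job_uid}.log")
        self._handle = open(self.path, "a", encoding="utf-8")

    def write(self, message):
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        self._handle.write(f"{stamp} {message}\n")
        self._handle.flush()
        os.fsync(self._handle.fileno())  # survive a crash right after this call

    def close(self):
        self._handle.close()


def run_with_local_log(job_uid, work, root=None):
    """Run `work(log)` and record a final message if it raises."""
    log = LocalJobLog(job_uid, root)
    try:
        log.write("job started")
        result = work(log)
        log.write("job finished")
        return result
    except BaseException as exc:
        log.write(f"job failed: {type(exc).__name__}: {exc}")
        raise
    finally:
        log.close()
```

A process that is killed outright (for example by the out-of-memory killer) cannot write a final message, but since every line is synced as it is written, the log still shows the last step that was reached.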

- An extra option added to cryoSPARC live setup: “Stop Session if no exposure is discovered for ____ minutes.” The behavior would be: if no new exposure is discovered within this set length of time, the session stops once all current exposures have been processed.
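The stop rule described above could be sketched like this. `IdleStopPolicy` and its methods are hypothetical names for illustration, not part of cryoSPARC Live; the clock is injectable only so that the logic can be tested without waiting.

```python
import time


class IdleStopPolicy:
    """Decide when a session should stop after a period with no new exposures."""

    def __init__(self, idle_minutes, clock=time.monotonic):
        self.idle_seconds = idle_minutes * 60
        self._clock = clock
        self._last_discovery = clock()

    def exposure_discovered(self):
        """Call whenever a new exposure shows up; resets the idle timer."""
        self._last_discovery = self._clock()

    def should_stop(self, pending_exposures):
        """True only if the idle time has elapsed AND nothing is left to process."""
        idle = self._clock() - self._last_discovery
        return idle >= self.idle_seconds and pending_exposures == 0
```

The two conditions are kept separate on purpose: the timeout alone never interrupts exposures that are still being processed.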

- An extra option on workspaces that are also live sessions: “Remove temporary display images.” This is for removing all display images generated during the live session. It is very common for a live session to generate GBs of preview images and save them all in the database. If the live session is never deleted, these few GB of images will remain in the database. Deleting these files won’t affect processing; it only makes the live interface slightly uglier.

-

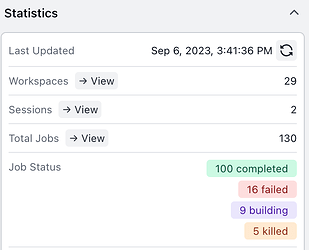

Scan database for zombie files. This will search fs.files for files belonging to deleted projects or deleted jobs. Somehow they do arise over time.
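The zombie scan described above boils down to comparing the owners recorded on each file against the projects and jobs that still exist. Here is a small sketch of that comparison on plain dictionaries. The field names `project_uid` and `job_uid` are my assumption about how fs.files entries are labeled, and `find_zombie_files` is a hypothetical helper, not an existing cryoSPARC tool; it only reports candidates and deletes nothing.

```python
def find_zombie_files(file_docs, live_projects, live_jobs):
    """Return the _id of every file whose owning project or job no longer exists.

    file_docs:     iterable of dicts shaped like fs.files documents
                   (assumed keys: "_id", "project_uid", "job_uid")
    live_projects: set of existing project UIDs, e.g. {"P1", "P2"}
    live_jobs:     set of existing (project_uid, job_uid) pairs
    """
    zombies = []
    for doc in file_docs:
        project = doc.get("project_uid")
        job = doc.get("job_uid")
        if project is None:
            continue  # no owner recorded; leave for manual review
        if project not in live_projects:
            zombies.append(doc["_id"])  # whole project was deleted
        elif job is not None and (project, job) not in live_jobs:
            zombies.append(doc["_id"])  # project exists, job was deleted
    return zombies
```

Reporting first and deleting in a separate, confirmed step seems the safer design, since a wrong guess about the field names would otherwise remove files that are still in use.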

- Suppress some unnecessary information. For example, the TIFF format error message in job.log for jobs dealing with EER files.

- Do not generate a pdf version of the plots in the 3D var job. The pdf version of the particle distribution plot in the 3D var job is very large. It seems that it may contain up to millions of individual dots, and no pdf viewer can open it. These files do quite effectively increase the size of the database, though.

Zhijie