Hi,

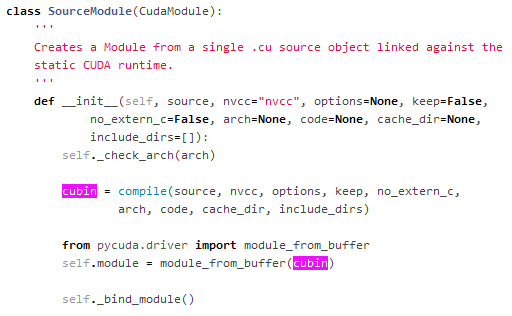

It looks like something does not recognize the sm_89 architecture of the GPU?

From what I found when searching for this cubin error, it is, as far as I understand, related to checking/compiling for the compute capability (code/arch) of the GPU model.
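To make the idea concrete, here is a rough sketch of how a compute capability maps to an `sm_XY` name and how that can be compared with a CUDA toolkit version. The `MIN_TOOLKIT` table and both helper names are my own for illustration, not part of pycuda or CryoSPARC; the table is hand-written from what I know of the CUDA release notes and is not read from your installation.

```python
# Minimal sketch: map a compute capability to its sm_XY name and compare it
# with the first CUDA toolkit release that can target that architecture.
# MIN_TOOLKIT is a small hand-written table (an assumption for illustration).
MIN_TOOLKIT = {
    (7, 5): (10, 0),  # Turing
    (8, 0): (11, 0),  # Ampere (A100)
    (8, 6): (11, 1),  # Ampere (RTX 30xx)
    (8, 9): (11, 8),  # Ada Lovelace (RTX 40xx)
}


def arch_name(capability):
    """Turn a (major, minor) compute capability into an 'sm_XY' string."""
    major, minor = capability
    return "sm_{}{}".format(major, minor)


def toolkit_supports(capability, toolkit_version):
    """Return True/False, or None when the capability is not in the table."""
    needed = MIN_TOOLKIT.get(tuple(capability))
    if needed is None:
        return None
    return tuple(toolkit_version) >= needed


if __name__ == "__main__":
    cap = (8, 9)
    for toolkit in [(11, 7), (11, 8)]:
        print(arch_name(cap), "with CUDA %d.%d ->" % toolkit,
              toolkit_supports(cap, toolkit))
```

If the toolkit that is actually used for compiling is older than the one the GPU needs, an "unrecognized architecture" style error would be the expected symptom.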

What happens when you run the hello_gpu.py example from the pycuda 2022.2.2 documentation with CUDA version 11.8 and driver 525?

cryosparc_worker/bin/cryosparcw call python /path/to/hello_gpu.py

Can you also send the output of the following script when you run it with: cryosparc_worker/bin/cryosparcw call python /path/to/code.py

    import pycuda
    import pycuda.driver as cuda
    import pycuda.autoinit  # initializes CUDA and creates a context

    cuda.init()

    # Summary of every CUDA device visible to the worker environment
    print('Detected {} CUDA Capable device(s)\n'.format(cuda.Device.count()))
    for i in range(cuda.Device.count()):
        gpu_device = cuda.Device(i)
        print('Device {}: {}'.format(i, gpu_device.name()))
        compute_capability = float('%d.%d' % gpu_device.compute_capability())
        print('\t Compute Capability: {}'.format(compute_capability))
        print('\t Total Memory: {} megabytes'.format(gpu_device.total_memory() // (1024**2)))

    # Full attribute dump for the first device
    print('%d device(s) found.' % cuda.Device.count())
    dev = cuda.Device(0)
    print('Device: %s' % dev.name())
    print(' Compute Capability: %d.%d' % dev.compute_capability())
    print(' Total Memory: %s KB' % (dev.total_memory() // 1024))
    atts = [(str(att), value)
            for att, value in dev.get_attributes().items()]
    atts.sort()
    for att, value in atts:
        print(' %s: %s' % (att, value))
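It may also be worth checking which architectures the CUDA compiler itself knows about. A small sketch, assuming `nvcc` is on the PATH and accepts `--list-gpu-code` (as far as I know this option exists in CUDA 11 and later); the helper names are made up for this example, and the `nvcc` found on the PATH is not necessarily the one CryoSPARC uses:

```python
import shutil
import subprocess


def parse_gpu_codes(text):
    """Collect the sm_XY tokens from text such as `nvcc --list-gpu-code` output."""
    return sorted({tok for tok in text.split() if tok.startswith("sm_")})


def nvcc_gpu_codes(nvcc="nvcc"):
    """Ask nvcc for its supported sm_XY targets; None if that is not possible."""
    path = shutil.which(nvcc)
    if path is None:
        return None
    # Assumption: this nvcc understands --list-gpu-code
    result = subprocess.run([path, "--list-gpu-code"],
                            capture_output=True, text=True)
    if result.returncode != 0:
        return None
    return parse_gpu_codes(result.stdout)


if __name__ == "__main__":
    codes = nvcc_gpu_codes()
    if codes is None:
        print("nvcc not found on PATH, or it does not accept --list-gpu-code")
    else:
        print("sm_89 supported:", "sm_89" in codes)
```

If sm_89 is missing from that list, the toolkit being used is probably older than 11.8.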

Maybe this post will help?

https://discuss.cryosparc.com/t/cryosparc-unable-to-run-any-2d-or-3d-job/4391/4?u=mo_o

Best,

Mo