Dear Cryosparc team,

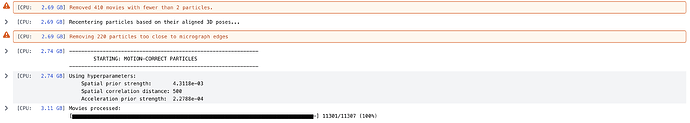

I encountered a problem when running reference-based motion correction (CryoSPARC v4.5.1). The job runs well until only a few micrographs remain to be processed. As shown in the screenshot, it then stays in that state indefinitely, and no error message is reported.

Do you have any idea why this happens? I re-ran the job several times, and each run ended with the same problem.

Best,

Jiangfeng

Welcome to the forum @Jiangfeng .

Please can you post the outputs of these commands

- On the CryoSPARC master

cryosparcm cli "get_job('P99', 'J199', 'job_type', 'version', 'params_spec', 'instance_information', 'status')"

cryosparcm joblog P99 J199 | tail -n 20

cryosparcm eventlog P99 J199 | tail -n 20

where you replace P99 and J199 with the stuck job’s project and job IDs

- On the worker node

hostname

cat /sys/kernel/mm/transparent_hugepage/enabled

free -h

nvidia-smi --query-gpu=index,name,compute_mode --format=csv

Hello,

Thanks for your answer, and sorry for my late response.

The outputs from the CryoSPARC master are:

*cryosparcm cli "get_job('P435', 'J1495', 'job_type', 'version', 'params_spec', 'instance_information', 'status')"*

{‘_id’: ‘66c088ee7134a261579afdcc’, ‘instance_information’: {‘CUDA_version’: ‘11.8’, ‘available_memory’: ‘497.46GB’, ‘cpu_model’: ‘AMD EPYC 7452 32-Core Processor’, ‘driver_version’: ‘12.2’, ‘gpu_info’: [{‘id’: 0, ‘mem’: 25425608704, ‘name’: ‘NVIDIA RTX A5000’, ‘pcie’: ‘0000:01:00’}, {‘id’: 1, ‘mem’: 25425608704, ‘name’: ‘NVIDIA RTX A5000’, ‘pcie’: ‘0000:41:00’}, {‘id’: 2, ‘mem’: 25425608704, ‘name’: ‘NVIDIA RTX A5000’, ‘pcie’: ‘0000:81:00’}, {‘id’: 3, ‘mem’: 25425608704, ‘name’: ‘NVIDIA RTX A5000’, ‘pcie’: ‘0000:c1:00’}], ‘ofd_hard_limit’: 131072, ‘ofd_soft_limit’: 1024, ‘physical_cores’: 32, ‘platform_architecture’: ‘x86_64’, ‘platform_node’: ‘gpu11’, ‘platform_release’: ‘6.1.0-23-amd64’, ‘platform_version’: ‘#1 SMP PREEMPT_DYNAMIC Debian 6.1.99-1 (2024-07-15)’, ‘total_memory’: ‘503.56GB’, ‘used_memory’: ‘2.11GB’}, ‘job_type’: ‘reference_motion_correction’, ‘params_spec’: {‘compute_num_gpus’: {‘value’: 4}}, ‘project_uid’: ‘P435’, ‘status’: ‘killed’, ‘uid’: ‘J1495’, ‘version’: 'v4.5.1

*cryosparcm joblog P435 J1495 | tail -n 30*

refmotion worker 0 (NVIDIA RTX A5000)

BFGS iterations: 429

scale (alpha): 10.433222

noise model (sigma2): 43.831821

TIME (s) SECTION

0.000087205 sanity

2.030047313 read movie

0.036165983 get gain, defects

0.075999380 read bg

0.001080994 read rigid

0.730054137 prep_movie

0.574873451 extract from frames

0.000600435 extract from refs

0.000000441 adj

0.000000170 bfactor

0.086613108 rigid motion correct

0.000517909 get noise, scale

0.156248114 optimize trajectory

0.197749595 shift_sum patches

0.002173620 ifft

0.003044767 unpad

0.000092004 fill out dataset

0.011586037 write output files

3.906934662 — TOTAL —

followed by many lines like

========= sending heartbeat at 2024-08-18 10:20:52.647827

========= sending heartbeat at 2024-08-18 10:21:02.672777

========= sending heartbeat at 2024-08-18 10:21:12.697154
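Because the heartbeat lines repeat every ten seconds, they can push the last informative lines out of a short `tail`. A minimal sketch of filtering them out first is shown below. The helper name `strip_heartbeats` is hypothetical, and the pattern assumes the heartbeat lines always begin exactly as in the excerpt above.

```shell
# Drop heartbeat lines from a job log read on stdin and keep everything else.
# Assumption: heartbeat lines start with "========= sending heartbeat at ".
strip_heartbeats() {
    # "|| true" keeps the exit status at 0 even if every line was a heartbeat.
    grep -v '^========= sending heartbeat at ' || true
}

# Possible usage with the job from this thread (not run here):
#   cryosparcm joblog P435 J1495 | strip_heartbeats | tail -n 20
```

This only changes what is displayed; it does not alter the job log itself.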

*cryosparcm eventlog P435 J1495 | tail -n 20*

[Sat, 17 Aug 2024 11:30:28 GMT] [CPU RAM used: 7036 MB] Plotting trajectories and particles for movie 7426914197769274664

J166/imported/007426914197769274664_U_018_1-9.tif

[Sat, 17 Aug 2024 11:30:42 GMT] [CPU RAM used: 8215 MB] Plotting trajectories and particles for movie 14884769487704804355

J166/imported/014884769487704804355_U_004_1-4.tif

[Sat, 17 Aug 2024 11:30:52 GMT] [CPU RAM used: 7273 MB] Plotting trajectories and particles for movie 14351690595754745796

J166/imported/014351690595754745796_U_005_1-5.tif

[Sat, 17 Aug 2024 11:31:06 GMT] [CPU RAM used: 6434 MB] Plotting trajectories and particles for movie 14030516046577853997

J166/imported/014030516046577853997_U_031_1-4.tif

[Sat, 17 Aug 2024 11:31:11 GMT] [CPU RAM used: 7550 MB] Plotting trajectories and particles for movie 15358186270943389465

J166/imported/015358186270943389465_U_006_1-6.tif

[Sat, 17 Aug 2024 11:31:20 GMT] [CPU RAM used: 5608 MB] Plotting trajectories and particles for movie 12616451429076686867

J166/imported/012616451429076686867_U_013_1-4.tif

[Sat, 17 Aug 2024 11:31:28 GMT] [CPU RAM used: 5948 MB] Plotting trajectories and particles for movie 7159987702629950857

J166/imported/007159987702629950857_U_007_1-7.tif

[Sat, 17 Aug 2024 11:31:36 GMT] [CPU RAM used: 6827 MB] Plotting trajectories and particles for movie 13395720043776224586

J166/imported/013395720043776224586_U_009_1-9.tif

[Sat, 17 Aug 2024 11:31:47 GMT] [CPU RAM used: 5211 MB] Plotting trajectories and particles for movie 2048166277082130793

J166/imported/002048166277082130793_U_010_1-1.tif

[Sat, 17 Aug 2024 11:31:52 GMT] [CPU RAM used: 7138 MB] No further example plots will be made, but the job is still running (see progress bar above).

[Sun, 18 Aug 2024 08:29:41 GMT] **** Kill signal sent by unknown user ****

In the end, the kill signal was sent by me.

On the worker node I typed the following commands:

hostname

cat /sys/kernel/mm/transparent_hugepage/enabled

free -h

nvidia-smi --query-gpu=index,name,compute_mode --format=csv

Here is the output:

sky71

[always] madvise never

total used free shared buff/cache available

Mem: 94Gi 3.5Gi 76Gi 3.8Mi 15Gi 90Gi

Swap: 29Gi 10Gi 18Gi

-bash: nvidia-smi: command not found

Best,

Jiangfeng

Based on information from the get_job() command above, the commands

hostname

cat /sys/kernel/mm/transparent_hugepage/enabled

free -h

nvidia-smi --query-gpu=index,name,compute_mode --format=csv

should be run on the gpu11 computer instead of sky71. What are the commands’ outputs on gpu11?

Hi,

Here is the output from gpu11 computer.

root@gpu11:~# hostname

gpu11

root@gpu11:~# cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

root@gpu11:~# free -h

total used free shared buff/cache available

Mem: 503Gi 87Gi 82Gi 23Mi 337Gi 415Gi

Swap: 29Gi 600Mi 29Gi

root@gpu11:~# nvidia-smi --query-gpu=index,name,compute_mode --format=csv

index, name, compute_mode

0, NVIDIA RTX A5000, Default

1, NVIDIA RTX A5000, Default

2, NVIDIA RTX A5000, Default

3, NVIDIA RTX A5000, Default

Best,

Jiangfeng

Thanks @Jiangfeng .

You may want to try whether the job still gets stuck after you disable transparent_hugepage (details).
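As a rough sketch of how the current setting can be read: the kernel marks the active mode with square brackets, so `[always] madvise never` on gpu11 means transparent hugepages are always on. The helper name `thp_active_mode` below is hypothetical, and the commented-out command is only one common way to switch the setting until the next reboot; the linked details may recommend a different or persistent method.

```shell
# Print the active THP mode from a line such as "[always] madvise never".
# The active mode is the word enclosed in square brackets.
thp_active_mode() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)].*/\1/p'
}

thp_file=/sys/kernel/mm/transparent_hugepage/enabled
if [ -r "$thp_file" ]; then
    echo "current THP mode: $(thp_active_mode "$(cat "$thp_file")")"
fi

# Assumption: a temporary change (root required, lost at reboot) could look like
#   echo never | sudo tee /sys/kernel/mm/transparent_hugepage/enabled
```

After a change, the same `cat` command from earlier in this thread should show the brackets around `never`.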

If the issue persists, please can you post (from the compute node when the job is stuck but nominally still running)

- a screenshot of output from the command

htop

- outputs of these commands

hostname

cat /sys/kernel/mm/transparent_hugepage/enabled

free -h

Hi,

Sorry for the late response. Recently, I re-ran the same job without changing anything. Sometimes it finished properly, but sometimes it got stuck when the job was almost done. I don’t know why.

Best,

Jiangfeng

@Jiangfeng If you wish, you may

You can also try

- marking the job as complete. This action may or may not make available some outputs that have been generated already.

- splitting the exposures dataset into smaller chunks using the Exposure Sets Tool and running a reference-based motion correction job for each of the chunks.