Hi @builab,

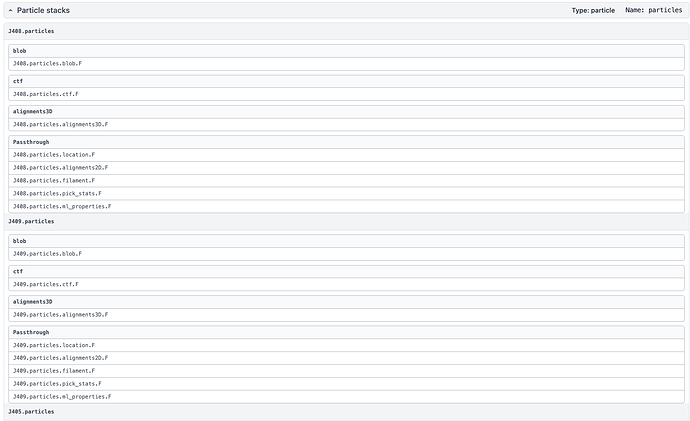

Thanks for this, and apologies for the delayed response. Based on your described workflow, we’ve identified what’s happening. When the particle subtraction job creates subtracted particles, it leaves the particles’ unique IDs (UIDs) unchanged from the inputs. This is so that a correspondence can be maintained between the subtracted particles and their originals.

(Side note: this correspondence is needed in a few cases, one example being:

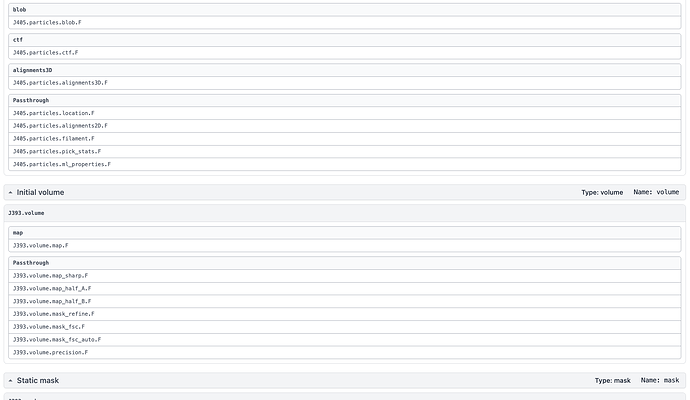

- Following a Local Refinement on subtracted particles with a Homogeneous Reconstruction Only, where you want to use the original image data (`blob` input). In this case, the Reconstruct Only job will need as input: the low-level `alignments3D` output of the local refinement on subtracted particles, together with the `blob` and `ctf` outputs from the original particles.)
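To make that correspondence concrete, here is a toy sketch in plain Python. It is not the CryoSPARC API: the dictionaries, the UIDs, the field values, and the `join_by_uid` helper are all made up for illustration. It only shows how a shared UID lets the alignments from one job be paired with the image data from another.

```python
# Toy model: each "dataset" maps a particle UID to that dataset's fields.
# All UIDs, paths, and values below are hypothetical.
original = {
    101: {"blob": "orig/p101.mrc", "ctf": "ctf-101"},
    102: {"blob": "orig/p102.mrc", "ctf": "ctf-102"},
    103: {"blob": "orig/p103.mrc", "ctf": "ctf-103"},
}

# Output of a refinement on subtracted particles: same UIDs, new alignments.
refined_subtracted = {
    101: {"alignments3D": "pose-101"},
    103: {"alignments3D": "pose-103"},
}


def join_by_uid(alignments, images):
    """Combine the fields of every UID that appears in both inputs."""
    shared = alignments.keys() & images.keys()
    return {uid: {**images[uid], **alignments[uid]} for uid in sorted(shared)}


combined = join_by_uid(refined_subtracted, original)
print(combined[101])
```

If the subtracted particles had been given new UIDs, `shared` would be empty and nothing could be paired, which is why the subtraction job keeps the UIDs as they are.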

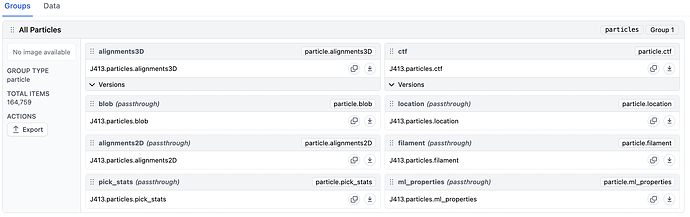

However, the workflow you’ve described is important and should be supported. The main detail needed to get this to work is to ensure that the UIDs of the subtracted particles and the original particles are different (disjoint), so that the final refinement will identify them as different particle sets. As you alluded to, you can get around this in CryoSPARC by re-extracting the original particles from J2 (which will regenerate their UIDs), then combining these with the subtracted particles from J5 into a single local refinement. But this method would waste space by writing out all of the particles again.
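As a rough illustration of why disjoint UIDs matter, here is a toy sketch in plain Python. How CryoSPARC actually merges particle inputs is not described in this thread, so the sketch simply assumes a merge keyed by UID. The `combine` and `with_fresh_uids` helpers are hypothetical stand-ins and are not part of CryoSPARC or CryoSPARC Tools.

```python
import random


def combine(*particle_sets):
    """Merge particle sets keyed by UID; a repeated UID overwrites the earlier entry."""
    merged = {}
    for particles in particle_sets:
        merged.update(particles)
    return merged


def with_fresh_uids(particles, taken, seed=0):
    """Copy a particle set under new random 64-bit UIDs that are not in `taken`."""
    rng = random.Random(seed)
    used = set(taken)
    fresh = {}
    for record in particles.values():
        uid = rng.getrandbits(64)
        while uid in used:  # retry in the unlikely event of a clash
            uid = rng.getrandbits(64)
        used.add(uid)
        fresh[uid] = record
    return fresh


original = {1: "original image 1", 2: "original image 2"}
subtracted = {1: "subtracted image 1", 2: "subtracted image 2"}  # same UIDs

print(len(combine(original, subtracted)))  # 2: each shared UID is counted once

reassigned = with_fresh_uids(subtracted, taken=original)
print(len(combine(original, reassigned)))  # 4: the two sets are now disjoint
```

With shared UIDs the combined set has only as many entries as one input. Once the subtracted copies carry their own UIDs, both sets survive the merge.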

You can instead use CryoSPARC Tools to reassign the UIDs directly. See https://tools.cryosparc.com/intro.html#usage for usage info, including how to connect to a CryoSPARC instance in a script. The example script below takes the outputs of a specified job (e.g. J5), reassigns the particles’ UIDs, then outputs the reassigned particles to a new “External” job in the specified workspace – just fill in the info at the top of the script. These particles can then be combined with the original particles from J2.

```python
import numpy as n
from cryosparc.tools import CryoSPARC
from cryosparc.dataset import Dataset

# Connect to your CryoSPARC Instance: see https://tools.cryosparc.com/intro.html#usage
cs = CryoSPARC(
    license="xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
    host="localhost",
    base_port=39000,
    email="youremail@example.com",
    password="yourpassword"
)

puid = "<your project ID here>"  # e.g. P100
wuid = "<your workspace ID here>"  # e.g. W10
source_particle_juid = "<job UID of source particles here>"  # e.g. J5

# =================================================

project = cs.find_project(puid)
job = project.create_external_job(wuid, title="Import Reassigned UID Subtracted Particles")
job.connect("subtracted_particles", source_particle_juid, "particles")
job.add_output("particle", "reassigned_uid_particles", slots=['blob', 'ctf', 'alignments3D'])

job.start()

particles = job.load_input("subtracted_particles", ['blob', 'ctf', 'alignments3D'])
out_particles = particles.copy()
out_particles.reassign_uids()

if len(n.intersect1d(particles['uid'], out_particles['uid'])) == 0:
    print("No UID collisions found")
else:
    print("UID collisions found!")

job.alloc_output('reassigned_uid_particles', out_particles)
job.save_output('reassigned_uid_particles', out_particles)

job.stop()
```

The workflow you’ve described is a great example of how to use Volume Alignment Tools together with Particle Subtraction to handle NCS. To support this without having to go through this UID-reassigning workaround, we’ve triaged this as something to support and are thinking about the simplest way to implement this!

EDIT: Amending my reply – re-extraction will not regenerate the particles’ UIDs, so re-extracting the original particles won’t circumvent this issue. The only way to do this is to reassign the UIDs manually, using the provided script.

Best,

Michael