Hello, I want to do particle subtraction with a map imported from outside cryoSPARC. However, you recommend the following:

Note: Both half-maps should be present in the input volume dataset; otherwise, if only the full map is present, signal from the full map will be introduced into each of the subtracted particles, which may result in particle half-sets that are no longer fully independent.

I also imported the half-maps, but the particle subtraction job (like the local refinement job) does not allow me to input more than one map.

Could anyone help me with this problem?

Thank you

Adrian

Hi @Adrian,

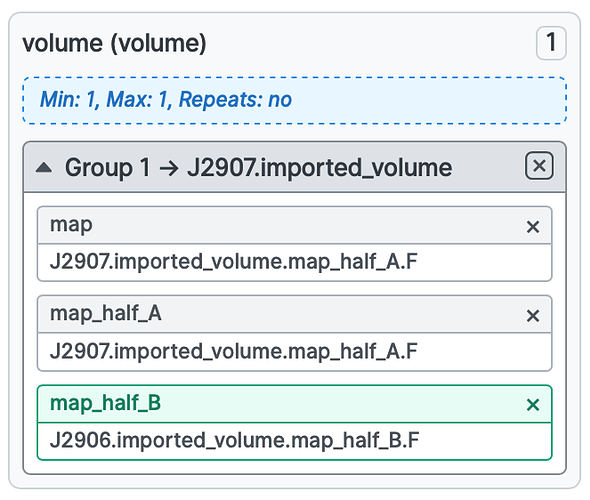

You can do this by using the low-level results feature of the job builder. For your particular case, you can follow these steps:

- Import each half-map in a separate Import Volumes job, making sure that one of them has volume type `map_half_A` and the other has `map_half_B`.
- Connect the `map_half_A` volume group to the particle subtraction job as usual.
- Navigate to the outputs tab of the `map_half_B` import job.
- Override the `map_half_B` low-level result in the particle subtraction job with the imported one (see image below).

Before queueing the job, the volume input should look like the image below. Note that the map_half_B result is green, and is sourced from a different job than the map_half_A result.

You should make sure that the job doesn’t print “Not using halfmaps” to the streamlog; if that message is absent, the job read each half-map separately. Let me know if you have any questions or difficulties.
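If you copy the streamlog text out of the job (for example, by pasting it into a file), the check can be sketched in a few lines of Python. This is only an illustration: the helper name `halfmaps_used` is hypothetical, and it assumes the message appears in the log exactly as quoted above.

```python
def halfmaps_used(streamlog_text: str) -> bool:
    """Return True if the warning quoted above is absent from the log text.

    Assumption: the job reports the fallback with the phrase
    "Not using halfmaps"; the comparison ignores letter case.
    """
    return "not using halfmaps" not in streamlog_text.lower()


# Example with made-up log text (not real cryoSPARC output):
sample_log = "Loading volume...\nNot using halfmaps\nDone"
print(halfmaps_used(sample_log))
```

A result of `False` would mean the job fell back to the full map and the low-level override did not take effect.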

Best,

Michael

Hi @mmclean

I followed your instructions, but unfortunately I got the following error. I do not know if it is related to the half-maps:

Traceback (most recent call last):
  File "cryosparc_worker/cryosparc_compute/run.py", line 84, in cryosparc_compute.run.main
  File "cryosparc_worker/cryosparc_compute/jobs/local_refine/run_psub.py", line 90, in cryosparc_compute.jobs.local_refine.run_psub.run_particle_subtract
  File "/home/carlos/cryosparc/cryosparc_worker/cryosparc_compute/particles.py", line 31, in __init__
    self.from_dataset(d) # copies in all data
  File "/home/carlos/cryosparc/cryosparc_worker/cryosparc_compute/dataset.py", line 473, in from_dataset
    if len(other) == 0: return self
TypeError: object of type 'NoneType' has no len()

Thank you

Adrian

Hi @Adrian,

Thanks for reporting. This looks like an issue with the particles, rather than the half-maps. Were the particles imported directly, or were they picked/extracted within cryoSPARC itself? Also, how many particles does the input particle stack contain (you can see this in the outputs tab of the parent job)?
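For context, the last frame of the traceback fails on `len(other)`, which is the error Python raises when `None` is passed where a dataset is expected. The sketch below reproduces that behaviour with a hypothetical `ToyDataset` class; it is a stand-in for illustration only and is not cryoSPARC's actual code.

```python
class ToyDataset:
    """Hypothetical stand-in for a particle dataset (not cryoSPARC's class)."""

    def __init__(self):
        self.rows = []

    def from_dataset(self, other):
        # Mirrors the failing line in the traceback: len() is called on the input.
        if len(other) == 0:
            return self
        self.rows = list(other)
        return self


def try_load(particles):
    """Attempt to load particles and report the outcome as a string."""
    try:
        ToyDataset().from_dataset(particles)
        return "ok"
    except TypeError as exc:
        return f"TypeError: {exc}"


print(try_load([]))      # an empty stack loads without error
print(try_load(None))    # a missing stack raises the TypeError seen above
```

An empty stack would pass the `len(other) == 0` check, so the error points to the particle input being absent altogether rather than merely empty.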

Best,

Michael

Hi mmclean,

The particles were picked, extracted, and aligned (NU-refinement) within cryoSPARC. The input particle stack contains 797754 particles.

I have two volumes that I want to use for particle subtraction and local refinement: one comes from cryoSPARC itself (from the same NU-refinement as the particles) and the other from Relion. The mask was made with the map from Relion, so I had to move the map from cryoSPARC to the same orientation as the one from Relion for the mask to match the region I want. I also changed the box size of the mask, because the box size differs between the two maps.
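One way to double-check that a mask and a map share the same box size and pixel size is to compare their MRC headers. The sketch below is a minimal illustration, assuming little-endian MRC files with the standard 1024-byte header layout; the helper names `read_mrc_box` and `same_grid` are hypothetical, and the check says nothing about whether the two volumes are in the same orientation.

```python
import struct


def read_mrc_box(header: bytes):
    """Return ((nx, ny, nz), voxel_size_x) from the start of an MRC header.

    Assumes a little-endian file: nx, ny, nz are at bytes 0-11,
    mx at byte 28, and the x cell dimension (in angstroms) at byte 40.
    """
    if len(header) < 52:
        raise ValueError("MRC header too short")
    nx, ny, nz = struct.unpack_from("<3i", header, 0)
    (mx,) = struct.unpack_from("<i", header, 28)
    (cell_x,) = struct.unpack_from("<f", header, 40)
    voxel = cell_x / mx if mx else float("nan")
    return (nx, ny, nz), voxel


def same_grid(header_a: bytes, header_b: bytes, tol: float = 1e-3) -> bool:
    """True if both headers report the same box and x pixel size (within tol)."""
    box_a, vox_a = read_mrc_box(header_a)
    box_b, vox_b = read_mrc_box(header_b)
    return box_a == box_b and abs(vox_a - vox_b) <= tol
```

The headers can be obtained with `open(path, "rb").read(1024)` for each file, where `path` is a placeholder for the map or mask location.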

Best,

Adrian

Hi @Adrian,

Just to confirm, was only one particle stack input into the particle subtraction job? This error suggests something is going wrong when loading the particles. Could you try inputting the same particles into a “Homogeneous reconstruction only” job, along with the mask, and check whether the job completes successfully and gives the expected reconstruction of the structure?

Best,

Michael