It turns out that the sister computer (identical specs, in Jeff Lee’s lab) did have similar problems with the computer shutting off. They currently have things working with:

- CUDA 10.2

- NVIDIA driver 440.31

- cryoSPARC 2.13.2

I did some testing of jobs with:

- NVIDIA driver 410.48

- CUDA 10.0.130

- cryoSPARC 2.12.4
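When bisecting across driver and toolkit versions like this, it helps to record exactly what each test ran with. A minimal sketch, assuming `nvidia-smi` and `nvcc` are on `PATH`; the helper names are hypothetical. Note that the cryoSPARC worker may be configured with its own CUDA directory, so the `nvcc` on `PATH` is not necessarily the one the worker uses.

```python
import re
import subprocess


def parse_nvcc_release(text):
    """Return the toolkit version (e.g. '10.0.130') from `nvcc --version` output."""
    match = re.search(r"\bV(\d+(?:\.\d+)+)", text)
    return match.group(1) if match else None


def version_tuple(version):
    """Turn '440.59' into (440, 59) so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def query_driver_versions():
    """Driver version reported by nvidia-smi, one entry per GPU."""
    out = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        universal_newlines=True,
    )
    return [line.strip() for line in out.splitlines() if line.strip()]


def query_nvcc_version(nvcc="nvcc"):
    """Toolkit version of the given nvcc binary (the one on PATH by default)."""
    out = subprocess.check_output([nvcc, "--version"], universal_newlines=True)
    return parse_nvcc_release(out)


if __name__ == "__main__":
    try:
        print("NVIDIA driver:", ", ".join(query_driver_versions()))
        print("CUDA toolkit (nvcc):", query_nvcc_version())
    except (OSError, subprocess.CalledProcessError) as exc:
        print("Could not query versions:", exc)
```

Passing a full path to `query_nvcc_version` (for example a specific toolkit's `bin/nvcc`) checks that install instead of whatever is first on `PATH`.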

I could run Import Movies (72 movies).

Then, with 1 movie, I could run Full-frame motion, CTF Estimation, Patch motion, Patch CTF, Blob picker, extract_micrographs (GPU and CPU), and Ab-Initio.

I ran two Ab-Initio jobs at the same time (one with SSD caching, one without), and the one without SSD caching failed at iteration 200 with `====== Job process terminated abnormally.`

A refinement failed during iteration 0:

-- DEV 0 THR 0 NUM 103 TOTAL 0.1339330 ELAPSED 1.1286489 --

Processed 205.000 images in 1.858s.

Computing FSCs...

Job is unresponsive - no heartbeat received in 30 seconds.
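The `no heartbeat received in 30 seconds` message, together with the `========= sending heartbeat` lines in the job logs, implies a timeout check of roughly this shape: the worker reports periodically, and the job is flagged once the reports stop for 30 s. The message therefore says only that the worker went silent, not why. A minimal sketch of the pattern; `HeartbeatWatchdog` and `FakeClock` are hypothetical names, not cryoSPARC's implementation.

```python
import time


class HeartbeatWatchdog:
    """Hypothetical heartbeat timeout check (illustration, not cryoSPARC's code).

    The monitored process calls beat() periodically; the supervisor polls
    is_unresponsive() and gives up once no beat has arrived for `timeout` s.
    """

    def __init__(self, timeout=30.0, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock  # injectable so the logic can be demonstrated
        self.last_beat = clock()

    def beat(self):
        self.last_beat = self.clock()

    def is_unresponsive(self):
        return self.clock() - self.last_beat > self.timeout


class FakeClock:
    """Manually advanced clock for demonstrating the timeout."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


clock = FakeClock()
watchdog = HeartbeatWatchdog(timeout=30.0, clock=clock)
clock.now = 10.0
watchdog.beat()                      # last heartbeat at t = 10 s
clock.now = 39.0
print(watchdog.is_unresponsive())    # False: 29 s since last beat
clock.now = 41.0
print(watchdog.is_unresponsive())    # True: 31 s since last beat
```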

The job log of the Ab-Initio job that worked (J33):

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J33/job.log

================= CRYOSPARCW ======= 2020-02-14 08:40:40.158212 =========

Project P3 Job J33

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 8689

========= monitor process now waiting for main process

MAIN PID 8689

abinit.run cryosparc2_compute.jobs.jobregister

***************************************************************

Running job J33 of type homo_abinit

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [0], u'RAM': [0], u'CPU': [0, 1]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in double_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in double_scalars

run_old(*args, **kw)

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/matplotlib/pyplot.py:516: RuntimeWarning: More than 20 figures have been opened. Figures created through the pyplot interface (`matplotlib.pyplot.figure`) are retained until explicitly closed and may consume too much memory. (To control this warning, see the rcParam `figure.max_open_warning`).

max_open_warning, RuntimeWarning)

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

***************************************************************

========= main process now complete.

========= monitor process now complete.
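The `RuntimeWarning: divide by zero encountered in float_scalars` and `invalid value encountered in ...` lines near the top of this log appear in J33, which completed successfully, so they are probably unrelated to the crashes. NumPy emits them whenever scalar arithmetic produces `inf` or `nan`, and then carries on. A minimal reproduction, assuming NumPy is installed; `float_scalars` is the float32 wording and `double_scalars` the float64 one, and recent NumPy releases word the message differently (e.g. `in scalar divide`).

```python
import warnings

import numpy as np

with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")  # record every warning, even repeats
    inf_result = np.float32(1.0) / np.float32(0.0)  # divide by zero -> inf
    nan_result = np.float32(0.0) / np.float32(0.0)  # invalid value -> nan

print(inf_result, nan_result)  # inf nan
for w in caught:
    print(w.category.__name__, "-", w.message)
```

No exception is raised by default; the job continues with `inf`/`nan` values and only the warning is logged.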

The job log of the one that didn’t work (J34):

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J34/job.log

================= CRYOSPARCW ======= 2020-02-14 08:41:11.079270 =========

Project P3 Job J34

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 8876

========= monitor process now waiting for main process

MAIN PID 8876

abinit.run cryosparc2_compute.jobs.jobregister

***************************************************************

Running job J34 of type homo_abinit

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [1], u'RAM': [1], u'CPU': [2, 3]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in double_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in double_scalars

run_old(*args, **kw)

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

malloc(): memory corruption

malloc(): memory corruption

========= main process now complete.

========= monitor process now complete.
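Comparing the `slots` entries of the two Allocated Resources lines, J33 (completed) was given GPU 0 and J34 (ended in `malloc(): memory corruption`) GPU 1. With so few runs this may well be coincidence (J45, where the machine rebooted, ran on GPU 0), but the GPU assignment is worth recording for each test. A minimal sketch that extracts the `slots` dict; `allocated_slots` is a hypothetical helper, and it assumes the full, unwrapped line as read straight from job.log.

```python
import ast


def allocated_slots(log_line):
    """Extract the per-job 'slots' dict from an 'Allocated Resources :' line.

    The value is a Python 2 dict repr; ast.literal_eval parses it safely,
    and Python 3 also accepts the u'' string prefixes.
    """
    _, _, payload = log_line.partition("Allocated Resources :")
    resources = ast.literal_eval(payload.strip())
    return resources["slots"]


# Trimmed copies of the J33 and J34 lines (the 'target' entry is shortened).
j33 = ("Allocated Resources : {u'lane': u'default', u'target': {u'type': u'node'}, "
       "u'slots': {u'GPU': [0], u'RAM': [0], u'CPU': [0, 1]}, u'fixed': {u'SSD': True}}")
j34 = ("Allocated Resources : {u'lane': u'default', u'target': {u'type': u'node'}, "
       "u'slots': {u'GPU': [1], u'RAM': [1], u'CPU': [2, 3]}, u'fixed': {u'SSD': True}}")

for name, line in [("J33", j33), ("J34", j34)]:
    print(name, "GPU", allocated_slots(line)["GPU"])
```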

Then I updated the NVIDIA driver to the most recent version (440.59), keeping CUDA 10.0 and cryoSPARC 2.12.4.

Full-frame motion (1 movie) completed, but I could not get Ab-Initio to run. The job log didn’t show any error, as if the computer restarted while everything was working fine:

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J45/job.log

================= CRYOSPARCW ======= 2020-02-14 10:44:34.059865 =========

Project P3 Job J45

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 3422

========= monitor process now waiting for main process

MAIN PID 3422

abinit.run cryosparc2_compute.jobs.jobregister

***************************************************************

Running job J45 of type homo_abinit

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [0], u'RAM': [0], u'CPU': [0, 1]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: divide by zero encountered in double_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in float_scalars

run_old(*args, **kw)

cryosparc2_compute/jobs/runcommon.py:1490: RuntimeWarning: invalid value encountered in double_scalars

run_old(*args, **kw)

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat

========= sending heartbeat
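A log that simply stops, with no `main process now complete` line (as here, and in J47), is consistent with the machine going down rather than with cryoSPARC reporting an error. When comparing many test jobs, a small script can sort logs into these categories. A minimal sketch; `classify_job_log` and `summarize` are hypothetical helpers that key only on marker strings copied from the logs in this post.

```python
import sys


def classify_job_log(text):
    """Roughly classify a job.log using marker strings seen in these logs."""
    if "Traceback (most recent call last):" in text:
        return "python exception"
    if "malloc(): memory corruption" in text:
        return "native crash"
    if "main process now complete" in text:
        return "process exited"
    if "MAIN PID" in text:
        return "truncated"  # started, but the log stops with no exit marker
    return "not started"


def summarize(path):
    """Return (status, number of heartbeats) for one job.log file."""
    with open(path) as fh:
        text = fh.read()
    return classify_job_log(text), text.count("sending heartbeat")


if __name__ == "__main__":
    # e.g. python triage_logs.py J33/job.log J34/job.log J45/job.log
    for path in sys.argv[1:]:
        status, beats = summarize(path)
        print("{}: {} ({} heartbeats)".format(path, status, beats))
```

The heartbeat count gives a rough sense of how long each job ran before it stopped.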

Then I updated to CUDA 10.2, so the driver was now the most recent one (440.59), but cryoSPARC was still the older v2.12.

This didn’t change anything compared with CUDA 10.0: I could get Full-frame motion to complete, but not Ab-Initio. The computer rebooted during iteration 0. I think there was not even time to update job.log, since it appears to be truncated here:

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J47/job.log

================= CRYOSPARCW ======= 2020-02-14 11:11:24.754086 =========

Project P3 Job J47

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 6524

========= monitor process now waiting for main process

MAIN PID 6524

abinit.run cryosparc2_compute.jobs.jobregister

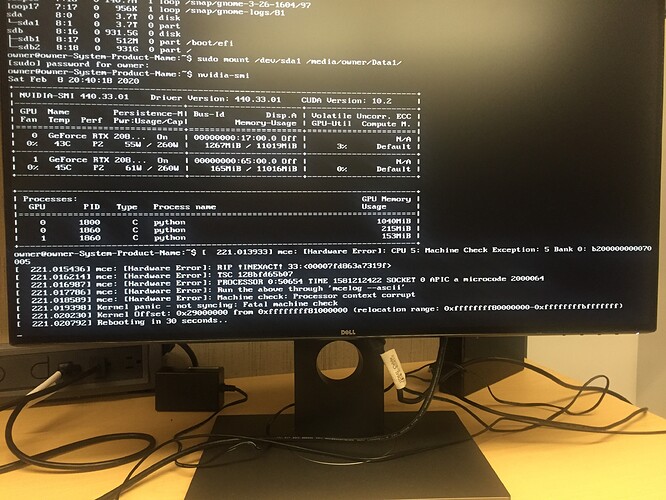

I then updated cryoSPARC to v2.13.2, so the setup is now:

- cryoSPARC 2.13.2

- CUDA 10.2

- NVIDIA driver 440.59

I can complete Full-frame motion, Patch CTF, Blob picker, and extract_micrographs (a light load of just 1 exposure). However, I’m getting some informative errors in Ab-Initio, 2D Class, and Refinement.

Ab-Initio (J49):

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J49/job.log

================= CRYOSPARCW ======= 2020-02-14 11:28:15.311179 =========

Project P3 Job J49

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 3329

========= monitor process now waiting for main process

MAIN PID 3329

abinit.run cryosparc2_compute.jobs.jobregister

/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/skcuda/cublas.py:284: UserWarning: creating CUBLAS context to get version number

warnings.warn('creating CUBLAS context to get version number')

***************************************************************

Running job J49 of type homo_abinit

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'gpus': [{u'mem': 11554717696, u'id': 0, u'name': u'GeForce RTX 2080 Ti'}, {u'mem': 11551440896, u'id': 1, u'name': u'GeForce RTX 2080 Ti'}], u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [0], u'RAM': [0], u'CPU': [0, 1]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

**custom thread exception hook caught something

**** handle exception rc

set status to failed

Traceback (most recent call last):

File "cryosparc2_compute/jobs/runcommon.py", line 1547, in run_with_except_hook

run_old(*args, **kw)

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 110, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 111, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 991, in cryosparc2_compute.engine.engine.process.work

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 101, in cryosparc2_compute.engine.engine.EngineThread.load_image_data_gpu

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1803, in cryosparc2_compute.engine.cuda_kernels.prepare_real

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 362, in cryosparc2_compute.engine.cuda_core.context_dependent_memoize.wrapper

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1707, in cryosparc2_compute.engine.cuda_kernels.get_util_kernels

File "/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/pycuda/compiler.py", line 294, in __init__

self.module = module_from_buffer(cubin)

pycuda._driver.LogicError: cuModuleLoadDataEx failed: device kernel image is invalid - error : Binary format for key='0', ident='' is not recognized

========= main process now complete.

========= monitor process now complete.

2D Class (J54):

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J54/job.log

================= CRYOSPARCW ======= 2020-02-14 11:32:04.829898 =========

Project P3 Job J54

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 3902

========= monitor process now waiting for main process

MAIN PID 3902

class2D.run cryosparc2_compute.jobs.jobregister

/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/skcuda/cublas.py:284: UserWarning: creating CUBLAS context to get version number

warnings.warn('creating CUBLAS context to get version number')

***************************************************************

Running job J54 of type class_2D

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'gpus': [{u'mem': 11554717696, u'id': 0, u'name': u'GeForce RTX 2080 Ti'}, {u'mem': 11551440896, u'id': 1, u'name': u'GeForce RTX 2080 Ti'}], u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [0], u'RAM': [0, 1, 2], u'CPU': [0, 1]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

**custom thread exception hook caught something

**** handle exception rc

set status to failed

Traceback (most recent call last):

File "cryosparc2_compute/jobs/runcommon.py", line 1547, in run_with_except_hook

run_old(*args, **kw)

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 110, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 111, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 991, in cryosparc2_compute.engine.engine.process.work

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 101, in cryosparc2_compute.engine.engine.EngineThread.load_image_data_gpu

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1803, in cryosparc2_compute.engine.cuda_kernels.prepare_real

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 362, in cryosparc2_compute.engine.cuda_core.context_dependent_memoize.wrapper

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1707, in cryosparc2_compute.engine.cuda_kernels.get_util_kernels

File "/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/pycuda/compiler.py", line 294, in __init__

self.module = module_from_buffer(cubin)

pycuda._driver.LogicError: cuModuleLoadDataEx failed: device kernel image is invalid - error : Binary format for key='0', ident='' is not recognized

========= main process now complete.

========= monitor process now complete.

Refinement (J55):

owner@owner-System-Product-Name:/run/media/owner/gw/P28$ more J55/job.log

================= CRYOSPARCW ======= 2020-02-14 11:56:22.342156 =========

Project P3 Job J55

Master owner-System-Product-Name Port 39002

===========================================================================

========= monitor process now starting main process

MAINPROCESS PID 4110

========= monitor process now waiting for main process

MAIN PID 4110

refine.run cryosparc2_compute.jobs.jobregister

/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/skcuda/cublas.py:284: UserWarning: creating CUBLAS context to get version number

warnings.warn('creating CUBLAS context to get version number')

========= sending heartbeat

***************************************************************

Running job J55 of type homo_refine

Running job on hostname %s owner-System-Product-Name

Allocated Resources : {u'lane': u'default', u'target': {u'monitor_port': None, u'lane': u'default', u'name': u'owner-System-Product-Name', u'title': u'Worker node owner-System-Product-Name', u'resource_slots': {u'GPU': [0, 1], u'RAM': [0, 1, 2, 3, 4, 5, 6, 7], u'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]}, u'hostname': u'owner-System-Product-Name', u'worker_bin_path': u'/home/owner/cryosparc2a/cryosparc2_worker/bin/cryosparcw', u'cache_path': u'/home/owner/cryosparc2a/', u'cache_quota_mb': None, u'resource_fixed': {u'SSD': True}, u'gpus': [{u'mem': 11554717696, u'id': 0, u'name': u'GeForce RTX 2080 Ti'}, {u'mem': 11551440896, u'id': 1, u'name': u'GeForce RTX 2080 Ti'}], u'cache_reserve_mb': 10000, u'type': u'node', u'ssh_str': u'owner@owner-System-Product-Name', u'desc': None}, u'license': True, u'hostname': u'owner-System-Product-Name', u'slots': {u'GPU': [0], u'RAM': [0, 1, 2], u'CPU': [0, 1, 2, 3]}, u'fixed': {u'SSD': True}, u'lane_type': u'default', u'licenses_acquired': 1}

**custom thread exception hook caught something

**** handle exception rc

set status to failed

Traceback (most recent call last):

File "cryosparc2_compute/jobs/runcommon.py", line 1547, in run_with_except_hook

run_old(*args, **kw)

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 110, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 111, in cryosparc2_compute.engine.cuda_core.GPUThread.run

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 991, in cryosparc2_compute.engine.engine.process.work

File "cryosparc2_worker/cryosparc2_compute/engine/engine.py", line 101, in cryosparc2_compute.engine.engine.EngineThread.load_image_data_gpu

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1803, in cryosparc2_compute.engine.cuda_kernels.prepare_real

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_core.py", line 362, in cryosparc2_compute.engine.cuda_core.context_dependent_memoize.wrapper

File "cryosparc2_worker/cryosparc2_compute/engine/cuda_kernels.py", line 1707, in cryosparc2_compute.engine.cuda_kernels.get_util_kernels

File "/home/owner/cryosparc2a/cryosparc2_worker/deps/anaconda/lib/python2.7/site-packages/pycuda/compiler.py", line 294, in __init__

self.module = module_from_buffer(cubin)

pycuda._driver.LogicError: cuModuleLoadDataEx failed: device kernel image is invalid - error : Binary format for key='0', ident='' is not recognized

========= main process now complete.

========= monitor process now complete.
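All three failures (J49, J54, J55) end in the same `pycuda._driver.LogicError: cuModuleLoadDataEx failed: device kernel image is invalid`, raised through the same call chain (`load_image_data_gpu` → `prepare_real` → `get_util_kernels` → `pycuda/compiler.py`). That suggests one shared problem with loading the compiled GPU kernels rather than three job-specific bugs. When many test jobs are involved, grouping logs by their final exception line makes this kind of pattern easy to spot. A minimal sketch; `last_exception` and `group_by_error` are hypothetical helpers, and the regular expression assumes the exception line has not been wrapped across lines.

```python
import re
from collections import defaultdict

# Final line of a Python traceback, e.g. "pycuda._driver.LogicError: ..."
EXCEPTION_LINE = re.compile(r"^([\w.]+(?:Error|Exception): .*)$", re.MULTILINE)


def last_exception(text):
    """Return the last exception line in a job.log, or None if there is none."""
    matches = EXCEPTION_LINE.findall(text)
    return matches[-1] if matches else None


def group_by_error(logs):
    """Map each distinct final exception line to the list of jobs that hit it."""
    groups = defaultdict(list)
    for job, text in logs.items():
        groups[last_exception(text)].append(job)
    return dict(groups)
```

Warning lines such as `RuntimeWarning: divide by zero ...` are not matched, because the pattern only accepts names ending in `Error` or `Exception` at the start of a line.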