Here is the output of the command `cryosparcm cli "get_scheduler_targets()"`. The five worker nodes are called kphgpuw01-05 (there are also two SLURM queues, 4gpu and 6gpu):

```
$ cryosparcm cli "get_scheduler_targets()"
[{'cache_path': '/scratch', 'cache_quota_mb': 4697225, 'cache_reserve_mb': 1000, 'desc': None, 'gpus': [{'id': 0, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}, {'id': 1, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}], 'hostname': 'kphgpuw01.gcm.genzentrum.lmu.de', 'lane': 'default', 'monitor_port': None, 'name': 'kphgpuw01.gcm.genzentrum.lmu.de', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'cryosparc@kphgpuw01.gcm.genzentrum.lmu.de', 'title': 'Worker node kphgpuw01.gcm.genzentrum.lmu.de', 'type': 'node', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 4566153, 'cache_reserve_mb': 1000, 'desc': None, 'gpus': [{'id': 0, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}, {'id': 1, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}], 'hostname': 'kphgpuw02.gcm.genzentrum.lmu.de', 'lane': 'default', 'monitor_port': None, 'name': 'kphgpuw02.gcm.genzentrum.lmu.de', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'cryosparc@kphgpuw02.gcm.genzentrum.lmu.de', 'title': 'Worker node kphgpuw02.gcm.genzentrum.lmu.de', 'type': 'node', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 4566153, 'cache_reserve_mb': 1000, 'desc': None, 'gpus': [{'id': 0, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}, {'id': 1, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}], 'hostname': 'kphgpuw03.gcm.genzentrum.lmu.de', 'lane': 'default', 'monitor_port': None, 'name': 'kphgpuw03.gcm.genzentrum.lmu.de', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'cryosparc@kphgpuw03.gcm.genzentrum.lmu.de', 'title': 'Worker node kphgpuw03.gcm.genzentrum.lmu.de', 'type': 'node', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 4566153, 'cache_reserve_mb': 1000, 'desc': None, 'gpus': [{'id': 0, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}, {'id': 1, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}], 'hostname': 'kphgpuw04.gcm.genzentrum.lmu.de', 'lane': 'default', 'monitor_port': None, 'name': 'kphgpuw04.gcm.genzentrum.lmu.de', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'cryosparc@kphgpuw04.gcm.genzentrum.lmu.de', 'title': 'Worker node kphgpuw04.gcm.genzentrum.lmu.de', 'type': 'node', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 3754427, 'cache_reserve_mb': 1000, 'desc': None, 'gpus': [{'id': 0, 'mem': 25434652672, 'name': 'NVIDIA RTX A5000'}, {'id': 1, 'mem': 25434652672, 'name': 'NVIDIA RTX A5000'}], 'hostname': 'kphgpuw05.gcm.genzentrum.lmu.de', 'lane': 'default', 'monitor_port': None, 'name': 'kphgpuw05.gcm.genzentrum.lmu.de', 'resource_fixed': {'SSD': True}, 'resource_slots': {'CPU': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95], 'GPU': [0, 1], 'RAM': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31]}, 'ssh_str': 'cryosparc@kphgpuw05.gcm.genzentrum.lmu.de', 'title': 'Worker node kphgpuw05.gcm.genzentrum.lmu.de', 'type': 'node', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 1850000, 'cache_reserve_mb': 10240, 'desc': None, 'hostname': '4gpu', 'lane': '4gpu', 'name': '4gpu', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash -e\n#\n# cryoSPARC script for SLURM submission with sbatch\n#\n# 24-01-2022 Dirk Kostrewa\n\n#SBATCH --partition=4gpu\n#SBATCH --job-name=cryosparc_{{ project_uid }}_{{ job_uid }}\n#SBATCH --output={{ job_log_path_abs }}\n#SBATCH --error={{ job_log_path_abs }}\n#SBATCH --nodes=1\n#SBATCH --ntasks-per-node=1\n#SBATCH --cpus-per-task={{ num_cpu }}\n#SBATCH --mem={{ (ram_gb)|int }}G\n#SBATCH --gres=gpu:{{ num_gpu }}\n#SBATCH --gres-flags=enforce-binding\n#SBATCH --mail-type=NONE\n#SBATCH --mail-user={{ cryosparc_username }}\n\navailable_devs=""\nfor devidx in $(seq 0 5);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n', 'send_cmd_tpl': '{{ command }}', 'title': 'slurm 4gpu', 'type': 'cluster', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'},
 {'cache_path': '/scratch', 'cache_quota_mb': 1850000, 'cache_reserve_mb': 10240, 'desc': None, 'hostname': '6gpu', 'lane': '6gpu', 'name': '6gpu', 'qdel_cmd_tpl': 'scancel {{ cluster_job_id }}', 'qinfo_cmd_tpl': 'sinfo', 'qstat_cmd_tpl': 'squeue -j {{ cluster_job_id }}', 'qsub_cmd_tpl': 'sbatch {{ script_path_abs }}', 'script_tpl': '#!/bin/bash -e\n#\n# cryoSPARC script for SLURM submission with sbatch\n#\n# 24-01-2022 Dirk Kostrewa\n\n#SBATCH --partition=6gpu\n#SBATCH --job-name=cryosparc_{{ project_uid }}_{{ job_uid }}\n#SBATCH --output={{ job_log_path_abs }}\n#SBATCH --error={{ job_log_path_abs }}\n#SBATCH --nodes=1\n#SBATCH --ntasks-per-node=1\n#SBATCH --cpus-per-task={{ num_cpu }}\n#SBATCH --mem={{ (ram_gb)|int }}G\n#SBATCH --gres=gpu:{{ num_gpu }}\n#SBATCH --gres-flags=enforce-binding\n#SBATCH --mail-type=NONE\n#SBATCH --mail-user={{ cryosparc_username }}\n\navailable_devs=""\nfor devidx in $(seq 0 5);\ndo\n if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then\n if [[ -z "$available_devs" ]] ; then\n available_devs=$devidx\n else\n available_devs=$available_devs,$devidx\n fi\n fi\ndone\nexport CUDA_VISIBLE_DEVICES=$available_devs\n\n{{ run_cmd }}\n', 'send_cmd_tpl': '{{ command }}', 'title': 'slurm 6gpu', 'type': 'cluster', 'worker_bin_path': '/home/strubio/cryosparc/software/cryosparc_v2/cryosparc2_worker/bin/cryosparcw'}]
```
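For anyone trying to read a target list like this one, here is a small Python sketch (not part of cryoSPARC) that parses the printed list with `ast.literal_eval` and prints one summary line per target. It assumes the output is a valid Python literal, as it appears to be; `RAW` and `summarize` are hypothetical names, and `RAW` is abbreviated to one worker node and one SLURM lane.

```python
import ast

# Hypothetical input: paste the full output of
#   cryosparcm cli "get_scheduler_targets()"
# between the triple quotes. A raw string (r"""...""") keeps the "\n"
# escapes inside 'script_tpl' intact so ast.literal_eval decodes them.
# Abbreviated here to one worker node and one SLURM lane.
RAW = r"""[{'cache_path': '/scratch', 'gpus': [{'id': 0, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}, {'id': 1, 'mem': 8370061312, 'name': 'NVIDIA GeForce RTX 2080'}], 'hostname': 'kphgpuw01.gcm.genzentrum.lmu.de', 'lane': 'default', 'name': 'kphgpuw01.gcm.genzentrum.lmu.de', 'type': 'node'},
 {'cache_path': '/scratch', 'hostname': '4gpu', 'lane': '4gpu', 'name': '4gpu', 'script_tpl': '#!/bin/bash -e\n#SBATCH --partition=4gpu\n', 'type': 'cluster'}]"""


def summarize(targets):
    """Return one human-readable line per scheduler target."""
    lines = []
    for t in targets:
        if t["type"] == "node":
            # Worker nodes list their GPUs, with memory given in bytes.
            gpus = t.get("gpus", [])
            gpu_desc = ", ".join(
                f"{g['name']} ({g['mem'] / 2**30:.1f} GiB)" for g in gpus
            )
            lines.append(
                f"{t['name']}: node, lane={t['lane']}, {len(gpus)} GPU(s): {gpu_desc}"
            )
        else:
            # Cluster lanes (e.g. SLURM) carry submission templates, not GPU lists.
            lines.append(f"{t['name']}: {t['type']}, lane={t['lane']}")
    return lines


if __name__ == "__main__":
    for line in summarize(ast.literal_eval(RAW)):
        print(line)
```

Pasted with the complete output, this should print seven lines: one for each of kphgpuw01-05 and one each for the 4gpu and 6gpu lanes.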

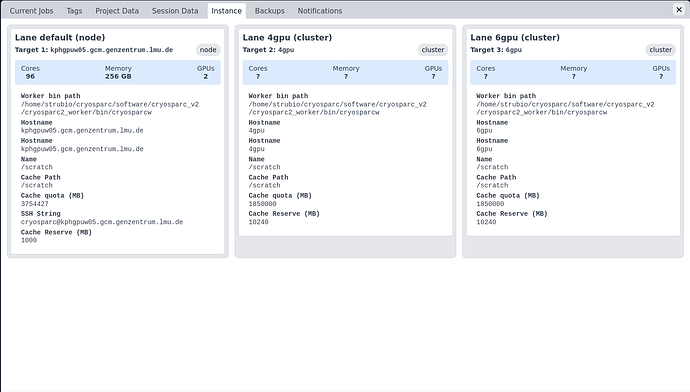

And here a screenshot of the “Instance” tab:

Best regards,

Dirk.