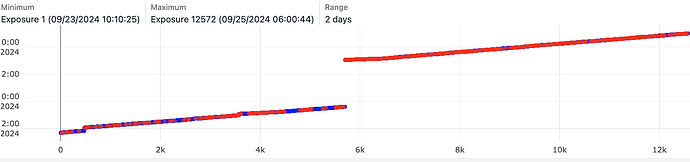

In v4.4.1, after using Live to process 5000 micrographs, I paused the session. I then added a second exposure group pointing to a different micrograph location and restarted the session. CS Live then “finds” the original 5000 micrographs and starts reprocessing them. This has happened multiple times. It should ignore the 5000 that were already processed, search only for the new ones, and continue from there. Curiously, the new exposure group 2 says “5020 micrographs found” even though that folder contains only the 20 new micrographs. If I start a new session and point it to the 20, it finds only the 20, so the workaround is to run two sessions and merge the particles. Unfortunately, I would much rather continue a single session, both for record keeping and to keep using the well-established picking strategy and 2D templates in Live 2D.

Afterwards, I can’t export the particles (or use the ones I had already exported):

AssertionError: Dataset was the wrong length! Got 1482 expected 1481, Exposure UID: 1