Hi,

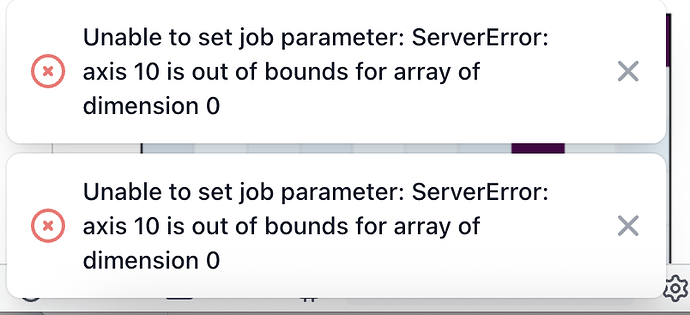

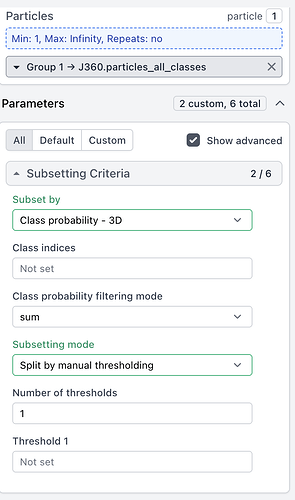

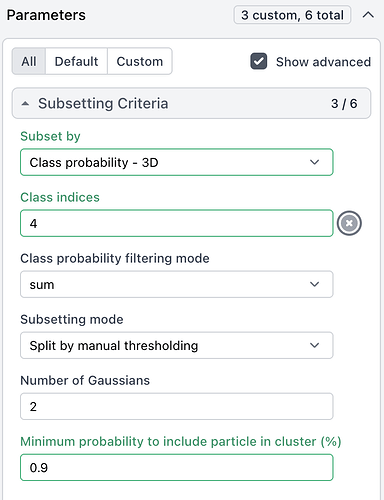

In v4.7.1, Subset Particles by Statistic gives the attached error when it is given the particles_all_classes output of a 3D classification job and Class Probability is selected with manual thresholding. The error appears no matter what I enter in the threshold or class indices fields, and the job then fails because these fields remain unset.

This happens on two different workstations, and as far as I can remember did not happen prior to v4.7.1.

Cheers

Oli

I have run into the same error after upgrading to v4.7.1.

I have encountered the same issue after updating to 4.7.1.

@olibclarke @emgreen @SamCorthaut Thanks for reporting. We reproduced the bug and will include a fix in a future release.


@olibclarke @emgreen @SamCorthaut Patch 250811 for v4.7.1 has just been released and includes a fix for this issue.

Update 2025-08-14: Patch 250811 includes the fix only for v4.7.1-cuda12, not for “standard” v4.7.1. The fix for the latter is included in Patch 250814, which is now available for CryoSPARC v4.7.1.


@wtempel it doesn’t seem to be fixed - is a new heterogeneous reconstruction required as well as a new subset particles job?

Thanks for reporting, @DanielAsarnow.

Please can you

- confirm that CryoSPARC has been restarted after patching

- post the outputs of these commands

cd /path/to/cryosparc_master/

stat patch

cat patch

ps -eo pid,ppid,start,cmd | grep -e cryosparc_ -e mongo -e node
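The patch check above can also be scripted. The following is a minimal sketch, assuming (as the `cat patch` output later in this thread suggests) that the `patch` file in the master directory contains only the installed patch ID. The function name `check_patch` and its arguments are hypothetical and are not part of CryoSPARC.

```shell
# Hypothetical helper, not a CryoSPARC command.
# Compares the ID stored in <master_dir>/patch with an expected patch ID.
# Usage: check_patch /path/to/cryosparc_master 250814
check_patch() {
    master_dir="$1"
    expected="$2"
    patch_file="$master_dir/patch"

    if [ ! -f "$patch_file" ]; then
        echo "no patch file found in $master_dir"
        return 2
    fi

    # Strip whitespace so a trailing newline does not affect the comparison.
    installed="$(tr -d '[:space:]' < "$patch_file")"

    if [ "$installed" = "$expected" ]; then
        echo "patch $installed is installed"
        return 0
    fi

    echo "patch $installed is installed, expected $expected"
    return 1
}
```

A non-zero return value only says that the file on disk does not match the expected ID. It does not show whether CryoSPARC was restarted after patching, so the `ps` output is still worth checking.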

@DanielAsarnow I’ve been able to get it to work, but it is a bit finicky in the GUI. Specifically, after changing “Subset by” to “Class Probability - 3D”, I need to reset the “Subsetting mode” back to Gaussian fitting and then select manual threshold. When it works, the “Class indices” and “Threshold” options turn green to indicate custom parameters. With this workaround the job runs properly on pre-patch Heterogeneous Refinement jobs, but I still see the error message Oli posted originally.

@wtempel I did restart after patching. I’ll send the outputs of the commands to you directly.

I was able to run a job with 3D class probability selected, but it reverted to a clustering job. I can’t get a manual threshold to “go green” or even for the input field to reappear once clustering is chosen.

Here are the patch status outputs. I did have to reinstall the current version with override and set the patch manually by copying from the worker, as described in another post.

(base) [cryosparc@tyr cryosparc2_master]$ stat patch

File: ‘patch’

Size: 7 Blocks: 8 IO Block: 4096 regular file

Device: 2dh/45d Inode: 54042900 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 2002/cryosparc) Gid: ( 1002/ da)

Context: unconfined_u:object_r:nfs_t:s0

Access: 2025-08-12 23:51:50.468060581 -0700

Modify: 2025-08-12 23:51:50.468060581 -0700

Change: 2025-08-12 23:51:50.468060581 -0700

Birth: -

(base) [cryosparc@tyr cryosparc2_master]$ cat patch

250811

(base) [cryosparc@tyr cryosparc2_master]$ ps -eo pid,ppid,start,cmd | grep -e cryosparc_ -e mongo -e node

29231 1 12:10:54 python /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/supervisord -c /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/supervisord.conf

29354 29231 12:11:00 mongod --auth --dbpath /opt/cryosparc_db --port 38001 --oplogSize 64 --replSet meteor --wiredTigerCacheSizeGB 4 --bind_ip_all

29470 29231 12:11:04 python /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn -n command_core -b 0.0.0.0:38002 cryosparc_command.command_core:start() -c /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/gunicorn.conf.py

29475 29470 12:11:06 python /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn -n command_core -b 0.0.0.0:38002 cryosparc_command.command_core:start() -c /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/gunicorn.conf.py

29512 29231 12:11:12 /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/python3.10 /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/flask --app cryosparc_command.command_vis run -h 0.0.0.0 -p 38003 --with-threads

29534 29231 12:11:13 python /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_rtp:start() -n command_rtp -b 0.0.0.0:38005 -c /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/gunicorn.conf.py

29539 29534 12:11:13 python /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/deps/anaconda/envs/cryosparc_master_env/bin/gunicorn cryosparc_command.command_rtp:start() -n command_rtp -b 0.0.0.0:38005 -c /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/gunicorn.conf.py

29589 29231 12:11:24 node dist/server/index.js

29606 29231 12:11:25 /mnt/tyr-data/cryosparc/cryosparc2/cryosparc2_master/cryosparc_app/nodejs/bin/node ./bundle/main.js

30368 28923 12:17:35 grep --color=auto -e cryosparc_ -e mongo -e node

(base) [cryosparc@tyr cryosparc2_master]$

Hi @DanielAsarnow and all, due to a mix-up in our release process, this fix was included in the patch for the special v4.7.1-cuda12 version but unintentionally omitted from the patch for normal v4.7.1. We’ve issued a new patch with the correct fix: Patch 250814 is available for CryoSPARC v4.7.1.

Installing that patch should fix this. My apologies for this!

Thanks, it’s working now!
