Dear community,

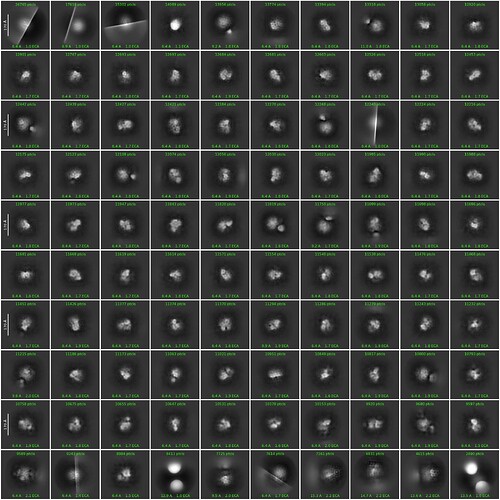

I am processing a dataset of a 13x10nm particle with C1 symmetry. Samples were prepared on graphene oxide grids. The core (200 kDa) should be stable with some variability coming from two smaller subunits (70kDa each). I am trying to improve my 2D classes. In general, I can identify the particles, but they look dotty or perhaps overfitted. The box size is 482px (0.83 A/px) scaled to 128 px. Large box helped, perhaps due to delocalized CTF, as discussed before. See example below:
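As a quick check of the numbers above, this sketch computes the physical box size, the pixel size and Nyquist limit after downsampling, and a rough CTF delocalization estimate using the defocus-only approximation R ≈ λΔz/d (spherical aberration neglected). The 300 kV voltage and 2 µm defocus are hypothetical values not stated in this post, the helper names are hypothetical, and "box ≥ particle + 2R" is a common rule of thumb rather than a hard requirement.

```python
import math

def electron_wavelength_A(voltage_V: float) -> float:
    """Relativistic electron wavelength (angstrom) for an accelerating voltage in volts."""
    return 12.2643 / math.sqrt(voltage_V * (1.0 + 0.978476e-6 * voltage_V))

def delocalization_A(defocus_A: float, resolution_A: float, voltage_V: float = 300e3) -> float:
    """Approximate CTF delocalization R ~ lambda * defocus / d (defocus term only, Cs neglected)."""
    return electron_wavelength_A(voltage_V) * defocus_A / resolution_A

def box_summary(box_px: int, apix: float, crop_px: int) -> dict:
    """Physical box size, plus pixel size and Nyquist limit after downsampling to crop_px."""
    box_A = box_px * apix
    new_apix = box_A / crop_px
    return {"box_A": box_A, "downsampled_apix": new_apix, "nyquist_A": 2.0 * new_apix}

# Numbers from this post: 482 px at 0.83 A/px, downsampled to 128 px.
summary = box_summary(482, 0.83, 128)
print({k: round(v, 2) for k, v in summary.items()})

# Hypothetical imaging conditions (NOT stated in the post): 300 kV, 2 um underfocus.
R = delocalization_A(defocus_A=20_000, resolution_A=summary["nyquist_A"])
particle_A = 130.0  # longest particle dimension (13 nm)
print(f"delocalization ~{R:.0f} A; particle + 2R ~{particle_A + 2 * R:.0f} A")
```

Under these assumptions the ~400 Å box comfortably exceeds particle + 2R at the ~6.25 Å Nyquist limit of the 128 px data; R grows in proportion to defocus and to 1/d, so higher-resolution or more defocused data would need more margin.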

After a first round of 2D classification, I picked the "best" (although dotty) classes and trained a Topaz model for particle picking. My particles are clearly identifiable on the micrographs, and Topaz effectively excluded carbon areas and ethane contamination. In the example above, 1.1M particles were classified into 100 classes with 20 online-EM iterations, 10 final full iterations, and a batch size of 500 particles. I observed that increasing to 10 full iterations improved the separation of views, but not the quality of the classes.
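To make the online-EM schedule concrete, here is a small arithmetic sketch. It assumes, as I understand cryoSPARC's "batch size per class" parameter, that each online-EM iteration draws (batch size) × (number of classes) particles; the function name is hypothetical, and "passes" is only an average if batches are sampled randomly.

```python
def oem_coverage(n_particles: int, n_classes: int, batch_per_class: int, n_oem_iters: int) -> dict:
    """Particles drawn per online-EM iteration and average passes over the dataset.

    Assumption: each O-EM iteration uses batch_per_class * n_classes particles.
    """
    per_iter = batch_per_class * n_classes
    total = per_iter * n_oem_iters
    return {"per_iteration": per_iter, "total_drawn": total, "passes": total / n_particles}

# Run described in the post: 1.1M particles, 100 classes, batch size 500, 20 O-EM iterations.
print(oem_coverage(1_100_000, 100, 500, 20))

# The 40/400 schedule tried afterwards, same dataset and class count.
print(oem_coverage(1_100_000, 100, 400, 40))
```

Under that assumption, 20 × 500 with 100 classes draws about 1.0M particles in total (roughly 0.9 passes over 1.1M particles), while 40 × 400 draws 1.6M (about 1.45 passes), before the full iterations that use every particle.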

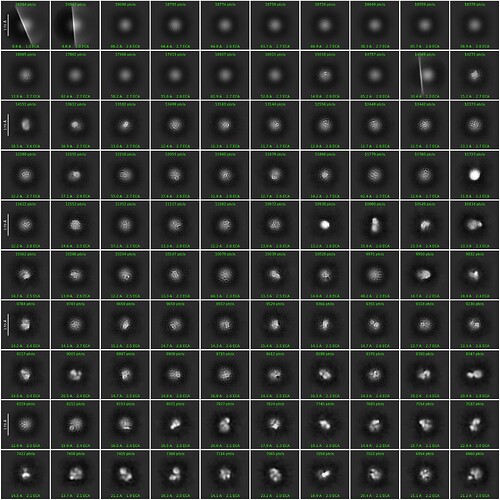

Trying the well-reported 40/400 OEM-iterations/particle batchsize with maximization over poses and shifts OFF yielded almost no convergence:
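As I understand the option, turning forced maximization off makes the classification marginalize over poses and shifts (each particle contributes to every candidate pose, weighted by its posterior probability) instead of committing to the single best pose. This toy sketch illustrates only that difference, using made-up log-likelihoods, a uniform prior over poses, and a hypothetical helper name; it is not cryoSPARC's implementation.

```python
import numpy as np

def pose_weights(log_likelihoods: np.ndarray, force_max: bool) -> np.ndarray:
    """Weight a single particle contributes to each candidate pose/shift.

    force_max=True: all weight on the best pose (hard assignment).
    force_max=False: posterior weights via a numerically stable softmax
    (marginalization), assuming a uniform prior over poses.
    """
    if force_max:
        w = np.zeros_like(log_likelihoods, dtype=float)
        w[np.argmax(log_likelihoods)] = 1.0
        return w
    shifted = log_likelihoods - np.max(log_likelihoods)  # avoid overflow in exp
    w = np.exp(shifted)
    return w / w.sum()

# Toy log-likelihoods for one particle over 4 hypothetical poses (illustrative only).
ll = np.array([-10.0, -10.5, -11.0, -20.0])
print(pose_weights(ll, force_max=True))   # one-hot on pose 0
print(pose_weights(ll, force_max=False))  # weight spread mostly over poses 0-2
```

When the likelihood landscape is flat, as can happen for small or low-SNR particles, the marginalized weights stay spread across several poses, which may be one reason the classes converge more slowly with this option off.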

I also tried varying the uncertainty factor from 2 to 8, without significant improvement over the default value. At this point, I am not sure whether I should keep increasing the iterations, adjust the annealing, or set a maximum resolution limit, so I would appreciate input from experienced members of the community. Any suggestions are welcome, and I will test them.